00:00:05.460

Hello. It was Friday afternoon around 4 PM when I saw a green build on our CI server. I knew that I had to deploy it to production before the weekend, but I also had a strong gut feeling that it was a bad idea. It wasn't merely superstition about Friday deployments; I had already broken production a couple of weeks before that and had to stay late after work to revert my changes. I knew the risk.

00:00:36.200

Still, I knew that I had to deploy it. You may wonder why I wanted to deploy anything on Friday, especially so late in the afternoon. What was so important about it? I’ll answer those questions soon, but first, let me introduce myself. I'm Maciek Rzasa, and I live in southeastern Poland. At Toptal, I am leading a significant initiative to migrate the entire technical infrastructure to a service-oriented architecture. Currently, I am part of the team responsible for service extraction.

00:01:05.460

After work, I enjoy sharing knowledge, which is why I’m here today. However, the most important details of that process are beyond the scope of this talk. So, back to business: what is the platform and architecture we’re discussing? Our platform is our main application; it handles most of our business domain and is a standard Rails application with over one million lines of code. Hundreds of engineers work on this app every day, and we have thousands of users relying on it. I must emphasize that it is a monolith, a majestic monolith.

00:01:39.899

My team is trying to extract cohesive parts from the whole domain. We aim to extract the logic related to billing and deploy it as a separate service. That's our task. So how do we tackle this? We have to keep in mind that there are hundreds of other people actively developing this app, so we need to do it safely and incrementally.

00:02:09.420

The first step we took was to extract the billing logic into a Rails engine, relying on direct method calls whenever the platform needed to access this billing logic. The next step was to add networking for external calls while still keeping the billing logic inside the same Rails process. We added Kafka for asynchronous API communications, which is interesting but unfortunately outside the scope of this talk. We also added an HTTP interface for synchronous API, which is what this talk focuses on.

00:02:37.200

To make it safe, we implemented two safeguards. The first was an HTTP feature flag, which let us switch our experimental REST API on and off without a deploy, and enable it for only a fraction of the overall traffic. The second safeguard was a fallback: whenever an HTTP call failed, be it due to a client error, a server error, or a timeout, we would fall back to a direct call. This way, users of the billing engine would still receive the data they needed even when the HTTP call failed.
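
As a rough illustration of the flag-plus-fallback idea (not the actual platform code), the two safeguards could be sketched like this. The class name `BillingGateway` and its three callables are hypothetical.

```ruby
# Hypothetical sketch: BillingGateway and its three callables are illustrative
# names, not the platform's real classes.
class BillingGateway
  def initialize(http_enabled:, http_call:, direct_call:)
    @http_enabled = http_enabled # feature flag, e.g. -> { rand < 0.1 } for ~10% of traffic
    @http_call = http_call       # experimental HTTP path
    @direct_call = direct_call   # in-process engine call
  end

  def records_for(product_id)
    # Flag off: skip the experiment entirely.
    return @direct_call.call(product_id) unless @http_enabled.call

    begin
      @http_call.call(product_id)
    rescue StandardError => e
      # Client errors, server errors, and timeouts (Timeout::Error is a
      # StandardError) all end up here and fall back to the direct call.
      warn "billing HTTP call failed (#{e.class}); falling back to direct call"
      @direct_call.call(product_id)
    end
  end
end
```

Because the flag is a callable evaluated per request, it can be flipped or sampled at runtime without a deploy, which is the property the talk relies on.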

00:03:03.600

If we consider the triad I mentioned earlier: monitor, fix, deploy, I have to say that we started with the last part, the deploy. Besides the usual CI checks that are quite complex at Toptal, we also introduced a safe environment where it was acceptable if our external call failed because we had a fallback in place, and we implemented feature flags to toggle the experiment whenever necessary.

00:03:32.700

How did the extraction process look? At the beginning, we had two models: the platform product and the billing record. The product was meant to stay on the platform, while the billing record was to be moved to billing. After the extraction, the platform side looked like this: instead of a has-many relationship in Rails, we implemented a method that called a special service accessing billing data. We also created a plain old Ruby object for the billing record, which wrapped the response from the billing service and referenced the product from the database.

00:04:04.920

All the magic, including the safeguards and feature flags, was encapsulated inside the billing service. This was our approach to ensuring isolation. We deployed it and enabled it for a small fraction of the traffic at 10 to 20 percent, but the performance was abysmal. We weren’t able to make it work, and we knew we had to optimize the service.

00:04:34.680

So, it’s a standard scenario. We had a slow API call, so we sat down and looked at New Relic to check the performance. We optimized it and moved to the next API call. However, we realized that REST wasn’t flexible enough for us. In the middle of refactoring, we made a radical decision: we would remove the already implemented REST API and switch to a GraphQL API.

00:05:01.200

We created the GraphQL layer and added another set of feature flags, which enabled us to switch between REST and GraphQL on the fly. We could enable the GraphQL API for only a small part of our traffic, and we added a fallback so that GraphQL requests would still get a response in case of errors. At this point, the instinctive response would be to start coding right away. We had the complete specification for the REST API and thought we should just take endpoints one by one and code. However, that wasn’t what we chose to do. We understood we needed to enhance our monitoring first to avoid flying blind.

00:05:49.560

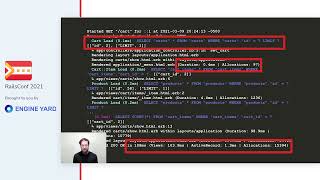

We enhanced the standard monitoring tools we already used, such as Rollbar for error tracking and New Relic for performance monitoring. We implemented custom request instrumentation so that every time we sent a request to billing, we logged the method name, the service arguments, the call stack trace, the source of the call, the response time, and any errors encountered. This allowed us to identify not only slow calls using elapsed time but also measure the volume of calls simply by counting the logs. Additionally, we identified frequently used methods, reproduced slow calls locally using their arguments, and pinpointed the actual users causing the slowness.
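
A minimal sketch of that kind of request instrumentation, assuming one JSON log line per billing call. The module name and log field names are hypothetical; only the list of logged items comes from the talk.

```ruby
require "json"
require "logger"

# Hypothetical helper: wraps a billing call and logs one JSON line per request.
module BillingInstrumentation
  def self.instrument(logger, method_name, args)
    started = Process.clock_gettime(Process::CLOCK_MONOTONIC)
    error = nil
    yield
  rescue StandardError => e
    error = e
    raise # the caller still sees the failure; we only record it
  ensure
    elapsed = Process.clock_gettime(Process::CLOCK_MONOTONIC) - started
    entry = {
      method: method_name,
      args: args,                       # lets a slow call be replayed locally
      caller: caller(1, 5),             # trimmed stack trace pointing at the call site
      elapsed_ms: (elapsed * 1000).round(1),
      error: error&.class&.name
    }
    logger.info(JSON.generate(entry))
  end
end
```

With one line per call, counting lines gives request volume and sorting by `elapsed_ms` surfaces the slow calls.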

00:06:33.060

This enhanced monitoring helped us prioritize the GraphQL migration based on performance needs. We relied on three main indicators of performance issues: 429 HTTP errors captured in Rollbar, log entries for slow responses, and performance spikes in the New Relic monitoring of the billing service.

00:07:08.940

With these two pillars, good monitoring and safe deployment, we could then jump into fixing performance. I’ll share three cases with you; think of them as a series of loosely connected episodes. The first thing we tackled was the flood of requests we observed in our logs. A single view or job in the platform was generating multiple requests to the billing service, potentially leading to thousands of requests from a single view. Imagine how slow that was and how it burdened the billing service.

00:07:52.260

So, why did this happen? Let’s look at an example involving a background job. We were selecting products in batches and executing business logic. Within this logic, we accessed billing records, which in turn called the billing query service. Thus, if we had thousands of products, we performed thousands of billing queries. This is akin to the N+1 problem, where database queries are sent in a loop, and it is exactly what we faced. Since we couldn't use the standard N+1 remedies, we needed an alternative.

00:08:54.300

What we did was move data loading one level up; instead of loading the data inside the model, we preloaded it before it was needed. For every batch processed, we ran a caching method that queried the billing query service once and indexed the records by product ID. Then, we iterated over the products, assigning each its corresponding billing records. By adopting this approach, we reduced our requests to just one for every 100 products, a significant improvement over the initial thousands of requests.
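
The batch preloading described above can be sketched roughly as follows. `Product`, `FakeBillingQuery`, and the record shape are hypothetical stand-ins; the batch size of 100 is the one mentioned in the talk.

```ruby
Product = Struct.new(:id, :billing_records)

# Hypothetical stand-in for the billing query service; counts remote calls.
class FakeBillingQuery
  attr_reader :calls

  def initialize(records)
    @records = records
    @calls = 0
  end

  def records_for(product_ids)
    @calls += 1
    @records.select { |record| product_ids.include?(record[:product_id]) }
  end
end

# One remote call per batch, then an in-memory index by product ID.
def preload_billing_records(products, billing_query)
  by_product = billing_query.records_for(products.map(&:id))
                            .group_by { |record| record[:product_id] }
  products.each { |product| product.billing_records = by_product.fetch(product.id, []) }
end

def process_in_batches(products, billing_query, batch_size: 100)
  products.each_slice(batch_size) do |batch|
    preload_billing_records(batch, billing_query)
    batch.each { |product| yield product } # business logic reads the preloaded data
  end
end
```

The business logic no longer triggers a request per product; it only reads `billing_records` that were assigned before the loop body runs.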

00:09:20.340

We learned that when sending many requests originating from the same place, it’s prudent to cache data from external services. The next episode relates closely to the first; instead of N+1 billing queries, we encountered N database queries. As an example, while fetching data from the billing query service, we iterated over billing records, which also required accessing the product from the database.

00:09:51.420

Therefore, if we had multiple billing records, each iteration caused a request to the database, which was not performant at all. In this case, we enhanced the query service itself, because the products we needed had already been loaded. We first indexed the products by ID, then called the query service, and finally iterated through the billing records, assigning each one its product through a hash lookup. This resembles the hash join known from database theory, and it was fascinating to use such a classic algorithm at the application level.
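
An application-level hash join along those lines might look like this. `ProductRow` and `BillingRow` are hypothetical structs used only for illustration.

```ruby
ProductRow = Struct.new(:id, :name)
BillingRow = Struct.new(:product_id, :amount, :product)

def attach_products(billing_rows, products)
  # Build phase: index the already-loaded products by ID.
  products_by_id = products.to_h { |product| [product.id, product] }
  # Probe phase: one hash lookup per billing row instead of one database query.
  billing_rows.each { |row| row.product = products_by_id[row.product_id] }
end
```

Building the index is linear in the number of products and each lookup is constant time on average, so the whole join stays linear instead of issuing a query per record.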

00:10:29.760

Afterward, we encountered another issue: we were fetching too much data from the billing query service because we aimed to maintain a small and flexible API. We included all fields necessary for the platform side in the REST response, resulting in almost 40 fields populated with tens or hundreds of records for requests that often required just a few. Consequently, we had to query them from the database, serialize them, and finally, process them on the platform side, making it slow and memory-intensive. Worse yet, part of the filtering occurred on the client side instead of server side.

00:11:02.340

When we implemented this in GraphQL, we observed its true potential. We leveraged the power of GraphQL to build queries that fetched only the necessary data without modifying the server-side implementation. When we defined types on the server, we then could optimize requests on the client side. This optimization allowed us to retrieve just four fields instead of 40, streamlining our data fetching process.
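
To illustrate the client-side field selection, here is a small sketch that builds a query for only the requested fields. The query name `billingRecords`, the `productIds` argument, and the field names are hypothetical; the talk does not show the real schema.

```ruby
# Builds a GraphQL query string that asks only for the listed fields.
# The schema names used here are assumptions made for illustration.
def billing_records_query(fields)
  "query($productIds: [ID!]!) { billingRecords(productIds: $productIds) { #{fields.join(' ')} } }"
end

puts billing_records_query(%w[id productId amount status])
```

The server-side types stay unchanged; each caller decides which of the available fields it pays for.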

00:11:41.940

At that point, we were progressing with the migration efficiently, and I was pleased with my work since I experienced a short feedback cycle. I would take a task, analyze how to improve performance, implement it, check locally, wait for reviews, merge it, and enable the feature flag to observe the metric improvements on New Relic and in our logs. It was gratifying to see immediate results from my efforts.

00:12:21.240

So, I developed and deployed it to production, activated the feature flag, and awaited positive metrics, expecting significant improvements. However, what transpired was quite the opposite. By the way, do you recall last summer in the northern hemisphere? The hottest day was July 28. I remember the day vividly because, as I analyzed my newly deployed changes on that day, developers started reporting issues with Sidekiq workers consuming excessive memory.

00:12:59.940

Another developer mentioned a problem in staging where the deployment failed at the last stage of our CI pipeline. I received direct messages indicating that my build had broken staging. Until that point, I had been confident it was someone else's doing. I began checking the logs, still treating it as a hypothesis rather than a fact.

00:13:36.420

As the business hours began in the US, our internal users started reporting more issues, leading me to realize that the fault might lie with my build. I soon found myself sweating and anxious on the hottest day of the summer.

00:14:14.700

From the metrics we reviewed, it was bad news. The CPU usage for background jobs skyrocketed to 20 times normal levels. After we fixed the issue, we returned to a standard use level, which was reflected in the graphs we analyzed. For memory, the spikes were about 50 times worse than expected, and our Postgres queries were taking an alarming one minute.

00:14:43.740

Fortunately, two positive developments occurred on that day. First, even before I comprehended the gravity of the situation, our infrastructure team swiftly reverted my build and restored a working version. With their assistance, I reverted my code in master to make it green again. I had to stay those extra hours and monitor production, but ultimately, I was able to resolve the issue with their help.

00:15:26.760

The second stroke of luck was that the next day, my manager approached me on Slack with a remarkably positive attitude. Usually, a manager's visit could be a sign of impending doom, yet she simply asked if I needed anything and praised my efforts. She acknowledged that breaking production was a setback but emphasized how quickly it had been rectified, encouraging me to focus on fixing the issue.

00:16:06.060

So, what went wrong? While working on the REST branch of the client, I had implemented parameter slicing that allowed only one parameter, but on the server side, the billing record method accepted numerous parameters. If none were present, it triggered a generic call from the billing record class.

00:16:50.520

I overly sanitized parameters so when a billing record was requested for a single client, no parameters were sent to the billing service. Thus, every client billing record request fetched all records from the database, including related tables. This caused millions of records to be loaded into memory, overwhelming our servers.

00:17:30.840

To rectify this, we implemented safeguards on both sides: if the parameter list was empty, we ensured that nothing would be returned. I fixed the slicing mechanism to require populated parameters before querying the billing service. The underlying mistake is quite common: forgetting a condition in a query leads to fetching every row from a table, or to accidentally deleting every row.
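
The two-sided guard can be sketched like this, assuming hash-based filters. The filter names and both method names are hypothetical.

```ruby
ALLOWED_FILTERS = %i[client_id product_id].freeze # hypothetical filter names

# Client side: refuse to call billing when slicing leaves no filters.
def fetch_billing_records(params, billing_service)
  filters = params.slice(*ALLOWED_FILTERS).compact
  return [] if filters.empty?

  billing_service.call(filters)
end

# Server side: an empty filter set returns nothing instead of every row.
def find_billing_records(all_records, filters)
  return [] if filters.empty?

  all_records.select { |record| filters.all? { |key, value| record[key] == value } }
end
```

Guarding on both sides means a mistake in the client's slicing cannot turn into an unbounded query on the server.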

00:18:17.640

Once we identified the problem, we resolved it, and the graphs reflected our fix. One New Relic graph showed the failed deployment followed by a period of no HTTP traffic, caused by halting the billing operations. After the fix, we consistently saw faster and more reliable responses without those alarming spikes in response time, thanks largely to query customization and fetching only the fields we needed.

00:18:59.460

It became evident that no matter how much I trusted our CI, reviewers, and even myself, manual testing was crucial. At this particular moment, when asked how to test this, I simply answered, 'Trust the specs.' In hindsight, I should have suggested testing methods via the console. If only I had spent 15 minutes testing it manually, I could have identified an abstraction issue.

00:19:45.660

This was not the final hurdle in tuning performance. We faced another issue: one specific field was requested about a thousand times a day, with spikes at certain times. Because it was used so often, we couldn’t preload it in the classical sense and needed a smarter way to preload and cache it.

00:20:25.800

We proposed sending this field to Kafka events, building a read model on the platform side, running migration to backfill the data, and eventually deploying this model. I was excited since it aligned with contemporary trends, but then Samuel, our co-worker with great knowledge on the billing side, suggested we might already find that data on the platform database.

00:21:10.260

Although I was initially frustrated by Samuel’s suggestion—he seemed to dismiss the knowledge I was eager to demonstrate—discussions among developers and product people led us to investigate. Upon comparing the two fields, we confirmed that we could indeed use the field from the platform database, effectively replacing our ambitious Kafka plan in just two or three days instead of weeks.

00:21:56.540

This simple solution significantly improved maintainability over the complex Kafka pipeline. Upon deployment, it operated beautifully. This situation illustrated that overly complicated solutions may not be the best options. Instead, we must leverage domain knowledge and develop solutions based on what’s feasible versus just what might seem 'cool' or cutting edge.

00:22:41.520

From time to time, we continued to encounter errors, specifically 429 HTTP errors showing up in Rollbar every Sunday evening. Oddly, we could not reproduce them locally. We established when and where they occurred: during the scheduling of weekly talent reminders. So we applied the standard solutions.

00:23:16.200

We used batching and preloaded the data. Full of anticipation, we deployed the updated reminders. On Monday morning we saw the errors again, despite our precautions. It turned out the job did not only schedule the reminders; each reminder also checked whether it was still valid, and those checks called billing and overwhelmed the service.

00:23:56.620

We had anticipated that this could happen, so we estimated the load per time zone by dividing the number of talents by 24, and concluded that this load was acceptable. What we overlooked was that 25% of our talents shared the same time zone, with another 50% living in the second most popular time zone. Consequently, at the same time on Sunday evenings, we overloaded the billing service.
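
A quick worked example shows how far off the uniform estimate can be. The total of 24,000 talents is a made-up number; only the 25% share is taken from the talk.

```ruby
# Hypothetical total; only the 25% share comes from the talk.
TOTAL_TALENTS = 24_000

# Estimate used originally: talents spread evenly over 24 time zones.
def uniform_peak(total)
  total / 24
end

# Load in a single zone that holds a given share of all talents.
def zone_peak(total, share)
  (total * share).round
end

assumed = uniform_peak(TOTAL_TALENTS)     # 1000 reminders in the busiest hour
actual = zone_peak(TOTAL_TALENTS, 0.25)   # 6000 reminders in the busiest hour
puts "assumed=#{assumed} actual=#{actual} ratio=#{actual / assumed}"
```

With a quarter of all talents in one zone, the busiest hour carries six times the load the uniform estimate predicts, regardless of the total.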

00:24:39.840

We needed another solution, because preloading was not an option with this setup: each reminder ran in its own Sidekiq job. Therefore, we added time variance to our reminders by introducing a small random jitter of up to 120 seconds to distribute the load evenly.
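
A minimal sketch of such jitter, assuming each reminder is scheduled for a base time plus a random offset. The method name and constant are hypothetical; with Sidekiq, the resulting time would typically be passed to a job's `perform_at`.

```ruby
JITTER_SECONDS = 120 # the jitter window mentioned in the talk

# Returns the base time shifted by a random whole number of seconds
# in the range 0...JITTER_SECONDS.
def jittered_time(base_time, rng: Random.new)
  base_time + rng.rand(JITTER_SECONDS)
end

# With Sidekiq this could be used as (ReminderJob is a hypothetical job class):
#   ReminderJob.perform_at(jittered_time(sunday_evening), talent_id)
```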

00:25:28.800

After developing this change, we tested locally, checked deployment schedules, and awaited Sunday. However, on Monday morning, we encountered the same error again. What was the problem this time? Our scheduling did not match the execution: instead of being spread across the 120-second jitter window, the reminders clustered into eight large spikes.

00:26:14.320

The issue was Sidekiq's low-resolution scheduling: it polled for scheduled jobs every 15 to 30 seconds and executed, in one go, all the jobs that had become due since the previous poll. This overloaded billing at each of those peaks. To solve this, we decided to upgrade to Sidekiq Enterprise, which has rate-limiting features and would give us better control over the system.
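
The clustering can be reproduced with a simplified model, assuming a fixed 15-second poll and jobs that run at the first poll after they become due. Real Sidekiq polling is randomized around an average interval, so this is an approximation and not its actual scheduler.

```ruby
POLL_INTERVAL = 15 # seconds; assumed fixed for this model

# A job due at `offset` seconds is picked up by the next poll after it becomes
# due (simplification: a job due exactly on a poll waits for the following one).
def execution_time(offset, poll_interval: POLL_INTERVAL)
  ((offset / poll_interval).floor + 1) * poll_interval
end

# Maps each execution time to the number of jobs that start at that moment.
def poll_spikes(offsets)
  offsets.map { |offset| execution_time(offset) }.tally
end

spikes = poll_spikes((0...120).to_a) # one job per second of the jitter window
puts spikes.size                     # 120 / 15 = 8 distinct execution times
```

Under these assumptions, 120 evenly jittered jobs still execute in eight bursts, which matches the eight spikes observed.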

00:27:02.580

After deploying these changes and configuring rate limits on the number of requests per second, we waited for the following Sunday. Fortunately, Monday morning brought no reports of errors. It took us four weeks to get to this point. The optimization journey made it evident how effective our safe deployment framework had been: each time the 429 errors occurred, the fallback kept serving users, so we could test solutions incrementally.
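
To show what a per-second rate limit does, here is a plain Ruby fixed-window limiter. It is not the Sidekiq Enterprise API, and the class name and injected clock are assumptions made so the sketch stays self-contained.

```ruby
# Illustrative fixed-window limiter: allows at most `limit_per_second` calls
# within each whole second reported by the clock.
class FixedWindowLimiter
  def initialize(limit_per_second, clock: -> { Process.clock_gettime(Process::CLOCK_MONOTONIC) })
    @limit = limit_per_second
    @clock = clock
    @window = nil
    @count = 0
  end

  def allow?
    current_window = @clock.call.floor
    if current_window != @window # a new second started: reset the counter
      @window = current_window
      @count = 0
    end
    return false if @count >= @limit

    @count += 1
    true
  end
end
```

A job that gets `false` would be retried or rescheduled a little later, which smooths the bursts produced by coarse polling.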

00:27:50.340

It was during that optimization work that I encountered another green build on a Friday, and I had to weigh my gut instincts about deploying it. The decision rested on the safe deployment method that had recently proven itself on the platform, and I took the plunge, deploying my changes successfully as I typically would.

00:28:37.560

Reflecting back, you can appreciate why it was so important to deploy before the weekend, ensuring no last-minute rushes on deployments before a slow weekend. Notably, while preparing for this talk, I discovered that I had actually executed two successful Friday deployments: one in the first week and another in the last week of my optimization saga. Both were around 4 PM and helped solidify my confidence.

00:29:22.500

To recap, three elements truly helped us during the optimization. The first was proper monitoring: we combined standard monitoring of errors and performance with custom request instrumentation that pinpointed issues efficiently. The second was safe deployment: strong CI checks coupled with easy deployment and reliable rollback.

00:30:09.660

Apart from that, we had robust safeguards that fell back to direct calls when remote calls failed. The feature flags let us enable and retract experimental features without a deploy, allowing constant iterative improvement.

00:30:55.740

The methods applied assisted in fixing service performance through pre-loading, caching, server-side filtering, and fetching only required data while balancing loads. When you consider the list of tactics, they might not come as a surprise; you likely already engage with them in your processes.

00:31:42.840

So, what’s the take-home here? Why did I take the time to share this? It’s simple: recognize the common patterns applied in different contexts. Learning about the trade-offs involved matters just as much.

00:32:25.620

Refactoring situations often mask performance issues before taking steps forward, especially when replicating production traffic can prove challenging during code changes. Our journey was conducted through experiments in production settings followed by removals of performance disparities. Regarding simplicity, we focused on simple yet generic API solutions and gradually integrated complexity upon necessity.

00:33:07.920

Lastly, the trade-offs learned affected our optimization efforts. For instance, while distributing load, our feature teams were worried about instantaneous responses and had to understand that speed necessitated balancing among response time and workload sharing.

00:33:48.600

The essence of the story is that we recognized known patterns in a new setting, understood the necessary trade-offs, and applied our knowledge in the context we faced. I shared our failures so that you can learn from them and avoid similar pitfalls. Please carry this lesson back to your environment and consider which factors help ensure you can deploy safely, particularly on Fridays.

00:34:28.440

Thank you.