00:00:12.349

What if you could predict the future? What if we all could? I'm here today to tell you that you can, and we all can. We have the power to predict the future. The bad news is that we're not very good at it. The good news is that even a bad prediction can tell us something about the future. Today, we will predict. Today you will learn why Bayes is BAE.

00:01:30.750

Introducing our protagonist: this is Thomas Bayes. Thomas was born in 1701, though we don't know the exact date. He was born in a town called Harford. We can't be certain about his appearance either, but we do know that Bayes was a Presbyterian minister and a statistician. His most famous work, which gave us Bayes' Rule, wasn't published until after his death. Before that, he published two other papers: one titled "Divine Benevolence," an attempt to prove that the principal end of divine providence is the happiness of creatures, and another a defense of mathematicians against objections raised by the author of "The Analyst."

I prefer my titles a little shorter, but to each their own. Why do we care about this? Well, Bayes contributed significantly to probability with the formulation of Bayes' Rule.

00:02:21.250

Let's travel back and immerse ourselves in the era: the year is 1720. Sweden and Prussia just signed the Treaty of Stockholm. Anna Maria Mozart, the mother of Wolfgang Amadeus Mozart, was also born in 1720, and statistics and probability were all the rage at the time. People could answer questions such as: given the number of winning tickets in a raffle, what is the probability that any one given ticket will be a winner? In the 1720s, "Gulliver's Travels" was published, a full 45 years before the American Revolution, and Easter Island was discovered by the Dutch.

00:03:05.440

Now, while people knew about the island, they didn't understand that there was a lot more to the statues than what appeared above the surface, much like probability. While we knew how to get the probability of a winning ticket, we didn't know how to do the inverse. Inverse probability examines this: if we draw 100 tickets and find that 10 of them are winners, what does that say about the probability of drawing a winning ticket? In this case, it's simple. Ten winners out of a total of 100 tickets yields about a 10% probability. But what if we have a smaller sample size? If we drew one ticket and it was a winner, would we assume that 100% of tickets are winners? The answer is no; we wouldn't guess that.
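
One common way to make the small-sample intuition concrete is Laplace's rule of succession, which is an assumption here and not something the talk names: start from a uniform prior over the win rate and report the posterior mean, (winners + 1) / (draws + 2). The function name `estimate_win_rate` is hypothetical. A minimal sketch:

```python
from fractions import Fraction


def estimate_win_rate(winners: int, draws: int) -> Fraction:
    """Posterior mean of the win rate under a uniform Beta(1, 1) prior.

    This is Laplace's rule of succession: (winners + 1) / (draws + 2).
    The uniform prior is an illustrative assumption, not part of the talk.
    """
    if not 0 <= winners <= draws:
        raise ValueError("winners must be between 0 and draws")
    return Fraction(winners + 1, draws + 2)


one_draw = estimate_win_rate(1, 1)       # 2/3: one winner does not mean 100%
many_draws = estimate_win_rate(10, 100)  # 11/102: close to the naive 10%
print(float(one_draw), float(many_draws))
```

With one ticket the estimate stays well short of 100%, and with 100 tickets it lands near the simple ratio, which matches the intuition in the raffle example.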

00:04:10.120

You might wonder how we can correct our guess about the winning ticket. This is where Bayes' insight comes in. He showed us that by taking related probability distributions, even if they're inaccurate on their own, we can combine them to achieve a more accurate result. This principle is useful in fields such as machine learning and artificial intelligence. I'll focus on artificial intelligence in this talk, but let me introduce myself first. My name is Richard, pronounced like 'schnapps.' I maintain Sprockets poorly, contribute to Rails and Puma, and I'm pursuing a master's in computer science at Georgia Tech.

00:05:22.720

Before this, I studied mechanical engineering for my bachelor's degree and absolutely hated it. Despite that experience, I went back to school, since Georgia Tech offers an affordable online program. I currently work full-time for a "timeshare" company, one that shares time on computer systems; some of you may know it as Heroku. Instead of overselling it, I'll share some new features you might not be aware of. One feature is Automatic Certificate Management, which provisions a Let's Encrypt SSL certificate for your app and rotates it automatically every 90 days. We also provide free SSL for all paid and Hobby dynos, which is essential given recent FCC rulings on data privacy.

00:06:26.100

While the free SSL option does leak the hostname to your ISP, we also provide an NSA-grade SSL as an add-on. For those interested in continuous integration, we have a beta feature called Heroku CI, which deploys a staging server automatically for each pull request, allowing reviewers to see a live application and verify fixes. This would typically be the moment I market my service, Code Triage, which is a great place to start contributing to open source, but instead, I want to address a critical issue my home state of Texas faces: gerrymandering.

00:08:12.760

Gerrymandering creates a political system where districts are drawn disproportionately. It's a fundamental problem that undermines the power of individual votes, and I advocate for countrywide redistricting reforms. The district I live in was ruled illegal by Texas' judicial branch, yet those in charge refuse to address the issue legislatively. If you find this alarming, I recommend looking up your state representatives and communicating your concerns. In Texas, there's an organization called 'Fair Maps Texas' that offers guides and keeps its members informed about the current legislation.

00:09:34.770

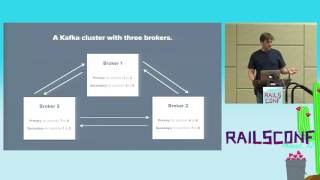

Returning to Bayes, I want to discuss how we can apply this understanding to artificial intelligence. In my graduate course at Georgia Tech, we've been using Bayes' Rule for robotics. When we send a robot to a location, we need to know both where it is and how to get it there. However, robots don't perceive the world as we do; they depend on noisy sensors. For example, if we have a simple robot that can only move left or right, we can represent its position on a graph as a normal distribution. While the robot may be at position zero, it could actually be at any point nearby, although less likely further away.

00:10:40.350

Even if we take multiple measurements, uncertainties in the world can still affect our accuracy. This is where we can employ Bayes' Rule to improve our estimate of the robot's position. If we think we're at zero, but our movement suggests we should be at ten, and our sensor says five, we have conflicting data. A good guess would be a point between the two conflicting estimates, using all the gathered information to make a more informed decision. This is the idea behind a Kalman filter, which produces a more reliable estimate than either source alone.
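
The "point between the two" can be computed by treating each source as a Gaussian and weighting it by how much we trust it. The sketch below assumes both the motion prediction (10) and the sensor reading (5) have a variance of 4; those variances and the name `fuse` are illustrative assumptions, not values from the talk.

```python
def fuse(mean_a, var_a, mean_b, var_b):
    """Combine two independent Gaussian estimates of the same quantity.

    The gain decides how far to move from estimate A toward estimate B:
    the less certain A is relative to B, the larger the move.
    """
    gain = var_a / (var_a + var_b)
    mean = mean_a + gain * (mean_b - mean_a)
    var = (1 - gain) * var_a  # the fused estimate is more certain than either input
    return mean, var


predicted = (10.0, 4.0)  # motion model says 10; variance 4 is an assumed value
measured = (5.0, 4.0)    # sensor says 5; variance 4 is an assumed value
mean, var = fuse(*predicted, *measured)
print(mean, var)  # 7.5 2.0
```

With equal trust the answer is the midpoint, 7.5, and the variance drops from 4 to 2. Shrinking the sensor variance would pull the estimate closer to 5.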

00:12:31.410

In class, we worked through an example in which the robot's true path is drawn as a green line and a series of noisy measurements is shown as red dots. The noise can be so overwhelming that it is hard to tell which direction the robot is moving. However, when we correctly implement a Kalman filter, our estimates track the actual movement far more closely, allowing us to follow the robot's path reliably.
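
A rough sketch of that kind of experiment, assuming a robot that moves one unit per step along a line and a sensor with Gaussian noise. This is not the course's code; the function names, noise levels, and seed are all made up for illustration.

```python
import math
import random


def kalman_1d(measurements, velocity, motion_var, sensor_var,
              start_mean=0.0, start_var=1000.0):
    """Track a 1D position from noisy readings; returns one estimate per reading."""
    mean, var = start_mean, start_var
    estimates = []
    for reading in measurements:
        # Predict: apply the commanded motion and grow the uncertainty.
        mean += velocity
        var += motion_var
        # Update: blend in the reading, weighted by relative confidence.
        gain = var / (var + sensor_var)
        mean += gain * (reading - mean)
        var *= (1 - gain)
        estimates.append(mean)
    return estimates


def rmse(values, truth):
    """Root-mean-square error between two equal-length sequences."""
    return math.sqrt(sum((v - t) ** 2 for v, t in zip(values, truth)) / len(truth))


rng = random.Random(0)                                # fixed seed so runs are repeatable
truth = [float(step) for step in range(1, 51)]        # the "green line"
readings = [x + rng.gauss(0.0, 5.0) for x in truth]   # the noisy "red dots"
estimates = kalman_1d(readings, velocity=1.0, motion_var=0.01, sensor_var=25.0)
print(f"raw RMSE: {rmse(readings, truth):.2f}  filtered RMSE: {rmse(estimates, truth):.2f}")
```

Comparing the two printed errors shows how much closer the filtered estimates sit to the true path than the raw readings do.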

00:13:38.390

Now, let's switch gears a bit. Who here likes money? Some people may not have raised their hands, but I believe we can agree that we all appreciate the value of a rare coin. Take, for instance, the 1913 Liberty Head nickel. Only five were ever struck, and without the US Mint's approval, making it incredibly rare and worth around $3.7 million. Suppose a bag holds two coins: a fair coin, which is the rare nickel, and a trick coin. You draw one without looking, flip it, and it shows heads. You might think you have a chance at the million-dollar coin. To determine the likelihood of this, we can apply Bayes' Rule.

00:15:12.140

First, we ask for the probability of landing on heads. There are three total possibilities for getting heads: one from the fair coin and two from the trick coin, which shows heads on both sides. The overall probability of heads is built from the chances provided by each individual coin. While every one of those outcomes shows heads, it's essential to discern which coin each result comes from. Only one of the three equally likely heads outcomes belongs to the fair coin, so once we have seen heads, the probability of holding the rare coin falls from one in two to one in three, or about 33%.
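
The same calculation can be written out with Bayes' Rule directly. The sketch assumes each coin is equally likely to be drawn and that the trick coin always lands heads, which is what the one-in-three answer implies; the variable names are illustrative.

```python
from fractions import Fraction

# Priors: each coin is equally likely to come out of the bag (assumption).
p_fair = Fraction(1, 2)
p_trick = Fraction(1, 2)

# Likelihoods: chance of heads for each coin.
p_heads_given_fair = Fraction(1, 2)
p_heads_given_trick = Fraction(1)  # assumed two-headed trick coin

# Total probability of seeing heads, summed over both coins.
p_heads = p_fair * p_heads_given_fair + p_trick * p_heads_given_trick

# Bayes' Rule: P(fair | heads) = P(heads | fair) * P(fair) / P(heads)
p_fair_given_heads = p_heads_given_fair * p_fair / p_heads

print(p_heads, p_fair_given_heads)  # 3/4 1/3
```

Seeing heads moves the chance of holding the rare coin from 1/2 down to 1/3, because heads is twice as likely to have come from the trick coin.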

00:17:05.289

This illustrates Bayes' Rule effectively. If we understand related probabilities, we can make predictions about unknowns and update them as evidence arrives. To explore this further, we can use a visual aid known as a probability tree, in which each branch represents an outcome. The first split is the choice of one of the two coins; the next split is the result of flipping that coin. We then trim the branches that the evidence rules out and calculate probabilities from the branches that remain.

00:19:48.040

The tree approach also introduces total probability. When an event can happen by several routes, its total probability is the sum over the routes: for each coin, the chance of picking that coin times the chance that it lands heads. That total is the denominator of Bayes' Theorem, which is how the whole equation ties together. But it can get more complicated. If we flip the coin multiple times and it lands on heads each time, we update again after every flip, and each heads makes the trick coin more likely. Even so, no number of heads takes us to absolute certainty.
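
Repeated flips can be handled by feeding each posterior back in as the next prior. This sketch assumes the same setup as the coin example (a fair coin and a two-headed trick coin, drawn with equal chance); `update_on_heads` is a hypothetical helper name.

```python
from fractions import Fraction


def update_on_heads(p_fair):
    """One Bayes update of P(fair coin) after observing another heads."""
    heads_if_fair = Fraction(1, 2)
    heads_if_trick = Fraction(1)  # assumption: the trick coin is two-headed
    # Total probability of heads given the current belief.
    p_heads = p_fair * heads_if_fair + (1 - p_fair) * heads_if_trick
    return p_fair * heads_if_fair / p_heads


belief = Fraction(1, 2)  # before any flips, either coin is equally likely
history = []
for _ in range(4):
    belief = update_on_heads(belief)
    history.append(belief)

print([str(b) for b in history])  # ['1/3', '1/5', '1/9', '1/17']
```

Each heads shrinks the chance of holding the fair coin, but the value never reaches zero: the belief keeps getting smaller without becoming a certainty.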

00:21:50.460

As noted, any claim of absolute certainty can mislead. Once we declare that something has a zero percent chance, Bayes' Theorem stops working for us: a prior of zero stays at zero no matter how much evidence arrives. Staying vigilant and skeptical remains vital. Natural occurrences, like predicting that the sun will rise based on historical data, emphasize the same point: past observations make an outcome very likely, never guaranteed. However good our initial intuitions or experiences are, new evidence keeps refining our theories.
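
A small sketch shows why a zero percent prior is a dead end. The likelihood values (0.99 and 0.01) and the function name `bayes_update` are made up for illustration.

```python
def bayes_update(prior, likelihood_if_true, likelihood_if_false):
    """Return P(hypothesis | evidence) for a yes/no hypothesis."""
    evidence = prior * likelihood_if_true + (1 - prior) * likelihood_if_false
    if evidence == 0:
        raise ValueError("the observed evidence is impossible under both hypotheses")
    return prior * likelihood_if_true / evidence


stubborn = 0.0      # "this can never happen"
open_minded = 0.01  # "this is very unlikely"

# Ten observations in a row that strongly favor the hypothesis.
for _ in range(10):
    stubborn = bayes_update(stubborn, 0.99, 0.01)
    open_minded = bayes_update(open_minded, 0.99, 0.01)

print(stubborn, open_minded)
```

The stubborn belief is still exactly zero after all ten observations, while the skeptical but open belief ends up close to one.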

00:23:54.727

Before continuing, I want to look back at Bayes' Rule. A critical aspect is prediction: if you don't commit to a prediction, you can't learn anything from being right or wrong. Scientists work from hypotheses or assumptions and adjust their beliefs based on new data. Skepticism fosters better judgment and prevents complacency. Keep an open, observational mindset, because not all outcomes match historical expectations, whether in daily events or in statistical analyses.

00:25:56.999

In closing, I highly recommend a book titled "Algorithms to Live By"; every programmer should read it. It includes a chapter dedicated to Bayes' Rule, offering insights without delving deep into complex mathematics. Another vital read is "The Signal and the Noise" by Nate Silver, which discusses probability and forecasting, including election forecasts. If you want to delve deeper into Kalman filters and their applications, I recommend exploring resources by Simon D. Levy.

00:27:28.200

Finally, one more thing: BAE is not short for 'baby.' In African American Vernacular English, it stands for the phrase 'before anyone else.' So, while figures like Copernicus and Laplace built on Bayes’ theories, what those scholars developed stemmed from the concepts Bayes established. The truth is, before there was Copernicus, before there was Laplace, there was Bayes. Thank you very much for your attention.