00:00:11.740

All right everyone, I think we're going to get started, and I'll let some people trickle in. Welcome, and thank you for coming. My talk today is called 'Beyond Validates Presence Of.' I'm going to be talking about how you can ensure the validity of your data in a distributed system.

00:00:19.630

In a distributed system, you need to support a variety of different views of your data that are all, in theory, valid for a period of time. My name is Amy Unger. I started programming as a librarian, and library records can be these arcane, complex, and painful records. However, the good thing about them is that they don't change very often. If a book changes its title, it's usually because it's been reissued, and a new record comes in.

00:00:32.180

We don't deal with much change or alteration within the book data; obviously, users are a different matter. So, when I was first developing Rails applications, I found Active Record validations amazing. Every time I would implement a new model or start working on a new application, I would read through the Rails guide for Active Record validations and find every single one that I could add. It was a beautiful thing because I thought I could ensure that my data was always going to be valid.

00:00:58.340

Well, fast forward through a good number of consulting projects, some work at Getty Images, and now I work at Heroku, and unfortunately, this story is not quite as simple. I wanted to share today some lessons I've learned over the years. First, kind of speaking to my younger self: why would I let my data go wrong? That would be how I, five years ago, would have reacted to this question: what did you do to your data and why? Next is prevention. Given that you may recognize that your data may look different at different times, how do you prevent your data from going into a bad state if you don't have only one good state?

00:01:48.080

Then finally, detection: if your data is going to become inconsistent, you'd better know when that's happening. If you were here for Betsy Haibel's talk just before me, a lot of this is going to sound familiar; this will just be a little more focused on the distributed side of things. So, first, let's talk about causes and how your data can go wrong despite your best intentions. I like to start by reframing that and asking, why would you expect your data to be correct? Five years ago, I'd have said, 'But look, I have all these tools for correctness! I have database constraints and validations; they're going to keep me safe.'

00:02:35.120

Let's take a quick look at what those might be. For database constraints and indexes, we're looking at ensuring that something is not null. For instance, any product we sell should have a billing record, as it's kind of important for our business to keep track of things we sell. The statement would help keep us safe in the sense that before we can actually save a record of something sold to you, we need to build up a billing record. The corresponding Active Record validation would relate to the title of this talk: where a product validates the presence of a billing record.

00:03:22.250

However, after submitting this talk, I realized the syntax is a little different. Why would this go wrong? Well, first, let's take a product requirement: it's taking too long for us to sell things. There's so much work going on when a user clicks a button, and we want to cut that time down. Since billing only needs to be right once a month, at the beginning of the month, we have a little bit of leeway. So, why not move that into a background job? That means we have to comment out this validates presence of billing record line, because we want our products controller to have this particular create method.

00:03:52.790

In that create method, we take whatever the user gave us and say, 'All right, we now have a product we have sold, and we're going to enqueue this job to create the corresponding billing record.' Then we respond immediately with that product. That's great; they can start using their app, whether it's Redis or Postgres, and all that's left for us is to create that billing record a few milliseconds later. Unfortunately, what happens if that billing creator job fails? You're left with a product that is not valid.
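A minimal sketch of that failure, in plain Ruby rather than Rails so it runs on its own. `Product`, `BillingCreatorJob`, `QUEUE`, `create_product`, and `drain_queue` are hypothetical stand-ins for illustration, not the code from the slides.

```ruby
# Plain-Ruby stand-ins for the model, the job, and the queue (all hypothetical).
Product = Struct.new(:name, :billing_record) do
  # The rule the commented-out validation used to enforce.
  def billed?
    !billing_record.nil?
  end
end

class BillingCreatorJob
  def initialize(product, fail_with: nil)
    @product = product
    @fail_with = fail_with
  end

  def perform
    raise @fail_with if @fail_with # simulate the job crashing
    @product.billing_record = { product: @product.name, created_at: Time.now }
  end
end

QUEUE = []

# Controller-style create: record the sale, enqueue billing, respond right away.
def create_product(name, fail_with: nil)
  product = Product.new(name, nil)
  QUEUE << BillingCreatorJob.new(product, fail_with: fail_with)
  product
end

# Worker loop: run every queued job and collect the ones that raised.
def drain_queue
  failed = []
  until QUEUE.empty?
    job = QUEUE.shift
    begin
      job.perform
    rescue StandardError => e
      failed << [job, e]
    end
  end
  failed
end

ok = create_product("postgres")
broken = create_product("redis", fail_with: RuntimeError.new("worker died"))
failures = drain_queue
puts "postgres billed? #{ok.billed?}, redis billed? #{broken.billed?}, failed jobs: #{failures.size}"
```

The user already got a successful response for both products, so nothing in the request path can tell you that one of them never got its billing record.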

00:04:40.159

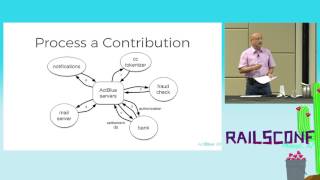

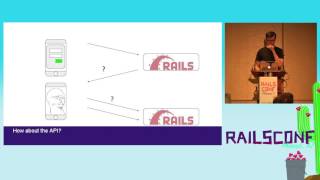

Then we have another fun complication. Your engineering team might think it kind of sucks that we're doing all of our billing and invoicing in a legacy Rails app; that doesn't seem like the right engineering decision. So, let's pull out all of our billing and move it into something that can be scaled at a far better pace. Now, our billing creator job just gets a little more complicated, because when it is initialized, it finds the product, builds up the state, and then calls out to this new billing service.

00:05:54.370

We now have two modes of failure: your job could simply fail, or the job could run fine while the billing service fails. That leads us to a discussion of all the ways your network can fail you. Some of these are easier than others. For instance, if you can't connect, you can probably try again. No harm, no foul; just give it a shot. But what happens if the request partially succeeds on the downstream service and doesn't fully complete? You might think, 'Gosh, I'll retry!' But is the retry going to immediately error because the record is in a terrible state? Or will it go ahead and create a new one?

00:07:03.860

There's another case where the service completes the work, but the network cuts out in such a way that you never see the response telling you it's done. Do you retry that? Do you risk billing for something that was not successfully completed? Finally, you need to know which systems will roll back if they see a client-side timeout. These are all things we're left to deal with once we design higher-performance distributed systems. We need to accept that your data won't always be in the one state you expect; instead, there will be a variety of different ways it can be correct.

00:07:40.319

It is acceptable for a product to not have a billing record, as that means the billing record is in the process of being created. We want to express the fact that eventually, we expect something to coalesce into one or possibly multiple valid states for most of its lifespan. However, that’s not always the case. Sometimes, users create products, realize they've made a mistake, and immediately cancel it. So, you might not even see this product coalesce into something you’d think would be valid.

00:08:54.270

Next, let's discuss prevention. It's mostly about taking care to handle those errors. We can't make sure that everything is always in the perfect state, so we need to handle errors in a more sophisticated way. The first thing: if you can't connect, you might as well just try again. However, this leads us to a few issues. You want to ensure the downstream service supports idempotent actions. If it does, just keep retrying until it succeeds; an extra retry after a success does no harm.
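Here is a rough sketch of why idempotency makes blind retries safe. It assumes the downstream service accepts a client-supplied idempotency key; `FakeBillingService`, `ConnectionFailed`, and `create_with_retries` are hypothetical names, and the fake simulates the nasty case where the work is done but the response is lost.

```ruby
require "securerandom"

class ConnectionFailed < StandardError; end

# Hypothetical downstream service that honors an idempotency key:
# repeating a request with the same key returns the original record.
class FakeBillingService
  attr_reader :records

  def initialize(lost_responses: 0)
    @records = {}
    @lost_responses = lost_responses
  end

  def create_billing_record(key:, product_id:)
    record = (@records[key] ||= { product_id: product_id })
    if @lost_responses > 0
      @lost_responses -= 1
      raise ConnectionFailed, "response lost after the work was done"
    end
    record
  end
end

# Retry on connection failure, reusing ONE key so repeats cannot double-bill.
def create_with_retries(service, product_id, max_attempts: 5)
  key = SecureRandom.uuid
  attempts = 0
  begin
    attempts += 1
    service.create_billing_record(key: key, product_id: product_id)
  rescue ConnectionFailed
    retry if attempts < max_attempts
    raise
  end
end

service = FakeBillingService.new(lost_responses: 2)
create_with_retries(service, 42)
puts "billing records after three attempts: #{service.records.size}"
```

The key is generated once, outside the retry loop. Generating a fresh key per attempt would quietly turn every retry into a new billing record.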

00:09:57.991

Next is a strategy for when your service mostly does background jobs: you can implement some sort of sophisticated locking system. I haven't done that myself; it seems like more work than I want to do, but if you're only doing jobs within one system, that might be the right solution. If you don't trust your downstream service to be idempotent, you need to choose between retrying your creates or your deletes, but please do not retry both! Otherwise, you need far more confidence than I have that your queuing system will always deliver things in order.

00:11:10.070

You might think you don't need to make a choice here, because if you put requests on a queue, you get first-in, first-out ordering quite well. But what if a call to the downstream service times out? You want to retry multiple times because you might just be hitting a blip of failure. If your delete calls take longer to fail, that can leave you in a situation where someone who just wanted to cancel something is getting billed ad infinitum.

00:11:57.920

If you're going to do many retries, consider implementing exponential back-off and circuit breakers. Don't make things worse for your downstream service if it's already struggling. Rolling back is an excellent option if only your code has seen the results of this action, that is, if your codebase and your local database are the only things that know this user wants this product. But what about external systems? You need to begin considering your job queue as an external system too.
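The back-off and circuit-breaker advice above can be sketched in a few lines. This is an illustration under assumptions, not a production implementation: `backoff_delay`, `CircuitBreaker`, and `CircuitOpen` are hypothetical names, and the thresholds are arbitrary.

```ruby
# Delay in seconds before the nth retry (1-based): base * 2^(n-1), capped at max.
def backoff_delay(attempt, base: 0.5, max: 30.0)
  [base * (2**(attempt - 1)), max].min
end

class CircuitOpen < StandardError; end

# Minimal circuit breaker: after `threshold` consecutive failures it rejects
# calls without touching the downstream service until `cooldown` seconds pass.
class CircuitBreaker
  def initialize(threshold: 3, cooldown: 60, clock: -> { Time.now.to_f })
    @threshold = threshold
    @cooldown = cooldown
    @clock = clock
    @failures = 0
    @opened_at = nil
  end

  def call
    if @opened_at && @clock.call - @opened_at < @cooldown
      raise CircuitOpen, "downstream is struggling, not calling it"
    end
    # Either closed, or the cooldown is over and this is a trial call.
    result = yield
    @failures = 0
    @opened_at = nil
    result
  rescue CircuitOpen
    raise # a rejected call is not a new downstream failure
  rescue StandardError
    @failures += 1
    @opened_at = @clock.call if @failures >= @threshold
    raise
  end
end

puts (1..5).map { |n| backoff_delay(n) }.inspect
```

The clock is injected only so the breaker can be exercised without sleeping. In a job system you would more likely hand the computed delay to the queue's own "retry in N seconds" mechanism than sleep in the worker.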

00:12:46.330

Once you say, 'Hey, go create this billing record,' you can't delete the product even if the result is that the billing record is going to be in the same local database. You can't just have that record magically disappear! So, rolling forward means you have multiple options. You can enqueue a deletion job right after your creation job or create something and then delete it. You can also have cleanup scripts that run to detect corrupted states and clean them up quickly.

00:13:26.450

Rolling forward is about accepting that something has gone wrong, but that something existed for just a short period of time, and we can't make that go away because someone out there knows about it. With that in mind, let’s talk about transactions. Transactions will allow you to create views of your database that are local to you.

00:14:12.250

For instance, if I want to create an app to register five users and call two downstream services, I can wrap that all in a transaction. If any exceptions are thrown and bubble up out of that transaction, all those records go away. You'll still need to worry about your downstream services, but it’s a handy tool for making local things disappear.

00:15:10.190

That said, there are a couple of things to consider. First, understand what strategy you're using. This will usually be the ORM default. In Betsy's earlier talk, you saw ActiveRecord::Base.transaction; it chooses one of four transaction strategies. If you're using Postgres, read its documentation, because it chooses a sophisticated default.

00:15:40.050

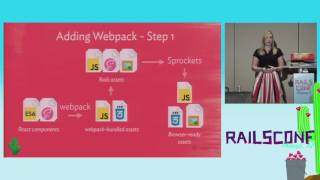

Please understand which transaction strategy you’re using because it can impact what things outside of the transaction can see and the behavior that follows. The next suggestion is to add your job queue to your database. If this causes you horror because of the load you foresee putting on your database, you're right!

00:16:25.610

This might feel daunting, but if it doesn’t terrify you, you should definitely do it. This way, you don't have to worry about pulling deletes off the queue; they just disappear! Instead of having that race condition with a delete possibly outrunning a create, it just never happens.
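To make the idea concrete, here is a toy sketch of a job queue that lives in the same transactional store as the data. `TinyDB` and `sell_product` are hypothetical; the in-memory snapshot-and-restore only imitates what a real database transaction would give you.

```ruby
# Hypothetical in-memory "database" with two tables and all-or-nothing
# transactions, standing in for Postgres plus a database-backed job queue.
class TinyDB
  attr_reader :products, :jobs

  def initialize
    @products = []
    @jobs = []
  end

  # Run the block; if it raises, restore both tables to their prior state.
  def transaction
    snapshot = [@products.dup, @jobs.dup]
    yield
  rescue StandardError
    @products, @jobs = snapshot
    raise
  end
end

def sell_product(db, name, payment_ok: true)
  db.transaction do
    db.products << { name: name }
    db.jobs << { type: :create_billing_record, product: name }
    raise "payment declined" unless payment_ok # any later failure in the transaction
  end
end

db = TinyDB.new
sell_product(db, "redis")
begin
  sell_product(db, "postgres", payment_ok: false)
rescue RuntimeError => e
  puts "rolled back: #{e.message}"
end
puts "products: #{db.products.size}, jobs: #{db.jobs.size}"
```

Because the job row is written in the same transaction as the product row, a rollback removes both. With an external queue, the enqueue would already have escaped and you would need a compensating delete.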

00:17:13.130

You can write code without having to think about those errors; if there is an issue, it's as if that job never came in. The next suggestion is to add timestamps. Add a timestamp for every critical service call; for a product you're selling, consider adding billing start and billing end times.

00:17:51.570

Set these timestamp fields within the same transaction as your call to the downstream service. If the downstream service fails, it raises an error that you choose not to catch, which will exit the transaction. This results in the timestamp not being set. Having timestamps allows for easier debugging across distributed services.
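A small sketch of that pattern, again in plain Ruby. `Subscription`, `with_rollback`, `start_billing`, and `DownstreamError` are hypothetical names, and `with_rollback` is only a stand-in for a real database transaction on one record.

```ruby
class DownstreamError < StandardError; end

Subscription = Struct.new(:product, :billing_started_at, :billing_ended_at)

# Hypothetical stand-in for a database transaction on one record:
# on any error, put the record's fields back the way they were.
def with_rollback(record)
  before = record.to_a
  yield
rescue StandardError
  before.each_with_index { |value, i| record[i] = value }
  raise
end

# Set the timestamp and call the downstream service in the same "transaction".
# The downstream error is deliberately not rescued here.
def start_billing(subscription, billing_api, now: Time.now)
  with_rollback(subscription) do
    subscription.billing_started_at = now
    billing_api.call(subscription.product)
  end
  subscription
end

healthy = ->(_product) { :ok }
failing = ->(_product) { raise DownstreamError, "billing service is down" }

good = start_billing(Subscription.new("redis"), healthy)
bad = Subscription.new("postgres")
begin
  start_billing(bad, failing)
rescue DownstreamError
  # the timestamp was rolled back along with everything else
end
puts "redis started: #{!good.billing_started_at.nil?}, postgres started: #{!bad.billing_started_at.nil?}"
```

A non-nil `billing_started_at` then means the downstream call returned without raising, which is exactly what you want to be able to query for later.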

00:18:49.750

Another important aspect is code organization. I advocate for writing your failure code in the same place as your success code. Say you have a downstream service: you're creating a new employee in an app like Slack. In your employee creation code, please make sure the code that unwinds that action is only a few lines away.

00:19:47.440

No matter where in the process the employee creation fails, the code path has to return through that unwind code. This approach helps developers think about failure paths while they're working on success paths. Let’s illustrate this with a scenario where S3 is down: your employee creator class should have a clear unwinding path. You should call the underlying HR API, pull the user from Slack, then cancel the transaction.

00:20:51.329

However, if the current development resembles the code you usually write, imagine the added struggle of understanding and repairing failures, particularly if you haven't interacted with the API in a while. This leads me to suggest the Saga pattern, which lets you create an orchestrator that manages the various paths things can take, keeping your rollback or roll-forward code in sync with your creation code.
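One way to picture a saga orchestrator, as a sketch only: each step declares its action and its compensation side by side, and the orchestrator unwinds completed steps in reverse when something fails. The `Saga` class and the HR, Slack, and S3 steps below are hypothetical; real calls are replaced with log entries.

```ruby
# Minimal saga orchestrator: each step pairs an action with the compensation
# that undoes it. On failure, completed steps are compensated in reverse order.
class Saga
  Step = Struct.new(:name, :action, :compensation)

  def initialize
    @steps = []
  end

  def step(name, action:, compensation:)
    @steps << Step.new(name, action, compensation)
    self
  end

  def run(context)
    completed = []
    @steps.each do |s|
      s.action.call(context)
      completed << s
    end
    true
  rescue StandardError => e
    completed.reverse_each { |s| s.compensation.call(context) }
    context[:error] = e.message
    false
  end
end

# Employee creation, with the unwind code a line away from the success code.
def employee_saga(log, s3_up: true)
  saga = Saga.new
  saga.step(:hr_record,
            action: ->(ctx) { log << "hr:create #{ctx[:name]}" },
            compensation: ->(ctx) { log << "hr:delete #{ctx[:name]}" })
  saga.step(:slack_account,
            action: ->(ctx) { log << "slack:invite #{ctx[:name]}" },
            compensation: ->(ctx) { log << "slack:remove #{ctx[:name]}" })
  saga.step(:s3_folder,
            action: ->(ctx) {
              raise "S3 is down" unless s3_up
              log << "s3:create #{ctx[:name]}"
            },
            compensation: ->(ctx) { log << "s3:delete #{ctx[:name]}" })
  saga
end

log = []
employee_saga(log, s3_up: false).run({ name: "amy" })
puts log.inspect
```

This sketch assumes the compensations themselves succeed. In practice they can fail too, which is where retries and cleanup scripts come back in.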

00:21:43.279

Now, how do we detect when things have gone wrong? The first tool is SQL with timestamps. Since we've added fields such as deleted_at, created_at, and billing_status, we gain the ability to reconcile across a distributed system. We may never reach a perfect state, but we can check small aspects of the system with SQL queries to keep it coherent.

00:22:47.030

For instance, let's say we want to ensure we aren't continuing to bill for something that has been deleted. This query looks for all active billing records connected to products that are inactive and were deleted at least 15 minutes ago. That 15-minute buffer gives the system time to reach eventual consistency before we flag anything.
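The query on the slide is not in the transcript, so the following is a guess at its shape. The schema (products with deleted_at, billing_records with product_id and ended_at) and the names `STALE_BILLING_SQL`, `GRACE_PERIOD`, and `stale_billing_ids` are assumptions; the SQL is Postgres-flavored and is kept as a string, with the same check written over in-memory rows so the logic can be run without a database.

```ruby
# Assumed schema: products(id, deleted_at), billing_records(id, product_id, ended_at).
STALE_BILLING_SQL = <<~SQL
  SELECT billing_records.id
  FROM billing_records
  JOIN products ON products.id = billing_records.product_id
  WHERE billing_records.ended_at IS NULL
    AND products.deleted_at IS NOT NULL
    AND products.deleted_at < NOW() - INTERVAL '15 minutes'
SQL

GRACE_PERIOD = 15 * 60 # seconds allowed for the systems to converge

# The same check over in-memory rows (arrays of hashes).
def stale_billing_ids(products, billing_records, now: Time.now)
  deleted_at = products.to_h { |p| [p[:id], p[:deleted_at]] }
  stale = billing_records.select do |b|
    gone = deleted_at[b[:product_id]]
    b[:ended_at].nil? && gone && gone < now - GRACE_PERIOD
  end
  stale.map { |b| b[:id] }
end

now = Time.now
products = [
  { id: 1, deleted_at: now - 20 * 60 }, # deleted long enough ago to matter
  { id: 2, deleted_at: now - 5 * 60 },  # still inside the grace period
  { id: 3, deleted_at: nil }            # live product
]
billing = [
  { id: 10, product_id: 1, ended_at: nil },
  { id: 11, product_id: 2, ended_at: nil },
  { id: 12, product_id: 3, ended_at: nil }
]
puts stale_billing_ids(products, billing, now: now).inspect
```

Any id this returns is a billing record that should have been closed by now, which makes it a natural thing to alert on.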

00:23:46.000

Thus, timestamps in SQL have significant advantages. They keep the logic clear and concise, which makes it easier to maintain than more complex code in Ruby. Setting the queries to run automatically makes it easy to monitor data flow without constant rework. If records appear suspicious, you should have contingency plans.

00:24:42.150

As a developer on-call, I have little desire to become an expert in our credit policy, so we need documentation for remediation plans to avoid interrupting workflow with quarterly reviews. Some challenges arise with non-SQL stores. This encompasses all forms of storage. Every large organization typically has a centralized reporting database.

00:25:57.630

People often use ETL scripts for data migration, simplifying the transformation process into a usable format. The key is understanding the context and requirements of your data. If your business model ensures you can maintain a solid integrity of vast amounts of distributed systems, you can either send queries through ray caching or your data store.

00:26:55.210

One might implement snapshot strategies for maintaining an overview of configurations, marking time-based conditions to signify correct or erroneous configurations. This process also involves determining if your actions have achieved the desired state and tracking back potential issues.

00:27:58.670

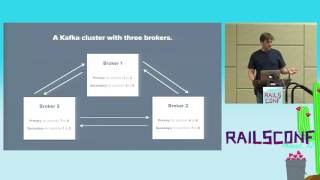

We should consider using event streams, which serve a similar purpose to log analysis but offer an improved mechanism to monitor ongoing events. As Heroku emits events regarding transactions, we can trace transactions in a streamlined manner through a single consumer, providing clarity about the outcomes of our user actions.

00:29:06.920

For example, while buying a product, the series of events emitted can indicate if a request was properly authenticated, and whether the product remained available for installation while maintaining user permission during the entire extent of the transaction's lifecycle.

00:30:28.880

The benefits of using event streams stem from standardization. Instead of managing various backend types or numerous flat file interactions, we have a centralized avenue for monitoring. New consumers can easily register to handle events. This structure keeps the process efficient.

00:31:30.480

Despite the advantages, I have concerns regarding oversight if we omit necessary events. I have more faith in static data than what can be captured dynamically. There is a key difference between structured data and its corresponding events, and missing any events can seriously impact the integrity of our system.

00:32:45.670

Finally, I acknowledge the engineering effort required for implementing effective monitoring and error-handling strategies. It’s crucial to remember that these systems create layers of complexity in operations, so you must maintain clarity. We cannot afford for critical elements to falter, particularly when billing errors or security vulnerabilities could translate to a significant business loss.

00:34:16.010

In any case, I hope there has been something relevant for everyone in the room, whether discussing why data might become invalid, ways to prevent it, or how to detect mistakes when they inevitably happen. Thank you; I really appreciate you all sitting through the talk, and I have about five minutes for questions.