00:00:05.120

Hi, I'm Nathan, and thank you for joining me here on the internet. I'm happy to be here at RailsConf, both on the internet and in my home, which is not the internet. My talk is titled "Can I Break This?" and it's about our intuitions and how to write code that can survive the harsh conditions of production.

00:00:16.859

There will be two relatively code-heavy example problems that we'll work through in a bit, but more on that later. First, let me tell you a bit about myself and what motivated me to give this talk. As I mentioned, I'm Nathan. I live in New York, and you can find me on GitHub and Twitter. I have a website where I'll post these slides.

00:00:34.800

When I'm not coding, I enjoy reading books and karaoke, although I haven't done much of that lately. Instead, I've been streaming a lot of Jackbox games over Zoom. I am also a staff engineer at Betterment, which is a smart money manager, providing cash and investment services and retirement advice. However, I believe that our true product is financial peace of mind.

00:01:00.600

My role at Betterment is on one of the platform teams, focusing on various cross-cutting concerns like performance, reliability, and developer efficiency. I like to think that we deliver operational peace of mind to our product teams. Oh, and I should mention that we are hiring.

00:01:26.220

I've been working with Ruby on Rails since about 2008 when I was hired to help build things for my university's IT department. Over the years, I've observed Rails applications evolve, and I've had the privilege of aiding in solving some of the challenges that have emerged along the way.

00:01:48.180

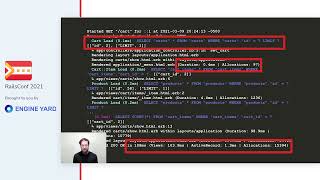

Let me tell you a story about one such challenge. It was many years ago, working at a growing B2B company, probably doing some Monday morning tasks like drinking coffee, when an urgent bug report hit my radar. A customer claimed they had been charged for a subscription 12 times. I checked, and yes, over the weekend, a piece of payment processing code that had been in production for almost a year suddenly decided to run about 12 times instead of once.

00:02:13.380

I had no idea how this was possible, but there it was. I spent the next few hours delving through layers of payment code. I don't remember most of the details, but essentially, something had caused one of our scheduled jobs to get stuck in a retry loop, resulting in a sequence of credit card charges. Once I figured this out, it was pretty obvious what went wrong, but of course, hindsight is 20/20.

00:02:49.440

Thankfully, the client had a great sense of humor, so we refunded them and shipped a fix. All was well again. Perhaps you've experienced something similar. I found myself reflecting deeply on this incident. There was something about this error that kept gnawing at me.

00:03:09.300

The question that kept coming back to me was, why now? Why did it suddenly fail now when it hadn't failed in the past 364 days? This particular bit of code had an entire year to fail, and yet for the majority of that time, it worked just fine.

00:03:31.620

If you're like me, this will lead you to question your entire codebase. What other potential errors might be lurking, scattered throughout years of features? Now, when I started building Rails applications in 2008, I would have been overjoyed to get them functioning as I had imagined.

00:03:43.920

In my early days, all of the code underneath either worked or it didn't. If it didn't work, we fixed it until it did, and then we shipped it. Mission accomplished. But as many of you know, that's not how the real world operates. As I took on more responsibility for the uptime of applications, I realized that the extent to which an application works or doesn't work is more of a sliding scale, and it changes over time.

00:04:02.340

If I were to take an application and simply look at all the top errors in the bug tracker sorted by recency, I might get an ever-changing list. The issues at the top tend to be the most frequent, and those will persistently reappear. If we were to graph that list of issues against their frequency, we might produce a bar chart that tells a story.

00:04:25.020

For many years, I focused entirely on the most salient issues at the top of the bug tracker. These were the errors that made themselves known with enough frequency for me to notice and act upon. But what about everything else? These are the less frequent errors; I mean, I would notice them, but they would often drop off the list, making it hard to prioritize them.

00:04:54.540

Still, some of them, like that payment processing error, are very real errors. They might only occur a couple of times and not right away, but they can add up to significant Monday morning headaches. If you step back and squint, you might start to perceive a curve to this graph.

00:05:25.620

Not to get overly mathematical, but I began considering how different types of errors might fall along a distribution curve. I was particularly interested in the outliers. I realized that errors exist as probabilities. Some errors are more likely to happen than others. Some might have a 100% chance of occurring.

00:05:57.840

But the bugs we don't see right away, the ones that lie in wait, they might only happen one in a thousand times, or even one in a million. Does that mean we shouldn't worry about them? Or should we invest more effort in locating them? Well, probabilities are just math. I'm certainly not a mathematician, so to address these questions, we may need input from one.

00:06:31.560

A couple of years after that payment incident, I read a book while on vacation titled "How Not to Be Wrong: The Power of Mathematical Thinking." It's a fascinating read, and I absolutely recommend it. One line from that book particularly resonated with me: improbable things happen a lot. Upon reading this, I thought about how such improbable errors could apply to the errors listed on my bug tracker.

00:07:05.460

The concept of improbable errors occurring frequently felt very real. It made me reflect on my instincts about bugs and errors, which were often misguided. Application development is, as it turns out, highly probabilistic. Yet, I had no idea how to think in terms of probabilities. Interestingly, I'm not alone; the human brain is not very adept at doing this.

00:07:35.220

So much so that numerous popular science books discuss how our intuitions often lead us astray when dealing with random, infrequent occurrences that are highly probabilistic. We typically struggle to predict what is likely to happen next, and we're poor at calculating risk in those scenarios. Nevertheless, when we write code, we rely on those intuitions daily.

00:08:08.820

Much of our jobs require us to make design decisions based on our instincts about how components will behave in production. Now, as an industry, we already recognize this. We build confidence through tests and automation. We test our assumptions because our assumptions are frequently incorrect.

00:08:23.580

Yet, we can typically only test for errors that manifest—those that are probable. But what should we do about the outliers, the improbable errors? Have we ever asked ourselves how many web requests it takes for an improbable error to become likely? If there’s a one-in-a-million chance of a particular error occurring, should we expect maybe one of those per month, give or take? Or are we doomed to be genuinely shocked when something incredibly improbable occurs?

00:09:07.620

When we write code, we often picture it running under ideal conditions, basing our intuitions on what we expect to see in that happy scenario. But we shouldn’t be surprised when a storm rolls in and conditions change, leading our expectations about how likely something is to break to become incorrect.

00:09:19.440

The key to understanding risk and probabilities is using the right model. If your model is flawed, your best guesses may be even more erroneous. So, what should we do when we confront the realities and challenges of maintaining a live system?

00:09:32.880

How do we minimize the impacts of improbable occurrences when we may not even know what they will be? Years ago, I didn't realize that the word I was trying to express was resilience. When you hear resilience, you may think of circuit breakers and bulkheads, but I'm not merely referring to network resiliency patterns. I’m speaking more broadly about software resilience.

00:10:15.360

We often find ourselves lacking a specific vocabulary for resilience in the software domain, so we borrow our language and concepts from the real world. To explain resilience further, I'm going to refer to an old fable you might know: the story of the oak and the reed.

00:10:33.420

Essentially, the oak tree is strong but rigid. Its response to adversity is to continue upholding its position, standing tall. However, one day, when a storm arises, despite the tree's best efforts, it eventually breaks and falls. In contrast, the reed is small and weak but resilient; it can bend without shattering.

00:10:51.660

During the storm, the reed gets flattened to the ground but quickly recovers once the wind and rain subside. This encapsulates the essence of resilience. Literally, in Latin, 'resilio' means to spring back or rebound.

00:11:08.460

Software systems can exhibit similar behavior. Code designed rigidly often only caters to success, succeeding until it doesn’t and failing catastrophically. Resilient code, on the other hand, is structured to expect failure, and paradoxically, when it does, it manages to come through to the other side successfully.

00:11:27.960

For example, instead of charging a client twelve times, a resilient system might fail to charge a customer at all until a single charge is more assured. The storm in this analogy reflects the realities of the production environment, such as traffic spikes, resource limitations, timeouts, network failures, and service outages. This storm is, in essence, entropy.

00:11:45.900

Entropy tends to escalate over time as an application grows, attracts more users, manages increased traffic, and performs various functions. I suspect this is what we commonly mean when we refer to scaling an application. As we scale, new challenges will arise, and we must solve many of them, or we won't truly scale our application.

00:12:09.600

Entropy will inevitably increase, and if we aren't cautious, we may find ourselves overwhelmed by a multitude of infrequent and improbable errors. Each individual error may not crop up today or even this week; in fact, it might not happen at all until, suddenly, entropy and probability declare that today is the day.

00:12:27.180

Consequently, as we scale, we are destined to grapple with numerous such issues. Are we fated to drown under the weight of these errors? My answer is no—if I believed that, I wouldn't be delivering this talk. We can prepare for adverse conditions. Like the reed, we can cultivate resilience.

00:12:49.320

We can write code that bends but does not break. But how, you might ask? By asking ourselves one simple question: Can I break this? If I can break this, I know it might fail in production. If I can identify ways to prevent a method from failing in production, I can apply those lessons across multiple methods in my code.

00:13:11.640

In doing so, I can reduce uncertainty about what might go wrong, even if I can't predict, with precision, what will go wrong. So let's practice this. Let's apply some resilience to some seemingly real code. I've prepared two practice problems with a few embedded resilience concerns.

00:13:34.160

The rules are simple: we'll focus on persistence operations—those operations that perform updates, insertions, and deletions; they persist changes. I will assume your application is primarily backed by a SQL data store like Postgres or MySQL, as these persistence operations are generally the operations around which we establish our resilience.

00:13:55.740

This persistence code could reside anywhere—in controllers, model callbacks, or perhaps a rake task. For simplicity, my examples will revolve around controllers. One last thing: if this were a live talk, I would ask you to raise your hand if I was speaking too quickly, to remind me to slow down. Since I can't do that here, feel free to pause at any moment, especially if you want to work through a problem on your own.

00:14:23.520

The solutions I employ might not align with the ones you would choose, and that's perfectly fine. I aim to demonstrate a process that anyone can utilize. Let’s follow that process in four steps. Step one: extract the entire persistence operation into one place. I like to place it in a method called save.

00:14:48.579

The objective is to consolidate and isolate it into one coherent list of steps that can be read procedurally. Next, we'll ask ourselves, 'Can I break this?' Knowing what I know and anticipating entropy and chance, what ways could this operation theoretically fail?

00:15:06.540

We'll then refactor to enhance resilience. We might not prevent failures entirely, but we can manage their impact on our code—essentially, we can be the reed. After that, we'll repeat the process until we're at least a bit more confident we're guarding against the riskiest outcomes. Let's begin.

00:15:31.740

I'm about to show you some code, but there’s no need to read it just yet; we’ll step through it together. Problem A: the account transfer. Don’t worry about searching for bugs right now; let’s focus on understanding what’s happening and isolating the parts that involve persistence.

00:15:42.660

At a high level, this is a transfers controller designed to transfer funds between two accounts. Our inputs are the accounts and an amount, and all we do is reassign the new balances and then save them to the accounts. If that works, we send a couple of emails and redirect to the next step, or we re-render the form if something doesn't work.

00:16:00.120

However, everything in the middle—that's the persistence operation. So, let’s extract that. Here's the same controller with the persistence code abstracted away. We’ve created a new concept called a transfer and defined a save method within this context.

00:16:14.640

This save method encapsulates the essential parts of the persistence that we care about. Essentially, we have three steps: we assign balances, save the accounts, and then, if that works, we send the emails before returning true or false at the end.

00:16:44.340

Now, can we break this? What happens if that second save operation fails after we've already saved the first account? That would leave us in a pretty broken state, right? A half-completed transfer. So, let’s add that to our list of ways things can break: an expected step might fail, and data is only partially saved.

00:17:05.220

It doesn’t matter why it doesn’t work. We just know that any step might not work for various reasons. Maybe we've hit a deposit limit for the account, or the account itself is invalid or even closed. Someone could even unplug the server at the wrong moment. All we can do is control the outcomes.

00:17:24.900

Thankfully, there is a resilience pattern we can apply: transactions. A transaction guarantees both isolation and 'all or nothing' execution. Thus, no other service can observe these changes until the transaction commits. If something goes wrong, it will roll back as if it never happened.

00:17:56.580

You might still consider this a failure since the transfer didn't complete, but we have successfully reached a recoverable state where the entire action can be retried later. Let’s keep in mind that when executing processes with multiple steps, we should ensure those steps are within a transaction.

00:18:18.960

Active Record transactions require code exceptions to roll back, so we’ll ensure our save methods raise exceptions if they don’t succeed. This is why I rely on these calls to 'valid.' If you remember, the controller expects our save method to return either true or false, which informs the page to show next.

00:18:38.100

We can still do this by first asking the models if they’re expected to save. If an account is closed or in a bad state, we can return false and render a different page. Thus, the functionality we care about should still perform as intended.

00:19:02.640

Because of these save calls, our method overall may now raise an exception. To me, that's a win for resilience because we’ve differentiated between user-correctable validation errors and everything else that can go wrong, including developer errors. This allows us to be alerted on our issue tracker if something truly exceptional occurs.

00:19:30.480

This was a significant win, but we’re not finished with this example just yet. Remember the steps from earlier—we should repeat step two again to ensure we’ve identified all possible errors and that we haven't introduced new resilience issues.

00:19:46.020

Now, can we break this? You might have already spotted an issue with the first couple of lines of this method. If not, don’t worry—I often need to look at the screen for a long time before realizing this kind of issue exists. But anyone can learn to spot these once you've got the hang of it.

00:20:18.180

The trick is to visualize the code while considering two requests happening simultaneously: two persistence operations on two different servers or in different threads competing for the same information. Let’s visualize it: we have two web processes, both receiving transfer requests at the same time.

00:20:47.640

They query the accounts and their balances, do their calculations to find the new balances, and then each issues their updates. Request 1 commits its update and then Request 2 commits its as well. The problem? Request 2 operated on stale information, resulting in an overwriting of request 1’s changes.

00:21:04.320

Let's add this to our growing list: two processes attempting to update the same data simultaneously competes, leading to one winning while the other fails, or perhaps no one wins at all. How should that work instead? Ideally, we want to ensure that by the time Request 2 queries the balances, Request 1 has already committed its changes.

00:21:24.240

Active Record provides a method for this called 'lock.' This will reload the account models and lock their rows, preventing any other processes from accessing them until we either commit or roll back the transaction.

00:21:47.040

This necessity is why we want to move the transaction to the outermost part of the method. It ensures we hold onto these locks until we validate and persist the entire operation, affording us confidence that as we calculate our balances and save the records, we're the only ones with access.

00:22:10.680

Let's again add this to our list: the means of preventing concurrency issues is by blocking concurrent access with a lock. Rails also offers optimistic locking, and many databases provide advisory locks. All of these methods aim to manage concurrent access in different ways.

00:22:30.480

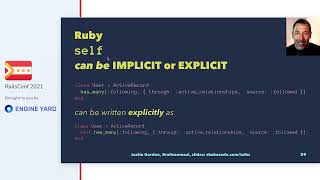

Now, I know some of you are already familiar with locks and transactions, but I must remind myself to think about them in terms of resilience rather than just functionality. You can create seemingly functional code without utilizing either.

00:22:50.520

It's essential to highlight how foundational these two concepts are to resilience. However, they're not straightforward to implement. There’s another resilience challenge in our code, and pulling that transaction to the outer layer may have complicated things further. Let’s explore this by repeating the process a third time.

00:23:12.600

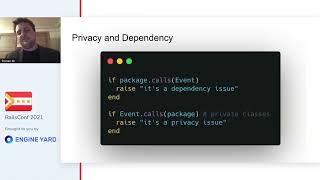

Can we break this now? When we initiate an Active Record transaction, we create a special block of code that exists between the 'begin' and 'commit' statements of a SQL transaction. Let’s underscore that entire section representing our database; operations to the right must be aware they’re in a transaction.

00:23:36.900

This set of operations must remain isolated and capable of rolling back with the transaction. Most of these operations utilize models having the same Active Record connection, indicated by green dots. These operations should ideally not affect the outside world until the transaction completes.

00:23:56.520

However, the next lines in this method present an issue; these mailers are intended to send emails, so their actions do not directly interact with the database. As a result, they are unaware of their role in the transaction and not co-transactional with the remainder of our data.

00:24:09.480

Consequently, before we ascertain whether our transaction will successfully commit, we've already sent one or two emails. Let's add this to our compilation: if a transactional persistence operation has consequences outside the database, we should account for that.

00:24:33.180

You may be wondering if these mailers are genuinely critical. While I didn't start with them because they're typically not the most riveting aspect, it doesn't mean we can disregard them. Many emails hold significant importance, and they aren’t the only outbound messages our applications send.

00:24:55.560

We need to establish this pattern, as it will arise often. So, what can we do? For starters, we could prevent these mailers from running synchronously during our web request. Right now, we connect to an SMTP server or utilize a mail API.

00:25:11.640

Fortunately, Rails has a built-in method for executing background operations: the Active Job framework. However, it may still not render this transaction safe for those operations. If I were to be in a room with all of you and ask how many of you utilize a Redis backend for the Active Job queue, I suspect I would see a majority of hands raised.

00:25:38.820

Given the number of downloads from RubyGems, most likely already rely on this. However, for most of you, Active Job isn't safe for use within a database transaction. It won't become co-transactional with your primary data store, which means the job worker may execute them before the transaction commits, if it ever does.

00:26:05.400

So, if your job queue isn't co-transactional with your primary data, what should you do? Dual writes present a notoriously tricky issue, and while I won’t delve into that, one common practice is to enqueue jobs in an after-commit callback, effectively lining them up just after the transaction.

00:26:30.240

However, this leads us to an alternate problem: we can't guarantee these jobs will run even if the transaction commits. We might execute the account transfer, but we wouldn't notify anyone. While this might be acceptable for certain use cases, at the very least, we avoid sending an email that we didn’t intend based upon a failed operation.

00:26:51.720

If your code resembles this everywhere, what would it take to uncover when you drop a message? Are you monitoring that? Improbable errors often happen, so we should expect such occurrences. What if I told you we could, with some ingenuity, ensure these actions prove transaction-safe?

00:27:13.680

Many clever methods exist for achieving this, but they generally boil down to a simple principle: we save a record to the database, indicating our intention to send the email, yet we don’t send it immediately. Unfortunately, this introduces the requirement for a new background process to monitor the database for these intended emails. This feels complicated.

00:27:37.560

Our primary queue operates within its own datastore, but we may utilize a database-backed queue for our transactions and funnel the jobs into the main queue. This concept is often referred to as the outbox pattern, although I personally seldom see it in practice, likely because it creates a lot of operational complexity in response to issues we frequently overlook.

00:28:03.420

But just because we aren't aware of them doesn’t mean those errors aren’t happening. So, this decision doesn't feel entirely satisfying. Do we enhance resilience but simultaneously increase operational complexity? Or are we okay with dropping messages when it’s quicker? Which of these reduces uncertainty? Which will yield a system that can bend but not break under adverse conditions?

00:28:39.120

Consider this my proposal: think about employing a database-backed queue by default so you don't have to invent your own co-transactional semantics to be resilient. You might make exceptions for high-volume transactions, but in most cases, optimizing for resilience is exceptionally convenient, providing the assurance of co-transactional jobs and queues.

00:29:08.340

Furthermore, whatever you do, avoid deleting failing jobs by default. As long as those jobs aren't lost, they can always be completed eventually. Once again, try to weather the storm without breaking. Now, let’s revisit those mailer calls with a database-backed Active Job adapter. We can simply call deliver later, and these dots become green.

00:29:37.560

Will it scale? It certainly will withstand some forms of entropy, so I would argue yes by certain definitions of scale. Let’s finish our list: if transactional operations have side effects, we can use a database-backed queue to safely unblock the critical path without risking item loss.

00:29:50.760

Now we have arrived at a much more resilient iteration of our initial save method. This whole operation will either succeed or fail successfully, and it's significantly less prone to breaking in perplexing ways several months later.

00:30:05.520

Yes, it contains more lines of code, and it does appear more complicated. There are certainly ways to condense this code and enhance clarity, but I leave that as an exercise for you. We've recognized that we can improve resilience, which in turn can diminish operational uncertainty. We now understand that by asking, 'Can I break this?' we identified and alleviated actual concerns, demonstrating that this process is effective.

00:30:47.220

Moreover, when modifying those emails, we successfully found a resilience pattern applicable in broader contexts, not just emails. This leads me to a significant point about writing resilient systems: many high-value actions tend to carry side effects.

00:31:14.340

While it would be ideal for everything we perform to be saved in the database, this rarely occurs. Many applications communicate with APIs, message queues, shared file systems, and more, and how we handle these side effects greatly influences our overall resilience. As such, we must plan for them.

00:31:35.520

Let’s consider one final practice problem to drive this point home. Problem B: the purchase button. It sounds simple—when a customer clicks a purchase button, we charge them for an item. Once again, don’t concern yourselves with identifying bugs right now; instead, we want to pinpoint and extract the persistence components.

00:31:58.740

This is simply a hypothetical. Still, I imagine we’ve all likely encountered scenarios like this—involving order verification, ensuring valid shipping and payment info, executing billing and fulfillment actions, and marking the order as complete. The rest, like before, involves controller actions, so let’s isolate the persistence code at the center.

00:32:32.940

Here we have the same controller. We’ve created a purchase class; once again, we supply the order as an argument and define a controller-friendly save method within this class.

00:32:48.180

And just to give you a glimpse, the purchase class is quite straightforward with its save method. It checks validity and attempts charges and updates. Now, let’s ask ourselves: can we break this?

00:33:01.560

We can apply the insights from our previous problem. Our goal is to prevent duplicate purchases in the event the operation is double submitted. We'll do what we did previously by implementing a transaction and a lock. There we have it: a lock held in a transaction encircling the entire operation.

00:33:24.720

One advantage of this approach is that after we secure the purchase lock, we can check to ensure it hasn't already been completed. This verification is crucial; when implementing locks, we must validate our actions, or it’s not safe.

00:33:42.120

However, remember the key rule of transactions: the block is green, so everything to its right requires a green dot. We've got a few other colors at play here because the billing and fulfillment services are remote HTTP APIs with independent databases.

00:34:01.680

Given this is a purchase button, we need to ensure the purchase was successful before directing the user to any confirmation page. Thus, we face a catch-22: this code isn’t safe left within a transaction, but we also can’t just fire and forget it.

00:34:16.560

What do we do, then? Here’s where creativity comes into play. Currently, we try to execute everything simultaneously, meaning the purchase button on the client anticipates a yes or no answer from the billing API.

00:34:32.520

But if the database operation rolls back for any reason, we might have already charged the customer’s card. This presents yet another dual write dilemma, and coordinating dual writes tends to be critically complex.

00:34:54.720

To avoid this pitfall, we need to embrace a more nuanced strategy. If we attempt to background this action, it inevitably leads to more intricacy. After the client submits their request, the app could respond with a reassuring message, stating that the action has been submitted, and we’ll do our utmost to follow through.

00:35:15.240

We can then send a purchase confirmation email once the charge clears and update the order page to reflect its status. This strategy also enables us to better accommodate potential failure states, contributing another layer of resilience.

00:35:34.860

If something fails or takes too long to process, we can implement the background process to subsequently cancel the order if need be. Thus, we once again ensure that our transaction is green.

00:35:51.060

Our primary assumption is that you are using a database-backed queue or possess a mechanism for asserting co-transactionality. So here's the next entry: when an external API request forms part of the critical path, we can separate the persistence action into steps linked together via a background job.

00:36:08.760

Next, let’s examine that background job. Can we break this? A critical aspect of any background job is that it can retry—if desired—typically with an increasing interval between each attempt. This is exactly what we want here; we want the job to have multiple opportunities to succeed before marking it as failed.

00:36:38.880

Most job backends guarantee at least one execution, meaning there's no assurance our job is executed only once. With this understanding, this job could fail, possibly charging our customer multiple times—perhaps even twelve.

00:37:05.040

Moreover, it gets even more convoluted; we might successfully send a request to the billing service, only to encounter a failure when awaiting the response, leaving us uncertain whether the charge succeeded. Fortunately, there’s another resilience pattern we can incorporate: item potency.

00:37:28.920

What we want is to employ an item potency key. By including a key with the request, we assure that subsequent retries using that same key yield the same response as earlier without duplicating the action. This assumes the remote service offers item potency; if it doesn't, we may need to pivot creatively.

00:37:50.760

However, if you control the service, it’s best to add item potency support, and most third-party payment providers also support item potency keys. Consequently, you should rely on these every time you're charging a customer.

00:38:16.200

Here’s our example of implementing the item potency key; it’s included in the arguments of the perform method to maintain a consistent value every time the job executes. As a result, the job can retry as many times as necessary, without the billing or fulfillment API duplicating their actions.

00:38:48.300

With that, we find ourselves in a position where we want something to operate effectively just once—we can retry it with item potency until it either succeeds or we decide on an alternative approach. Whatever enables us to weather the storm.

00:39:05.520

At this point, we've reached a suitable stopping point. While we could delve deeper, we have already divided the operations into two significantly more resilient phases. What’s the key takeaway from this purchase button problem?

00:39:22.260

My primary takeaway is that side effects are integral to persistence. I would argue that side effects are, in essence, saves. We might be making API requests, publishing messages or jobs, and uploading files, among other actions.

00:39:36.960

Though these aren't directly classified as database operations, they still serve as forms of information persistence—they create changes in the universe. Even emails can be viewed as a write-only, distributed data store!

00:39:53.640

Now, I realize that may seem like a stretch, but for the sake of resilience, I’ll stand by that argument. So, if resilience remains our objective, we need resilient patterns to perform side effects. Let's summarize all the patterns we’ve explored:

00:40:11.280

We can utilize a transaction to ensure atomic execution and isolation; subsequently, we can employ a lock within the transaction to prevent concurrent data access. For side effects outside the database, we can leverage a database-backed queue to safely unblock the critical path.

00:40:30.420

In more intricate cases, we can fragment our persistence operation into steps chained together by background jobs so that we can capture external results asynchronously. Thus, leveraging an item potency key and retries solidifies this asynchronous process, ensuring resilience against potential failures.

00:40:56.220

I want to add one last insight to our collection: we should align our implementation with the needs of the customer. In our example, it wasn’t enough to merely execute the action behind a loading spinner.

00:41:17.220

We needed to deliberate on how a positive user experience could be anchored by resilient patterns. That’s my final point: there’s no universal solution for resilience, and every decision is a trade-off. Our implementation choices profoundly impact product behavior.

00:41:39.960

Before I conclude, I want to mention that my approach to these problems greatly stems from opportunities I've had working with and learning from remarkable individuals who invested substantial thought into software resilience long before I did. It’s because of them that I learned to ask that pivotal question: Can I break this?

00:41:54.600

Thank you.