00:00:11.990

Sweet! All right, everybody ready to go? I'm sorry, this is a very technical talk in a very sleepy time slot. So if you fall asleep in the middle, I will be super offended, but I won't call you out too hard. So, yeah, I'm Stella Cotton. If you don't know me, I'm an engineer at Heroku, and today we're going to talk about distributed tracing.

00:00:19.320

Before we get started, a couple of housekeeping notes: I'll tweet out a link to my slides afterwards, so they'll be on the internet. There will be some code samples and some links, so you'll be able to check that out if you want to take a closer look. I also have a favor: if you've seen me speak before, I have probably asked you this favor. Ruby karaoke last night! Did anybody go? Yeah! It totally destroyed my voice, so I'm going to need to drink some water. But otherwise, I get really awkward and I don't like to do that, so to fill the silence, I'm going to ask you to do something my friend Lola Chalene came up with, which is this: each time I take a drink of water, start clapping and cheering. All right? So, we're going to try this out. I'm going to do this; yeah! Hopefully that happens a lot during this talk so that I won't lose my voice.

00:00:39.000

Now, back to distributed tracing. I work on a tool team at Heroku, and we've been working on implementing distributed tracing for our internal services. Normally, I do this whole Brady Bunch team thing with photos, but I want to acknowledge that a lot of the trial and error and discovery that went into this talk was really a team effort across my entire team. So, the basics of distributed tracing: who knows what distributed tracing is?

00:01:18.150

Okay, cool! Who has it at their company right now? Oh, I see a few hands. If you don't actually know what it is, or you're not really sure how you would implement it, then you're in the right place. This is the right talk for you. It's basically just the ability to trace a request across distributed system boundaries. And you might think, 'Stella, we are Rails developers. This is not a distributed systems conference. This is not Scala or Strange Loop. You should go to those.' But really, there's this idea of a distributed system, which is just a collection of independent computers that appear to a user to act as a single coherent system.

00:01:40.470

As a user loads your website, and more than one service does some work to render that request, you actually have a distributed system. Technically, and somebody will definitely call me out on this, if you have a database and a Rails app, that's already a distributed system. But I'm really going to talk more about just the application layer today. A simple use case for distributed tracing: you run an e-commerce site, and you want users to be able to see all their recent orders; you have a monolithic architecture. You've got one web process or multiple web processes, but they're all running the same kind of code and they're going to return information about users' orders. Users have many orders, and the orders have many items. It's a very simple Rails web application where we authenticate our user, and our controller grabs all the orders and all the items to render on a page; it's not a big deal, just a single app and a single process.

00:03:18.700

Now we're going to add some more requirements: we've got a mobile app or two, so they need authentication. Suddenly, it's just a little more complicated. There's a team dedicated to authentication, so now you maybe have an authentication service. They don't care at all about orders, and that makes sense. They don't need to know about your stuff, and you don't need to know about theirs. It could be a separate Rails app on the same server, or it could be on a different server altogether. It's going to keep getting more complicated. Now we want to show recommendations based on past purchases, and the team in charge of these recommendations is a bunch of data science folks who write a bunch of machine learning in Python. So, naturally, the answer is microservices. Seriously, it might be microservices. But as your engineering team and your product grow, you don't have to hop on the microservices bandwagon to find yourself supporting multiple services. Maybe they're written in different languages, and each might have its own infrastructure needs, like our recommendation engine. As our web apps and our team grow larger, these services that you maintain might begin to look less and less like a very consistent garden and more like a collection of different plants in lots of different kinds of boxes.

00:04:57.360

So, where does this distributed tracing fit into this big picture? One day, your e-commerce app goes to load your website and it starts loading very, very slowly. If you're going to look in your application performance monitoring tools like New Relic or Skylight, or use a profiling tool, you can see that the recommendation services are taking a really long time to load. But with the single process monitoring tools, all of the services that you own in your system or that your company owns are going to look just like third-party API calls. You're getting as much information about their latency as you would about Stripe or GitHub or whoever you're calling out to. From that user's perspective, you know there's 500 extra milliseconds to get to the recommendations, but you don't really know why without reaching out to the recommendations team, figuring out what kind of profiling tool they use for Python, and digging into their services. It's just more and more complicated as your system becomes more complicated. And at the end of the day, you cannot tell a coherent macro story about your application by monitoring these individual processes. If you've ever done any performance work, people are not very good at guessing and understanding bottlenecks.

00:05:20.710

So, what can we do to increase our visibility into the system and tell that macro story? Distributed tracing can help. It’s a way of commoditizing knowledge. Adrian Cole, one of the Zipkin maintainers, talks about how in increasingly complex systems, you want to give everyone tools to understand this whole system as a whole without having to rely on these experts. So, cool; you're on board. I’ve convinced you that you need this, or at least it makes sense. But what might actually be stopping you from implementing this at your company? A few different things make it tough to go from theory to practice with distributed tracing.

00:06:18.039

First and foremost, it's kind of outside the Ruby wheelhouse. Ruby is not well represented in the ecosystem at large; most people are working in Go, Java, or Python. You're not going to find a lot of sample apps or implementations that are written in Ruby. There’s also a lot of domain-specific vocabulary that goes into distributed tracing, so reading through the docs can feel slow. Finally, the most difficult hurdle of all is that the ecosystem is extremely fractured. It's changing constantly because it’s about tracing everything everywhere across frameworks and across languages. Navigating the solutions that are out there and figuring out which ones are right for you is not a trivial task. So, we're going to work on how to get past some of these hurdles today.

00:07:01.930

We're going to start by talking about the theory, which will help you get comfortable with the fundamentals. Then, we'll cover a checklist for evaluating distributed tracing systems. All right! I love that trick. Let’s start with the basics: black box tracing. The idea of a black box is that you do not know about and you can’t change anything inside your applications. An example of black box tracing would be capturing and logging all of the traffic that comes in and out at a lower level in your application, like at your TCP layer. All of that data goes into a single log, aggregated, and then with the power of statistics, you magically understand the behavior of your system based on timestamps.

00:08:26.130

But I'm not going to talk a lot about black box tracing today because for us at Heroku, it was not a great fit and it’s not a great fit for a lot of companies for a couple of reasons. One of them is that you need a lot of data to get accuracy based on statistical inference, and because it uses statistical analysis, it can have some delays returning results. But the biggest problem is that in an event-driven system, such as Sidekiq or a multi-threaded system, you can't guarantee causality.

00:09:24.490

What does that mean exactly? This is sort of an arbitrary code example, but it helps to show the problem. Say service one kicks off an async job and then immediately, synchronously calls out to service two. If there's no delay in your queue, your timestamps are going to correctly correlate service one with the async job. Awesome! But if you start getting queuing delays and latency, then the timestamps might actually make it consistently look like your second service is making that call. White box tracing is a tool that people use to help get around that problem. It assumes that you have an understanding of the system and can actually change your system.

00:10:29.600

So how do we understand the paths that our requests take through our system? We explicitly include information about where each request came from, using something called metadata propagation. That's a type of white box tracing. It’s just a fancy way of saying that we can change our Rails apps, or any kind of app, to explicitly pass along information so that you have an explicit trail of how things go. Another benefit of white box tracing is real-time analysis: you can get results in almost real time. Now, a very short history of metadata propagation. The example that everyone talks about when discussing metadata propagation is Dapper and the open-source library it inspired, Zipkin.

00:11:54.060

Dapper was published by Google in 2010, but it's not actually the first distributed systems debugging tool to be built. Why is Dapper so influential? Well, honestly, it’s because the systems that came before it had their papers published pretty early in their development. In contrast, Google published this paper after Dapper had been running in production at Google scale for many years, so they were able to say not only that it's viable at that scale, but also that it was valuable. Next comes Zipkin, a project that was started at Twitter during their very first hack week, with the goal of implementing Dapper. They open-sourced it in 2012, and it's currently maintained by Adrian Cole, who is no longer at Twitter but now works at Pivotal, spending most of his time in the distributed tracing ecosystem.

00:12:55.890

From here on out, when I use the term distributed tracing, I'm going to be talking about Dapper and Zipkin-like systems, because 'white box metadata propagation distributed tracing systems' is not quite as zippy. If you want to read more about things beyond just metadata propagation, there's a pretty cool paper that gives an overview of tracing distributed systems beyond this. So how do we actually do this? I'm going to walk us through a few main components that power most systems of this caliber. First is the tracer; it's the instrumentation you actually install in your application itself. There’s a transport component, which takes the data that is collected and sends it over to the distributed tracing collector.

00:14:14.620

That’s a separate app that runs, processes, and stores the data in storage components. Finally, there’s a UI component typically running inside of that which allows you to view your tracing data. We’ll first talk about the level that’s closest to your application itself—the tracer. It’s how you trace individual requests, and it lives inside your application. In the Ruby world, it’s installed as a gem, just like any other performance monitoring agent that would monitor a single process. A tracer's job is to record data from each system so that we can tell a full story about your request. You can think of the entire story of a single request lifecycle as a tree, this whole system captured in a single trace.

00:15:45.590

Now, let's introduce a vocab word: span. Within a single trace, there are many spans; each span is a chapter in that story. In this case, our e-commerce app calls out to the order service and gets a response back, and that's a single span. In fact, any discrete piece of work can be captured by a span. It doesn’t have to be a network request. If we want to start mapping out this system, what kind of information are we going to start passing along? You could start with just a request ID, so that you can find every step the request took through your logs and see that it's all one request. But you're going to have the same issue that you have with black box tracing: you can't guarantee causality just based on the timestamps.

00:16:58.100

Therefore, you need to explicitly create a relationship between each of these components, and a really good way to do this is with a parent-child relationship. The first request in the system doesn’t have a parent, because someone just clicked a button loading a website. We know that's at the top of the tree. When your auth process starts the e-commerce process, it's going to modify the request headers to pass along a randomly generated ID as a parent ID. Here it’s set to one, but it could really be anything, and it keeps going on and on with each request. The trace is ultimately made up of many of these parent-child relationships, and it forms what’s called a directed acyclic graph.
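To make the parent-child idea concrete, here is a minimal sketch in plain Ruby. The `Span` struct, the helper names, and the service names are all made up for illustration; no real tracer's data model is implied. Every span shares the trace ID of the root, and each child records the ID of the span that caused it, which is enough to rebuild the tree.

```ruby
require 'securerandom'

# Hypothetical span record: one trace ID shared by the whole request,
# a unique ID per span, and the ID of the span that caused it.
Span = Struct.new(:trace_id, :id, :parent_id, :name, keyword_init: true)

# The first span in a trace has no parent.
def root_span(name)
  Span.new(trace_id: SecureRandom.hex(8), id: SecureRandom.hex(8),
           parent_id: nil, name: name)
end

# A child inherits the trace ID and points back at its parent.
def child_span(parent, name)
  Span.new(trace_id: parent.trace_id, id: SecureRandom.hex(8),
           parent_id: parent.id, name: name)
end

# Rebuild the tree from the flat list by following parent IDs.
def tree_lines(spans, parent_id = nil, depth = 0, out = [])
  spans.select { |s| s.parent_id == parent_id }.each do |span|
    out << "#{'  ' * depth}#{span.name}"
    tree_lines(spans, span.id, depth + 1, out)
  end
  out
end

root   = root_span('e-commerce')
auth   = child_span(root, 'auth')
orders = child_span(root, 'orders')
items  = child_span(orders, 'items')

lines = tree_lines([root, auth, orders, items])
puts lines
```

Because the relationships are stored explicitly, the tree comes out the same no matter what order the spans arrive in or what their timestamps say.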

00:18:33.139

By tying all of these components together, we’re able to understand this not just as an image, but as a data structure. We’ll talk in a few minutes about how the tracer actually accomplishes that in our code. If those relationships are all we wanted to know, we could stop there. But that’s not really going to help us in the long term with debugging. Ultimately, we want to know more about timing information. We can use annotations to create a richer ecosystem of information around these requests. By explicitly annotating with timestamps when each of these things occurs in the cycle, we can begin to understand latency. Hopefully, you're not seeing a second of latency between every event, and these would definitely not be reasonable timestamps, but this is just an example.

00:20:35.379

Let's zoom in on our auth process and how it communicates with the e-commerce process. In addition to passing along the trace ID and parent ID, the child span will also annotate the request with a tag and a timestamp. By having our auth process annotate that it’s sending the request, and our e-commerce app annotate that it received the request, we can understand the network latency between the two. If you see a lot of requests queuing up, you would see that time go up. On the other hand, you can compare the two timestamps between the server receiving the request and the server sending back the response, and you could see if your app is slowing down. You’d see latency increase between those two events. Finally, you’re able to close out the cycle by indicating that the client has received the final response.
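As a rough illustration of what those four annotations buy you, the sketch below subtracts them pairwise. The event names and the numbers are invented for the example, and the timestamps are plain integer milliseconds rather than any particular tracer's format.

```ruby
# Timestamps in integer milliseconds since the client started the call.
# The four events mirror the client/server send/receive annotations
# described above; the values are made up.
annotations = {
  client_send:    0,
  server_receive: 20,
  server_send:    170,
  client_receive: 185
}

# Break one span down into time on the wire and time inside the server.
def latency_breakdown(a)
  {
    request_in_flight:  a[:server_receive] - a[:client_send],
    server_processing:  a[:server_send]    - a[:server_receive],
    response_in_flight: a[:client_receive] - a[:server_send],
    total:              a[:client_receive] - a[:client_send]
  }
end

breakdown = latency_breakdown(annotations)
puts breakdown
```

A growing `request_in_flight` would point at queuing or the network, while a growing `server_processing` would point at the app itself. Note that the two in-flight numbers compare timestamps taken on different hosts, so they are only as good as the agreement between those hosts' clocks.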

00:21:53.869

Now let's talk about what happens to that data. Each process is going to send information via the transport layer to a separate application that's going to aggregate that data and do a bunch of processing on it. So how does that process not add latency? First, it's only going to propagate those IDs in band, by adding information to your headers. Then it will gather the rest of the data and report it out of band to a collector, which is what actually does the processing and the storing. For example, Zipkin's Ruby tracer leverages Sucker Punch to make a threaded async call out to the server. This is similar to what you would see in your metrics logging and systems that use threads for collecting data.
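The out-of-band idea can be sketched with nothing but Ruby's built-in `Thread` and `Queue`. This is not how any particular tracer is implemented; `AsyncReporter` is a hypothetical name, and the block passed to it stands in for whatever actually ships spans to the collector.

```ruby
# Hypothetical reporter: the request thread only pushes onto a queue,
# and a background thread does the slow work of sending spans.
class AsyncReporter
  def initialize(&sender)
    @queue  = Queue.new
    @sender = sender
    @worker = Thread.new do
      # Block until a span arrives; :stop ends the loop.
      while (span = @queue.pop) != :stop
        @sender.call(span)
      end
    end
  end

  # Called from the request path: cheap, no network I/O here.
  def report(span)
    @queue << span
  end

  # Flush everything queued so far and stop the worker.
  def shutdown
    @queue << :stop
    @worker.join
  end
end

delivered = []
reporter  = AsyncReporter.new { |span| delivered << span }
reporter.report({ name: 'GET /orders', duration_ms: 42 })
reporter.shutdown
```

The request only pays for a queue push; the actual delivery happens on the worker thread after the response has gone out.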

00:22:53.750

The data collected by the tracer is transported via the transport layer and collected, and is finally ready to be displayed to you in the UI. The graph we reviewed here is a good way to understand how the request travels, but it's not actually helpful for understanding latency or even comprehending the relationship between calls within systems. We’re going to use Gantt charts or swim lanes instead. The opentracing.io documentation has a request tree similar to ours, and looking at it in this format will allow you to see each of the different services in the same way that we did before, but now we can better visualize how much time is spent in each sub-request and how much time that takes relative to the other requests.
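For a feel of why the swim-lane view is easier to read than the tree, here is a toy text renderer. The span names, the timings, and the one-character-per-10-ms scale are all invented for the example.

```ruby
# Each span: a name plus start/finish offsets from the start of the trace.
# One character of width stands for 10 ms; the numbers are made up.
spans = [
  { name: 'e-commerce',      start: 0, finish: 12 },
  { name: 'auth',            start: 1, finish: 4 },
  { name: 'orders',          start: 4, finish: 9 },
  { name: 'recommendations', start: 9, finish: 12 }
]

# Render one line per span: a padded label, then a bar positioned
# and sized according to the span's start and duration.
def swim_lanes(spans, label_width: 17)
  spans.map do |span|
    offset = ' ' * span[:start]
    bar    = '#' * (span[:finish] - span[:start])
    "#{span[:name].ljust(label_width)}#{offset}#{bar}"
  end
end

lanes = swim_lanes(spans)
puts lanes
```

Reading down the bars shows at a glance which sub-request dominates the total and which ones run one after another.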

00:24:32.730

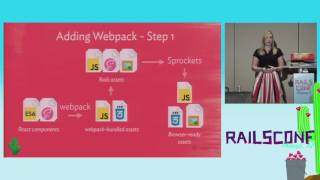

You can also instrument and visualize internal traces happening inside of a service, not just service to service communications. Here you will see that the billing service is being blocked by the authorization service. You can also see we have a threaded or parallel job execution inside the resource allocation service. If there starts to be a widening gap between these two adjacent services, it could suggest that network requests are queuing. Now we know what we want, but how are we going to get it done? At a minimum, you want to record information when a request comes in and when a request goes out. How do we do that programmatically in Ruby? Usually with the power of rack middleware.

00:26:29.940

If you’re running a Ruby app, the odds are that you are also running a Rack app. Rack provides a common interface for servers and applications to communicate with each other. Sinatra and Rails both use it, and it serves as a single entry and exit point for client requests entering the system. The powerful thing about Rack is that it’s very easy to add middleware that can fit between your server and your application, allowing you to customize requests. A basic Rack app, if you’re not familiar with it, is a Ruby object that responds to the call method, which takes one argument (the environment hash) and returns status, headers, and body. Under the hood, Rails and Sinatra utilize this structure, and the middleware format is quite similar.
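If you haven't seen one written out, a Rack app and a middleware can be sketched without even loading the Rack gem, since the interface is just a `call` method. The class names and the `x-elapsed-ms` header below are made up for illustration.

```ruby
# A minimal Rack-style app: any object that responds to `call`, takes the
# environment hash, and returns [status, headers, body].
class HelloApp
  def call(env)
    body = "Hello from #{env['PATH_INFO']}"
    [200, { 'content-type' => 'text/plain' }, [body]]
  end
end

# A middleware has the same shape, but wraps the next app in the chain.
class TimingMiddleware
  def initialize(app)
    @app = app
  end

  def call(env)
    started = Process.clock_gettime(Process::CLOCK_MONOTONIC)
    status, headers, body = @app.call(env) # hand off to the wrapped app
    elapsed_ms = ((Process.clock_gettime(Process::CLOCK_MONOTONIC) - started) * 1000).round(2)
    # 'x-elapsed-ms' is a hypothetical header used only for this example.
    [status, headers.merge('x-elapsed-ms' => elapsed_ms.to_s), body]
  end
end

app = TimingMiddleware.new(HelloApp.new)
status, headers, body = app.call('PATH_INFO' => '/orders')
```

In a real app you would register the middleware with the framework (for example with `use` in a `config.ru`) rather than wrapping the app by hand.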

00:27:33.990

If we wanted to implement tracing inside our middleware, what might that method look like? As discussed earlier, we want to start a new span on every request. It’s going to record that it received the request with a server received annotation, as we talked about earlier. It’s going to yield to our Rack app to ensure it executes the next step in the chain and runs your code before returning the response. This is just pseudocode; this is not actually a working tracer, but Zipkin has a great implementation that you can check out online. So then we could just tell our application to use our middleware to instrument every request. You’re never going to want to sample every single request that comes in; that is crazy and excessive when you have high traffic.
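The slide's pseudocode isn't in the transcript, so the following is a stand-in sketch rather than the talk's code or Zipkin's. `ToyTracer`, its methods, and the annotation names are all hypothetical; the point is only the shape: decide whether to sample, start a span, annotate on the way in, call down the chain, annotate on the way out.

```ruby
require 'securerandom'

# Hypothetical in-memory tracer standing in for a real one.
class ToyTracer
  attr_reader :spans

  def initialize(sample_rate: 1.0)
    @sample_rate = sample_rate
    @spans = []
  end

  # Trace roughly `sample_rate` of requests (1.0 = all, 0.0 = none).
  def sampled?
    rand < @sample_rate
  end

  def start_span(name)
    span = { id: SecureRandom.hex(8), name: name, annotations: [] }
    @spans << span
    span
  end

  def annotate(span, value)
    span[:annotations] << { value: value, timestamp: Time.now.to_f }
  end
end

class TracingMiddleware
  def initialize(app, tracer)
    @app = app
    @tracer = tracer
  end

  def call(env)
    # Unsampled requests pass straight through with no tracing work.
    return @app.call(env) unless @tracer.sampled?

    span = @tracer.start_span("#{env['REQUEST_METHOD']} #{env['PATH_INFO']}")
    @tracer.annotate(span, 'server_received')
    begin
      @app.call(env) # run the rest of the chain and return its response
    ensure
      @tracer.annotate(span, 'server_sent') # recorded even if the app raises
    end
  end
end

tracer = ToyTracer.new(sample_rate: 1.0)
inner  = ->(_env) { [200, { 'content-type' => 'text/plain' }, ['ok']] }
app    = TracingMiddleware.new(inner, tracer)
status, _headers, _body = app.call('REQUEST_METHOD' => 'GET', 'PATH_INFO' => '/orders')
```

With `sample_rate: 0.0` the same middleware records nothing, which is the knob a real tracer would expose for high-traffic apps.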

00:28:52.090

So tracing solutions will typically ask you to configure a sample rate. We have our requests coming in, but to generate that big relationship tree we saw earlier, we’ll also need to record information when our request leaves our system. These can be requests to external APIs like Stripe, GitHub, etc. But if you control that next service that it’s talking to, you can keep building this chain. You can do that with more middleware. If you use an HTTP client that supports middleware, like Faraday or Excon, you can easily incorporate tracing into the client. Let’s use Faraday as an example since it has a similar pattern to Rack.

00:30:18.990

We pass in our HTTP client app, do some tracing, and keep calling down the chain. It’s pretty similar to Rack, but the tracing itself is going to need to manipulate the headers to pass along some tracing information. That way, if we’re calling out to an external service like Stripe, they’re going to ignore these headers completely because they won’t know what they are. But if you’re actually calling another service that’s within your purview, you’ll be able to see those details further down. Each of these colored segments represents an instrumented application. We want to record that we’re starting a client request, record that we’ve received the client response, and add the middleware just as we did with Rack. It’s pretty simple; for some HTTP clients, you can do it programmatically for all your requests.
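Here is a sketch of that client-side half, written as a plain Ruby object in the Faraday middleware shape (wrap an app, implement `call(env)`) so it runs without the Faraday gem. The header names follow Zipkin's B3 convention, which the talk doesn't spell out; the class name, the `env` hash layout, and the URL are assumptions for illustration.

```ruby
require 'securerandom'

# Client-side tracing middleware in the Faraday style. `current_span` is a
# hypothetical hash describing the span that is making the outgoing call.
class ClientTracingMiddleware
  def initialize(app, current_span)
    @app = app
    @current_span = current_span
  end

  def call(env)
    child_id = SecureRandom.hex(8)
    # Propagate the IDs in band so the next service can attach its span
    # to the same trace. Services that don't know these headers ignore them.
    env[:request_headers].merge!(
      'X-B3-TraceId'      => @current_span[:trace_id],
      'X-B3-ParentSpanId' => @current_span[:id],
      'X-B3-SpanId'       => child_id,
      'X-B3-Sampled'      => '1'
    )
    # A real tracer would record "client send" here...
    response = @app.call(env)
    # ...and "client receive" here.
    response
  end
end

current = { trace_id: SecureRandom.hex(8), id: SecureRandom.hex(8) }
seen    = nil
# Stand-in for the HTTP adapter at the bottom of the stack.
adapter = lambda do |env|
  seen = env[:request_headers].dup
  { status: 200 }
end

stack    = ClientTracingMiddleware.new(adapter, current)
response = stack.call(url: 'https://orders.example.com/orders', request_headers: {})
```

On the receiving side, the Rack middleware would read these headers instead of starting a brand-new trace, which is what links the two services' spans together.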

00:31:43.610

We’ve covered some of the basics for how distributed tracing can be implemented. Now, let’s talk about how to evaluate which system is right for you. The first question is how are you going to get this working? I’ll caveat this: the ecosystem is ever-changing, so this information could already be incorrect or obsolete, especially if you’re watching this at home on the web. But let's talk about whether you should buy a system. Yes, if the math works out for you. It’s kind of hard for me to really say whether you should do that. If your resources are limited and you can find a solution that works for you that isn’t too expensive, then probably yes.

00:32:45.260

Unless you’re running a super complex system, that is. Lightstep and Traceview are examples that offer Ruby support. Your APM provider might actually have it too. Adopting an open-source solution is another option. For us, a paid solution just didn’t work. If you have people on your team who are comfortable with the underlying framework and have some capacity for managing infrastructure, then this could really work for you. For us, with a small team of just a few engineers, we got Zipkin up and running in a couple of months while simultaneously managing a million other tasks. This was partly because we leveraged Heroku to make the infrastructure components much easier.

00:34:04.000

If you want to use a fully open-source solution with Ruby, Zipkin is pretty much your only option as far as I know. You may have heard of OpenTracing and might be asking, 'Stella, what about this OpenTracing thing? That seems cool.' A common misunderstanding is that OpenTracing is a tracing implementation. It is not; it is an API. Its job is to standardize the instrumentation so that all of the tracing providers that conform to the API are interchangeable on your app side. This means if you want to switch from an open-source provider to a paid provider, or vice versa, you won't have to re-instrument each and every service you maintain.

00:35:35.390

In theory, they all have to be good citizens conforming to this API that is all consistent. Where is OpenTracing currently? They published a Ruby API guideline back in January, but only Lightstep, which is a product in private beta, has actually implemented a tracer that conforms to that API. The existing implementations are still going to need a bridge between the tracing implementation they have today and the OpenTracing API.

00:36:02.210

Another thing that’s not clear still is interoperability. For instance, if you have a Ruby app tracing with OpenTracing API, everything's great, but if you have a paid provider that doesn’t support Ruby, you can’t necessarily use two providers that use OpenTracing and still send to the same collection system. It’s really only at the app level. Keep in mind that for both open-source and hosted solutions, Ruby support means a really wide range of things. At the minimum, it means that you can start and end a trace in your Ruby app, which is good, but you might still have to write all your own Rack middleware and HTTP library middleware.

00:36:58.350

It's not a deal breaker. We ended up having to do that for Excon with Zipkin, but it may be an engineering time commitment you are not prepared to make. Unfortunately, because this is tracing everywhere, you're going to need to rinse and repeat for every language that your company supports. You’re going to have to walk through all of these thoughts and these guidelines for Go, JavaScript, or any other language. Some big companies find that with the custom nature of their infrastructure they're going to need to build out some or all of the elements in-house. Etsy, obviously, or Google—they're running fully custom infrastructures. Other companies are building custom components that are tapping into open-source solutions, like Pinterest with PinTraces, which is an open-source add-on to Zipkin, similar to Yelp.

00:38:40.420

If you're curious about what other companies are doing, both large and small, Jonathan Mace from Brown University published a snapshot of 26 companies and what they're doing. It's already out of date; one of those things is already wrong, even though it was published just a month ago. Out of these companies, 15 are using Zipkin while nine are using custom internal solutions. Most people are indeed using Zipkin.

00:39:50.590

Another component to consider is what you're running in-house. What does your ops team want to run in-house? Are there any restrictions? There's this dependency matrix between the tracer and the transport layer, which needs to be compatible with each of your services. Both JavaScript and Ruby need to ensure that the tracer and transport layer are compatible across the board. For us, HTTP and JSON are totally fine for a transport layer; we literally call out with web requests to our Zipkin collector. But if you have a ton of data and need to use something like Kafka, you might think that’s cool and totally supported, but if you look at the documentation, it may actually say Ruby, and then if you dig deep, you’ll find it’s only JRuby—so that’s a total gotcha.

00:41:18.760

For each of these, you really should build a spreadsheet because it can be challenging to make sure you're covering everything. The collection and storage layers don’t have to be related to the services you run, but they might not align with the kind of applications you’re used to managing. For example, my team runs a Java app, which is quite different from a Ruby app. Another thing to figure out is whether you need to run a separate agent on the host machine itself. For some solutions, and this is why we had to exclude a lot of them, you might need to actually install an agent on each host for every service that you run.

00:43:10.740

Because we are on Heroku, we can't give root privileges to an agent running on a dyno. Another thing to consider is authentication and authorization: who can see and submit data to your tracing system? For us, Zipkin was missing both of those components, which makes sense because it needs to accommodate everyone. Adding authentication and authorization for every single company using that open-source library is not reasonable. One option is to run it inside a VPN without authentication. The other option is using a reverse proxy, which is what we ended up doing. We used buildpacks to install nginx onto the Heroku slug, which is just like a bundle of dependencies, allowing us to run nginx alongside our Zipkin application.

00:44:56.170

Adding authentication on the client-side itself was as easy as going back to that Rack middleware file and updating our hostname with basic authentication. This was a good solution for us. Additionally, we didn’t want anyone to access our Zipkin data on the internet. If you run an instance of Zipkin without authorization, there’s nothing to stop anyone from accessing it. We used Bitly’s OAuth2 proxy, which is super awesome. It allows us to restrict access to only people with an email address from Heroku.com. If you're on a browser and try to access our Zipkin instance, we're going to check to see if you're authorized; if not, the proxy will handle the authentication.
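The client-side half of that, basic authentication carried in the collector hostname, might look roughly like this with Ruby's standard library. The hostname and credentials are placeholders, not the real setup, and a real app would read the URL from configuration rather than hard-coding it. The request is built but not sent.

```ruby
require 'uri'
require 'net/http'

# Placeholder collector URL with credentials embedded in the hostname part.
# In practice this would come from an environment variable or config file.
collector = URI('https://tracer:s3cret@zipkin.example.com/api/v1/spans')

# Build a POST to the collector and attach the credentials as a
# standard HTTP Basic Authorization header.
request = Net::HTTP::Post.new(collector.path, 'Content-Type' => 'application/json')
request.basic_auth(collector.user, collector.password)
request.body = '[]' # an empty list of spans, just to have a valid JSON body

puts request['authorization']
```

The reverse proxy in front of the collector then only has to check that header before letting span data through.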

00:46:21.960

Even if you're going the hosted route and don’t need to handle any infrastructure, you’ll still need to sort out how to grant access to those who need it. You don’t want to find yourself as the team managing sign-on and sign-in requests, passing messages to this or that person. Ensure it’s clear with your hosted provider how you’ll manage access and security, especially if you have sensitive data in your systems. There are two places where we specifically had to monitor security: one is with custom instrumentation.

00:47:37.350

For example, my team, the tools team, added some custom internal tracing of our services by using prepend to trace all of our PostgreSQL calls. When we did this, we didn’t want to blindly store any private data or sensitive information, particularly if we have PII or any type of security compliance data. The second thing is to have a plan in place for what to do in the event of a data leak. Running our own system helps us here, because if we accidentally leak sensitive data into our tracing system, it’s easier for us to confirm that we have wiped that data than it would be to coordinate with a third-party provider. It doesn’t mean you shouldn’t use a third-party solution, but you should ask them ahead of time: 'What’s your protocol for handling data leaks? What’s your turnaround? How can we verify it?' You don’t want to be scrambling to figure it out in a crisis.
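As a sketch of the prepend approach with scrubbing, the example below wraps a fake connection class instead of a real PostgreSQL driver. `FakeConnection`, `SqlTracing`, and the scrubbing rules are all hypothetical, and the regexes are deliberately naive; they are not a complete way to strip sensitive values from SQL.

```ruby
# Stand-in for a database connection; a real one would run the query.
class FakeConnection
  def exec(sql)
    :ok
  end
end

# Prepended module: records a scrubbed copy of each statement, then
# calls the original method via `super`.
module SqlTracing
  # Where the scrubbed statements end up; a real tracer would attach
  # them to the current span instead.
  def self.recorded
    @recorded ||= []
  end

  # Naive scrubber: replace quoted strings and bare numbers with "?"
  # so literal values never reach the tracing system.
  def self.scrub(sql)
    sql.gsub(/'(?:[^']|'')*'/, '?').gsub(/\b\d+\b/, '?')
  end

  def exec(sql)
    SqlTracing.recorded << SqlTracing.scrub(sql)
    super
  end
end

FakeConnection.prepend(SqlTracing)

result = FakeConnection.new.exec(
  "SELECT * FROM users WHERE email = 'a@example.com' AND id = 42"
)
puts SqlTracing.recorded.last
```

The query itself still runs unchanged; only the copy that goes to the tracing system has its literal values replaced.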

00:49:39.890

The last thing to consider is the people part: is everyone on board for this? The nature of distributed tracing means the work is distributed; your job isn’t going to just be getting the service up and running. You’ll have to instrument apps, and there's a lot of cognitive load involved, as you can see from the 30 minutes we just talked about understanding how distributed tracing works. So set yourself up for success by getting it onto team roadmaps, if you can. Otherwise, start opening PRs. Even then, you'll likely need to walk through what it is, and why you're adding it. But it’s much easier when you can show tangible code and how it interacts with their system.

00:50:42.550

Here’s a full checklist for evaluation. Before we conclude, if you’re feeling overwhelmed by all this information, start by trying to get Docker and Zipkin up and running during some of your free time, like a hack week. Even if you don’t plan to use it, it comes packaged with a test version of Cassandra built-in. You only need to get the Java app itself up and running to begin with, which simplifies the process. If you’re able, deploying one single app to Heroku that essentially just makes a third-party Stripe call will help you transform abstract concepts into concrete tasks.

00:52:21.210

That’s all for today, folks. I’m heading to the Heroku booth after this in the big Expo hall, so feel free to stop by. I’ll be there for about an hour if you want to come ask me any questions or talk about Heroku. I’ll have some stickers too!