00:00:07.220

What do Teslas and dairy barns have in common? Welcome to "Exploring Real-time Computer Vision Using ActionCable" with me, Justin Bowen. First, I want to thank you for joining my talk at RailsConf 2021. In this talk, we'll go over some of the computer vision experiences I've had and how I use those experiences to develop cannabis computer vision.

00:00:18.240

Then, we'll review the typical architecture I like to use for deploying Rails apps and explore how we can utilize it for processing imagery with computer vision. This is a cat—how about that? She is a cute cat. We'll just put her down here and move along.

00:00:44.340

So, what do Teslas and dairy barns have in common? Well, they both utilize cameras to derive insights using computer vision. Probably the most advanced computer vision application available to consumers is Tesla's autopilot.

00:01:02.879

Tesla vehicles sport a suite of seven cameras—three front-facing with varying fields of view for short (60 meters), mid (150 meters), and long (250 meters) range. The forward-facing cameras use wide-angle, mid-angle, and narrow-angle lenses, while there are two side front-facing cameras with an 80-meter range and two side rear-facing cameras with a 100-meter range.

00:01:20.640

These cameras, combined with their specifications, can be utilized to measure and assess object size and distance in front of and around the vehicle. All of these cameras are processed in real time using GPU-powered computer vision onboard each Tesla to detect lane markers, vehicles, bikes, motorcycles, cones, pedestrians, trash cans, and intersections using neural networks combined with some rules.

00:01:40.979

These components are used to provide a feature set known as Autopilot. Autopilot is a driver-assist feature with the ambition of achieving full self-driving capabilities. At the time of this talk, Teslas are able to navigate freeways, change lanes, and take exits automatically; this feature is known as 'Navigate on Autopilot.'

00:02:03.659

Teslas can also stop at intersections with red light and stop sign detection. With Tesla's Full Self-Driving beta, they're capable of turning at intersections and driving users from point A to point B with little or no intervention. Teslas today are able to safely maintain their lanes with Autosteer by monitoring the lane markers.

00:02:20.580

They're also able to park with Autopark and unpark using the enhanced 'Summon' feature, which can allow your car to come to you from across a parking lot. Okay, well that's impressive, but what was I talking about with dairy barns? Some modern dairy farmers currently use computer vision to monitor their barns for cow comfort, because comfy cows produce more milk.

00:02:45.959

Cameras monitor, log, and report eating and drinking behavior to provide farmers with real-time insights into cow comfort and health metrics. I had the honor of leading the team building this dairy barn offering where we were tasked to deploy computer vision capable of detecting and identifying cows' eating and drinking behavior across multiple pens. After all, cows need water and food to make milk.

00:03:22.860

This provides farmers with the ability to determine cow health by alerting them to feeding, drinking, standing, and laying activity. The first thing to go when you're not feeling well is your appetite and energy—this is also true for cows. Similarly to Tesla's approach for computer vision, GPUs were deployed in the field to analyze cow data on the farm in locations with limited bandwidth, where streaming video from one camera would have been challenging.

00:03:45.540

These remote locations sometimes had tens or hundreds of cameras to cover a herd of 4,000 cows across multiple barns. Edge computing was the only option for computer vision since there was no way to upload all that data to the cloud. My experience actually started with drones and pivoted to dairy cows, and eventually, I ended up exploring cannabis computer vision.

00:04:04.319

Let's talk a little bit more about my drone experience. In 2016, I joined an Irish company called Cainthus with the challenge of building a data pipeline for processing drone flight imagery for field crop analysis. Interestingly, they switched to dairy cow monitoring in one of the most fascinating pivots I've experienced in a startup.

00:04:53.220

When I first joined, they were trying to load massive GeoTIFFs (gigabytes in size) into memory and analyze all of the pixels at once, reporting back pixel similarity across a field based on user input. The only problem with this process was that it took seven minutes from when the user selected a target to when they received the resulting heat map. While the industry standard for field crop analysis at this scale was a 24-hour turnaround, we were determined to devise a new process that broke up the work to make it more scalable and responded in under a second for a better user experience.

00:05:41.220

The process involved unzipping archived JPEGs and stitching them into a giant mosaic GeoTIFF. Again, these were gigabytes in size, but this only happened once, when the flight data was imported into the system. These GeoTIFFs were then tiled with GDAL, and the output tiles were served from tile map service (TMS) URLs. These TMS URLs could be used for mapping in Google Maps-style interfaces rendered by Leaflet.js, allowing farmers and agronomists to select an area of interest on the map and search the rest of the field, or a subset of field tiles, for similarity, with the result output as a heat map of the similar pixels.
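
As a rough sketch of the tile addressing behind those TMS URLs, assuming the standard Web Mercator tiling scheme: the talk does not show the actual code, so the function names and the `tiles.example.com` base URL here are hypothetical. The one detail worth seeing is that TMS counts tile rows from the south, while the more common XYZ scheme counts from the north.

```python
import math


def lonlat_to_tile(lon_deg, lat_deg, zoom):
    """Return (x, y) XYZ tile indices for a point in the Web Mercator tiling scheme."""
    n = 2 ** zoom  # number of tiles along each axis at this zoom level
    x = int((lon_deg + 180.0) / 360.0 * n)
    lat_rad = math.radians(lat_deg)
    y = int((1.0 - math.asinh(math.tan(lat_rad)) / math.pi) / 2.0 * n)
    # Clamp so points on the far edge still map to a valid tile.
    return min(max(x, 0), n - 1), min(max(y, 0), n - 1)


def xyz_to_tms_y(y, zoom):
    """Flip the row index: TMS rows start at the south edge, XYZ rows at the north."""
    return (2 ** zoom - 1) - y


def tms_url(base_url, zoom, x, y_xyz):
    """Build a TMS-style tile URL (hypothetical layout: base/zoom/x/y.png)."""
    return f"{base_url}/{zoom}/{x}/{xyz_to_tms_y(y_xyz, zoom)}.png"


x, y = lonlat_to_tile(0.0, 0.0, 1)
print(tms_url("https://tiles.example.com/field", 1, x, y))
```

Because the map client only requests the tiles it is about to draw, any per-tile processing hooked onto these URLs runs only for what the user is looking at.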

00:07:09.600

This could be used to determine harvest stability or crop failure rates. The business logic required tiles to be processed at render time as TMS image URLs in order to visualize a heat map of this similarity match, as well as return a percentage match across the field. We simply did this at the highest zoom level to reduce response time—the higher the zoom, the fewer the pixels. We also rendered and processed heat maps for tiles currently in the viewport at the zoom level the user was on.

00:08:37.500

This created the illusion that the whole field had been processed when really only the TMS URLs requested by Leaflet were processed on demand. These experiences gave me exposure to the architecture and implementation strategy I've used for computer vision. This inspired the work I've done on GreenThumb.io, my blog focused on cannabis cultivation and automation using computer vision as the driving data set.

00:09:57.060

While it seems overwhelming, there are common techniques employed in this process, which I've used for scaling all of the Rails apps I've worked on over the last ten years. First, let's consider some of the challenges that cultivation has to offer, which can be observed visually. Botrytis, also known as bud rot, is one of the most common diseases and impacts various crops, from grapes to cannabis.

00:11:02.880

Cannabis plants will present discolored bud sites. These spots change color from a healthy bud color to gray to brown, eventually covering most of the leaf and bud, causing them to wilt and die. White powdery mildew is a fungal disease that affects a wide variety of plants. Powdery mildew can slow down the growth of plants, and if the infection becomes serious enough, it can reduce yield quality. Plants infected with white powdery mildew look as if they have been dusted with flour.

00:12:26.700

Young foliage is most susceptible to damage, and the leaves can turn yellow and dry out. The fungus might cause leaves to twist or break and become discolored and disfigured. The white spots of powdery mildew spread to cover most of the leaves in the affected area. Another challenge is tracking the rate of biomass growth, which is a key metric when growing any crop. Conventionally, NDVI is an agricultural approach to computer vision used to calculate plant health, usually at very low resolution.

00:13:39.660

More recently, it has been applied with drone flights or stationary cameras indoors. Conventional yield estimates have been calculated in the past by literally throwing a hula hoop into a field and counting the number of plants or bud sites within the known area of the hoop. The agronomists can then extrapolate yield potential from the hoop for a rough statistical estimate of the field's potential yield. So, how do we do this with computer vision? First, we perform a leaf area analysis.

00:14:49.680

This can be used to track our biomass rate of change to indicate healthy growth or decay. Increases in the number of pixels matching the healthy target color range indicate biomass increase, while changes in target color, something outside of the range, will trigger our matching pixel values to drop. We can also perform a bud area analysis, which can be used for tracking yield estimates by counting bud sites, tracking their size, and monitoring signs of stress due to color change at each bud site.

00:16:01.260

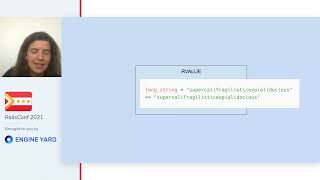

This can be tracked for pixel area growth and decay based on their similarity to a healthy bud target color range. To begin, we need to define a calibration value for our target of interest. We can use the range detector from the imutils library, which I found on pyimagesearch.com, to select a low and high HSV color value for the target; in this case, the target is the bud sites. HSV is a color space with three components: hue, saturation, and value. By dialing up the low end and dialing down the high end, we can capture a clean mask of the bud sites without any additional cleanup.
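
To make the calibration idea concrete, here is a minimal sketch of masking pixels by an HSV range and counting the matches. It uses only Python's standard library so it runs anywhere; in an OpenCV pipeline, `cv2.inRange` on an HSV image does the same job in one vectorized call. The function names and the example range are assumptions for illustration, not the values used in the talk. Note that `colorsys` scales hue, saturation, and value to 0..1, whereas OpenCV's 8-bit images use 0..179 for hue and 0..255 for the other two.

```python
import colorsys


def in_hsv_range(rgb, low, high):
    """True if an (R, G, B) pixel with 0..255 channels falls inside the HSV range."""
    r, g, b = (channel / 255.0 for channel in rgb)
    hsv = colorsys.rgb_to_hsv(r, g, b)  # each component is scaled to 0..1
    return all(lo <= c <= hi for c, lo, hi in zip(hsv, low, high))


def mask_and_count(pixels, low, high):
    """Return a boolean mask (list of rows) and the number of matching pixels."""
    mask = [[in_hsv_range(px, low, high) for px in row] for row in pixels]
    return mask, sum(sum(row) for row in mask)


# Hypothetical calibration for "healthy green": hue near 1/3, reasonably saturated.
LOW, HIGH = (0.25, 0.30, 0.20), (0.45, 1.00, 1.00)

image = [
    [(40, 160, 40), (120, 80, 40)],    # green leaf, brown spot
    [(255, 255, 255), (20, 90, 20)],   # white background, dark green leaf
]
mask, healthy_pixels = mask_and_count(image, LOW, HIGH)
print(healthy_pixels)
```

Tracking that pixel count over time is what turns a single mask into a growth or decay signal.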

00:17:13.740

We can use OpenCV for post-processing the image and pixel selection using the calibration HSV target range. We take advantage of Python's scikit-image library and its measuring and labeling tools to have a good understanding of the size of each of our contours. We take these components and use OpenCV to find and annotate images with contours of leaves and buds. Going back to the problem of white powdery mildew, we can use the leaf area mask to detect it by tracking the decay in healthy leaf area pixels.
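
The labeling step can be illustrated with a small, dependency-free sketch. This is not scikit-image's implementation; it is a plain breadth-first flood fill using 4-connectivity, with hypothetical names, that shows how a boolean mask becomes a count of separate regions (bud sites) and a pixel size for each. In practice, `skimage.measure.label` and `skimage.measure.regionprops` provide this and much more.

```python
from collections import deque


def label_regions(mask):
    """Label 4-connected regions of True pixels.

    Returns (labels, sizes): a grid of integer labels (0 = background)
    and a dict mapping each label to its pixel count.
    """
    rows, cols = len(mask), len(mask[0])
    labels = [[0] * cols for _ in range(rows)]
    sizes = {}
    next_label = 1
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c] or labels[r][c]:
                continue  # background, or already part of a labeled region
            labels[r][c] = next_label
            queue = deque([(r, c)])
            size = 0
            while queue:
                cr, cc = queue.popleft()
                size += 1
                for nr, nc in ((cr - 1, cc), (cr + 1, cc), (cr, cc - 1), (cr, cc + 1)):
                    if 0 <= nr < rows and 0 <= nc < cols and mask[nr][nc] and not labels[nr][nc]:
                        labels[nr][nc] = next_label
                        queue.append((nr, nc))
            sizes[next_label] = size
            next_label += 1
    return labels, sizes


bud_mask = [
    [True, True, False, False, False],
    [True, False, False, True, False],
    [False, False, True, True, False],
    [False, False, False, False, True],
]
labels, sizes = label_regions(bud_mask)
print(len(sizes), sizes)
```

Each label can then be followed from frame to frame, which is what makes it possible to say which specific bud site is growing or shrinking.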

00:18:41.400

You can see in this example that the leaf on the left is healthy, while the leaf on the right shows only a small percentage of its area as healthy leaf pixels. You can also track the rate of biomass increase from these test images to determine week-over-week growth. The output from week one's leaf mask to week two's leaf mask shows that the plants grew 27,918 pixels in five days. Using some basic trigonometry, we can calculate pixels to millimeters; cameras are really just like pyramids.

00:19:56.580

With the base of the pyramid being the frame of the image and the peak representing a horizontal and vertical angle of view based on the camera's specs and distance to the canopy, we can calculate that approximately 27 square centimeters of cannabis grew in five days. Bud site detection is similar to leaf stress in that we are looking for a rate of growth or decay in healthy bud area pixels and a change in the color of the contours we've detected as individual bud sites.
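
The pyramid geometry can be sketched as follows. At a distance d from the canopy, a camera with horizontal angle of view θ sees a strip 2·d·tan(θ/2) wide, and likewise for the vertical angle; dividing by the image resolution gives the real-world size of one pixel. The camera numbers in this example are hypothetical and chosen to make the arithmetic easy to follow; they are not the specs of the camera used in the talk, and the sketch assumes the camera looks straight down at a flat canopy.

```python
import math


def frame_size_mm(distance_mm, hfov_deg, vfov_deg):
    """Width and height (mm) of the area seen at a given distance from the camera."""
    width = 2 * distance_mm * math.tan(math.radians(hfov_deg) / 2)
    height = 2 * distance_mm * math.tan(math.radians(vfov_deg) / 2)
    return width, height


def pixel_area_mm2(distance_mm, hfov_deg, vfov_deg, width_px, height_px):
    """Real-world area (square mm) covered by a single pixel."""
    width_mm, height_mm = frame_size_mm(distance_mm, hfov_deg, vfov_deg)
    return (width_mm / width_px) * (height_mm / height_px)


def pixels_to_cm2(pixel_count, distance_mm, hfov_deg, vfov_deg, width_px, height_px):
    """Convert a pixel count into square centimeters (100 mm^2 = 1 cm^2)."""
    area_mm2 = pixel_count * pixel_area_mm2(distance_mm, hfov_deg, vfov_deg, width_px, height_px)
    return area_mm2 / 100.0


# Hypothetical setup: camera 500 mm above the canopy, 90 degree angle of view
# in both directions, 1000 x 1000 pixel image, so each pixel covers about 1 mm^2.
growth_cm2 = pixels_to_cm2(10_000, 500, 90, 90, 1000, 1000)
print(round(growth_cm2, 2))
```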

00:21:33.300

In this example, you see a large portion of the bud site has turned from a healthy target color to brown. We can also measure and count the size of individual bud sites we've detected, so if any bud site changes color or size, we can alert users of growth or decay. We can be very specific about which bud site is growing and which one may not be doing so well.

00:22:38.760

Here's an early stage visualization of the heat map, with the data set side by side with the source imagery. In this example, we see leaf area and bud count and size decaying over a matter of hours. This series shows minute-by-minute image analysis after drought-induced plant stress. The plants will recover with water, but the time spent in this stressed state will reduce yield potential and may cause infections to the bud sites, such as white powdery mildew or botrytis.

00:23:30.840

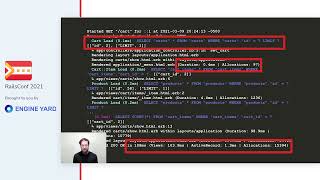

So, how do we deploy these visible indicators of plant health with Rails? Well, there are common component services that I prefer to run for all of my Rails apps. For background jobs, I like to use Sidekiq. For ActionCable, I usually start with Ruby's cable process, but I've found great success with AnyCable-Go. For web workers, I use Puma. For essential queuing and the Pub/Sub message service, I use Redis.

00:25:01.020

For persistent data storage and querying of data sets, I utilize PostgreSQL or MySQL depending on the use case. I really appreciate PostgreSQL's PostGIS extension for its ability to geo-index and geo-query. When I use OpenCV, I prefer Python, as it's a developer-friendly language that I've come to appreciate, very similar to Ruby but with some differences.

00:26:19.500

The nice aspect of this architecture is that you can scale up individual services independently as work and jobs increase. For the background workers, we can scale up our Sidekiq processes or servers. As WebSocket connections increase and our CPU load or memory usage rises, we can scale up our cable service. When HTTP requests rise and we need more workers, we can scale up our Puma processes. All this can be done independently without one overloaded service taking down the entire system.

00:27:45.240

It can be overwhelming to view the architecture all at once, so we'll take things step by step and visualize how each component of the system interacts to provide a user experience that exposes the inference derived from our computer vision analysis. The OpenCV code can run on devices like the Raspberry Pi, which can be accelerated with Intel's Movidius vision processing units or even the new OpenCV AI Kit, which has the Movidius Myriad X VPU integrated.

00:29:05.340

This can significantly speed up your frame-by-frame processing with an edge-based implementation. After image capture and analysis, the number of bud sites and their individual sizes can also be reported to the Rails app either through Puma and an HTTP request or through ActionCable, using a Python WebSocket connection to our ActionCable service. This way, we can offload some of the server-side processing, such as leaf area and bud area, to the camera.
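
For the ActionCable route, a Python client has to speak ActionCable's JSON wire format: it first sends a `subscribe` command, then sends `message` commands whose `data` names the channel action to invoke. Both `identifier` and `data` are themselves JSON-encoded strings. The sketch here only builds those frames with the standard library; the channel name `ReportChannel`, the action `report`, and the payload keys are assumptions for illustration, and actually sending the frames would need a WebSocket client library, which is left out.

```python
import json


def subscribe_frame(channel, **params):
    """Frame that subscribes this connection to a channel."""
    identifier = json.dumps({"channel": channel, **params})
    return json.dumps({"command": "subscribe", "identifier": identifier})


def perform_frame(channel, action, payload, **params):
    """Frame that invokes a channel action with a payload."""
    identifier = json.dumps({"channel": channel, **params})
    data = json.dumps({"action": action, **payload})
    return json.dumps({"command": "message", "identifier": identifier, "data": data})


# Hypothetical metrics produced by the edge device after analyzing one frame.
metrics = {"camera_id": 7, "leaf_area_px": 48213, "bud_count": 12}

print(subscribe_frame("ReportChannel"))
print(perform_frame("ReportChannel", "report", metrics))
```

The identifier string in the `message` frame must match the one used to subscribe, which is why both helpers build it the same way.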

00:30:12.600

In this case, the pre- and post-processed images can be uploaded to the Rails app or directly to S3 using direct file uploads. The metrics, such as leaf area and bud area in pixels or converted to millimeters based on their distance from the camera, can then be uploaded to our database so that we can store them in a geo-index in PostgreSQL. Another option is cloud computing—the captured images can be uploaded from the browser or devices.

00:31:28.560

I prefer using Thumbor, which is a Python image service that can be extended with our computer vision code using Thumbor's OpenCV engine. The computer vision code is deployed to Google Cloud Platform's Cloud Run service, where you can deploy highly scalable containerized applications on a fully managed serverless platform. I use Docker to do this; with a URL from a direct file upload, the Rails app can use the image service in the cloud and provide users with processed image URLs.

00:32:48.300

I've packaged the leaf area and bud area into Thumbor filters that can be passed into the URL as parameters with the HSV color space lower and upper bounds as arguments. The Thumbor filters have been put into Python pip packages and are installable through PyPI. This code is used in a Thumbor container deployed to Google Cloud Run. The Thumbor image service is extended using the two methods, and all it needs is an input image URL, which then outputs a processed image masking leaf area or bud area.
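
Here is a hedged sketch of what such a URL could look like. Thumbor's URL scheme places filters in a `filters:name(args)` path segment ahead of the source image URL; the filter name `leaf_area`, the order of its arguments, and the hosts are hypothetical, since the talk does not spell them out. The `/unsafe/` prefix only works on a server that allows unsigned URLs; a production deployment would normally use Thumbor's signed URLs instead.

```python
from urllib.parse import quote


def thumbor_filter_url(base_url, filter_name, low_hsv, high_hsv, image_url):
    """Build an unsigned Thumbor URL that applies one filter to a source image."""
    # Flatten the lower and upper HSV bounds into one comma-separated argument list.
    args = ",".join(str(value) for value in (*low_hsv, *high_hsv))
    # Percent-encode the source URL so it is safe as a single path segment.
    encoded_image = quote(image_url, safe="")
    return f"{base_url}/unsafe/filters:{filter_name}({args})/{encoded_image}"


url = thumbor_filter_url(
    "https://thumbor.example.com",            # hypothetical Thumbor host
    "leaf_area",                              # hypothetical filter name
    (35, 80, 40),                             # lower HSV bound
    (85, 255, 255),                           # upper HSV bound
    "https://bucket.example.com/grow/a b.jpg",
)
print(url)
```

Because the whole operation is encoded in the URL, the Rails app can hand it to a browser as an image source or request it from a background job.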

00:34:05.640

The method can also take a channel identifier for notifying back to ActionCable, again using a Python WebSocket client or Puma through an HTTP request. Either method can be utilized, and both can notify through ActionCable. Eventually, you can see here that the Thumbor image on the right and the number image service on the left, when combined, output the masked image—I'll call this the Green Thumbor.

00:35:14.220

I actually named the repo that I pushed to GitHub 'The Green Thumb Image API,' but while I was putting these slides together for this talk, I thought of 'Green Thumbor.' I thought it sounded better. Anyway, I've mentioned direct file uploads a couple of times—what are they? Well, they're a great way to relieve pressure on our Rails app. Puma workers can focus on responding to HTTP requests quickly without having to wait for blocking I/O from large objects like images and videos.

00:36:36.660

With AWS S3 or Google Cloud Storage, our Rails app can provide a client browser or device with a pre-signed URL to upload files directly to a location on our storage service. Once the files are transferred, the client can notify the Rails app that these files can now be processed or served. From there, we can insert the image URL data into our database and enqueue a background job with the image ID and a channel identifier to a Sidekiq worker.

00:37:57.540

The job will make a request to the Thumbor image service to process the image URL and send back a response to the channel's report action in ActionCable. Having Sidekiq make the third-party API request to the Thumbor service keeps external requests in the background and off our Puma workers' plate. The report action will then receive a message either from the Thumbor service directly using ActionCable or from Puma.

00:39:06.240

In this visualization, you see it coming from the Thumbor service directly to our ActionCable service, which can enqueue a job to update the inference data into our SQL database and broadcast to our ActionCable subscribers. The ActionCable consumers that are subscribed to this channel can then render the image and graph the values. Looking at this graph, we see that our rate of decay in healthy leaves and bud area can be utilized to generate an alert or trigger an automated watering.
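
One simple way to turn that rate of decay into an alert is to compare the latest healthy-pixel count against a reading taken a fixed number of samples earlier. This is a minimal sketch under assumed names and thresholds, not the rule used in the talk; a real system would likely smooth the series first so lighting changes do not trigger false alarms.

```python
def percent_change(baseline, current):
    """Signed percentage change from baseline to current."""
    return (current - baseline) / baseline * 100.0


def should_alert(samples, window, threshold_pct):
    """True if the newest sample dropped by at least threshold_pct
    compared with the sample taken `window` readings earlier.

    samples: healthy-pixel counts, oldest first.
    """
    if len(samples) < window + 1:
        return False  # not enough history yet
    baseline = samples[-(window + 1)]
    if baseline == 0:
        return False  # nothing healthy to lose; avoid dividing by zero
    return percent_change(baseline, samples[-1]) <= -threshold_pct


# Hypothetical healthy leaf area readings, one per capture interval.
wilting = [1000, 990, 950, 900, 820]
steady = [1000, 1005, 998, 1001]

print(should_alert(wilting, window=3, threshold_pct=15))
print(should_alert(steady, window=3, threshold_pct=15))
```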

00:40:00.120

This is a time series over a span of three hours, and you can see there's plenty of time to alert the user before it's too late. This video was provided by my friends at SuperGreenLab, who sell smart micro grow systems using LEDs and sensors controlled by a custom-designed ESP-based PCB. They also use Raspberry Pis for capturing time-lapses.

00:41:54.660

Another great use for ActionCable is WebRTC signaling. WebRTC signaling allows two clients to establish peer-to-peer connections, enabling bi-directional communication of audio, video, and data without a trip to our WebSocket server. This involves sending a session description offer from one peer to the other and sending a session description answer back to the first peer. Both peers can then request a token to create what are called Interactive Connectivity Establishment candidates, or ICE candidates.

00:43:18.360

Once the peer connection is established, bi-directional communication from device to browser can be accomplished. This allows real-time video feed from the camera to your browser and the ability to trigger actions from your browser to the camera, which could control sensors, actuators, fans, or pumps. While I've seen implementations of WebRTC signaling done with HTTP polling, I've had great success using ActionCable to handle the initial exchange of ICE candidates.
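
The signaling server's job in that exchange is small: relay offers, answers, and ICE candidates from one peer to the other, and nothing else. The following in-memory sketch shows that relay logic on its own. It is an illustration with hypothetical names, not ActionCable code; in the Rails app the same role would be played by a channel that rebroadcasts each message to the other subscriber.

```python
from collections import defaultdict

SIGNAL_TYPES = {"offer", "answer", "candidate"}


class SignalingRoom:
    """Relays WebRTC signaling messages between the peers in one room."""

    def __init__(self):
        self.inboxes = defaultdict(list)  # peer id -> messages waiting for that peer

    def join(self, peer_id):
        self.inboxes[peer_id]  # touching the key creates an empty inbox

    def send(self, sender_id, message):
        """Deliver a signaling message to every peer except the sender."""
        if message.get("type") not in SIGNAL_TYPES:
            raise ValueError(f"unsupported signal type: {message.get('type')!r}")
        for peer_id, inbox in self.inboxes.items():
            if peer_id != sender_id:
                inbox.append({"from": sender_id, **message})


room = SignalingRoom()
room.join("browser")
room.join("camera")

# The SDP strings are placeholders; real ones come from the WebRTC stack.
room.send("browser", {"type": "offer", "sdp": "<offer sdp>"})
room.send("camera", {"type": "answer", "sdp": "<answer sdp>"})
room.send("camera", {"type": "candidate", "candidate": "<ice candidate>"})

print(room.inboxes["camera"])
print(room.inboxes["browser"])
```

Once both sides have exchanged these messages, media and data flow directly between the peers and the relay is no longer involved.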

00:44:43.740

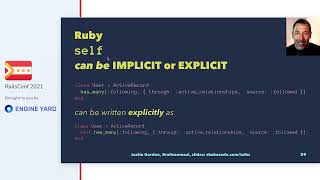

Now, does it scale? The short answer is yes. I've been using Puma for a while for my web workers. These are just some graphs of the memory footprint and responsiveness at scale that I pulled from their website. Nate Berkopec, the author of the Complete Guide to Rails Performance and co-maintainer of Puma, recently said that in order to scale a service properly, you need to know the queue times for work and jobs, requests, the average processing time, and arrival rate for work and jobs.

00:45:57.300

You also need to monitor CPU load utilization, CPU count, and memory utilization of the underlying machine. This principle applies to any language and any framework. I had a recent consultation with a startup founder who was asking if I thought .NET was scalable. I used our time together to help them better understand the systems and concepts required for a scalable system and emphasized that it's best to use what the developer feels most comfortable and productive with.
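
Those measurements feed a simple back-of-the-envelope calculation. Multiplying the arrival rate by the average processing time gives the offered load, meaning the average number of workers busy at once; dividing by a target utilization leaves headroom so queue times stay low. This is one common rule of thumb, sketched with hypothetical numbers, and not a formula taken from Nate's material.

```python
import math


def required_workers(arrival_rate_per_s, avg_processing_s, target_utilization=0.75):
    """Estimate how many workers keep utilization at or below the target."""
    if not 0 < target_utilization <= 1:
        raise ValueError("target_utilization must be in (0, 1]")
    # Offered load: average number of workers that are busy at any moment.
    offered_load = arrival_rate_per_s * avg_processing_s
    return max(1, math.ceil(offered_load / target_utilization))


# Hypothetical numbers: 20 jobs per second, 250 ms each, aiming for 50% utilization.
print(required_workers(20, 0.25, 0.5))
```

The same estimate applies whether the workers are Puma threads handling requests or Sidekiq threads handling jobs, as long as the inputs are measured for that service.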

00:47:18.060

I love Ruby and feel most productive and confident scaling a Rails app. Python is very friendly for me too, but I wouldn't say I love or feel comfortable with Django, though I have deployed some Django apps before. I certainly wouldn't personally feel comfortable scaling a .NET application, but in that case, I told the startup founder to listen to their developer and ensure they follow scaling practices outlined here in .NET with different tools similar to Puma and Sidekiq, and potentially a WebSocket service if they need it.

00:48:44.520

Anyway, I highly recommend checking out Nate's book and following him on Twitter for great write-ups on optimizing Rails apps. I've been using Sidekiq since around 2012, and I really appreciate the work that Mike Perham has done with contribsys.com, which is responsible for Sidekiq. Faktory provides a feature-filled job processing system for all languages, employing a common queuing mechanism using Redis that supports workers in Node, Python, Ruby, Go, Elixir, and more.

00:49:57.540

While I haven't used Faktory, I am curious and excited to see how our architecture could be enhanced with background jobs across our Rails and Python code bases. Sidekiq itself is 20 times faster than the competition. I think the old saying goes that one Sidekiq worker can do the work of 20 Resque workers or something along those lines. It does scale.

00:51:13.680

Nate also mentioned that WebSockets are far more difficult to scale than HTTP in many ways. The lack of HTTP caching and the inability to offload to a CDN are significant challenges. In this graph, we can see the CPU load comparison, with AnyCable-Go on the left versus ActionCable Ruby on the right, under 10,000 concurrent connections each. The Ruby cable service is fully loaded, while the AnyCable service has headroom to spare.

00:52:38.880

You can also notice in this comparison chart, from load tests that I found online, that Ruby uses four times as much memory as AnyCable-Go. AnyCable-Go performs under load similarly to native Go and Erlang WebSocket servers. I haven't used Erlang Cable, but it appears to have similar performance metrics. This is a report showing the decay from the video we saw earlier with a graph, where the plants were wilting from not being watered.

00:54:06.660

This is over a span of 10 hours, so there was ample time to notify the user that there was a critical issue needing their attention sooner rather than later when they may not have come in to see the issue until the next day. Going back to the initial step of manual calibration, how can we overcome this step? Well, masked images are great annotated data sets that can be utilized as training data for a neural network.

00:55:22.680

We could potentially leverage the detection of bud sites and leaves without calibration. This would present a great opportunity to integrate with something like TensorFlow to utilize what's known as transfer learning. Transfer learning takes an existing neural network trained to detect objects and teaches it to recognize new ones, such as buds and leaves.

00:56:48.990

There is a lot of theory and many techniques out there; I have experience with some of these but not all. It's important to explore and experiment with common solutions in practice but not to get too overwhelmed with theory, especially when starting out. So where do you go from here? Adrian Rosebrock, PhD, from PyImageSearch, suggests learning by doing and skipping the theory.

00:58:13.260

You don't need complex theory; you don't need pages of complicated math equations, and you certainly don't need a degree in computer science or mathematics. I personally don't have a degree, so I appreciate him saying that all you need is a basic understanding of programming and a willingness to learn.

00:59:40.740

I feel that this goes for a lot of things, not just machine learning, deep learning, or computer vision, but programming in general. Don't get me wrong; having a degree in computer science or mathematics won't hurt anything, and yes, there's a time and a place for theory, but it's certainly not when you're just getting started. There is a lot you can do off the shelf.

01:00:17.880

PyImageSearch is a great resource for computer vision and deep learning examples and tutorials. There are many off-the-shelf solutions that Adrian will walk you through, including face detection, recognition, and facial landmarks used for things like Snapchat filters; body pose estimation, like that used in human interface devices such as the Xbox Kinect; and object detection and segmentation.

01:01:35.520

This applies to techniques similar to what we just did with leaf area and bud area, but can be adapted for things like cats, dogs, and other common objects, as well as optical character recognition for reading text in images. Super-resolution, akin to what is shown in CSI when they zoom in and say 'enhance'—that image gets clearer—is also something implementable using a deep neural network these days.

01:02:52.560

There are also interesting computer vision medical applications, such as detecting if people are wearing masks or identifying COVID in lung X-ray images. So what else exists? How can we integrate machine learning and deep learning into our Rails apps without computer vision? Aside from computer vision, there are disciplines like NLP, where you can analyze the meaning of text; computer audition, where you can analyze sound; recommendation engines; and even inverse kinematics for training robots to control themselves.

01:04:07.560

That could be an interesting use for WebRTC connections for low-latency peer-to-peer communication to control or send commands. But most importantly, have fun with it! Thank you for watching my talk. If you're interested in learning more about these topics, check out greenthumb.io or follow me on Twitter. I'm always happy to collaborate. There's my cat, who has been with me during this entire talk, and if you follow me on Twitter, you know she helped me out—so I've got to include the cat tax at the end of this.

01:05:15.960

I'm also open to consulting or potentially taking on a full-time employment opportunity if it's the right role. Whether it's through my agency, CFO Media and Technology, or as myself—an experienced product and team builder—I would love to chat and look forward to getting to know the community more. Thank you!

01:06:00.000

Look at this cat... that's all!