00:00:09.330

Today, we're going to be talking about third-party dependencies. Ask yourself, what's the weather like for your app? Obviously, I've been spending a lot more time at home lately. However, I've still been trying to get out into the neighborhood for a walk once a day. After spending the day cooped up inside, I often wonder: before I head out, should I put on a sweater? This decision is partially based on the weather outside, as well as personal preferences.

00:00:21.599

A few years ago, The Weather Channel attempted to answer this question by running a survey asking people across the United States what they thought the start of sweater weather was. The result was that many believed it to be between 55 and 65 degrees Fahrenheit, though this answer varied regionally. For me, living in Massachusetts, the average answer was about 57 degrees Fahrenheit. Today, we’re going to build a system to help answer the question: is it the start of sweater weather? My name is Kevin Murphy, and I'm a software developer at The Gnar Company, a software consultancy based in Boston, Massachusetts.

00:01:07.440

Let's start thinking about what it would look like to build one of these systems. One of the core things we need is the ability to know what the temperature is. Right now, I really just need to know the temperature right outside my window. One potential solution is to pull a Raspberry Pi out of the closet, connect a temperature probe, and place it outside my window. That would be sufficient for my immediate needs.

00:01:38.310

However, hopefully sometime soon it will be safe to go out and about throughout the world. I might want to know what the temperature is in other places, like my office or my parents' house in the next town over. I could consider deploying a fleet of Raspberry Pis to gather temperatures everywhere I care about, but that’s not a very scalable solution. It’s not very general-purpose, either. If anyone else wanted to use this, they'd have to have very specific interests, which limits the application's usefulness.

00:01:59.670

Instead, I need a weather satellite so that anyone can find out the temperature wherever they are. I’d probably need a rocket ship to launch this satellite into orbit, and since I like to build redundant systems, I probably need several of these satellites. All of this is to say that this presentation is really just my pitch deck for my latest startup: Sweater. Sweater aims to provide real-time notifications, telling you if you should be wearing a sweater. This is a wonderful investment opportunity; all I need from you is probably something in the realm of eleven billion dollars, or I mean maybe twenty-two billion. I’ll be honest, I don't really know how much a weather satellite costs.

00:02:25.830

The reason I don’t know what a weather satellite costs is, aside from the obvious, I would never actually propose that we do this. Instead, we can build Sweater in this presentation, using an existing system that can tell me the temperature, and then Sweater will add the value of whether or not you should be wearing a sweater. This existing system is a weather API, which serves as our third-party dependency. For the purposes of this presentation, this dependency will be an HTTP API. The methods we'll discuss for testing this HTTP API apply similarly, whether it’s a persistence layer, a message queue, or someone else's code.

00:03:09.080

Let’s start building Sweater. The main component will be a Location class that answers the question: is it sweater weather? We will build a URI that is the URL we need to communicate with for our weather API. Then, we will issue a GET request. I know that this will return JSON, so I’ll parse that to create a hash. Now, I’ll be able to extract the information I need from the response, specifically whether it feels like sweater weather outside. I can easily determine if the temperature is between 55 and 65 degrees Fahrenheit.
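A minimal sketch of that first version might look like the following. The endpoint `https://weather.example.com/current`, the `zip` query parameter, and the `current.feelslike_f` key are assumptions made for illustration; the talk does not name the real API or its response format here.

```ruby
require "json"
require "net/http"
require "uri"

# First-pass sketch: one class builds the request, calls the API,
# parses the response, and applies the business rule.
class Location
  # Hypothetical endpoint; substitute the real weather API's URL.
  API_URL = "https://weather.example.com/current"
  SWEATER_RANGE = (55..65)

  def initialize(zip_code)
    @zip_code = zip_code
  end

  def sweater_weather?
    response = Net::HTTP.get(uri)      # issues a real GET request
    conditions = JSON.parse(response)  # JSON string -> Hash
    # Assumed response shape: {"current" => {"feelslike_f" => 57.2}}
    feels_like = conditions.dig("current", "feelslike_f").to_f
    SWEATER_RANGE.cover?(feels_like)
  end

  # Builds the request URL, e.g. .../current?zip=02108
  def uri
    URI("#{API_URL}?#{URI.encode_www_form({ zip: @zip_code })}")
  end
end
```

Note that everything, from networking to the number comparison, lives in a single method, which is what makes it hard to test in isolation.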

00:03:39.410

So, here it is: we’ve basically built Sweater. If anyone’s looking for an investment opportunity, I have a working product ready to go. You’ve sort of taken me at my word that it works, so let me prove it to you with a test. I will create a new location and check if it's sweater weather, asserting that the result is true. When I originally wrote this test, it was an unseasonably warm day where I live, around 70 degrees. I ran the test, and it failed, which was quite disappointing. I don’t want to admit this to potential investors, but I need to come clean: I didn’t have much time to investigate the failure, so I moved on with my day.

00:04:12.320

Later, around dusk, the temperature was more in line with what I expected, and the test passed. I felt good about things until I ran the entire test suite later on and it failed again. This was disappointing, but I soon realized the test’s success or failure had nothing to do with my implementation. The problem is that this test is literally dependent on the weather outside. Every time I run this test, it queries the weather API for the temperature. Thus, whether it’s sweater weather or not fluctuates based on the actual weather conditions.

00:05:06.720

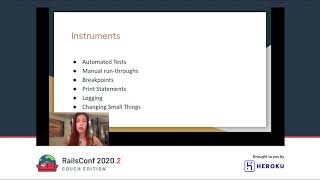

In this test, I am directly interacting with my dependency, which has its benefits. When I run the test and it works, I'm confident it'll continue to work because I’m using the actual thing. However, this test is also pretty slow. I have to make an actual API request, and we've seen it may pass one time and fail the next, simply based on the current weather. Even situations that aren’t as unpredictable can pose problems; for instance, if the server goes down, my test suite won’t pass.

00:05:50.760

Additionally, running this test requires access to that dependency, which seems kind of silly. This means that I can’t run this test if I don’t have internet access. If my dependency relies on a VPN connection that I don’t have access to at all times, I’ll run into problems, and my tests won’t pass. I also face issues if the dependency has rate limiting, where I can only issue a certain number of requests during a predefined period. That is a particular concern in a team setting where we might all share an account, because I don't want to discourage anyone from running their tests frequently.

00:06:39.580

There is merit in using this approach, though. It's valuable to use real dependencies when you haven’t used them before; it’s not worth the effort to imagine how your dependency might behave. It's often easier to just use the real thing and understand how it works. In many Rails apps, we may have model tests we consider unit tests that interact with the database. From an academic perspective, this shouldn't count as a unit test because it interacts with an external collaborator. However, the cost of that one particular test is often so low that, in some cases, it's a worthwhile trade-off, despite the baggage that comes from the slower tests and their dependence on the external system.

00:07:24.330

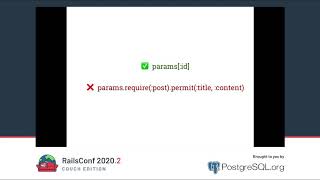

Of course, this pattern isn’t ideal for our purposes. We want to ensure our tests pass consistently, so let’s try something else: stubbing out the response. Here, we will prepare some JSON that always says it’s 55 degrees outside. Since this is an HTTP API, we'll use a tool called WebMock. What WebMock does is intercept any GET request in our tests to that specific URL and return a 200 response with the JSON we’ve prepared.
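With WebMock loaded, a stub like this is typically written as `stub_request(:get, url).to_return(status: 200, body: json)`. To stay dependency-free, the sketch below illustrates the same idea by hand, temporarily replacing `Net::HTTP.get` with a canned response. The helper name `with_stubbed_weather`, the URL, and the JSON shape are all hypothetical.

```ruby
require "json"
require "net/http"
require "uri"

# Canned body in an assumed shape: {"current" => {"feelslike_f" => 55.0}}
STUBBED_BODY = JSON.generate({ "current" => { "feelslike_f" => 55.0 } })

# Hypothetical helper: swaps Net::HTTP.get for a canned response while the
# block runs, then restores the original. WebMock does this more robustly
# and matches on the request method and URL.
def with_stubbed_weather(body = STUBBED_BODY)
  Net::HTTP.singleton_class.class_eval do
    alias_method :unstubbed_get, :get
    define_method(:get) { |*_args| body }
  end
  yield
ensure
  Net::HTTP.singleton_class.class_eval do
    alias_method :get, :unstubbed_get
    remove_method :unstubbed_get
  end
end

# No network traffic happens inside the block.
feels_like = with_stubbed_weather do
  response = Net::HTTP.get(URI("https://weather.example.com/current?zip=02108"))
  JSON.parse(response).dig("current", "feelslike_f")
end
```

Because the canned body is written by hand, it is only as accurate as our knowledge of the real response.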

00:07:54.900

Now, when we run the same test again, it will always pass, assuming our implementation is correct, because the API will always respond as if it’s 55 degrees. This approach is fantastic because our tests will pass continuously, unless there's actual code that is incorrect. Furthermore, we won’t even need the dependency, allowing us to run our tests without internet access. This method also aligns more closely with the academic definition of a unit test, as it removes the need for the dependency. However, I must have some knowledge or intuition to set up the test correctly. I need to understand what the JSON response should look like, and I need to keep it up to date with reality.

00:08:53.610

These response values are hard-coded, and there's no guarantee that the dependency won’t change over time. If my tests aren’t updated, I'll run into a situation where my tests pass, but production is in chaos because my code wasn't set up to handle a changed response I wasn’t aware of. Another concern is somewhat stylistic, depending on how large your responses are and how much of them you need. Your tests might have a lot of code just preparing what your stub returns, which could detract from the readability of your tests. I find stubbing particularly effective when working with stable dependencies, as this minimizes the likelihood of the hard-coded response getting out of date, though it doesn’t eliminate that risk entirely.

00:09:47.730

Lastly, if you care about the verbosity of your test setup, you might prefer stubbing in situations where you only care about a small part of the response or if the response itself is particularly small. If the stubbing feels excessive and you want to see your code exercised a little more, that's entirely reasonable. Let’s build an alternative: a fake. A fake is an actual version of the dependency that will stand in for the real one. In this case, we will construct our own weather API. I know this is RailsConf, but to keep things straightforward, I’ll build a Sinatra app.

00:10:32.240

This app will have one route that matches the real weather API and will return JSON that looks just like it. When we go back to the test we've been working on, I’ll use a tool called Capybara Discoball. With this tool, every API request I make in the test goes to my fake weather application instead of the actual API. Now, when I ask if it’s sweater weather, the answer will always be true because my fake weather app consistently says it’s 56 degrees everywhere.
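The talk builds its fake with Sinatra; as a rough, dependency-free illustration of the same idea, the sketch below stands up a tiny local HTTP server using only Ruby's standard library. The class name `FakeWeatherServer`, the path, and the JSON shape are assumptions, and a real fake would match the real API's routes.

```ruby
require "json"
require "net/http"
require "socket"

# Hypothetical fake weather API: answers every request with the same JSON.
class FakeWeatherServer
  attr_reader :port

  def initialize(feels_like_f: 56.0)
    @body = JSON.generate({ "current" => { "feelslike_f" => feels_like_f } })
    @server = TCPServer.new("127.0.0.1", 0) # port 0: let the OS pick a free port
    @port = @server.addr[1]
    @thread = Thread.new { serve }
  end

  def stop
    @thread.kill
    @server.close
  end

  private

  def serve
    loop do
      socket = @server.accept
      # Read and discard the request line and headers (up to the blank line).
      while (line = socket.gets) && line != "\r\n"; end
      socket.write("HTTP/1.1 200 OK\r\n" \
                   "Content-Type: application/json\r\n" \
                   "Content-Length: #{@body.bytesize}\r\n" \
                   "Connection: close\r\n\r\n#{@body}")
      socket.close
    end
  end
end

server = FakeWeatherServer.new
# The third argument (nil) tells Net::HTTP to skip any configured proxy.
response = Net::HTTP.new("127.0.0.1", server.port, nil).get("/current?zip=02108")
temperature = JSON.parse(response.body).dig("current", "feelslike_f")
server.stop
```

In the talk's setup, the application code keeps its normal request path and the test configuration points it at the fake; here the request is made directly to keep the sketch short.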

00:11:21.340

This approach is effective because it provides a full-stack test; I'm issuing an API request to a local server that we’ve set up solely for testing. It allows us to have everything we need on our local system while removing concerns regarding unnecessary noise in the setup. We can specify how simple or complex we want this fake to be for our test's needs. Having it return 56 degrees everywhere is sufficient, but nothing stops us from making our web app say it’s 12 degrees in Alaska and 70 degrees in Alabama, or even have special cases, like stating it's negative two degrees in a particular zip code every third Tuesday when the moon is full. It all depends on the specific needs of our tests.

00:12:14.720

However, we still face the same issue we encountered with stubbing: we must ensure that the fake responds the same way as the real dependency does. The routes and responses need to match in structure and content. Initially, I jokingly suggested creating weather satellites, but now I’m proposing to build an entire application just for testing our interaction with another weather system. When our tests fail, we’ll need to determine if the issue is with our test, our actual implementation, or simply with our fake. Moreover, we’d have to ask if we need tests for our fake to ensure it's performing correctly.

00:13:16.750

I find that fakes can be an effective method for testing third-party dependencies, particularly when the goal is to evaluate the communication mechanism. If I were building an HTTP client library, I would definitely want some tests involving actual HTTP requests because that’s essential to the library's function. However, I wouldn’t want to rely on an external system for those tests. With fakes, we can also test situations where multiple steps are involved between different systems. For instance, if you're testing an OAuth handshake, you could develop a fake web app that responds accordingly to the side of the handshake you're verifying, minimizing the complexity of request sequences.

00:14:31.860

Even though this method works well, it introduces a certain level of complexity. Creating a dedicated web app just for testing can feel like overkill, especially for simpler applications. Thus, let’s consider another option: using fixtures. Fixtures are simple files documenting specific requests and responses that can be replayed on subsequent requests.

00:15:08.400

For our example, we’ll again use the test we've been discussing. We can employ a tool called VCR to generate and manage these fixtures. VCR takes its name from the videocassette recorder, a device used to record and play videos before the rise of DVD players and streaming services. VCR will check for the presence of a fixture, which it refers to as a cassette. When we run the test for the first time, if it doesn’t find a cassette, it will issue an actual API request to the weather API and record the response.

00:15:57.450

In subsequent test runs, VCR will recognize the existing fixture and substitute it for the actual API request, effectively allowing us to run the test without hitting the live API. This is advantageous because we gain a true snapshot of the interaction we had at that specific moment, capturing all details of what was sent and received. However, this also makes it somewhat difficult to understand why it’s sweater weather just by looking at the test. We might need to dive into the fixture to see that the recorded temperature was 58 degrees.
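With VCR itself, this usually means wrapping the request in `VCR.use_cassette("some_name") { ... }`. To show the record-then-replay mechanics without the gem, here is a hand-rolled sketch; the helper `replay_or_record`, the JSON file format, and the response shape are assumptions, and the block stands in for the real API call.

```ruby
require "json"
require "tmpdir"

# Hypothetical helper: returns the recorded response if a cassette file exists;
# otherwise runs the block (the real request), records its result, and returns it.
def replay_or_record(cassette_path)
  return JSON.parse(File.read(cassette_path)) if File.exist?(cassette_path)

  response = yield
  File.write(cassette_path, JSON.generate(response))
  response
end

live_requests = 0
results = Dir.mktmpdir do |dir|
  cassette = File.join(dir, "sweater_weather.json")
  # The first pass records; the second pass replays from the file.
  2.times.map do
    replay_or_record(cassette) do
      live_requests += 1 # stands in for the real API call
      { "current" => { "feelslike_f" => 58.0 } }
    end
  end
end
```

Only the first pass runs the block; deleting the cassette file is what forces a fresh recording.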

00:16:46.510

Another challenge is that, while the fixture is a correct representation of the last interaction, it risks becoming stale as the real dependency changes. To keep these fixtures up to date, we need consistent access to the dependency. Additionally, I need to ensure that generating these fixtures doesn't adversely affect the API. With a weather API, this may not seem particularly problematic, but with a user registration service, for instance, we may face unique constraints.

00:17:44.030

Let's say we're testing an API request to register a user and everything works perfectly during our first attempt. After saving that fixture, if we try to reproduce it later, we might face issues due to uniqueness validation on email addresses. Regenerating the fixture will necessitate continuous adjustments to align with email constraints in the registration system. The implication is that it's less likely we’ll keep refreshing those fixtures, making it crucial to establish a smooth mechanism for doing so and ensuring our systems respond as expected.

00:18:39.940

Reflecting on what we’ve explored, we’ve considered four methods for testing a third-party dependency. Now, let’s revisit our implementation and understand why we needed these various approaches. The underlying method isn’t overly complex, but there’s still a considerable amount happening. Although the business logic is primarily a number comparison, testing it requires exercising the entire method, which includes interacting with our dependency.

00:20:10.170

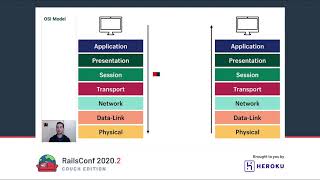

The test consists of dealing with the dependency, extracting the relevant information, and finally checking if the number fits our expected range. We can’t effectively test that last part without taking into account the other elements. Let’s decompose this into two distinct classes. First, we will create an API client specifically responsible for issuing requests and managing responses. This client will build a URI, send out the request, and parse the JSON into a hash for convenience.

00:21:23.460

Now, to test this API client, we can use a fixture again, as we did previously. We'll run through the same process, checking the structure of the response without worrying about receiving a specific value. The API client doesn't concern itself with what that data means; it only needs to ensure that it sends a request and receives a response accordingly.

00:22:20.360

The application layer, conversely, focuses on the relevant information from the response. Therefore, we need to ensure that this layer detects any changes in the actual dependency. It’s essential for the application to receive JSON in a specific structure, even if the temperature at that given moment isn’t significant. To ensure the ease of regenerating fixtures, we need to focus on retaining the structure while allowing changes to the response values.

00:23:17.220

To extract and manipulate the response, we can build a Ruby object called CurrentConditions. It will accept a hash—the JSON response from the API—and respond to methods with the information we care about, such as the perceived temperature. By parsing the hash, we can retrieve the needed data, converting it into a float for comparisons. Thus, the tests for this class become straightforward input-output assessments.
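A sketch of such an object could look like this. The key names (`current`, `feelslike_f`) and the method name `feels_like` are assumptions, since the talk does not spell out the response format here.

```ruby
# Wraps the parsed API response and exposes only what the app cares about.
class CurrentConditions
  def initialize(response)
    @response = response
  end

  # Assumed response shape: {"current" => {"feelslike_f" => "57.2"}}
  # Converts the value to a Float so it can be compared against a range.
  def feels_like
    @response.dig("current", "feelslike_f").to_f
  end
end

conditions = CurrentConditions.new({ "current" => { "feelslike_f" => "57.2" } })
perceived = conditions.feels_like
```

Testing this class needs no network access at all: pass in a hash, assert on the number that comes out.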

00:24:04.600

Next, we create a Weather module that interacts with the API client while extracting the needed conditions from the response. It will include a method that makes API requests and passes the results to the CurrentConditions class. Consumers of this module can then simply query the current conditions without needing to interact directly with the API.

00:25:18.610

Going back to our sweater weather method, we will now reference the Weather module to obtain current conditions based on a specified zip code. The method then checks whether it’s between 55 and 65 degrees, sticking strictly to our business logic while keeping the underlying data-gathering separate. By decoupling concerns, we allow for better adaptability. We’re isolating our business logic from how we retrieve information, ensuring that our implementation doesn’t hinge on the weather API.

00:26:41.150

A change I want to suggest for the Weather module is the ability to not only retrieve current conditions but also to set the client. This means we can designate any particular class as the client on a per-use basis. In doing so, we can build and use a fake client that allows us more control over testing scenarios. Much like our fake web server, the fake client responds to the same messages as the real one, but it also offers extra methods for setting the temperature it reports for any zip code.

00:27:35.860

Now, in testing, I can manipulate the state of the world solely for my tests. Implementing a test mode will facilitate switching to a fake client when necessary. The test mode involves a module that can set the client to our specified fake client or revert it to the real API. This ensures that you don’t accidentally test against the actual API, which saves time and provides greater control. Performing everything in memory rather than making true API requests accelerates the testing process.

00:28:48.320

Switching into test mode enables clearer tests since it explicitly shows the current temperature in our tests, alleviating the need to consult other configurations. Furthermore, we can track interactions with our client to count the number of API requests executed, which provides additional insight into the system’s operations. By removing interactions with the external dependency, we can focus exclusively on our tests without fear of interference or failure due to external issues.
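The pieces described above (a settable client, a fake client, and a test mode) might fit together roughly as follows. This is a sketch under assumptions: the names `FakeClient`, `set_feels_like`, `request_count`, and the block-based `Weather.test_mode` are hypothetical, as is the response shape, and a small stand-in for the conditions object is included so the example runs on its own.

```ruby
# Minimal stand-in for the conditions object described earlier.
class CurrentConditions
  def initialize(response)
    @response = response
  end

  def feels_like
    @response.dig("current", "feelslike_f").to_f
  end
end

# Hypothetical in-memory client: answers the same message as a real client
# (current_conditions) but lets tests set temperatures and count requests.
class FakeClient
  attr_reader :request_count

  def initialize
    @temperatures = {}
    @request_count = 0
  end

  def set_feels_like(zip_code, temperature)
    @temperatures[zip_code] = temperature
  end

  def current_conditions(zip_code)
    @request_count += 1
    { "current" => { "feelslike_f" => @temperatures.fetch(zip_code, 70.0) } }
  end
end

module Weather
  class << self
    attr_writer :client

    def client
      @client || raise("Weather.client has not been set")
    end

    def current_conditions(zip_code)
      CurrentConditions.new(client.current_conditions(zip_code))
    end

    # Test mode: swap in a fake client for the block, then restore the old one.
    def test_mode
      previous = @client
      @client = FakeClient.new
      yield @client
    ensure
      @client = previous
    end
  end
end

class Location
  def initialize(zip_code)
    @zip_code = zip_code
  end

  def sweater_weather?
    (55..65).cover?(Weather.current_conditions(@zip_code).feels_like)
  end
end

result, calls = Weather.test_mode do |fake|
  fake.set_feels_like("02108", 57.0) # the temperature is visible in the test
  [Location.new("02108").sweater_weather?, fake.request_count]
end
```

Everything here happens in memory, so the test states the temperature it depends on and can also assert how many requests the code under test made.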

00:30:00.440

However, we still need to ensure our fake client stays accurate, since the real responses may change as the API is updated. That is why it matters to test the client's interactions with the API independently, keep the tests for other components isolated from it, and build layers between our business logic and the API. Overall, I find test mode can be very useful in scenarios where the client class's own tests provide a sufficient degree of coverage.

00:31:21.830

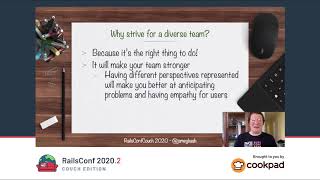

Ultimately, this presentation was about testing third-party dependencies in our applications. My conclusion is: don't. What I mean is to avoid interactions with those dependencies as much as possible. Instead, create sufficient layers separating your business logic from external dependencies, so you don't have to account for them in every test. As we've seen, we built a client class to handle interactions and transformed that data with a module used for business logic.

00:32:25.680

While it's essential to test the client class for proper interactions with the third-party dependencies, we can use any of the techniques discussed here. For the other modules associated with data representation and business logic, we can perform tests independently. It's all about ensuring that confidence in our client tests is maintained, thus shielding the higher layers from direct dependency interactions. This segregation allows us to focus solely on our primary concern: effectively responding to current temperature conditions in different locations.

00:33:39.370

To wrap things up, while testing third-party dependencies, I suggest constructing ample abstraction layers to manage interactions with them, thereby enabling safe and confident testing practices. Ensure the fundamental layers operate with the real dependencies and are tested individually so that we have the clarity needed to work effortlessly with those higher-level abstractions without being overly concerned with their underlying mechanics. If you’re interested in a copy of these slides or want to see the Rails application utilized for these examples, you can visit my GitHub page: my username is Kevin-J-M, and the repository is named Testing Services. I'm also on social media at @Kevin_J_M.

00:35:07.560

Please feel free to provide any feedback or questions you may have; I’d love to hear from you. Otherwise, thank you very much for your time, and I hope you enjoy your day.