00:00:10.719

Hey.

00:00:11.599

Thanks so much for coming to my talk about how to stop breaking other people's things.

00:00:13.440

I'm Lisa Karlin Curtis, born and bred in London, England.

00:00:16.640

I'm a software engineer at Incident.io, where we work on being the best way to help your team manage incidents across your whole organization. You should check us out online.

00:00:23.840

Today, I'm going to be talking about how to stop breaking other people's things. We're going to start with a sad story.

00:00:34.880

A developer notices that they have an endpoint with extremely high latency compared to what they expect. After finding a performance issue in the code, they deploy a fix.

00:00:41.040

The latency on the endpoint goes down by half. The developer stares at the beautiful graph, posts it in the graph Slack channel, and feels really good about themselves before moving on with their life.

00:00:48.800

Meanwhile, in another part of the world, another developer gets paged to find their database's CPU usage has spiked, struggling to handle the load.

00:00:59.039

Upon investigation, they notice there's no obvious cause. No recent changes were made, and the request volume is pretty much what they expected.

00:01:04.960

They begin scaling down queues to relieve the pressure, which resolves the immediate issue. Despite that, they still don’t know what happened.

00:01:11.439

Then they notice something odd: they have suddenly started processing webhooks much faster than usual.

00:01:19.040

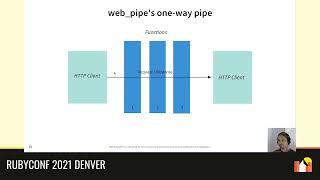

It turns out that our integrator, as seen on the right-hand side of the situation, had a webhook handler that would receive a webhook from us and then make a request back to check the status of a resource.

00:01:28.799

This was the endpoint that our first engineer had fixed earlier that day.

00:01:36.960

Just as an aside, I'm going to use the word 'integrator' frequently, which refers to people integrating with the API you are maintaining. This could be someone inside your company, a customer, or a partner.

00:01:46.880

Back to our story: that webhook handler spent most of its time waiting for our response before updating its own database. Essentially, our slow endpoint rate-limited the webhook handler's interaction with its own database.

00:02:03.680

As a result, when it would receive a webhook, send a GET request, and wait around for us to respond, it would lead to a series of bottlenecks.

00:02:11.440

The webhooks in this system are a result of batch processes—they are very spiky and would tend to arrive all at once a couple of times a day.

00:02:27.040

As the endpoint became faster during those spikes, the webhook handler began to exert more load on the database than normal, leading to a developer being paged to address the service degradation.

00:02:35.680

The fix is fairly simple: you can either scale down the webhook handlers to process fewer webhooks or beef up your database resources. This situation illustrates how easy it is to accidentally break someone else's functionality.

00:02:44.160

Even when you're trying to do the right thing by your integrators and improve the performance of your API, issues like this can arise.

00:03:03.680

To set the scene, let’s consider some examples of changes that I’ve seen break code in the past: traditional API changes, such as adding a mandatory field, removing an endpoint, or changing validation logic.

00:03:34.000

I think we all understand that these changes can easily break someone else's integration. Other examples include introducing a rate limit or changing a rate limiting strategy.

00:03:53.439

Docker recently communicated changes in their rate limiting effectively, but it still had a significant impact on many integrators.

00:04:11.920

For example, changing an error response string can lead to issues. At my previous job with GoCardless, we had a bug where the code didn’t respect the Accept-Language header on a few endpoints.

00:04:45.360

When we corrected the error response to provide the correct language based on the Accept-Language header, one of our integrators raised an urgent ticket, stating that we broke their software because they relied on our API not translating that error.

00:05:13.440

They had written their code to specifically check the error string, applying a regex in order to ensure their software behaved as expected.

00:06:07.520

Now let’s discuss breaking apart a database transaction. It's essential to recognize that in our systems, internal consistency is crucial.

00:06:28.360

For instance, if you have a resource that can either be active or inactive, and you deactivate it while failing to create an event for that change, an integrator could run into issues trying to understand why the resource was deactivated.

00:06:59.360

This can lead to their UI breaking if they can't find the corresponding event, illustrating that breaking consistency is not just about our own systems but also about how it affects integrators.

00:07:22.320

Now, let's talk about the timing of batch processing. Companies often have daily batch processes in place, which are pivotal for their operations, particularly in payment transactions.

00:07:52.960

At GoCardless, there were numerous cases where integrators created payments just in time for the daily run, making it critical that we maintain a predictable timing for these runs.

00:08:09.200

If we changed the timing of the daily payment runs, all those integrations would break since they depended on our established schedule.

00:08:35.680

Lastly, reducing the latency on an API call can also have a breaking impact. This brings us back to the story we started with.

00:09:01.120

Throughout this talk, I will define a breaking change as an action that I, as the API developer, perform that inadvertently breaks an integration.

00:09:22.040

When that happens, it usually results from an assumption made by the integrator that is no longer valid. It's important to recognize that assumptions are inevitable.

00:09:38.720

Even if a developer's assumption leads them to a breaking change, the reality is that it often turns into a problem for the API provider as well.

00:10:10.400

For many companies, when the integrators feel pain, the company will feel it too. Therefore, dismissing or pushing responsibility solely on the integrators is not productive.

00:10:38.720

Assumptions can be formed explicitly through questions and answers, as well as implicitly through industry standards or behaviors that become ingrained over time.

00:11:12.840

Integrators frequently assume that standard HTTP behaviors (like JSON content types) won’t change, and this can lead to unforeseen consequences.

00:11:52.800

For example, we faced an incident where a standard change in HTTP header casing caused a significant outage for two key integrators who relied on the previous behavior.

00:12:29.120

When integrating, developers want reliability, but they also expect innovation, leading to a tricky balance when changes are made.

00:12:43.920

Developers tend to pattern match aggressively based on previous experiences, assuming others’ systems are flawless while often overlooking potential issues in their own.

00:13:15.680

Interestingly, we all have instances where we experience rare edge cases and yet continue to trust other systems will behave consistently.

00:13:40.080

A great example here is the early history of MS-DOS—developers had to reverse engineer undocumented behaviors, influencing how applications operated initially, and preventing Microsoft from making changes that would break compatibility.

00:14:06.240

After a period of stability, teams may begin to assume that changes are impossible, leading to risk by ignoring potential volatility.

00:14:45.440

To avoid breaking others' things, we need to help our integrators stop making poor assumptions. One key way to accomplish this is by documenting edge cases thoroughly.

00:15:30.960

Documentation should be discoverable; consider SEO for your documentation and ensure critical information is easily accessible.

00:16:06.400

Additionally, never withhold documentation about changing features. Consistently keep your support articles and blogs updated, making it clear if certain features may change.

00:16:40.240

Keeping industry standards in mind is critical; follow them whenever possible and clearly indicate if you can't or if the industry is in transition.

00:17:16.640

When discussing observed behavior, naming can be a primary factor. Developers often skim through documentation; they focus on examples over narrative.

00:18:01.040

A frequent issue arises when numerically formatted strings (like registration numbers) are treated inappropriately as numbers, leading to unexpected outcomes.

00:18:41.520

Highlighting edge cases in documentation can curb many errors, as your examples help integrators avoid misinterpretation.

00:19:23.120

Using communication to counteract aggressive pattern matching is also helpful. If a change to a process is anticipated, clearly note this within the documentation.

00:19:50.480

For instance, clearly denote batch processing timings, while reserving the right to make changes without notice.

00:20:14.559

When unexpected changes occur—like sending additional events in webhooks—making sure that all communication with the outside world is controlled and documented is paramount.

00:20:47.839

It's vital that there’s clarity around constraints when interfacing with integrators. Furthermore, be prepared to roll back changes should integrations break.

00:21:39.680

While changes can't always be avoided, managing the risk they pose is critical. We've discussed the importance of preventing mistakes and fostering robust communication.

00:22:14.880

Engaging with integrators personally is a productive way to troubleshoot potential issues ahead of time. Implementing tests in sandbox environments can also provide insight.

00:22:49.680

Considering changes incrementally can help prepare integrators for more significant changes. Notifications directly through documented communications allow a smoother transition.

00:23:33.120

Interfacing with key integrators can set up enhanced communication for significant changes, ensuring the support team is ready to react if issues arise.

00:24:06.560

Keeping a live or ongoing communication channel with not only your key integrators but all integrators can help maintain open dialogue about ongoing needs.

00:24:41.680

Empathy for their perspective can foster goodwill while also ensuring that appropriate actionable information is floating between both parties.

00:25:15.440

Remember that not all changes can be categorized as simply breaking or non-breaking; they exist on a spectrum with each change presenting a likelihood of causing an issue.

00:26:00.800

So while it might not be your fault that an issue arose, it remains your problem if it impacts integrators. Your biggest customers may face significant challenges due to changes.

00:26:55.680

We must think about how we scale communication and deliver information relevant to integrations without overwhelming or under-informing our developers.

00:27:30.160

Finally, I hope you have enjoyed the talk. Thank you so much for listening.

00:27:47.680

Please find me on Twitter @paprikarti if you'd like to chat about anything we've covered today. I hope you have a great day!