00:00:12

I'm surprised by the number of people here for the second talk after the party. Well done! Welcome to my talk on keeping developers happy.

00:00:22

My name is Christian Bruckmayer, and you can find me on Twitter @bruckmayer. Today, I’ll share several topics related to Continuous Integration (CI) and our team's approach to improving it.

00:00:31

I will start with a quick overview of CI and what our team does. We'll dive into data-driven development, as well as three additional topics: test optimization, test selection, and our realizations and experiences.

00:00:57

Let’s begin with Shopify. Shopify is one of the oldest and largest e-commerce platforms out there. We manage a large number of Ruby tests, approximately 210,000, and we add around 30,000 more tests every year.

00:01:22

Our mission is to ensure that our CI systems are scalable and usable, focusing on optimizing our testing process. Historically, CI was someone’s task for a few hours when something broke. However, with the scale of Shopify, this approach is no longer effective.

00:01:44

The Rails core monolith has about 2.8 million lines of code, with over a thousand engineers working on it. Executing all our tests would take around 40 hours. We typically run a thousand builds per day, leading to about 100 million test runs daily. This massive scale presents our biggest challenge: growth. We anticipate having around 260,000 tests next year, alongside hiring plans to double our engineering team this year.

00:02:15

With increased builds and commits, our goal is to make our developers happy by providing the best tools to enhance productivity.

00:02:35

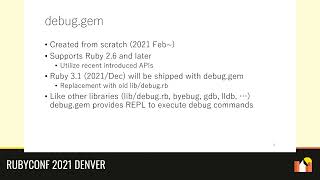

First, let’s discuss data-driven development. Data-driven means that decisions about activities are influenced by data rather than intuition or personal experience. Our team heavily relies on data—we measure everything across all CI builds and test commands, gaining insights into performance.

00:03:20

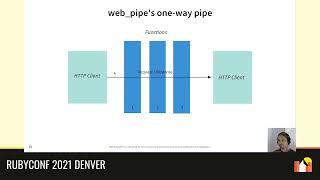

To achieve this, we run our CI on Buildkite, which manages our CI processes. We utilize various test agents that execute tests, scaling them automatically based on demand. Overnight, we can reduce active agents to nearly zero and scale them back up during working hours.

00:03:45

In terms of build structure in Buildkite, each build can contain several jobs. The idea is to parallelize tasks by running multiple jobs that execute tests simultaneously, each with its own set of commands.

00:04:20

Instrumenting jobs and builds is relatively straightforward since Buildkite supports webhooks. We developed a small Ruby application that listens to these webhooks; when a job or build finishes, we receive a webhook and stream the data into Kafka, eventually landing in our data warehouse for analysis.

00:04:54

This provides distribution data for job and build times. To pinpoint issues more effectively, we also drill down to command-level performance measurements. Every command executed in CI runs in a wrapper that measures its execution time before sending this data to Kafka.

00:05:15

For tests, we generate JUnit reports, which also stream to Kafka. Consequently, we can see precisely how long bundle installations or individual tests take, setting priorities based on how often a command is executed and how long it takes.

00:05:58

This approach assists in project management. Last year, we launched an initiative to improve our P95 time because our data indicated it was becoming slower. We observed that our P95 was around 45 minutes to run tests in CI.

00:06:18

The next topic I'd like to address is test optimization. Optimizing tests becomes challenging with so many tests, as we can’t efficiently improve individual tests. Instead, we analyze the overall test suite and identify patterns regarding performance bottlenecks.

00:06:45

For instance, we reviewed our slowest builds daily to find patterns. We quickly discovered that particular tests were hanging, facing timeouts after 30 minutes, which significantly impacted overall build times.

00:07:06

To address this, we wrapped every single test in a timeout to ensure those taking too long are terminated, avoiding lengthy delays. Although Ruby’s timeout functionality is somewhat unreliable, we created a solution to notify developers of tests that slowed down our suite.

00:07:45

Ultimately, we identified about 10 tests that were causing considerable delays. By disabling these tests, we improved our overall build times significantly, showcasing the 80/20 rule where a small fraction of tests contributed substantially to delays.

00:08:15

Next, let's discuss test selection. Imagine having a test suite with 200,000 tests and sending a pull request. The ability to select relevant tests based on your changes reduces execution time significantly.

00:08:40

This improves not only the execution speed but also the overall stability of our CI system by reducing the number of machines required for testing.

00:09:04

We generate a mapping of files to relevant tests every time we merge to the main branch. This mapping is then used to determine which tests to run for each pull request based on the changes made.

00:09:40

Additionally, different file types require distinct strategies for mapping, such as Ruby files, fixtures, and JavaScripts. For Ruby files, we implement dynamic analysis using a gem called Rotoscope, allowing us to trace which test accessed which files.

00:10:16

In this process, we execute test code to gather data on file access. The output records which tests executed which Ruby files, and we use this information to create a reverse mapping from files back to tests.

00:10:55

For fixture files, we utilize Active Support notifications to determine which tests access specific tables. This method allows us to run only necessary tests when fixture files are altered, providing a high level of efficiency.

00:11:32

This entire system has been in production for nearly two years, resulting in an average selection rate of only 40% of our tests. Instead of running 210,000 tests, we typically execute around 85,000, marking a significant improvement in our CI performance.

00:12:16

The final topic I want to cover is test prioritization. If you think about your test suite, you want to ensure that important tests run first as they provide confidence in your changes.

00:12:50

Our strategy is to identify which tests offer the most value and execute those first. By structuring our test runs this way, we aim to reach a satisfactory confidence level with fewer tests executed.

00:13:20

We developed several prioritization methods based on various metrics, such as failure rates and test complexity. Interestingly, random prioritization performed surprisingly well when we evaluated our tests.

00:13:49

In fact, we found that a significant percentage of test failures were discovered early on during random selection, sparking interest in how we can utilize this information more effectively.

00:14:15

Determining the point at which we can cease further test execution without losing confidence in the results is vital. Research suggested that achieving 50% coverage can yield meaningful insights into test failures.

00:14:46

This data demonstrates that while we strive to prioritize tests, random selection may still yield significant results, particularly for specific scenarios like deployments.

00:15:22

Overall, we learned that while it's challenging to develop a perfect prioritization strategy, it's crucial to have a flexible approach to adapt to various testing requirements.

00:15:57

If we can limit the tests run during deployments to just those that identify failures quickly, it enhances our efficiency without compromising quality.

00:16:25

Today, I've shared insights on five different topics concerning CI at Shopify. It's important to recognize that these methods haven't evolved overnight; they’ve developed over the past several years through collaboration and experimentation.

00:17:00

We aim to treat our CI system as a production environment by applying the same rigorous techniques and data-driven methods we would for any production application. If implemented well, this leads to overall happiness and satisfaction among our developers.

00:17:42

I have a few minutes left, so if anyone has questions, I’d be happy to answer them.

00:18:10

The first question was about what happens if a bug is deployed to production. It can happen, but often it’s the test that don’t work properly rather than an issue with the application itself.

00:18:50

When failures occur, we have a stabilization build that runs all tests following a merge. We then investigate to find which commit may have introduced the problem, ensuring swift resolution.

00:19:20

Regarding the question on open sourcing our methods, we generally aim to create generalized solutions. However, due to heavy customization for Shopify's needs, it may not yet be beneficial to a wider audience.

00:19:50

How we manage memory overhead is through increasing the resources assigned to each agent handling our tests, allowing for efficient execution without significant performance hits.

00:20:13

For our measurement tools in CI, we implemented a custom solution utilizing webhooks and test reports to collect and structure data efficiently. The core of our process relies on Kafka for seamless event processing.

00:20:55

In conclusion, by treating our CI like a production environment, we enhance our testing processes substantially, leading to better tools and developer satisfaction. Thank you for your attention!