Mixed Reality Robotics Simulation with Ruby

Summarized using AI

by Kota Weaver

Overview of the Video

The video titled "Mixed Reality Robotics Simulation with Ruby" presented by Kota Weaver at RubyConf 2021 explores the application of mixed-reality simulation technologies in robotics. Weaver discusses the significance of bridging simulated environments with real-world scenarios to enhance robot development and testing.

Key Points Discussed

Introduction to Robotics and Simulation:

- Kota Weaver introduces his company, Skylla Technologies, which focuses on building human-aware robots that can navigate without collisions.

- He highlights the importance of simulators in robot development, noting commonly used tools like Gazebo.

Challenges with Simulators:

- Weaver discusses issues faced in simulation, including the misrepresentation of speed and size, which can lead to a disconnect from real-world performance.

- He emphasizes the significance of maintaining a feedback loop with actual robots to avoid losing touch with reality during development.

Introducing DragonRuby:

- Searching for better simulation tools, Weaver found DragonRuby, a pure code game engine, suitable for creating simulations without unnecessary complexity.

- DragonRuby’s ease of use allows team members with different programming backgrounds (like Elixir, Python, and C++) to quickly adopt it for simulation projects.

Live Coding Demonstration:

- Weaver conducts live coding to illustrate how DragonRuby can be used for creating simulations, demonstrating a simple physics engine using principles like Hooke's Law.

Application of Projectors:

- To enhance robot testing, Weaver explains how he employed projectors to visualize simulations in physical spaces.

- He details the integration of real-time sensor data into simulations to create a more immersive and applicable testing environment.

- He shares photos of projectors set up in a testing lab, indicating the effort to correctly calibrate the projection environment.

Future Plans:

- The speaker outlines future ambitions, including enhancing visualizations with additional sensors, possibly improving performance, and expanding the use of projectors for more comprehensive coverage in real-world environments.

Conclusion and Takeaways

- The synthesis of simulation technology using tools like DragonRuby substantially benefits robotics development by facilitating the creation of realistic testing environments.

- Enhancements in visualization techniques and mixed reality interactions with real robots could lead to more effective and efficient robotics advancements.

Overall, the presentation suggests that mixed-reality simulation can bring robot development closer to real-world conditions, improving how robots behave and interact in real environments.

00:00:06.710

I lost my voice last week, so I still don't quite sound like myself right now. But anyway, welcome, and thank you for coming.

00:00:12.559

Today, I'm going to talk about mixed reality simulation, focusing on the robotics context. I'll provide a high-level overview of why we might want to engage in this and how Ruby ties into it.

00:00:30.800

First of all, how many of you here are involved in robotics? Anyone do any robotics? Cool! All right, a couple of people. Sounds good! And then, does anyone know about DragonRuby or has played with it? Okay, great! There's a good assortment of knowledge here.

00:00:42.399

I'm Kota Weaver. I'm a founder of a robotics company based in Boston called Skylla Technologies. We primarily focus on building robots that are aware of humans, allowing them to navigate without running into people and without slowing down too much.

00:00:54.640

Additionally, we work with robot arm manipulation. This is a robot we have available in the market that we collaborated on with DMG Mori, a machine tools company. Essentially, we build software for machines like that.

00:01:06.640

I mainly work with C++ and Python, so I must admit that Ruby's not actually my primary language. Our stack also includes a lot of Elixir and Rust. Most of our work is done in simulation environments.

00:01:18.880

We often start by building models and placing them in simulations. Gazebo, for instance, is a standard simulator used widely, including by NASA.

00:01:27.360

Here's an example of an omnidirectional wheel, which we simulate as well. Simulators can be a lot of fun to experiment with. In this example, we have a vehicle with four omni wheels rolling down a hill, and you should be able to see the individual wheels rotating, which allows for comprehensive simulations on standard hardware.

00:01:44.560

However, there's a common problem: when observing a robot in a simulation, we often don't know how fast it's moving or the actual size of the robots just by looking at them.

00:02:01.120

This is an issue we frequently encounter, especially when we present our work to customers. For instance, a robot might be moving at about 0.5 meters per second, which is relatively slow, but it's hard to gauge just by observation.

00:02:19.199

Additionally, when robots operate in larger environments, their movements can appear much slower than they actually are, which complicates our understanding.

00:02:39.439

We also occasionally run into strange behaviors in simulations, such as the robot flipping over unexpectedly. This can occur due to the sensitivity of parameters and issues with different integrators. We need to consider stability during simulations.
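
The sensitivity to integrators can be illustrated with a small sketch. It is not how Gazebo or any particular physics engine integrates motion; it only compares two textbook schemes on an undamped spring, and all constants are assumed values chosen for illustration. With explicit Euler the energy grows every step, which is the kind of drift that can make a simulated body "blow up", while semi-implicit Euler keeps it bounded.

```ruby
# Undamped spring: acceleration = -(k / m) * x. All values are illustrative.
SPRING_CONSTANT = 50.0  # assumed
BODY_MASS       = 1.0   # assumed
DT              = 0.05  # time step in seconds, assumed

# Explicit (forward) Euler: position and velocity both use the OLD state.
def explicit_euler_step(x, v)
  a = -(SPRING_CONSTANT / BODY_MASS) * x
  [x + v * DT, v + a * DT]
end

# Semi-implicit Euler: update velocity first, then move with the NEW velocity.
def semi_implicit_euler_step(x, v)
  a = -(SPRING_CONSTANT / BODY_MASS) * x
  v_new = v + a * DT
  [x + v_new * DT, v_new]
end

# Total mechanical energy; constant for the exact solution.
def energy(x, v)
  0.5 * BODY_MASS * v * v + 0.5 * SPRING_CONSTANT * x * x
end

# Run `steps` updates from x = 1, v = 0 and return the final energy.
def simulate(steps)
  x, v = 1.0, 0.0
  steps.times { x, v = yield(x, v) }
  energy(x, v)
end

E0                   = energy(1.0, 0.0)
EXPLICIT_ENERGY      = simulate(2000) { |x, v| explicit_euler_step(x, v) }
SEMI_IMPLICIT_ENERGY = simulate(2000) { |x, v| semi_implicit_euler_step(x, v) }

puts "initial energy:      #{E0}"
puts "explicit Euler:      #{EXPLICIT_ENERGY}"
puts "semi-implicit Euler: #{SEMI_IMPLICIT_ENERGY}"
```

The only difference between the two step functions is which velocity is used to move the body, yet one diverges and the other does not.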

00:03:08.640

For example, here is another case where instability occurs. Don't worry, though—this isn't a robot that gets sent to customers! They do perform better than this, but these types of issues are common in simulation work.

00:03:30.720

Thus, we started looking for better simulation options. Our goal was to find simulators that wouldn't easily destabilize and were not overly complex. In most cases, we deal with vehicular 2D simulations.

00:03:49.040

For robot arms and other tasks, we didn't need complexity. We also require headless simulations for continuous integration testing. Fast startup is crucial, as the longer it takes to boot up a simulator, the slower our development cycle.

00:04:05.280

It's fine if the simulations remain two-dimensional. Another essential aspect is the ability to easily describe different scenarios and environments, but we couldn't find existing solutions that met our needs.

00:04:24.000

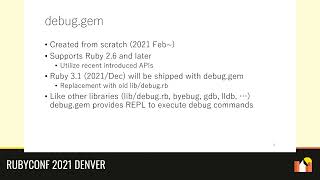

While searching for a simple game engine to build a simulator that fits my needs better, I discovered DragonRuby. It's a pure code game engine that doesn't layer on unnecessary UI.

00:04:39.840

Many other game engines complicate the learning process with their complex UIs. Since I primarily build robots, I want to minimize the learning curve because I don't spend much time doing game development.

00:05:06.080

DragonRuby is straightforward to set up, which means that other members of my team, who primarily work with languages like Elixir, Python, or C++, can easily get started as well.

00:05:18.960

The API is also very small, and it's designed for making real video games, as Amir, the founder of DragonRuby, developed games for mobile and various console platforms.

00:05:37.360

Now, I promised you it’s easy to get started, so I thought I would do a little live coding demonstration. How is everyone’s high school physics? Okay, good! Let's see if mine is still up to scratch.

00:06:02.160

I am currently in DragonRuby's root directory, and even though Amir might not approve of my method, I'm going to create a new directory named springs and add a folder called app.

00:06:16.560

Inside, I’ll create a file named main.rb, which will be the main file we work out of for our project.

00:06:27.360

After that, we can set up the main event loop by going into the terminal. Let's check my directory and make sure everything is set.

00:06:41.680

Now, if I navigate up one directory and call DragonRuby on the springs directory, we'll see that our DragonRuby window is up and running!

00:07:03.360

One of the cool features about DragonRuby is that we can start typing away and, whenever we save it, the code hotloads. For instance, we can create a rectangle, positioning it in the center of the screen.

00:07:19.520

I’ll create a square with a width and height of 40 at the coordinates x = 20, y = 2. Now that I’ve saved it, we can see the box appears in the middle of our screen.

00:07:31.440

Next, we can move this box. I will create a variable to allow for movement. DragonRuby allows state management, which helps to serialize and deserialize data much more easily.

00:07:44.880

We can set our initial value to center, and we will use the tick count to call this when we start up. We can reset this when saving, ensuring a smooth coding experience.

00:08:01.440

We need to establish our initial velocity. By modifying some values, we can see the box shifts left when changing the velocity to 100.
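
A minimal sketch of what such a `main.rb` might look like at this point. It is not the code typed in the talk: the position, velocity, and the `Fake*` structs are assumptions made for illustration. `tick`, `args.state`, `args.state.tick_count`, and `args.outputs.solids` are DragonRuby's own names; the structs at the bottom only stand in for the engine so the sketch also runs in plain Ruby, and would be removed inside DragonRuby.

```ruby
# DragonRuby calls `tick` once per frame and passes in `args`.
def tick(args)
  # Set defaults on the first frame only.
  if args.state.tick_count == 0
    args.state.x  = 620  # 640 (center of a 1280-wide screen) minus half the box width
    args.state.vx = 1    # pixels per frame, illustrative value
  end

  # Move the box by its velocity.
  args.state.x += args.state.vx

  # Queue a 40x40 solid rectangle for this frame.
  args.outputs.solids << { x: args.state.x, y: 340, w: 40, h: 40 }
end

# --- Stand-in for the engine (hypothetical, plain Ruby only) ---
FakeState   = Struct.new(:tick_count, :x, :vx)
FakeOutputs = Struct.new(:solids)
FakeArgs    = Struct.new(:state, :outputs)

state = FakeState.new(0)
FRAMES = 3.times.map do
  args = FakeArgs.new(state, FakeOutputs.new([]))
  tick(args)
  state.tick_count += 1
  args.outputs.solids.first
end
p FRAMES
```

Keeping position and velocity in `args.state` rather than in local variables is what lets the values survive from one frame to the next.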

00:08:17.120

Now, we want this box to move based on spring dynamics. The spring equation we’ll work with is: f = -k * x, where 'k' is the spring constant and 'x' is the displacement.

00:08:34.080

I’ll use some pre-tested values that work for the center of the screen, which is 640 pixels on a 1280-width screen.

00:08:50.640

To turn that force into an acceleration, we can use the well-known equation F = ma. Let’s assume a mass of 10 units for our simulation.

00:09:01.680

Using the equation v = at, we'll assume a constant acceleration for simplicity. Now in DragonRuby, let’s add the current acceleration to our velocity.

00:09:16.160

Lastly, to find the position, we can integrate the velocity. If I save this now, we should observe that nothing happens since everything is centered.
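
The steps above (Hooke's law, F = ma, v = at, then integrating velocity) can be sketched as one per-frame update in plain Ruby. The spring constant is an assumed value, since the talk does not state the one used, and the time step is taken as one frame. Inside DragonRuby the same arithmetic would live in `tick`, with `x` and `v` stored on `args.state`.

```ruby
CENTER   = 640.0  # rest position: middle of a 1280-pixel-wide screen
SPRING_K = 1.0    # spring constant, assumed value
MASS     = 10.0   # mass used in the talk

# Advance the box by one frame and return the new [x, v].
def spring_step(x, v)
  displacement = x - CENTER
  force        = -SPRING_K * displacement  # Hooke's law: F = -k * x
  acceleration = force / MASS              # Newton's second law: a = F / m
  v += acceleration                        # v = a * t with t = 1 frame
  x += v                                   # integrate velocity to get position
  [x, v]
end

# A box at rest in the center feels no force, so it stays where it is.
p spring_step(CENTER, 0.0)

# A box released at x = 100 is pulled toward the center and oscillates around it.
x, v = 100.0, 0.0
TRACE = 60.times.map { x, v = spring_step(x, v); x }
puts TRACE.first(5).map { |px| px.round(1) }.join(", ")
```

Updating the velocity before the position is a deliberate choice here: it keeps the oscillation bounded for small spring constants instead of slowly gaining energy.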

00:09:41.360

If I change the x-coordinate to 100, it should shift left naturally. However, if there's a movement issue, that indicates a mistake in our code, which is common in early development.

00:10:01.840

Despite some hiccups, with just a few lines of code, we could create meaningful simulations. DragonRuby has been the enabling technology that allows for rapid experimentation.

00:10:18.560

On my first day using DragonRuby, I created a basic system to generate various shapes, similar to a vector editing tool where you can save and load images.

00:10:38.640

On the second day, I implemented a simple physics simulation with soft-body dynamics and spring-like systems, and within a week, I was working on 3D models.

00:10:56.080

Although DragonRuby is primarily a 2D engine, I've been able to create effective drone simulations as well.

00:11:11.280

This progress showcases how quickly I could move from one project to another using DragonRuby. The main point here is that it’s incredibly fun, quick, and solves many issues present in robotics.

00:11:26.640

Regarding projectors, we often encounter challenges with the correct scale in our simulations. It's essential to integrate real robots into the development cycle.

00:11:46.240

When working with real robots, we aim for a better understanding of motion and situations that are difficult to simulate, particularly regarding human behavior and sensor data.

00:12:03.360

To overcome these challenges, I decided to experiment with projectors. I purchased about 15 projectors to help visualize the data.

00:12:21.680

Although my initial attempts at installation didn't go as planned, I managed to assemble everything in the ceiling after some necessary adjustments.

00:12:38.880

Now, lining our ceiling with projectors allows us to merge sensor data from the robot with simple simulations and visualize them effectively.

00:12:53.760

We're using various programming languages for our tasks. The robot primarily operates with Elixir, C++, and Python, while configuration occurs in Ruby.

00:13:12.160

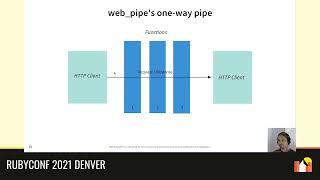

We send the data to the simulation, developed in Ruby, and then utilize ZeroMQ (zmq) to relay the information to DragonRuby. This is necessary as DragonRuby doesn't currently have native TCP support.

00:13:30.560

With the right setup, this allows us to visualize what our robot perceives in real time. All of this is accomplished with just a few lines of DragonRuby code.

00:13:47.760

The process is accelerated through DragonRuby, significantly reducing our calibration times. I can implement changes quickly since hot loading allows direct access to our projectors.

00:14:09.600

Using our client application, we can manipulate the simulation environment easily. We can zoom in, rotate, and move items, allowing for real-time adjustments.
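
Zooming, rotating, and moving the projected scene amounts to a 2D transform from world coordinates to projector pixels. The sketch below is one plausible way to express it, not the client application's actual code; the function name, parameter names, and calibration values are all hypothetical.

```ruby
# Map a point in world coordinates (meters) to projector pixels.
# scale:    pixels per meter (zoom)
# angle:    rotation of the world frame relative to the screen, in radians
# offset_*: where the world origin lands on screen, in pixels
def world_to_screen(wx, wy, scale:, angle:, offset_x:, offset_y:)
  cos = Math.cos(angle)
  sin = Math.sin(angle)
  sx = scale * (wx * cos - wy * sin) + offset_x
  sy = scale * (wx * sin + wy * cos) + offset_y
  [sx, sy]
end

# Example calibration: 200 px per meter, world rotated 90 degrees,
# world origin at the center of a 1280x720 image.
CALIBRATION = { scale: 200.0, angle: Math::PI / 2, offset_x: 640.0, offset_y: 360.0 }

# A point 1 m along the world x-axis ends up 200 px above the screen center.
POINT = world_to_screen(1.0, 0.0, **CALIBRATION).map { |c| c.round(6) }
p POINT  # prints [640.0, 560.0]
```

With a fixed pixels-per-meter scale, a projected robot path has the same physical size on the floor as the path the real robot drives.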

00:14:29.440

A dynamic obstacle can be represented in our visualizations. This is crucial for testing how the robot interacts with its simulated surroundings.

00:14:44.160

In these simulations, you can see the robot responding to obstacles, like slowing down when approaching the blue box and waiting for it to pass.

00:15:01.680

This effectively demonstrates how we've utilized Ruby in our robotics simulations. Looking ahead, I want to visualize more sensor data—however, I'm unsure if DragonRuby can handle the performance requirements.

00:15:19.760

Additionally, I aim to scale our projector setup to cover the entire testing space, rather than just the six projectors currently mounted.

00:15:34.880

We also aim to implement hardware-in-the-loop simulation, enabling us to have virtual robots interacting with real robots in a mixed-reality scenario.

00:15:51.920

I think that wraps up what I wanted to discuss today. If there are any questions, I'm happy to answer them.

00:16:03.760

Sorry, the question was about visualization capabilities—yes, the Raspberry Pi is connected to the projector, allowing me to manipulate simulations directly.

00:16:18.080

Regarding dynamic obstacles, yes, we create a representation of these in simulations - essentially, the robot uses data from cameras and lidar sensors.

00:16:33.760

The data allows us to make quick adjustments, utilizing a min comparison and sending the result for further processing.
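
The talk does not spell out what the "min comparison" operates on. One plausible reading, sketched here purely as an assumption, is a per-beam minimum of the real lidar ranges and the ranges a virtual obstacle would produce, so that the robot sees whichever is closer. The function name and the numbers are made up for illustration.

```ruby
# Per-beam ranges in meters, one entry per lidar beam (made-up numbers).
real_ranges      = [4.0, 3.8, 3.9, 4.1, 4.0]
# Beams that miss the virtual obstacle report "infinitely far".
simulated_ranges = [Float::INFINITY, 2.5, 2.4, Float::INFINITY, Float::INFINITY]

# Keep the closer of the two readings for every beam.
def merge_ranges(real, simulated)
  real.zip(simulated).map { |r, s| [r, s].min }
end

MERGED = merge_ranges(real_ranges, simulated_ranges)
p MERGED  # prints [4.0, 2.5, 2.4, 4.1, 4.0]
```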

00:16:50.480

Camera data handling is more complex, but we convert information to cost maps, showing areas that are occupied or dangerous.
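
As a rough illustration of a cost map, the sketch below marks grid cells as occupied where an obstacle was detected and flags the surrounding cells as dangerous. It assumes obstacle positions have already been extracted as points in meters; how that is done from camera data is not described in the talk. The cost values, function name, and grid size are arbitrary.

```ruby
FREE, DANGER, OCCUPIED = 0, 50, 100  # cost values are arbitrary for this sketch

# points: [[x, y], ...] obstacle positions in meters inside a width x height area.
# resolution: side length of one grid cell in meters.
def build_cost_map(points, width:, height:, resolution:)
  cols = (width / resolution).ceil
  rows = (height / resolution).ceil
  grid = Array.new(rows) { Array.new(cols, FREE) }

  points.each do |x, y|
    col = (x / resolution).floor
    row = (y / resolution).floor
    next unless row.between?(0, rows - 1) && col.between?(0, cols - 1)

    # Raise the cell and its 8 neighbors to at least DANGER.
    (-1..1).each do |dr|
      (-1..1).each do |dc|
        r, c = row + dr, col + dc
        next unless r.between?(0, rows - 1) && c.between?(0, cols - 1)
        grid[r][c] = [grid[r][c], DANGER].max
      end
    end
    # The cell containing the obstacle itself is occupied.
    grid[row][col] = OCCUPIED
  end
  grid
end

COST_MAP = build_cost_map([[1.2, 0.7]], width: 2.0, height: 1.5, resolution: 0.5)
# Print with the top row last in the array shown first, like a map.
COST_MAP.reverse_each { |row| puts row.map { |c| c.to_s.rjust(3) }.join(" ") }
```

A grid like this is straightforward to draw as colored rectangles, which is what makes it a convenient format for projecting onto the floor.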

00:17:04.800

If there are any further questions, please let me know; we can also discuss more details offline if you are interested.

00:17:26.320

We’ve been shifting our focus away from hardware development to concentrate on software, partnering with larger companies for production.

00:17:34.320

Thank you very much for your time and attention!
