00:00:10.320

Hello everyone! Today, I'm going to talk about optimizing production performance with MRI JIT. Let me introduce myself first: my name is Takashi Kokubun. On the internet, especially on GitHub and Twitter, I use the account ID 'k0kubun'. I also work as a Ruby committer in my spare time, primarily focusing on the JIT compiler and maintaining ERB, and sometimes contributing to IRB by colorizing its output and introducing some useful commands.

00:00:45.360

Today, I'm going to discuss four main topics. First, I will talk about which MRI JITs we have today. After that, I will discuss how to tune JIT performance for Rails applications, since, in my view, Rails represents the typical production workload for Ruby. Then, I will explain how to warm up the MRI JIT so that it reaches its peak performance. Lastly, I will briefly discuss the future of MRI JIT.

00:01:25.520

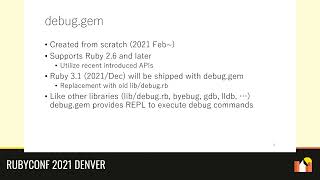

The first section is an introduction to MRI JIT. First of all, there are basically three JIT implementations to talk about at the moment. The first one is called MJIT, which was developed by Vladimir Makarov. It was merged in Ruby 2.6, and we have been using it ever since. It is not enabled by default, so you need to enable it with the `--jit` option. The main characteristic of MJIT is that it runs a C compiler to generate native code, meaning you must have a C compiler available at runtime for it to work.

00:02:26.560

Because it relies on a C compiler, it supports GCC, Clang, and Microsoft Visual C++. As long as one of these compilers is available, it works well, which makes it multiplatform. More recently, Shopify has been developing another JIT compiler called YJIT, which is currently under discussion to be merged into Ruby 3.1. By the time you watch this recording, it might already have been merged. YJIT is also planned to be opt-in, enabled by specifying an option.
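Since both JITs are opt-in, it can be useful to confirm from inside a running process which one, if any, is actually active. The following is a minimal sketch rather than an official recipe: `active_jit` is a hypothetical helper name, and it assumes that `RubyVM::MJIT.enabled?` and `RubyVM::YJIT.enabled?` are available, which depends on the Ruby version and build. Each lookup is guarded so the script still runs where a constant is missing.

```ruby
# Returns the name of the JIT that reports itself as enabled, or :none.
# Assumption: RubyVM::MJIT and RubyVM::YJIT exist only on some Ruby
# versions and builds, so every lookup is guarded with defined?.
def active_jit
  if defined?(RubyVM::YJIT) && RubyVM::YJIT.respond_to?(:enabled?) && RubyVM::YJIT.enabled?
    :yjit
  elsif defined?(RubyVM::MJIT) && RubyVM::MJIT.respond_to?(:enabled?) && RubyVM::MJIT.enabled?
    :mjit
  else
    :none
  end
end

puts "Ruby #{RUBY_VERSION}, active JIT: #{active_jit}"
```

Run without any JIT option, this should report `none`; starting Ruby with a JIT option supported by your build should change the reported value.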

00:03:01.680

Unlike MJIT, YJIT uses an in-process x86 assembler, so there is no need to launch a separate compiler process. MJIT warms up more slowly because of the overhead of invoking a C compiler process, whereas YJIT warms up more quickly because it generates native code in-process. Additionally, there has been some discussion about MIR, a JIT framework originally developed by Vladimir Makarov. It is designed to compile C code in-process without invoking a separate compiler process, thus removing that bottleneck.

00:04:45.360

The next topic is tuning JIT performance for Rails applications. This section begins with a graph shared by the Shopify team that compares YJIT and MJIT with non-JIT performance. Despite some improvements, MJIT often runs slower than no JIT at all. However, there are some tricks that lift its performance above non-JIT execution, and I will describe them in this section. With Ruby 3.0, you can actually see improvements over the virtual machine's performance by using features available in the newer versions.

00:06:20.400

Firstly, you need to use Ruby 3 to take advantage of these improvements, because Ruby 2.6 and 2.7 struggle to achieve good performance. In real-world applications, the cache efficiency of compiled methods is significantly better in Ruby 3; on Ruby 2, code is duplicated across different methods, which leads to poor caching. Even with Ruby 3, certain issues remain, so performance may not be consistent across all environments.

00:09:10.880

Moving on to garbage collection and compaction: compaction moves objects, which can be a problem when pointers to them are embedded in native code. For this reason, you need to be careful about running compaction while using methods compiled by MJIT. TracePoint handling has received optimizations as well, so that the virtual machine's instructions can still be compiled efficiently.

00:13:39.680

When it comes to warming up the JIT compiler, you should make sure methods are called enough times for the JIT to pick them up. For example, a method needs to be executed at least 10,000 times before it is JIT-compiled, so if your benchmark doesn't reach this threshold, it doesn't actually measure JIT performance.
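The warm-up requirement can be sketched as a benchmark that calls the method past the threshold before timing anything. This is only an illustration under stated assumptions: `hot_method`, `warm_up`, and `measure` are hypothetical names, and `JIT_MIN_CALLS` simply mirrors the 10,000-call figure mentioned above. Reaching the threshold does not by itself guarantee that compilation has already finished when the measurement starts.

```ruby
require "benchmark"

# Assumption: mirrors the 10,000-call threshold mentioned in the talk.
JIT_MIN_CALLS = 10_000

# Hypothetical workload standing in for a hot application method.
def hot_method(n)
  (1..n).sum { |i| i * 2 }
end

# Calls the method enough times to cross the threshold and
# returns how many warm-up calls were made.
def warm_up(calls)
  calls.times { hot_method(100) }
  calls
end

# Times the method only after warm-up; returns elapsed seconds as a Float.
def measure(iterations)
  Benchmark.realtime { iterations.times { hot_method(100) } }
end

warmed = warm_up(JIT_MIN_CALLS)
elapsed = measure(1_000)
puts "warm-up calls: #{warmed}, measured: #{elapsed.round(4)}s"
```

Keeping the warm-up and measurement phases separate makes it harder to accidentally time a run in which the method was never called often enough to be compiled.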

00:16:15.440

As a final point, I'll talk about the future of MRI JIT. You might be curious why we have multiple JIT compilers. Rather than competing with each other, these teams work towards shared improvements. For example, one idea being proposed is a multi-tiered JIT, which uses both a lightweight and a heavyweight compiler to optimize frequently used methods. However, the current state shows that the engine still needs further refinement to improve performance. In the long run, the focus will likely shift toward YJIT, which has demonstrated superior performance and has broader developer support.

00:27:32.320

In conclusion, while many benchmarks suggest that applications running with MRI JIT may underperform their non-JIT counterparts, careful tuning and configuration can yield significant speed improvements. We see this as a transition toward embracing and optimizing YJIT, in collaboration with the ongoing development efforts. Thank you for listening to my talk!