00:00:16.320

Okay, I'm going to try this from a PDF, and hopefully this works well. Thanks for being here, and thanks for having me in Portland. It's really great to be here with all of you.

00:00:22.320

This talk is about split testing for product discovery. I've never taken a business or an economics class,

00:00:27.760

so I'm sorry if I'm the worst person to do this. But just as a basic introduction on economics and as a thought experiment, let's imagine I'm selling dollar bills for 99 cents each. How many would you buy?

00:00:39.280

All of them is the correct answer, right? So, at least as many as you could possibly raise the money for. So let's take a step back and discuss brick-and-mortar businesses for a moment.

00:00:46.399

Let’s consider Stumptown Coffee Roasters down the street. They presumably make a dollar or more for every 99 cents they spend on goods and materials. But how far can that possibly scale? At some point, you hit a ceiling where profits can only go so high. Even if you have long lines stretching down the street, you can't serve customers any faster.

00:01:06.080

This reminds me of the old computer game "Lemonade Stand." In the game, you open a lemonade stand and people walk by, stopping to buy your lemonade. Eventually, they tell their friends, and more and more people show up, forming a line.

00:01:11.119

However, there comes a point when your costs scale linearly with demand. As people start tapping their feet in line, you might face delays. If a rainstorm comes and wipes out your lemons and water supply, you're in trouble.

00:01:30.479

Now, how does that contrast with an online business? If we take 99 cents and make a dollar every single time, is this any different than a brick-and-mortar business? I think it is, but it all comes down to scale. On the internet, unless we're trying to service the tiniest niche market, the scale exists. With pretty small margins, we can make decent profits.

00:01:47.680

We've seen some promising results from daily deals sites. It isn't always the case, but this is ultimately the holy grail for all web developers: we want to create a black box for our web business, where we funnel in dollar bills and print out two-dollar bills on the other side.

00:02:05.280

AB testing is one effective way to move towards that goal. Even though it’s a challenging one to ultimately obtain, the prospect of putting a dollar bill into our machine and getting two bucks back sounds like giving us a license to print money.

00:02:17.120

So, how do we achieve this as web developers? We discuss agile development, rapid iteration, and continuous deployment. What do all these things have in common? They're all essential for building software, and everyone on the team needs them, but the one thing they share is speed.

00:02:27.760

As agile software developers, we want to ship features as quickly as possible and see our users using them. We need data collection and analysis to align our efforts with measurable goals and ensure we're serving our business's bottom line.

00:02:53.520

AB testing is often seen as a fluffy task assigned to marketing or business teams, but I believe it can be a powerful tool to utilize your coding skills. It's essentially an optimization problem. You can analyze the flow of money, aiming to create trends with small improvements that over time can yield significant results.

00:03:05.120

By way of introduction, I'm Bryan Woods. This is a photo of me with a cockatoo I met in the Florida Everglades. I work at howaboutwe.com. We're located in Brooklyn, New York, in the Dumbo neighborhood.

00:03:18.239

This isn't actually the view from our office window, but it's pretty close. This is our current landing page. We're a dating site for singles, and we launched a new feature about six months ago targeting couples.

00:03:36.560

A common thread in discussing dating startups is the warning against launching a dating website due to market saturation and strong competition. Our competitors have deep pockets, and it's challenging because online dating is dominated by established services.

00:03:59.679

To stand out, we needed to outperform competitors with fewer resources, focusing on the data we're collecting and the testing we're conducting to drive success. The fact that we're a subscription service that requires payment is crucial in achieving this scale.

00:04:15.040

Let’s discuss basic AB testing. Most people think of simple cosmetic changes, typical examples include modifying button colors or header text on a webpage. This might seem trivial, but even minor tweaks can produce surprising results.

00:04:29.280

Going back to the optimization concept, while these basic changes might not make or break your business, achieving improvements of one, two, or even three percent can add up over time. It's possible to continuously test these aspects without a high ceiling.

00:04:49.199

Let's look at a fake graph for illustrative purposes. It shows that with consistent, minor AB tests, the improvements look small month over month but add up to significant progress over time, such as raising a conversion rate from ten to fifteen percent.
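To make the compounding concrete, here is a minimal sketch of the arithmetic behind a graph like that. The three percent monthly lift is an assumed figure chosen for illustration, not a number from our data, and `months_to_reach` is a hypothetical helper name.

```python
def months_to_reach(start_rate: float, target_rate: float, monthly_lift: float) -> int:
    """Count the months of compounding relative lift needed to reach target_rate."""
    if monthly_lift <= 0:
        raise ValueError("monthly_lift must be positive")
    rate = start_rate
    months = 0
    while rate < target_rate:
        # Each month's winning tests multiply the current rate by (1 + lift).
        rate *= 1 + monthly_lift
        months += 1
    return months


# Assumed example: a 10% conversion rate improving by a relative 3% per month.
print(months_to_reach(0.10, 0.15, 0.03))
```

Under that assumption the rate crosses fifteen percent in a little over a year, even though no single month looks dramatic.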

00:05:05.280

There are great tools available to facilitate this process. Services like Visual Website Optimizer and Optimizely let you implement changes quickly without the need for server-side code. Just drop a JavaScript snippet on the page and allow marketers and product people to optimize freely.

00:05:29.040

If your project uses Rails, I recommend looking into libraries like Vanity and A/Bingo, which also let you run deeper tests with specific goals in mind, such as testing which button text on the signup page works better.

00:05:42.639

Basic testing allows for flexibility with things like color changes or font selections—experiments that are relatively low-risk yet hold high potential rewards. Testing can begin early in a product's lifecycle, from the moment the MVP is launched.

00:06:03.040

As we dive deeper into this discussion, it’s crucial to determine what features our customers want. Each business may vary, but often there’s a product person who monitors market trends and customer needs, proposing features to build based on new ideas or user interest. Our task is to ascertain the value of such features and understand when to build them.

00:06:52.640

This leads to the question of what is the simplest version of a feature we can create to gauge interest? What’s the tiniest iteration we can put forth to see if it attracts any response or drives any traffic?

00:07:38.479

Understanding how much customers will pay for particular features, which features are free, and what requires an upgrade is essential. Additionally, one should consider whether it is viable to shift the paywall around. There's no reason not to challenge fundamental assumptions regarding your business model.

00:08:05.760

For example, we charge users to send messages in our application, but we’ve experimented with allowing free messaging under certain circumstances. This flexibility in the business model might not be trivial to implement, but it's critical for testing your market’s reactions.

00:08:23.359

Next, when and how often should we remarket to users? Are we over-saturating our customer base or missing sales opportunities? I've noticed a trend where you sign up for a new application and then receive a follow-up email from the founder two days later asking how you liked the service. If managed well, this can boost retention, but it can also annoy users.

00:08:40.079

Similarly, e-commerce stores often follow up on abandoned shopping carts, offering a discount on items left behind in an effort to reinvigorate interest. While it's vital to respect customers' attention, tracking data collected from these approaches enables insights on their effectiveness.

00:08:57.279

Moreover, product curiosity plays a huge role in shaping your features. Anytime you wonder whether users would like something, if you can design a simple AB test to measure its potential impact, it’s worthwhile to collect data on it.

00:09:25.440

Recently, we’ve focused on increasing the number of messages users receive rather than just the quantity they send. Since we charge users to send messages, we've found that incentivizing users to receive more messages leads to greater engagement.

00:09:56.160

One of our features, called 'Speed Date,' showcases new users with fresh profiles. If users click 'yes', they'll send a message, while if they click 'skip', nothing happens. Initially, this feature was aimed at re-engaging users who had been inactive for a while.

00:10:17.440

However, we realized that it didn't perform well because inactive users generally remain disengaged. Next, we considered showcasing newer, active users. Our analysis indicated that in larger markets such as New York and Los Angeles, users were more active, thereby increasing interaction.

00:10:39.199

Conversely, in smaller markets, such as Kansas, there were fewer users to display, which led to poor performance. Thus, we adjusted the algorithm to show newer users in larger cities while maintaining appropriate exposure for smaller ones.

00:10:54.480

Additionally, we wanted to encourage users to post more dates, enhancing their overall experience. Crafting a date isn't as straightforward as filling in a profile; it requires some creativity. For the first test, we tried a 'Surprise Me' button that auto-generates ideas based on the user's location.

00:11:14.560

For the second test, we provided users with idea prompts after they had filled in the date submission form. This encouragement also proved successful in engaging users.

00:11:41.440

At this point, we had a question: should we enforce user participation in posting dates? During the sign-up process, we included a modal prompting users to post a date as the last step, which they couldn't skip.

00:11:54.639

While this action feels awkward, it is crucial to engage new users who show intent. The goal is to facilitate user involvement, but it raises a point regarding best practices in user experience.

00:12:18.479

Best practices suggest limiting friction for users. However, if sufficient data indicates that a particular approach works, it may override traditional best practices. Your data becomes more relevant than common wisdom.

00:12:36.400

As this process unfolds, we aim to expand our conversion funnel by iterating on feedback loops. When users sign up, upload photos, and post dates, they're likely to receive messages, which could lead to subscriptions.

00:12:51.600

This entire process resembles a funnel, and I believe that every business operates within a conversion funnel. Businesses often over-simplify the concept, focusing solely on optimizing landing pages.

00:13:05.200

In truth, optimization opportunities exist all over your application. By widening your funnel’s base, you can enhance overall revenue and conversion rates.
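As a rough sketch of what looking at the whole funnel means, the snippet below computes the conversion rate at each step and end to end. The stage names follow the steps I just described; the counts are made-up placeholders, not our real numbers, and `step_rates` and `overall_rate` are hypothetical helper names.

```python
def step_rates(funnel):
    """Return (transition label, conversion rate) for each adjacent pair of stages."""
    rates = []
    for (prev_name, prev_count), (name, count) in zip(funnel, funnel[1:]):
        rates.append((f"{prev_name} -> {name}", count / prev_count))
    return rates


def overall_rate(funnel):
    """Conversion from the first stage to the last stage."""
    return funnel[-1][1] / funnel[0][1]


# Placeholder counts for illustration only.
funnel = [
    ("signed_up", 1000),
    ("uploaded_photo", 600),
    ("posted_date", 300),
    ("received_message", 150),
    ("subscribed", 30),
]

for label, rate in step_rates(funnel):
    print(f"{label}: {rate:.0%}")
print(f"overall: {overall_rate(funnel):.1%}")
```

Every transition is a place to run a test, and a lift at any step multiplies through to the final subscription rate.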

00:13:21.919

The results of AB testing aren’t surprising; we have measured significant boosts in both conversion rates and revenue from these practices. Now, let's touch on our technical implementation briefly.

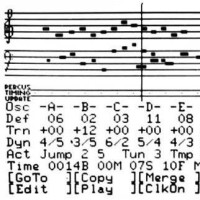

00:13:45.759

If I wanted to split users into A and B buckets for an experiment, the most naive solution might be to bucket each request by whether the current second is odd or even. This has numerous drawbacks, most notably inconsistency: the same user can see a different variant from one page load to the next.

00:14:05.440

The next logical solution we attempted assigned buckets by the parity of the user's ID. Each user now gets a consistent experience, but the same half of the user base lands in the test bucket of every experiment, which skews their experience and creates a disparity in interactions.

00:14:22.560

To tackle this, we devised a more effective solution. We take the user's ID from the users table and apply a hash function to it, so that each user is consistently assigned to a specific bucket.

00:14:40.240

This approach allows us to determine consistently if a user is part of a specific experiment bucket.
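A minimal sketch of hash-based bucketing might look like the following. It is not our actual code: the choice of SHA-256, the idea of mixing the experiment name into the key, and the name `bucket_for` are all assumptions made for illustration.

```python
import hashlib


def bucket_for(experiment_name: str, user_id: int, num_buckets: int = 2) -> int:
    """Deterministically map a user to a bucket for one experiment.

    Assumption: hashing the experiment name together with the user ID means
    the same user can land in different buckets for different experiments.
    """
    key = f"{experiment_name}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    return int(digest, 16) % num_buckets


# The same user always gets the same answer for the same experiment.
print(bucket_for("free_messaging", 42) == bucket_for("free_messaging", 42))
```

Because the result depends only on the inputs, nothing needs to be stored per user, and unlike ID parity the split is reshuffled for every experiment name.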

00:14:48.560

Good news if you're interested in any of this: we've released this experiment code as open-source software on GitHub, along with a suite of convenience methods for managing user partitioning.

00:15:06.960

Our implementation lets us start and end experiments directly from an admin dashboard, which has proved invaluable, especially one weekend when an experiment set prices far too high.

00:15:23.600

This miscalculation showed that we must have the capability to easily end experiments at any time to avoid significant losses.
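The kill switch can be sketched roughly like this. It is an in-memory stand-in for whatever an admin dashboard would toggle; the `Experiments` class, its method names, and the experiment name are hypothetical and are not the API of our open-source code.

```python
import hashlib


class Experiments:
    """Tracks which experiments are running; ended experiments serve control."""

    def __init__(self):
        self._active = set()

    def start(self, name: str) -> None:
        self._active.add(name)

    def end(self, name: str) -> None:
        # discard() is a no-op if the experiment was never started.
        self._active.discard(name)

    def variant(self, name: str, user_id: int) -> str:
        # An experiment that is not running always falls back to control.
        if name not in self._active:
            return "control"
        digest = hashlib.sha256(f"{name}:{user_id}".encode("utf-8")).hexdigest()
        return "test" if int(digest, 16) % 2 == 1 else "control"


experiments = Experiments()
experiments.start("weekend_pricing")
experiments.end("weekend_pricing")  # one call puts every user back on control
print(experiments.variant("weekend_pricing", 7))
```

The design point is that ending an experiment is a data change rather than a deploy, so a bad test can be stopped in seconds.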

00:15:39.920

Looking to the future, I’d love to improve our system further by adding goal-tracking features that allow us to focus on specific performance metrics during tests.

00:15:56.480

Additionally, we may explore implementing statistical significance capabilities to monitor this in real-time on our admin dashboard. Some visualizations could provide insight into how the changes perform over time.

00:16:37.679

Moving on to technical debt: every AB test you run roughly doubles the complexity of the code it touches, because the application now has to work correctly in both variants. It's essential to keep that in mind.

00:16:54.759

The need for rigorous automated testing remains paramount. All code must conform to consistent standards, as this will clarify expectations for code behavior after introducing any radical transformations.

00:17:25.680

Another core consideration is to keep AB test implementations isolated. We aim to limit any overlapping tests within the application to ensure clarity.

00:17:38.560

Additionally, it’s critical to discard any code from tests that failed to generate results; these remnants can lead to confusion and complicate the rationale behind application behavior.

00:17:54.639

Designing with the principles of easy revertibility is vital. Ideally, rolling back test implementations should align with a simple commit revert process.

00:18:11.760

While this is often straightforward, occasionally, other revisions may complicate the process, but maintaining a clear trajectory is very beneficial.

00:18:27.440

Lastly, don’t get emotionally invested in your features. You might spend several days on a specific feature, only to realize it isn't performing, and it must be removed.

00:18:45.280

This failure is frustrating; however, the essential point is to maintain objectivity, evaluate results, and pivot promptly when necessary.

00:19:05.360

Statistical significance is crucial for evaluating your tests. Implementing proven statistical algorithms and confidence intervals will help verify genuine trends in your outcomes.
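One common way to check this is a two-proportion z-test on the conversion counts of the two buckets. The sketch below is an assumption about how one might do it, not a description of our dashboard; the function name and the sample counts are hypothetical.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that A and B convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0, 1.0
    z = (p_b - p_a) / se
    # Two-sided tail probability of the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Made-up counts: 100 of 1000 users convert in A, 130 of 1000 in B.
z, p = two_proportion_z_test(100, 1000, 130, 1000)
print(f"z = {z:.2f}, p = {p:.3f}")
```

A small p-value suggests the difference is unlikely to be noise, but the threshold and the sample size should be decided before the test starts rather than after peeking at the results.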

00:19:44.680

In addition to utilizing rigorous metrics, we also rely heavily on cohort metrics to analyze user engagement and track overall site growth. Analyzing the impacts of our testing on long-standing users will illuminate how alterations affect various user demographics.

00:20:23.159

As a dating site, we’re mindful of parameters like gender ratios and user demographics, as these factors crucially shape our user experience. Maintaining a healthy ratio has proven beneficial, and monitoring these during testing can help us quickly identify any anomalies.

00:20:44.640

Utilizing a daily email alert system to track all ongoing AB tests helps ensure we remain fully informed regarding test performance.

00:21:00.160

In regard to testing outcomes that result in ties or inconclusive results, my advice is to err on the side of eliminating any new test code.

00:21:07.920

Removing the test code helps prevent unnecessary complexity and avoids contributing to technical debt.

00:21:21.520

However, if the change incrementally moves you closer to your ultimate goals, it's worth reevaluating the tie rather than removing the code by default.

00:21:39.760

Ultimately, experimentation has led to several unexpected benefits in our company. One primary advantage is a reduction in time spent debating potential feature implementations.

00:21:50.399

Instead of arguing whether it's worth investing resources into building a feature, we’ve shifted toward evaluating its worthiness for testing instead.

00:22:06.399

This shift has streamlined discussions and reduced hesitation around implementing ideas, allowing us to explore avenues previously deemed too risky.

00:22:22.160

Moreover, our testing regimen armors us against conflicting customer feedback. We adore customer feedback, but it often complicates decision-making.

00:22:38.320

Customers tend to voice strong opinions, making decisive execution difficult. In our case, many users ask us to make our dating site free. Yet our testing showed that markets where we offered a freemium model didn't grow faster, as some had expected.

00:23:12.799

If we had simply complied with those requests without testing, it would’ve aggravated operational stress without yielding positive results.

00:23:29.680

Finally, we acknowledge that revenue enhances sustainability. To summarize, rapid development lacks efficacy without direction.

00:23:52.160

Speed matters, yet tracking data ensures essential realignment toward measurable goals.

00:24:05.360

Small, compounded improvements can yield significant results over time through dedicated AB testing methodologies. It's essential not to be discouraged if progress seems slow; consistency is key.

00:24:32.560

Data empowers you to streamline conversations by removing the uncertainty from debates over feature implementations. Utilize the various tools available for testing; everything from web optimizers to open-source solutions can be leveraged.

00:24:45.960

Approach testing with rigor and an eagerness to explore even the wildest ideas you want to test. Hold your experiments to rigorous standards, so that code quality and the overall sustainability of the app don't suffer.

00:25:00.960

Thank you all for your time and engagement.
