00:00:05.940

This talk is called "Debugging Techniques for Uncertain Times."

00:00:10.800

It’s by Chelsea Troy, which is me.

00:00:14.700

You can reach me at ChelseaTroy.com, or you can reach out to me on Twitter at @HeyChelseaTroy.

00:00:27.180

Before I was a software engineer, I was almost everything else. I coached rowing at a high school in Miami.

00:00:33.960

I blogged for a startup whose business model turned out to be illegal. I tended bar and performed stand-up comedy. I danced with fire on haunted riverboats. I edited a quack psychology magazine.

00:00:50.039

I did open source investigations into international crime rings. All that sounds very fun and exciting in hindsight, but at the time, it wasn't a fun journey of self-discovery.

00:01:04.500

I was compelled to adapt at frequent intervals in order to stay afloat. I got into software engineering for the job security, not out of passion for programming.

00:01:16.200

However, some of the coping mechanisms that I learned from those frequent adaptations followed me into the programming world. It turns out, the skills that equip us to deal with rapid, substantial changes in our lives also make us calmer and more effective debuggers.

00:01:32.820

Debugging, in my opinion, doesn't get the attention it deserves from the programming community. We imagine it is this amorphous skill, one we rarely teach, for which we have no apparent praxis or pedagogy.

00:01:51.659

Instead, we teach people how to write features: how to build something new in software we know, when we understand what the code is doing and when we have certainty.

00:02:15.720

I suspect you've watched a video or two about programming. If I didn't know better, I'd say you're watching one right now.

00:02:23.700

This talk doesn’t deal in code examples, but I suspect you’ve seen demos where speakers share code on their screens or demonstrate how to do something in a codebase during a video recording.

00:02:31.500

Here’s the dirty secret, and I suspect you already know it: when we sling demos on stage or upload them to YouTube, that's definitely not the first time we've written that code. We've probably written a feature like that one in production before, then we modified it to make it fit in a talk or a video.

00:02:48.660

We then practice over and over and over, minimizing all mistakes and error messages, learning to avoid every rake just for that code. And sometimes, in recording, we still mess it up. We pause the recording, we back it up, and we do it again until it's perfect.

00:03:14.340

We know what we're writing, and that’s what gets modeled in programming education. But that’s not the case when we’re writing code on the job.

00:03:39.600

In fact, many of us spend most of our time on the job writing something that’s a bit different from anything we’ve done before. If we had done this exact thing before, our clients would be using the off-the-shelf solution that we wrote the first time, not paying our exorbitant rates to have it done custom.

00:04:05.400

We spend the lion's share of our time outside the comfort zone of code we understand. Debugging feels hard in part because we take skills that we learn from feature building in the context of certainty and attempt to apply them in a new context.

00:04:20.419

A context where we don't understand what our code is doing, where we are surrounded by uncertainty. And that is the first thing we need to debug effectively: we need to acknowledge that we do not already understand the behavior of our code.

00:04:53.280

This sounds like an obvious detail, but we often get it wrong, and it adds stress that makes it harder for us to find the problem. Because we've only seen models where the programmer knew what was going on, we think we're supposed to know what’s going on, and we don’t. We'd better hurry up and figure it out.

00:05:20.160

But speed is precisely the enemy with insidious bugs. We’ll get to why later. I struggled with this same thing in my decade of odd jobs. I felt inadequate, unfit for adulthood because I didn't know how to do my taxes or find my next gig or say the right thing to my family.

00:05:44.460

Failing enough times over a long enough period made me realize that not understanding is normal—or at least, it’s my normal. I learned to notice and acknowledge my insecurity and not let it dictate my actions. When my feelings of inadequacy screamed at me to speed up, that's when I most needed to slow down.

00:06:34.560

I needed to figure out why exactly I wasn't getting what I expected. I needed to get out of progress mode and into investigation mode.

00:06:48.000

And this is the second thing we need to debug effectively: we need to switch modes when we debug from focusing on progress to focusing on investigation.

00:07:20.520

The most common debugging strategy I see looks something like this: we try our best idea first, and if that doesn’t work, our second best idea, and so forth. I call this the standard strategy. If we understand the behavior of our code, then this is often the quickest way to diagnose what’s going on, so it is a useful strategy.

00:08:00.780

The problem arises when we don't understand the behavior of our code, and we keep repeating this strategy as if we do. We hurt our own cause by operating as if we understand code when we don’t.

00:08:35.220

In fact, the less we understand the behavior of our code, the lower the correlation between the things we think are causing the bug and the thing that’s really causing the bug. This leads us to circle among ideas that don’t work because we're not sure what’s happening, but we don’t know what else to do.

00:09:10.800

Once we’ve established that we do not understand the behavior of our code, we need to stop focusing on fixing the problem and instead ask questions that help us find the problem. By ‘the problem,’ I mean the specific invalid assumptions we are making about this code: the precise place where we are wrong.

00:09:49.380

Let me show you a couple of examples of how we might do that. We could use a binary search strategy. In this strategy, we assume the code follows a single-threaded linear flow from the beginning of execution to the end of execution, or to the point where the bug happens.

00:10:46.560

We choose a spot more or less in the middle and run tests on the pieces that would contribute to the code flow. And by 'test,' I don’t necessarily mean an automated test, though that’s one instrument we can use to do this.

00:11:27.000

By 'test' in this case, I mean the process of getting feedback as fast as possible on whether our assumptions about the state of the system at this point match the values in the code.
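As a minimal sketch of the binary search strategy, assume a hypothetical four-step pipeline in which each step feeds the next, plus a list of the values we expect after each step. The function names, the steps, and the `EXPECTED` list are all invented for illustration. The search also assumes that once the state goes wrong it stays wrong downstream.

```python
from typing import Callable, List

Step = Callable[[int], int]

# Hypothetical linear pipeline; the third step contains the planted bug.
def add_one(x: int) -> int:
    return x + 1

def double(x: int) -> int:
    return x * 2

def add_three(x: int) -> int:
    return x - 3  # bug: we believe this adds 3

def times_ten(x: int) -> int:
    return x * 10

STEPS: List[Step] = [add_one, double, add_three, times_ten]
# What we assume the state is after each step, starting from 5.
EXPECTED: List[int] = [6, 12, 15, 150]

def run_through(steps: List[Step], state: int, upto: int) -> int:
    """Run the first `upto` steps and return the resulting state."""
    for step in steps[:upto]:
        state = step(state)
    return state

def find_first_bad_step(steps: List[Step], expected: List[int], initial: int) -> int:
    """Return the index of the first step whose output breaks our expectation.

    Assumes the bug is visible by the last step and that a wrong state
    stays wrong in every later step.
    """
    lo, hi = 0, len(steps) - 1
    while lo < hi:
        mid = (lo + hi) // 2
        actual = run_through(steps, initial, mid + 1)
        if actual == expected[mid]:
            lo = mid + 1  # still matches our assumption; the bug is later
        else:
            hi = mid      # already wrong here; the bug is here or earlier
    return lo

bad = find_first_bad_step(STEPS, EXPECTED, 5)
print(f"first step that breaks our expectation: {STEPS[bad].__name__}")
```

Each pass through the loop is one "test" in the sense described above: a quick comparison between what we assumed and what the system actually holds at that point.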

00:12:30.600

It’s not just that insidious bugs come from inaccurate assumptions; it's deeper than that. Insidiousness, as a characteristic of bugs, comes from inaccurate assumptions.

00:12:53.940

We’re looking in the code when the problem is rooted in our understanding. It takes an awfully long time to find something when we’re looking in the wrong place.

00:13:44.520

It’s hard for us to detect when our assumptions about a system are wrong because it’s hard for us to detect when we’re making assumptions at all. Assumptions, by definition, describe things we’re taking for granted. They include all the details into which we are not putting thought.

00:14:35.100

We're sure that a certain variable has to be present at this point. I mean, the way this whole thing is built, it has to be. But have we checked? Well, uh, no.

00:15:17.760

We never thought to do that. We never thought of this as an assumption; it’s just the truth. But is it?

00:15:48.760

This is where fast feedback becomes useful. We can stop, create a list of our assumptions, and then use the instruments at our disposal to test them.
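One way to make that list concrete is to write each assumption down next to a check that can confirm or refute it. The sketch below is only an illustration: the `state` dictionary and the three assumptions are hypothetical stand-ins for whatever is in scope at the point we are inspecting.

```python
from typing import Any, Callable, Dict, List, Tuple

Assumption = Tuple[str, Callable[[Dict[str, Any]], bool]]

def check_assumptions(state: Dict[str, Any], assumptions: List[Assumption]) -> List[str]:
    """Return the descriptions of the assumptions that do not hold for `state`."""
    failed = []
    for description, holds in assumptions:
        if not holds(state):
            failed.append(description)
    return failed

# Hypothetical snapshot of the program state at the point we are inspecting.
state = {"user_id": 42, "cart": [], "currency": None}

# Things we were "sure" about, written down so they can actually be checked.
assumptions: List[Assumption] = [
    ("user_id is present", lambda s: s.get("user_id") is not None),
    ("cart is a list", lambda s: isinstance(s.get("cart"), list)),
    ("currency has been set", lambda s: s.get("currency") is not None),
]

failed = check_assumptions(state, assumptions)
print("assumptions that did not hold:", failed)
```

Here the third assumption fails, which is the kind of thing we would never have looked at if it had stayed in our heads as "just the truth."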

00:16:31.680

Automated tests are one such instrument. Tests allow us to run a series of small feedback loops simultaneously. We can check lots of paths through our code quickly and all at once.

00:16:52.680

Tests aren't inherently a more moral way to develop software or anything like that; they just do really well on the key metric that matters to us in quality control: the tight feedback loop.
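To illustrate checking several paths at once, here is a small sketch using Python's standard `unittest` module. The function under test, `normalize_email`, is a hypothetical example and not something from the talk.

```python
import unittest

def normalize_email(raw: str) -> str:
    """Hypothetical function under test: trim whitespace and lowercase."""
    return raw.strip().lower()

class NormalizeEmailTest(unittest.TestCase):
    def test_many_paths_at_once(self):
        # Each pair is one small feedback loop: input and expected output.
        cases = [
            ("a@example.com", "a@example.com"),
            ("  A@Example.com ", "a@example.com"),
            ("\tB@EXAMPLE.COM\n", "b@example.com"),
        ]
        for raw, expected in cases:
            # subTest reports each failing case separately instead of
            # stopping at the first one.
            with self.subTest(raw=raw):
                self.assertEqual(normalize_email(raw), expected)

def run_suite() -> bool:
    """Run the test case and report whether every check passed."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(NormalizeEmailTest)
    result = unittest.TextTestRunner(verbosity=0).run(suite)
    return result.wasSuccessful()

if __name__ == "__main__":
    print("all checks passed:", run_suite())
```

The value here is the speed of the loop: one command re-checks every listed path in well under a second.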

00:17:22.920

Manual run-throughs are another instrument. Developers start doing this almost as soon as they start to write code, and we continue to do it when we want to check things out.

00:17:57.240

Breakpoints allow us to stop the code at a specific line and open a console to look at the variables in scope at that point.

00:18:04.920

We can even run methods in scope from the command line and see what happens. Print statements are useful if breakpoints aren't working, or if the code is multi-threaded or asynchronous.

00:18:26.760

In such cases, we don’t know whether the buggy code will run before or after our breakpoint. Print statements can come in really handy. Logging is valuable for deployed code or code where we can’t access standard output.
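As a rough sketch of print-statement debugging in threaded code, the example below tags every message with the name of the thread that emitted it, so the interleaving is visible in the output. The `trace` helper and the `worker` function are hypothetical, and the order of lines can differ from run to run.

```python
import threading
import time
from typing import List

events: List[str] = []
events_lock = threading.Lock()

def trace(message: str) -> None:
    """Print-style debugging: tag each message with the emitting thread."""
    line = f"[{threading.current_thread().name}] {message}"
    with events_lock:          # keep a record we can inspect afterwards
        events.append(line)
    print(line, flush=True)

def worker(item: int) -> None:
    trace(f"start item={item}")
    time.sleep(0.01)           # stands in for real work
    trace(f"done item={item}")

threads = [
    threading.Thread(target=worker, args=(i,), name=f"worker-{i}")
    for i in range(3)
]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(f"{len(events)} trace lines recorded")
```

Unlike a breakpoint, the prints do not pause any thread, so they show the order in which things actually happened.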

00:19:15.600

We might need more robust logging instead. A bonus is that a more permanent logging framework can help us diagnose issues after the fact.
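A minimal sketch with Python's standard `logging` module follows. The logger name `checkout` and the `apply_discount` function are hypothetical, and an in-memory stream stands in for a real log file so the example stays self-contained.

```python
import io
import logging

# An in-memory stream stands in for a log file or log aggregator.
log_stream = io.StringIO()
handler = logging.StreamHandler(log_stream)
handler.setFormatter(logging.Formatter("%(levelname)s %(name)s %(message)s"))

logger = logging.getLogger("checkout")  # hypothetical component name
logger.setLevel(logging.DEBUG)
logger.addHandler(handler)
logger.propagate = False  # keep the example's output in our own stream

def apply_discount(total: float, percent: float) -> float:
    """Hypothetical function that records its inputs for later diagnosis."""
    logger.debug("apply_discount total=%s percent=%s", total, percent)
    if not 0 <= percent <= 100:
        logger.warning("percent out of range: %s", percent)
    return total * (100 - percent) / 100

apply_discount(200, 150)
print(log_stream.getvalue())
```

Because the inputs are recorded at the moment of the call, we can read the log later and see which assumption about `percent` was violated, without reproducing the bug first.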

00:19:32.760

Changing small things is another instrument. If I think I know how a variable works, I can change its value a little bit and predict how the program should react, and then see if it matches.

00:19:52.080

This helps to establish my understanding of what’s in scope and which code is affecting what.
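Here is one way that predict-then-check loop might look in code. The `shipping_cost` function and its numbers are made up for illustration; the point is writing the prediction down before running anything.

```python
from typing import Any, Callable, Dict

def shipping_cost(weight_kg: float, rate_per_kg: float = 2.0, flat_fee: float = 5.0) -> float:
    """Hypothetical code under investigation."""
    return flat_fee + rate_per_kg * weight_kg

def prediction_holds(
    fn: Callable[..., float],
    base_args: Dict[str, Any],
    changed_args: Dict[str, Any],
    predicted_delta: float,
    tolerance: float = 1e-9,
) -> bool:
    """Change an input a little and compare the result with our prediction."""
    actual_delta = fn(**changed_args) - fn(**base_args)
    return abs(actual_delta - predicted_delta) <= tolerance

# Belief 1: one extra kilogram adds exactly the per-kg rate.
belief_rate = prediction_holds(
    shipping_cost, {"weight_kg": 3}, {"weight_kg": 4}, predicted_delta=2.0
)

# Belief 2: doubling the weight doubles the cost, so the increase from
# 3 kg to 6 kg should equal the full 3 kg cost.
belief_doubling = prediction_holds(
    shipping_cost, {"weight_kg": 3}, {"weight_kg": 6},
    predicted_delta=shipping_cost(3),
)

print("rate belief holds:", belief_rate)
print("doubling belief holds:", belief_doubling)
```

The second prediction fails because of the flat fee, which shows a gap in our mental model of what affects the result.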

00:20:01.500

Now here’s where assumption detection comes into play. We’re likely to thoughtlessly assume that we know things at this point—that variable X should be this, that that class should be instantiated, etc.

00:20:22.200

This is where insidious bugs like to hide—in the stuff we’re not checking.

00:20:43.800

This is the third thing we need to debug effectively: the ability to identify what is the truth and what is our perspective.

00:20:56.840

I cannot tell you how many things in those early years of my independent life I knew beyond a shadow of a doubt to be true.

00:21:17.500

And maybe, just maybe, in a vanishingly small fraction of cases, I was half right.

00:21:36.660

But in all the other cases, learning to differentiate between my views and empirical evidence, and learning to reconsider my perspectives, has been my key to leveling up everywhere in my life.

00:22:31.320

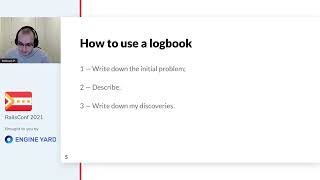

So let's whip out our programming journals and try an exercise to help us learn to detect and question our assumptions.

00:22:48.960

At each step represented by a rounded box in one of our debugging flow charts, we'll write down what step of the process we're checking and then make a list for assumptions and a list for checks.

00:23:20.520

In this example, the given section attempts to explicitly state our assumptions—the things we are not checking. The checking section lists the things we are checking, and we can mark each one with a check mark or an X depending on whether they produce what we expect.

00:23:53.960

This exercise seems tedious, right up until we've checked every possible place in the code and all our checks are working, but the bug still happens.

00:24:07.800

At that point, it's time to go back and assess our givens one by one. I recommend keeping these notes.
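For anyone who prefers to keep these notes in code rather than on paper, the journal entry could be sketched as a small data structure. The class names, field names, and the sample entry below are assumptions made for illustration, not a format prescribed in the talk.

```python
from dataclasses import dataclass, field
from typing import List, Optional

@dataclass
class Check:
    description: str
    passed: Optional[bool] = None  # None until the check has been run

@dataclass
class JournalEntry:
    step: str                                         # which step we are examining
    givens: List[str] = field(default_factory=list)   # what we are NOT checking
    checks: List[Check] = field(default_factory=list) # what we ARE checking

    def render(self) -> str:
        marks = {True: "OK", False: "X", None: "?"}
        lines = [f"Step: {self.step}", "Givens:"]
        lines += [f"  - {g}" for g in self.givens]
        lines.append("Checks:")
        lines += [f"  [{marks[c.passed]}] {c.description}" for c in self.checks]
        return "\n".join(lines)

def givens_to_revisit(entries: List[JournalEntry]) -> List[str]:
    """If every check passed but the bug persists, the givens are the suspects."""
    all_passed = all(c.passed for e in entries for c in e.checks)
    return [g for e in entries for g in e.givens] if all_passed else []

entry = JournalEntry(
    step="after the request is parsed",
    givens=["the config file was loaded", "the user object is not None"],
    checks=[
        Check("request body is valid JSON", passed=True),
        Check("order total is positive", passed=True),
    ],
)
print(entry.render())
print("revisit:", givens_to_revisit([entry]))
```

When every check is marked as passing and the bug is still there, `givens_to_revisit` hands back the list of things we took for granted, which is where to look next.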

00:24:54.240

How often do bugs thwart us for long periods and end up hiding in our assumptions? What can we learn from this about spotting our assumptions and which of our assumptions run the highest risk of being incorrect?

00:25:17.000

At each check, whether we find something amiss or not, with a binary search, we should reduce the problem space by half.

00:25:38.760

Hopefully, that way, we find the cause of our insidious bug in relatively few steps. But what we are establishing with notes like these is a pattern of what we think and where it lines up with a shared reality.

00:26:10.740

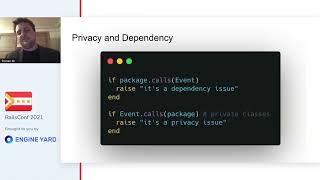

I should mention there are cases where binary search won't work, namely, cases where the code path doesn't follow a single-threaded linear flow from the beginning of execution to the end.

00:26:37.920

In those cases, we may need to trace the entire code path from beginning to end ourselves. But the concept remains: we explicitly list our assumptions and checks, and we question our code the way we would question an expert witness, gathering answers that lead us to the defect.

00:27:12.840

We are training our brains to spot our own assumptions. We know it’s working if our list of givens starts getting longer. We should especially remember to include givens that turned out not to be what we thought when we hunted down previous bugs.

00:27:43.560

It is specifically this intuition that we are building when we get better at debugging through practice. However, because we neither practice it deliberately nor generalize the skill to other languages and frameworks, our disorganized approach to learning debugging from experience tends to limit our skills to the stacks we have written in.

00:28:08.220

By instead identifying common patterns in the assumptions we tend to make that end up being wrong and causing bugs, we can improve our language-agnostic debugging intuition.

00:28:45.420

This is the final thing we need to debug effectively: the ability to see how the things we're doing now serve longer-term goals.

00:29:31.860

I am an expert at stressing myself out about things. I started young. On a trip to Disney World, my mom remembers changing my diaper on a bench as I cried, "Careful, careful!", afraid she’d let me roll off.

00:30:28.560

I continued my winning streak of stress throughout high school, where I decided that college acceptances would determine my fate in life.

00:30:36.600

Afterward, I sensationalized the results of tests, sports competitions, and job interviews as make-or-break moments.

00:31:03.600

I have since learned to see no particular moments as make-or-break. I have taken the power back from my evaluators.

00:31:52.560

If I go to an interview now, my goals are to meet someone and to learn something, whether or not I get the job. I leave with more understanding than I went in with, and in that sense, I have succeeded.

00:32:03.840

Everything is in service to something else that's coming, so that even if I fail, I have taken a step forward.

00:32:23.280

In that same way, every insidious bug presents a golden opportunity to teach us something.

00:32:30.600

Maybe we hate what we learn; that’s okay. We know it now, and we can use it to save trouble for someone else later.

00:32:52.920

Or maybe we learn something deep and insightful that we can carry with us to other codebases, to other workplaces, or maybe even home to our hobbies and our loved ones.

00:33:05.880

But either way, we get to hone our skills at conversing with code and at navigating uncertainty in our lives.

00:33:20.880

We can practice acknowledging what we don’t understand, learning to slow down, differentiating our views from a shared reality, and finding ways to keep moving forward.

00:33:40.920

Spending time on those skills is a pretty good investment. Thank you.