00:00:05.660

Hi everyone. I'm sorry I can't be there to see you in person, but this is the best we can do, so let’s make the best of it. I'd like to talk to you today about the rising ethical storm in open source.

00:00:14.880

For those of you who don't know me, my name is Coraline Ada Ehmke, and I'm a big-time troublemaker. I fought for codes of conduct at tech conferences in the early 2010s. In 2014, I created the Contributor Covenant, the first and most popular code of conduct for open source communities in the world. In 2016, I was honored with the Ruby Hero award. I spoke at the United Nations Forum on Business and Human Rights about human rights abuses by tech companies. In 2019, I authored the Hippocratic License, an ethical open source license based on the UN Universal Declaration of Human Rights, and last year, I founded the Organization for Ethical Source.

00:01:01.500

I want to tell you a story. In the 1960s, amid growing tensions between the U.S. and the Soviet Union, a computer scientist published a short piece called "The Parable of the Locksmith," and this is my retelling.

00:01:14.760

One day, a curious stranger walked into a locksmith shop with a proposition. "I have a job that needs doing and requires someone with your highly specialized skills," he said. "I've done my research, and you're one of the smartest and most capable locksmiths in the city." The locksmith felt flattered and more than a little intrigued.

00:01:29.820

The man continued, "I want to hire you to open a certain safe. Never mind whose safe it is; that's none of your concern. Just do the job and I will make you rich beyond your wildest dreams." The locksmith was excited at the prospect of a lucrative job, but he was also a bit nervous about not knowing who the safe belonged to. It seemed suspicious.

00:01:44.880

But the stranger added, "There are also certain conditions you'll have to agree to. I'm going to blindfold you and take your phone away before bringing you to the location of the safe, and you can never tell anyone that I hired you." The locksmith thought this was very odd, but he considered the man's promise of wealth. He felt as if he'd struggled all his life and was never properly rewarded for his hard work.

00:02:06.120

The stranger said, "You can have all the tools you need to do the job, the very best tools; I will spare no expense. Take your time. I'll be back for your answer tomorrow." Despite his hesitation about the nature of the job, the locksmith spent all night thinking about his cramped apartment, the shabby furniture, and his young daughter's dream of one day going to college. He and his family had learned to scrimp and save just to get by, and anyway, he thought to himself, if he didn't take this job, the stranger would just go to another locksmith—the second-best locksmith.

00:02:30.000

The next day when the stranger returned, the locksmith agreed to take the job. After multiple blindfolded trips to and from the unknown location, the locksmith finally cracked the safe. He wasn't allowed to see what was inside; the stranger blindfolded him again before the lock clicked open. True to his word, the stranger made the locksmith exceedingly rich.

00:02:49.320

In just a few minutes, we're going to come back to this parable and find out what happened when the safe was opened. First, I want to tell you another story.

00:03:02.160

This is a Xerox laser printer, one of the first laser printers on the market. Around 1980, a man named Richard Stallman was working at the MIT Artificial Intelligence Lab, which had access to one of these printers. It was cutting-edge technology, but the only problem was that it constantly jammed.

00:03:14.400

The lab had time-sharing software that let you book time on various resources, including the printer. But if you had booked the printer for 30 minutes and it jammed just three minutes in, you'd be frustrated. Stallman and his co-workers decided to change the printer driver so that it would report jams back to the time-sharing software, notifying users about the issues.

00:03:35.580

However, the software was proprietary, and Xerox wouldn't share the source code. Stallman found out that a researcher at Carnegie Mellon had the source code, but that person had signed a non-disclosure agreement and couldn't share it. Stallman became really angry, not just about the printer, but about the fact that the world was shifting toward proprietary software. This incident with the printer would lead to the creation of the Free Software Movement.

00:03:53.580

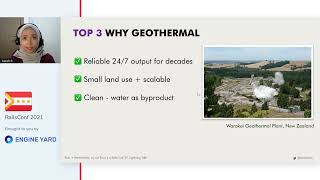

In the late 90s, when the world discovered the Internet, free software became a popular choice for web servers. Apache became the most used web server software, with many systems based on a stack called LAMP: the Linux kernel at the base, Apache providing web services, MySQL for storage, and PHP for dynamic pages—all of which were open-source technologies.

00:04:01.500

Christine Peterson coined the term "open source" in 1998, and in that same year, the open-source definition was penned by Bruce Perens. Nine months later, the Open Source Initiative was founded to promote the use of open-source software. Over the past 20 years, the open-source community has thrived, enjoying wild success and permanently changing the technology landscape.

00:04:21.180

But the world has also changed in the last two decades. Around the globe, we're seeing technology being leveraged to commit human rights abuses on an alarming scale. The technology powering some of these abuses includes free and open-source software.

00:04:40.440

Open source today plays an increasing role in mass surveillance, anti-immigrant violence, protest suppression, racially biased policing, and the development and use of cruel and inhumane weapons. The complicity of open source in these abuses isn't a bug; it's a feature, and it's by design.

00:04:58.800

The open-source definition specifically allows for the use of software for any purpose, including evil. Its defenders say that giving everyone freedom means giving evil people freedom, too. But under what circumstances in human society do we grant complete freedom to evil people? Why is it different with software?

00:05:13.920

There’s an increasing discussion among developers about our ethical responsibilities as creators. The debates are heated and the media has been paying attention. The fundamental question seems to be: Are we responsible for how the technologies we develop are used?

00:05:30.500

Many of us are beginning to accept that yes, our work in open source is contributing to human rights violations in the U.S. and around the world. We're horrified by what's happening, and we're horrified at the thought that we may be contributing to it. We feel powerless; we want to find some way to do the right thing.

00:05:45.959

Conversations about ethics in technology and computer science are not new. They've been going on in our fields since before there was any such thing as software. I want to introduce you to a man named Edmund Berkeley. He was one of the most important pioneers of ethics in computer science in the 20th century, but almost no one knows who he is.

00:06:00.960

He got his start working on computers with the Navy during World War II. He published the world's first computer magazine and was among the first to propose the idea of a personal computer. Berkeley co-founded the Association for Computing Machinery in 1947. The organization's charter is to foster the open interchange of information and promote the highest professional and ethical standards.

00:06:17.220

In 1958, Berkeley's Committee on the Social Responsibility of Computer Scientists published a historic and foundational report on the ethical obligations of technologists. The findings of the report boil down to four simple statements: First, we cannot rightly ignore our social responsibilities. Second, our social responsibilities can't be delegated to others.

00:06:38.400

Third, we cannot rightly neglect to think about how our special role can benefit or harm society. In other words, we must consider how our special capacities can help advance socially desirable applications and prevent undesirable outcomes. Finally, we cannot avoid deciding between conflicting responsibilities; we must think about how to choose.

00:06:57.600

The report went on to say that when one reflects upon the great forces that we computer people are associated with, it is no longer difficult to grasp and perhaps to accept our heavier-than-average share of responsibility. The committee believed that given the power and potential of computers, ethical considerations were paramount.

00:07:18.060

The study concluded that the scientist's credo—knowledge for knowledge's sake—easily comes into conflict with their ethical responsibilities. Given human society in our century, we can label some classes of work as obviously socially desirable and others as socially undesirable, even while we acknowledge that there’s a large middle ground which cannot be clearly classified.

00:07:39.420

And it was Edmund Berkeley who wrote the parable of the locksmith. Remember, it was the height of the Cold War when he wrote it. The parable ends a week later, with the now-retired locksmith seeing a news headline about the theft of top-secret military schematics.

00:07:53.460

Soon after that, the stranger himself appeared on the world stage, declaring himself master of all nations, backed by the overwhelming threat of a devastating stolen superweapon. So Berkeley posed some questions: Did the locksmith do what was right?

00:08:06.120

He concluded that the locksmith had a responsibility to determine the stranger's motives and that he should have viewed him as a criminal before agreeing to work with him. So no, the locksmith did not do what was right.

00:08:15.300

Berkeley concluded that the computer scientist does not have the right to shut their eyes regarding their responsibilities any more than the locksmith did, and he called on his colleagues to shoulder their proper social responsibilities. Unfortunately, he was largely ignored.

00:08:40.200

Fast forward about a decade to 1972, during the raging Vietnam War. Berkeley and his colleague Franz Alt had been invited to address the Association for Computing Machinery at a special dinner honoring them on the organization's 25th anniversary. Alt's topic was "Reflections," and Berkeley was to follow with a talk on the forward-looking topic of "Horizons."

00:09:02.460

While Alt's talk was celebratory, providing a retrospective on the advances in computer engineering since World War II, Berkeley's speech took on a distinctly different tone. He told the audience that anyone working to further the unethical uses of computers, including in developing weapons technology, should quit their jobs.

00:09:15.960

He called out members of the audience by name. Many of his colleagues were so upset by his comments that they stood up and walked out in the middle of the speech. Admiral Grace Hopper was among those who stood up and left.

00:09:29.100

Berkeley concluded his speech by stating that it was a gross form of neglect of responsibility that computer scientists were not considering their impact in terms of societal benefit or societal harm. Scientists from other fields faced ethical dilemmas, too.

00:09:48.900

For example, during World War I, the first large-scale deployment of chemical weapons and the horrors of death by poison gas reverberated throughout the chemical production world. Between the 1918 Armistice and 1933, international conferences were held to try to limit or abolish chemical weapons.

00:10:08.640

To this day, no chemical manufacturer in the U.S. will produce a solution that's used for death by lethal injection. Atomic scientists, after witnessing the inhumane devastation of the atomic bomb during World War II, actively sought to limit or eliminate the bomb's threat to human civilization.

00:10:31.680

The Bulletin of the Atomic Scientists became the voice for the ethical responsibilities of physicists, and the Doomsday Clock project was launched, continuing to this day as a reminder of the danger of doing nothing.

00:10:46.920

Nazi Germany relied on technology and services provided by IBM in their efforts to identify and destroy the country's Jewish and Romani minorities. The Nazis even shipped IBM punch cards on trains to concentration camps, leading to widespread acceptance that IBM was complicit in the Holocaust.

00:11:09.960

And how did the computer science community deal with this ethical conflict? The realization that they might be complicit in genocide and other atrocities? They got up and left the room. That shirking of responsibility is pervasive in the world today as well.

00:11:34.200

Technology companies routinely rely on open-source technology to provide services to organizations like ICE. How would we feel about the complicity of IBM in the Holocaust if their punch card system had been released under an MIT license? Because that is exactly the situation we're facing today.

00:11:48.720

In 1998, when the open-source definition was penned, the greatest evil conceivable by technologists was the market domination of the Microsoft operating system and its Internet Explorer browser. The founding thinkers responsible for free and open-source software understood the impact of technology on society, but they focused on technology in intellectual property terms rather than using an ethical framing.

00:12:08.160

In 2021, we face threats much greater than merely market domination of a web browser. We're in an age where corporations and governments are carrying out programs of mass surveillance, suppressing legitimate political protest, and perpetrating state-sanctioned violence—even genocide.

00:12:29.940

In the U.S., Immigration and Customs Enforcement (ICE) has been separating children from their parents at our border for years, even before President Trump took office, placing immigrants and asylum seekers in cages without reliable legal assistance or due process, let alone medical care. An estimated 40,000 people are currently in ICE custody, with hundreds of documented deaths in these concentration camps, most due to gross neglect.

00:12:51.000

Moreover, U.S. tech companies are collecting billions of dollars in contracts to support ICE's terror programs. So what does this have to do with open-source software? Let’s take a well-known example: Palantir Technologies, co-founded by top Trump advisor Peter Thiel.

00:13:08.700

Palantir collects tens of millions of dollars from ICE every year and hosts over 200 open-source projects on GitHub. These projects rely on thousands of other open-source contributions. Every single dependency in use, whether by ICE or Palantir, contributes to human rights violations. Palantir is explicitly leveraging open source to aid and abet ICE's human rights violations, and it's not alone.

00:13:29.580

The technology historian Melvin Kranzberg famously wrote in the first of his six laws of technology that technology is neither good nor bad, nor is it neutral. Professor Lelia Green, in her important book "Framing Technology: Society, Choice and Change," stated that when technology is perceived as being outside society, it makes sense to talk about it as neutral, but the idea fails to recognize that culture is not fixed and society is dynamic.

00:13:48.360

When technology is implicated in social processes, there's nothing neutral about it. In 1999, U.N. Secretary-General Kofi Annan announced the United Nations Global Compact—a pact to encourage businesses worldwide to adopt sustainable and socially responsible policies. It is the world's largest corporate responsibility initiative, with over 13,000 participants and stakeholders in 170 countries.

00:14:03.119

Its very first section deals with human rights and states that businesses should support and respect the protection of internationally proclaimed human rights and ensure that they are not complicit in human rights abuses. Complicity is defined in two different contexts: first, providing goods or services that a company knows will be used to carry out human rights abuses, and second, when a company benefits from human rights abuses even if it didn't positively assist or cause them.

00:14:24.900

Many large tech companies have been profiting happily from human rights abuses for years. I've been calling for those who have the safety and privilege to do so to accept their ethical responsibilities and either orchestrate change in these companies or, as Berkeley suggested, quit their jobs.

00:14:35.660

This includes tech workers at Amazon, Microsoft, GitHub, Salesforce, Cisco, and many other technology companies, all of which profit from human rights abuses. By this definition, these companies are complicit in these injustices.

00:14:54.180

And they’re using our software. Are we going to get up and leave the room again? Are we going to accept our ethical responsibility for how our work is being used?

00:15:09.000

The Ruby community came together and demonstrated its commitment to the values of diversity and inclusion by embracing the Contributor Covenant. Today, I'm asking the Ruby community to once again take a stand.

00:15:25.200

I don't expect you all to re-license your gems under an ethical source license, but I do expect you to come together as a community and reflect on our shared values: how we can center them in everything we do, how we can accept our outsized responsibilities as technologists, and how we can prevent our work from being used to cause harm.

00:15:39.960

Because we face a much bigger ethical challenge than the threat of proprietary software. Stallman wanted a printer driver; we want to keep our work from being used by fascists. That's what this revolution is about.

00:15:57.600

And it's my hope that just like we did seven years ago, the Ruby community will stand up and lead the way. It's time for us to go beyond nice; frankly, I’m sick of nice. Nice is meaningless if we're not equitable.

00:16:14.700

We can't keep using 'nice' as a shield that we hide behind, ignoring our impact. I founded the Ethical Source movement to empower us to take responsibility for this impact, to find ways to promote justice and equity in our work, and to ensure that our work is being used for the social good.

00:16:34.020

We must prevent the harm caused by pretending that technology is neutral. And I hope you'll join me. Thank you for listening.