00:00:08.990

Today, I'll be sharing the lessons learned from an 18-hour API outage, which I have called 'The Sounds of Silence.' This incident occurred in 2017, when Checkr’s most important API endpoint went down for twelve hours without detection.

00:00:14.759

Before diving into the details, I want to talk about bugs. Bugs are a constant companion for engineers, occurring any time a change is introduced into a complex system. No matter how strict the quality controls and tooling are, human error can lead to bugs. Therefore, the inevitable question becomes: how do we reduce their impact? My name is Paul, and I’m an Engineering Manager at Checkr. I live in Denver with my wife, Erin, and our three kids: Bennett, Delphine, and Hugo.

00:00:40.310

So, what is Checkr? Checkr is a people-trust platform that delivers background checks to make hiring safer, more efficient, and inclusive. Background checks are often a crucial final step in the hiring process for our customers. On October 6, 2017, we experienced an 18-hour API outage during which customers were unable to create reports using our API.

00:01:10.439

At that time, Checkr had grown significantly, but our team consisted of only about thirty engineers spread across six teams. The request volume had increased significantly over just a few years, and much of the team's focus was on stabilizing the system to handle this increased load. The team had doubled in the previous year, and much of the knowledge of the system was held informally by team members.

00:01:56.130

You might be wondering what a report is in this context. A report within the Checkr system describes a background check initiated when a customer submits a request via our REST API. This request triggers the mechanism to create a background check for a particular candidate, with screenings like motor vehicle reports or criminal checks associated with that report.

00:02:30.400

The incident began at 4:30 PM on a Friday when a script was run to migrate old screening records from an integer foreign key to a UUID instead. Within an hour, an on-call engineer from an unrelated team was paged due to a spike in errors on one of our front-end applications. This application submitted only a small percentage of our total reports, and it did not use the main API that our customers were using.

00:03:02.810

After an initial investigation, the team suspected the spike was likely due to user error and snoozed the page. Early on Saturday morning, the same error triggered another page. The on-call engineer began investigating further and noticed an unusual status code being returned by the report creation endpoint. Both our public and private report endpoints were returning 404 for many requests.

00:03:39.319

At 9:30 AM on Saturday, it became clear that there was a major problem affecting our APIs, not just the client application that originally was producing the error. The initial responder escalated the issue and involved all on-call engineers. With a smaller engineering team at the time, escalation often occurred via Slack, using a channel called 'Image Fire' to alert the rest of the team.

00:04:29.270

As the rest of the team began logging into our VPN, I remember pulling my laptop out in the parking lot of Crissy Field in San Francisco. Unfortunately, there was a surge in VPN traffic, which caused it to go down, and we all needed to go into the office to access the internet and see production logs on our servers. Eventually, we made it to the office around 10:00 AM. With full access to production logs and all the requests made, we identified the issue in about an hour and soon afterward reproduced it.

00:05:53.120

We quickly implemented a temporary fix to address the immediate issue. At Checkr, we strive for a blameless culture—one where we learn from our mistakes and improve as a result of outages. We create post-mortem documents as part of the process. The goal of these post-mortems is to learn from incidents to ensure that mistakes do not happen again.

00:06:42.780

The post-mortem document captures the root cause, timeline, and follow-up actions. Identifying the root cause is crucial, but we also value the action items that come from these analyses. One of the major learnings from this incident was that the root cause was exposed by a backfill run at the beginning of the incident. Constraints on associated records were not enforced at the database level, so the backfill was able to leave those records in an invalid state.

00:07:37.070

The backfill corrupted records in our database. Furthermore, the system assumed that foreign keys would always be present, but some screenings ended up with a nullified reference to their parent report, leaving them with no report ID. Consequently, when a new report was validated using ActiveRecord validations, its lookup for associated screenings found those orphaned screenings instead.

00:08:31.645

This triggered an ActiveRecord::RecordNotFound exception, which our API routes handled automatically by returning a 404 response. That's why we observed so many 404 responses. The backfill only impacted two of our screening tables; thus, some types of reports continued to generate successfully while others did not, which complicated our overall incident response.
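The failure mode described above can be sketched in plain Ruby, without Rails. This is only an illustration under assumptions: the `Screening` struct, the `RECORDS` lookup table, and the exact lookup that raised are hypothetical stand-ins, not Checkr's actual models. The point it shows is that an unsaved report has no ID yet, so a lookup on a null report ID matches the orphaned screenings.

```ruby
# Plain-Ruby stand-ins for the models; every name here is hypothetical.
class RecordNotFound < StandardError; end

Screening = Struct.new(:id, :report_id, :record_id)

# Screenings after the backfill: two of them lost their report_id.
SCREENINGS = [
  Screening.new(1, 10, 501),
  Screening.new(2, nil, nil), # orphaned by the backfill
  Screening.new(3, nil, nil)  # orphaned by the backfill
].freeze

# Hypothetical table of records that a screening is expected to reference.
RECORDS = { 501 => "criminal check" }.freeze

def find_record!(record_id)
  RECORDS.fetch(record_id) { raise RecordNotFound, "no record #{record_id.inspect}" }
end

# A brand-new report has no id yet, so matching on report_id == nil
# picks up the orphaned screenings instead of an empty list.
def validate_new_report(report_id)
  SCREENINGS.select { |s| s.report_id == report_id }
            .each { |s| find_record!(s.record_id) }
  true
end

# Mimics a route-level handler that maps "not found" errors to HTTP 404.
def create_report_status
  validate_new_report(nil)
  201
rescue RecordNotFound
  404
end

create_report_status # => 404
```

From the outside, a 404 on a create endpoint looks like a client mistake, which is part of why the early pages were easy to dismiss.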

00:09:37.710

Another significant problem we identified was the fourteen-hour lag time in noticing that report creation was significantly impacted. If we look back at the incident timeline, we can categorize it into two buckets: time to resolution and time to response. In this incident, the time to response severely impacted the overall duration of the degraded report API.

00:10:43.230

A staggering 75% of the incident's duration occurred before an active response was initiated. Each hour of additional downtime multiplied the impact on API requests. We asked ourselves what could have alerted us to the issue sooner. An alert was indeed sent out within an hour of the incident's start, but it was disconnected from the component affected.

00:11:46.560

As mentioned, the alert pointed to a front-end service that utilized an internal API receiving only a small percentage of our overall report traffic. Although the alert from Sentry was better than nothing, it lacked actionable information for a responder to make an informed decision.

00:12:49.040

Here's the most painful revelation from a response perspective: we had a dedicated monitor set up specifically to detect outages in report creation. We captured a StatsD event each time a new report was created in the system. This was precisely the data we needed. However, our alerting rules were too simplistic to detect the more subtle failures we encountered.
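For readers unfamiliar with StatsD, emitting such an event is a one-line UDP datagram. Here is a minimal sketch using only Ruby's standard library; the metric name `reports.created`, the host, and the port are assumptions for illustration, and a real application would more likely use a StatsD client library.

```ruby
require "socket"

# Formats a StatsD counter datagram: "<metric>:<value>|c".
def statsd_counter(metric, value = 1)
  "#{metric}:#{value}|c"
end

# Sends the datagram over UDP. Host and port are assumptions;
# 8125 is the conventional StatsD port.
def increment(metric, host: "127.0.0.1", port: 8125)
  socket = UDPSocket.new
  socket.send(statsd_counter(metric), 0, host, port)
rescue SocketError, SystemCallError
  nil # metrics are best-effort and should never fail the request
ensure
  socket&.close
end

# Usage, right after a report is persisted (hypothetical metric name):
#   increment("reports.created")
```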

00:13:51.390

When we set up the monitor, we assumed an outage would cause nearly 100% of reports to fail, so we established a threshold of 100 reports created in thirty minutes. If the rate fell below that threshold, an alarm would sound. Unfortunately, the outage did not affect all reports; only certain configurations were impacted.

00:14:53.220

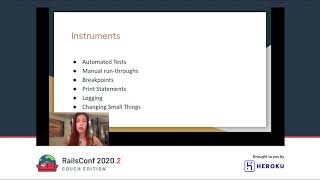

As a result, we observed the report creation metric drop abnormally low but not below the floor value in our monitor. Therefore, the monitor wasn't sensitive enough to detect ongoing issues. From the incident, we recognized the need to enhance our overall observability.
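To make the blind spot concrete, here is a small sketch of an absolute-floor rule like the one described. The floor of 100 reports per thirty-minute window comes from the talk; the window counts in the comments are made-up numbers for illustration only.

```ruby
FLOOR = 100 # reports created per 30-minute window

# Fires only when the count drops below the fixed floor.
def floor_alert?(reports_created)
  reports_created < FLOOR
end

# Hypothetical window counts:
floor_alert?(400) # => false, normal traffic
floor_alert?(150) # => false, partial outage: far below normal but above the floor
floor_alert?(0)   # => true,  total outage
```

A rule like this only catches the total-outage case it was designed around.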

00:15:49.300

By definition, observability measures how well the internal states of a system can be inferred from knowledge of its external outputs. In the next section, I will explore tools to improve the overall observability of your application, how these tools work together, and how to craft meaningful alerts when something goes wrong.

00:16:52.290

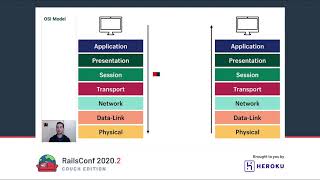

There are three basic components to building an observability stack. First, you need mechanisms for gathering metrics: measurable events that indicate the state of your application. Second, you must define monitoring rules that delineate when a particular metric is green or red. Finally, ensure those monitoring rules connect to an incident management platform that governs on-call responders and escalation.

00:18:07.490

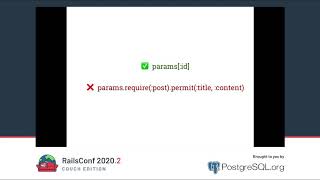

It's important not to rely solely on email or Slack as your primary notification methods. Let's talk about metric collection. There are three broad categories of metric collection commonly found in web applications.

00:18:51.470

First are exception trackers, which are services that provide libraries to capture events when exceptions occur. Examples include Sentry, Rollbar, and Airbrake. The second category is Application Performance Monitoring (APM), which grants access to industry-standard metrics across protocols and stacks, allowing you to drill down into request volume, latency, or investigate the full trace from application to database and back.

00:20:03.140

Lastly, real-time custom application metric collection gives engineers metrics to describe specific business processes performed by the application. These metrics are the hardest to define and maintain but provide direct visibility into the application's state. To visualize how each of these metrics contributes to your understanding, think of your application as a black box.

00:21:42.750

Exception tracking provides insights into hotspots, allowing you to assess the impact of errors based on event numbers, stack traces, and context. APM serves as a heat map overlay of your application, providing a high-level view of the overall health. It becomes invaluable for identifying system-wide outliers that may indicate problems.

00:22:49.270

For instance, an unexpected configuration change can impact several services in a system, as we experienced in February when a configuration change altered authentication between services. The trend in targeted metrics confirmed something was wrong, highlighted by the spike in 401s observed in APM.

00:24:05.270

Custom metrics let engineers maintain visibility into the health of specific features within the application, allowing them to view each component individually using telemetry from metrics. Once established, metrics assist in identifying when intervention is necessary. A good monitor must be a high-fidelity indicator of system health. We don't want to miss true positives or overwhelm ourselves with false positives.

00:25:27.360

It's best to define monitors that measure the health of discrete features, enabling responders to get actionable information that can lead to swift and effective responses. Furthermore, indicators should ideally be leading rather than lagging. At Checkr, we monitor report completion to ensure reports are successfully finalized, though this metric isn't useful for determining the health of report creation.

00:26:05.200

Lastly, let’s discuss some monitor patterns that could have alerted us sooner during the incident. Our monitor failed due to lack of sensitivity. One approach is implementing composite monitors, which tie multiple metrics together as a combined signal. We ultimately added a composite monitor following the incident, measuring both report and individual screening creation, so that it could identify issues where screenings caused report failures.
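A composite rule of that kind might look roughly like the sketch below. It is not Checkr's actual monitor: the `Window` struct, the thresholds, and the screening type names are assumptions chosen to illustrate combining two signals with a boolean OR.

```ruby
# One 30-minute window of counters. screenings_created maps a screening
# type to its creation count. All names and thresholds are hypothetical.
Window = Struct.new(:reports_created, :screenings_created, keyword_init: true)

# Screening types whose creation count fell below the minimum.
def stalled_screening_types(window, min_per_type: 1)
  window.screenings_created.select { |_type, count| count < min_per_type }.keys
end

# Composite signal: alert when report volume is low OR any single
# screening type has stopped being created.
def composite_alert?(window, min_reports: 100)
  window.reports_created < min_reports || !stalled_screening_types(window).empty?
end

healthy = Window.new(
  reports_created: 400,
  screenings_created: { motor_vehicle_report: 380, criminal_check: 390 }
)
partial_outage = Window.new(
  reports_created: 150,
  screenings_created: { motor_vehicle_report: 150, criminal_check: 0 }
)

composite_alert?(healthy)               # => false
composite_alert?(partial_outage)        # => true
stalled_screening_types(partial_outage) # => [:criminal_check]
```

Returning the stalled types, rather than just a boolean, gives the responder something actionable to start from.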

00:27:31.420

We realized that measurements could be made relative rather than absolute. This allows for a responsive monitoring system that adapts to spikes and drops in overall request volume. Another option is using anomaly detection tools like Datadog, which apply statistical approaches to recognize metric anomalies.
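One way to express a relative measurement is as a success ratio: reports created divided by report creation requests in the same window. The sketch below assumes both counters are available and uses a hypothetical minimum ratio of 0.9; the right value would depend on your own baseline.

```ruby
# Fraction of creation requests that produced a report in a window.
# A window with no requests is treated as healthy (an assumption; a
# separate volume check could cover the "no traffic at all" case).
def creation_success_ratio(requests, created)
  return 1.0 if requests.zero?
  created.fdiv(requests)
end

# Alerts when the ratio falls below min_ratio, whatever the volume.
def relative_alert?(requests:, created:, min_ratio: 0.9)
  creation_success_ratio(requests, created) < min_ratio
end

relative_alert?(requests: 400, created: 396) # => false, busy and healthy
relative_alert?(requests: 40,  created: 39)  # => false, quiet and healthy
relative_alert?(requests: 400, created: 150) # => true,  partial outage
```

Because the rule compares two counters from the same window, it keeps working through quiet periods and traffic spikes alike.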

00:28:25.540

While effective, these tools tend to be overly sensitive despite attempts at making them more flexible. A key lesson is how to build observability into your culture. Begin small when building observability; don’t attempt to measure everything immediately.

00:29:45.240

Take a growing business as an example. When you first start an e-commerce store, traffic is low and a small team can easily spot exceptions. However, as your store scales and traffic grows, it becomes crucial to instrument high-volume events first and then gradually add monitoring for other critical features.

00:31:48.020

When implementing custom metrics, consider two questions: First, what information do I want to know before my customers notice any issues? Target monitoring areas where you deliver the most value. Second, what systems are most brittle and at risk of breaking as your system expands?

00:32:42.990

As your organization grows, map specific services or components clearly to different tiers of importance. Outline the monitoring and on-call structure for each tier, so it is clear what level of reliability and observability each one requires.

00:34:21.430

In summary, bugs are an inevitable part of software development, but our responsibility extends beyond merely deploying our code. Observability is foundational for building reliable systems. Start small with tools like exception trackers and APMs that provide immediate value for minimal investment.

00:35:31.540

Focus on measuring critical aspects of your application, avoiding the urge to measure everything. Finally, iterate on your monitoring rules, refining them for higher fidelity and more actionable alerts for your responders.

00:36:10.380

Thank you.