GoRuCo 2013

To Know A Garbage Collector

It started as an obsession with making the web application used at my day job faster, and ended with trying to implement new Garbage Collection algorithms in a notoriously insane codebase. Garbage collection is an epic hack and a triumphant abstraction that supports various programming paradigms. As hardware and software changes, Garbage Collection's role also changes but remains equally important. I'll discuss my experiments with MRI Ruby, my investigations into other languages and the influence of their GC implementations, the history of the subject, and more.

Help us caption & translate this video!

GoRuCo 2013

00:00:16.480

Uh, hi everyone! Yes, this is the title of my talk. My slides are online; I'm sensitive to people with poor eyesight because I have poor eyesight too. If you want to grab them, feel free to do so if the Wi-Fi allows it. You'll notice that this particular slide is not in the online version—I'm playing games with time, so forgive me. Okay, cool! Let's start out with a little outline. I'm going to tell you a bit about me, why I'm interested in this topic, and why I'm qualified to discuss it with you today.

00:00:40.800

As part of this talk, I've essentially devised two goals for us. I have two major topics that I want to discuss, framed as goals. First, I'll introduce them before we pursue those goals. Then, I'll share a few of my influences that were really helpful in constructing the material for this talk, and after that, we'll pursue the goals. Finally, I'll say goodbye. So, who am I? This is my sixth consecutive GoRuCo, and I'm a New York City-based Ruby developer. I missed one GoRuCo—please forgive me! I've applied to speak for the last three or four iterations, and I'm actually quite happy that I haven't been accepted until now because I would have been embarrassed about any other talk I would have given. That's just how it goes, right?

00:01:15.759

I'm a professional, quote-unquote, programmer—I'm a former computer science teacher. I studied with some great people at Parsons in an MFA Design and Technology program, where I first developed a love for programming. I'm the development manager and the official salad thought leader at Paperless Post. If you want any details about that, feel free to hit me up later. And finally, I'm obsessed. Just wanted to get that out there! You're going to be like, 'Damn, this dude's obsessed!' Yeah, I know. I'm telling you up front, so don't look at me weird.

00:01:46.079

So, I'm obsessed—that's how I learn. On that note, I want to introduce the goals I have for us because I want you to feel some of that energy about my obsession. First, I want you to get excited about garbage collection, and hopefully, in getting you excited about it, I'll teach you a few things about it, or at least give you a new way to think about it or look at it. After you're super stoked—which is definitely going to happen—I'll ask you to think about the connection between programming languages and garbage collection. I think there's some interesting connections between them; obviously, they're intertwined, but there are different ways to look at them and design specifically. So, on to those influences!

00:02:57.600

The first influence: I love Twitter—I spend a lot of time on it. One of my friends on Twitter is Brian Ford, who is one of the core team members of the Rubinius project, which is a Ruby implementation I'll be talking about. In November of 2012, he posted this tweet that caught my attention: 'What’s that? That sounds cool!' I'm obsessed, and I love handbooks. You know, there’s a handbook—sign me up! The Garbage Collection Handbook by Jones is something I found interesting. I suffer because of garbage collection as a Ruby developer, so I should probably know something about it. I bought the book and started reading it. In the first few pages, there’s this quote: 'The undecidability of liveness is a corollary of the halting problem.' Liveness is a property of a system that garbage collection is very intertwined with.

00:03:43.840

So, I read that quote, 'The undecidability of liveness is a corollary of the halting problem,' and I was like, 'Holy, that’s really heavy!' Because it's not just about garbage collection being hard—you hear that garbage collected languages don't perform as well as non-garbage collected languages, right? But the notion that 'the undecidability of liveness is a corollary of the halting problem' explains why garbage collection is so particularly challenging. If you want to know what the halting problem is, ask someone smarter than me. But this is a pretty cool fact! The second influence is a paper called 'Teaching Garbage Collection Without Implementing Compilers or Interpreters.' There’s so much detail in this talk; forgive me—it means a lot to me and others. But typically, no one writes their papers alone—mostly because students write the paper, and the teacher gets the credit! Anyway, this paper is about an implementation in the Racket programming language, which is a Lisp dialect.

00:05:24.000

I really like this paper because, as I mentioned, I used to be a teacher. I was trying to understand garbage collection, so I wanted to know how some computer science professors taught garbage collection. I read this paper, and it’s amazing because it shows you that you can distill the heart of the algorithm into a very small amount of code. I won’t go into much detail about this paper, but it was heavily influential for me in designing this talk. I urge everyone to go check it out; I wrote a blog post about it that I think is pretty good and might help you understand what's cool about it.

00:06:08.160

Finally, there’s another influential paper called 'A Unified Theory of Garbage Collection' by Bacon et al. The title sounds bombastic, right? A unified theory of garbage collection—unified theories sound pretty challenging to establish in any field. Since I was reading a lot about garbage collection, I wanted to hear about this unified theory, and it turns out to be super impressive and very insightful. I hope to share a little bit of the insight from this paper with you. Now, moving on to goal number one: get excited about garbage collection! Here’s a picture of John McCarthy controlling robots with his computer—look at those cool robot arms in the background! I hope I don’t have to convince you that this is a cool photograph. If you don’t think this photo is cool, then you might not get excited about garbage collection, and you should probably go grab a coffee.

00:07:06.320

So, what is garbage collection? Here’s the definition before we delve into any details: Garbage collection is a form of automatic memory management that gives a program the appearance of infinite memory by reclaiming allocated objects that are no longer in use. Raise your hands if that’s a reasonable, comprehensible definition of garbage collection to you. Does that make sense? Okay, that’s why I love New York City; it’s a room full of smart individuals. I won’t go into too much detail on this because all of you seem to understand it, but I will provide some formal terminology necessary to discuss garbage collection with people like me who have read the handbook.

00:08:01.760

Here’s the terminology: we’ve talked about garbage collection; now, we’re going to discuss something called a heap, an entity known as the mutator (awesome name), something called a collector, roots, and something called barriers. The first is the heap, which is a data structure in which objects may be allocated or deallocated in any order. To illustrate what that means, I want to show you an image of a heap. I went out into the wild and saw a heap in its natural habitat. I even hid behind a bush to ensure I didn't scare it away before I got that photo. So here’s a graphical representation of a heap—it's in a grid. This image is actually from the Racket paper I mentioned earlier.

00:08:51.680

Now, I want you to look at this and simply acknowledge what a heap is. You can probably address any of the cells in this heap since both axes—top and left—are labeled (x and y). So I can ask you, 'What’s at 25,25?' and you can reply, 'Oh, that’s the value 20.' A heap represents the objects you have allocated in your program in memory, and how you organize those objects on the heap is one of the arts of garbage collection. I’m satisfied with that explanation! Now, let’s talk about the mutator. The mutator is the part of a running program that executes application code. It’s your code! It got its name because, in mathematical terms, it’s seen from the point of view of the collector as essentially mutating an object graph. That’s why it’s called a mutator—it changes things. For example, here's your ActiveRecord base Rails class—that's your mutator!

00:09:47.679

Now, let’s discuss the collector. It’s exactly what it sounds like. It’s the part of a running program responsible for garbage collection. If I'm running a Ruby process with the code I showed you earlier, that would be the mutator, and in the background, the garbage collector—doing its job—is the collector. Here’s some illustrative code from Ruby’s garbage collector, written in C. You’re not meant to read it all! Now that you have some terms, we can say garbage collection is automatic memory management.

00:11:00.880

While the mutator runs, it routinely allocates memory from the heap. If more memory than is available is needed, the collector reclaims unused memory and returns it to the heap. So this is a more technical definition—memory comes from the heap and is returned to the heap, used by the mutator, and overseen by the collector. And that’s enough! Okay, now let's go on a little bit. I want to talk about the history of garbage collection. The year 1960 was a significant year for garbage collectors; it was when the concept was invented. You’d never guess by the titles of these papers, but John McCarthy, that awesome robot dude in the other photo, wrote a paper titled 'Recursive Functions of Symbolic Expressions and their Computation by Machine, Part 1.' It’s probably one of the top three things ever written in history in my estimation—certainly one of the most important documents in computer science!

00:11:54.000

It's basically where Lisp and garbage collection, among many other things, originated. George Collins, whose work sadly lacks cool photos of him controlling robots, wrote 'A Method for Overlapping and Erasure of Lists.' So how we think about these two papers is important. Just remember that what we have here is archaeological stuff; we claim garbage collection originated from these two papers. If you were to read those papers, that wouldn’t be apparent right away! However, the more you learn about the subject, the more you’ll see how those influences are intertwined.

00:12:42.240

I'll say that McCarthy's style is referred to as mark and sweep or tracing collection, while Collins' style is called one. We’re going to dive into those algorithms now. To discuss mark and sweep or tracing collectors, we need to understand what are known as roots. Roots are references—specifically, a reference is an object on the heap. The heap contains objects that have addresses, which you can refer to. It’s quite common for objects to refer to each other for various reasons. So, the roots are references that are immediately accessible to the mutator without traversing through other objects. This is significant because when garbage collection occurs, the traversal of everything in the heap starts from the roots.

00:13:25.600

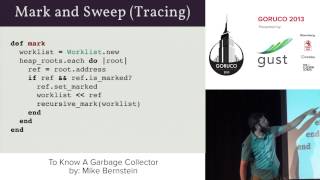

Let’s take a look at some code. This is Ruby pseudocode for a mark and sweep algorithm, which I will detail in a few slides. A note on the method – this is why I put my slides online. If you’ve got a neighbor with the slides, please follow along! The first step when we want a new object is to try to allocate memory. We can say ‘allocate’ – that's a method! If our reference is nil, that means I didn't get any memory, right? If it’s not nil, I return that reference. This gives me an address off the heap— a spot that's free that I can use for memory. If I can't get a reference, I need to mark and sweep, which effectively means garbage collecting—reclaiming the space on the heap from unused objects so that I can maintain the impression of infinite memory while my program runs!

00:14:01.120

So, after marking and sweeping, we allocate again. If I don’t have a reference, I might as well say: ‘Peace, I’m out of memory! I can’t be of service to you right now, or my program will probably crash!’ If I do have that reference, then we proceed to the marking phase. The mark introduces a new concept that wasn’t heavy enough for its own slide: it's called a work list. It's simply an array that the algorithm uses to aggregate addresses that it will sweep later. So, basically, we create a new work list, scan over the roots—these are the starting points in a Ruby application. If you look at the heap, roots might include things like the object class, right? So, there’s a semantic connection between how a program executes and how that plays out in its garbage collection.

00:15:49.760

Since you understand that everything in Ruby is an object, think of each object as holding a reference to the object class root—then consider how you could traverse that heap from that root and find all the live objects. That's the concept! We start from the roots, iterate over them, grab the address, and store it in a reference. We say: 'If that reference exists, it’s marked, then we add it to a work list.' You can think of it as adding that to a queue. Now we pass that reference to another function called recursive mark, which will do this process recursively for all the children of that node. Since it’s a tree-like structure, there's a natural recursion built into this process, which is actually very tied to why this was invented in the context of Lisp, because recursion is an essential concept central to executing Lisp applications.

00:17:11.360

So, that's basically how you can think about marking and sweeping. After we mark, we sweep. Here’s a brief explanation of how mark and sweep works: we traverse the entire heap, mark the objects reachable by setting a bit in their header, and then we sweep through the whole heap again. For marked objects, we consider them alive; for those not marked, we consider them dead and hence free them. The algorithm starts at the beginning of the heap, while an object cursor stays less than the address of the end of the heap. If that cursor is marked, we unmark it since we're performing a sweep now. If it's unmarked, we free the object and continue. So that’s mark and sweep! I'll give you an opportunity to ask questions at the end.

00:18:33.520

Okay, so we talked about mark and sweep, and I know that's heavy, but I'm okay with that. Now, let’s discuss barriers. A barrier is a concept necessary to explain reference counting. In the unified theory paper I will discuss in a minute, they pointed this out to me, and I think it’s a fantastic concept that barriers are essential to reference counting-based garbage collection algorithms. Let me explain what that means: a barrier is code that runs as a result of accessing or mutating an object on the heap. If you’ve followed any recent advancements in Ruby's garbage collector, particularly in version 2.1, you’ll see that it has a restricted generational garbage collector. The beauty of generational garbage collectors is this concept of a barrier.

00:19:46.560

In this context, what happens is that when the mutator makes changes on the heap, before it makes those changes, certain actions must occur—‘Tell this guy that I did that; tell that guy that I did that.’ If I’m trying to read and there’s a read barrier in place, it’s the same type of principle, where before reading from that address, specific tasks must be executed. This abstraction makes some very complex garbage collection algorithms possible. Reference counting is slightly simpler than mark and sweep in certain ways, but since most applications we use don’t directly interface with reference counting, the understanding can be a little bit counter-intuitive. Let's take a look at it in code.

00:20:51.360

Again, we have a new method, and this method will effectively operate the same way as the last one I showed you. But here's a notable difference: you’ll see that it doesn’t do the double-checking as the previous method. In mark and sweep, we had the procedure of checking if memory allocation is nil and then trying to allocate again. But in pure reference counting, freeing memory happens all the time. This means that the assumption here is that there will always be space on that heap. That’s quite different yet somewhat similar to how mark and sweep works. If it’s not there, we raise an error. If it is, we set the reference count to zero.

00:21:32.160

This is a right barrier. If my mutator is creating an object, and I have a reference to another object, I’ll add that reference, delete the old reference, and store the new reference on this list. This describes a right barrier, which I don’t think will be super instructive right now but it's very important. As a reference counting garbage collector, it must ensure there’s always free memory at the point of allocation. The concepts of adding a reference and deleting a reference are pretty simple: we simply increment the reference count for adding a reference, and for deleting a reference, we decrement it returning the count to zero, indicating the object is ready to be collected.

00:23:16.799

There are a few pros and cons of reference counting. A pro of reference counting is that it’s incremental as it collects garbage, freeing up memory, making it suitable for real-time environments. The limitations arise when it comes to collecting cycles—objects on the heap that reference one another—which can be difficult for a reference counting system to handle. If you think about the algorithm I just demonstrated, it makes perfect sense. There are methods to address this cycle problem as well. On that note, let’s also highlight the pro of mark and sweep: it can collect such cycles effortlessly. The downside is mark and sweep can incur long pauses and demonstrate poor locality, which refers to how effectively memory scanning occurs within a given timeframe.

00:24:09.680

Mark and sweep is often less efficient in terms of locality: it has to sweep across the entire heap multiple times to do its job, while reference counting remains very local. That’s key to its suitability for real-time garbage collection. Now having introduced you to tracing and reference counting, it’s time to talk about the unified theory of garbage collection. The core message from Bacon and his team was that the more we optimize garbage collectors, the more we notice that highly optimized mark-and-sweep collectors and reference counting collectors start exhibiting similar qualities. The naive implementations of these two strategies are very different. For example, one traces from live roots, and the other is incremental and stops the world. Yet, when you tweak the algorithms subtly, you see that they're not so different.

00:25:07.440

The first naive implementation of reference counting leads to free operations being expensive. Putting a reference count to zero should not free it immediately; instead, it’s ideal to place it on a list for batch-freeing. Consequently, when freeing everything from that list, you increase the pause times and reduce locality, beginning to replicate the behaviors of trace garbage collectors. Then, when subtly tweaking mark and sweep, instead of relying solely on marking an object, maintaining a reference count scales back the unnecessary marking. When you start implementing a reference count, you simultaneously capture certain characteristics of how reference counting works. These tweaks create parallels between both systems, leading to them both exemplifying similar qualities.

00:26:06.720

They even determined that a generational garbage collector operates under the umbrella of both mark and sweep and reference counting. Right barriers—essential for implementing generational garbage collectors—are also critical for reference counted garbage collectors. For instance, the JVM is an excellent example of a living, breathing hybrid garbage collector where many real-world implementations feature components of both styles. What’s important here is that garbage collector design can be more methodical. Recognizing that the construction of your garbage collection algorithm is based on three decisions is vital: how will you partition your heap, how will these sections be traversed, and what trade-offs will you make concerning space versus time in order for your garbage collector to suit your application needs?

00:27:09.440

Exhale! I understand that was quite dense. Now, let's proceed to our next goal. There’s McCarthy again—presumably kicking ass at chess. Look at that face! What is he even gazing at? I have no idea. McCarthy was a chess mastermind; much of why he wanted to write software was to beat robots in chess. Let’s discuss how programming languages interface with garbage collection, and how they are developed, designed, and interact with system memory. What aspects of their design pertain to our garbage collection discussions? I just bombarded you with information on the actual algorithms, and now I want you to consider how those design decisions impact how you use the Ruby implementation or any other programming language.

00:28:00.640

Bacon and his team said that the design of garbage collectors can be more methodical than it has been in the past—largely ad hoc until now! Think about those three design decisions regarding your garbage collection formation. When designing a programming language, consider that you can tweak the garbage collector to suit your programming language, or as Ruby developers do daily, adjust the code you write to account for the garbage collector's specificities.

00:28:59.840

So let’s talk about Ruby. It’s a dynamic language that we all love! I think there are several implementations of Ruby. Personally, I have a deep affection for Ruby as a programming language. It taught me a great deal, even though I don’t write much Ruby these days, but I still think about it and keep up with it. I want to tell you about the three main implementations: MRI, which serves as the reference implementation, had simple beginnings with a standard, conservatively implemented mark-and-sweep garbage collector. Fast forward 20 years later to today, and the discussion centers around restricted generational garbage collection, a truly hybridized garbage collection.

00:30:09.440

However, it’s highly compromised due to a concept referred to as conservatism—something I won’t delve into here as it’s beyond the scope of what I aim to discuss today. But if you wish to understand Ruby and its garbage collector, particularly in MRI, grasping conservatism is important. Then we have Rubinius, which is a thoroughly modern garbage-collected Ruby implementation. It has recently introduced concurrent and parallel components to its generational garbage collection—also highly hybridized and competently designed. Since Rubinius has sort of, in the Haskell sense, ‘avoided success at all costs,’ they've had more freedom to experiment than how MRI has.

00:31:23.120

MRI is very successful, in that sense. On the other hand, JRuby leverages the power of the JVM. This implementation involves much effort from the JRuby team—they’ve had to do extensive work to ensure Ruby operates smoothly on the JVM and cooperates with its garbage collector. They're experimenting with intriguing techniques, like inline caching and much more, that make it a very desirable Ruby implementation, especially when considering predictable and tunable garbage collectors.

00:32:50.080

Now, let’s look at Java. There have been extensive research efforts into JVM garbage collection, likely among the software projects with the highest counts of man-hours dedicated to it. The JVM grants multiple pluggable implementations, yet the basic idea celebrates the significance of garbage collection and the investment of time and resources it deserves. Its GC is adaptive, tunable, and utilizes a hybrid approach.

00:34:02.560

Typically, when you consider the JVM's garbage collector, you often think of its generational aspect. However, there are numerous approaches available, including real-time JVM implementations employing incremental garbage collectors to achieve their objectives. Ultimately, Java is an interesting case due to its considerable evolution over time and success, but because of its memory model, it allows easier manipulation of its garbage collector compared to MRI Ruby.

00:35:16.800

In summary, garbage collection is a remarkably diverse field and within those aforementioned three decisions, there are myriad possibilities. Thus, there is no universally ‘best’ garbage collector for any program. It all depends: what does the program do? How does it utilize memory? What locality does it possess? This is how one should determine the capabilities desired from the garbage collector. Does it need to have real-time guarantees? Do pauses affect you? Please consider the problem you're trying to solve rather than the tool you feel like using when it involves other people's money!

00:36:23.680

Lastly, let’s talk about Haskell, a strictly and statically typed programming language. Its type checker and compiler heavily inform the garbage collector, and I wanted to highlight it because Haskell’s design makes certain aspects of its garbage collector easier to manage. In Haskell, as a purely functional language, all data structures are immutable, meaning you never confront situations where older objects reference newer ones. Instead, references point backwards in time, alleviating many issues much earlier!

00:37:30.760

Because of these concepts, they’ve been able to innovate their garbage collector continually, producing a garbage collector that can modify without disrupting existing code, effectively parallelizing aspects necessary for garbage collection work. This gives a unique perspective into how the language influences its garbage collection framework compared to Ruby. In essence, many great programming languages continually work on their garbage collectors, and the design language profoundly impacts what is possible in garbage collection, and vice versa. If you’re tethered to a particular GC and cannot change it, you’ll be limited in changing the programming language and vice versa.

00:38:42.760

To quote Henry Baker, who invented the concept of semi-space collection in incremental garbage collection: “GC is not a generic solution for memory leaks, but a correct GC is a generic solution for dangling pointers. Just as there is no general solution for loops due to undecidability, there is no general solution for leaks.” While not the most appealing quote, in context, it's heavy, as it delves into the realm of possibilities within programming and computer science—what can we truly do?.

00:39:49.066

The essence is, garbage collection is an enthralling field, as I've demonstrated clearly. Deep knowledge of your tools is incredibly beneficial in not only understanding them day-to-day but also utilizing them effectively. This talk stemmed from my excitement in sharing this knowledge with you all, as I believe it can significantly benefit you. If we understand garbage collection better, we can enhance Ruby or another language certainly.