00:00:05.400

Hi everyone, my name is Will Jordan. I'm a software engineer at Code.org, and welcome to my RailsConf 2021 talk titled 'What the Fork?'. Code.org is a non-profit dedicated to expanding access to computer science in schools and increasing participation by young women and students from other underrepresented groups.

00:00:17.820

Our engineering team develops a computer science learning platform for kids, which is based on a Rails application I have been a part of building and operating since 2014. Over the years, this Rails app has evolved and matured to support a growing number of teachers and students. We now have over a million teachers and over 40 million students on our platform, and we have translated our curriculum into over 60 languages.

00:00:29.699

This is a pretty large Rails application! We're running our annual Hour of Code event in December, which introduces millions of students around the world to computer science with local events in over 180 countries. This week-long event has served over a billion total sessions since it began in 2013, and at peak traffic, our site typically serves over 10,000 requests per second during this event.

00:01:02.280

While operating—and especially debugging—performance, scalability, and reliability issues in our app as the infrastructure lead, there was one particularly magical method that seemed especially important and has always caught my interest: the Unix Fork system call. It's a tool that gets used all the time, and that we mostly take for granted. You could call it the staple utensil of Unix-based operating systems.

00:01:21.299

Here's Fork being used, for example, in the Puma, Parallel, and Spring gems. At first, I only vaguely knew that Fork creates a new process, and it took me quite a while to grasp its nuances and dangers, to appreciate its significance in Unix, and to understand how it maximizes Ruby performance on multi-processor systems in particular.

00:01:39.119

In this talk, I'll share with you everything I've learned about Fork. I've broken this talk down into three sections: First, 'Unix Fundamentals,' where I'll look at Fork and related system calls; second, 'Ruby and Rails Deep Dive,' where I'll explore the use of Fork in Ruby in general and how it's employed in the Spring and Puma gems specifically; and third, 'Tips, Tricks, and Experiments,' where I'll review some best practices and share a couple of interesting experiments I've worked on.

00:02:04.079

All right, first off, 'Unix Fundamentals.' What exactly is the Fork system call? What does it do and where did it come from? As a starting point, let's look at the current documentation from the Linux Manual Pages project.

00:02:10.080

Here it says, 'Fork creates a new process by duplicating the calling process. The new process is referred to as the child process; the calling process is referred to as the parent process.' So, a call to Fork duplicates a single process into the parent and child, allowing each to run independently. If the system has more than one CPU, they can even operate in parallel.

00:02:38.160

This parent-child distinction is important because the parent process can keep track of its children through their process IDs, creating a process tree covering everything running on the system. The description continues: 'The child process and parent process run in separate memory spaces. At the time of Fork, both memory spaces have the same content, and memory writes performed by one of the processes do not affect the other.' This essentially means that the memory space in the child process acts like a copy of the parent's.
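As a small Ruby illustration of that separation (a sketch, not code from the talk; the helper name `fork_and_mutate` is made up here), the child below appends to an array and reports what it sees through a pipe, while the parent's array stays unchanged:

```ruby
# Fork a child that mutates `data`, then compare both processes' views.
def fork_and_mutate(data)
  reader, writer = IO.pipe
  pid = fork do
    reader.close
    data << 4                    # changes only the child's copy of the array
    writer.write(data.inspect)
    writer.close
    exit!(0)                     # skip exit handlers inherited from the parent
  end
  writer.close
  child_view = reader.read       # what the child saw after its write
  Process.wait(pid)
  [child_view, data.inspect]     # the parent's array is untouched
end

child_view, parent_view = fork_and_mutate([1, 2, 3])
puts "child saw #{child_view}, parent still has #{parent_view}"
```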

00:03:01.680

It doesn't actually have to copy all the memory; it just needs to behave like a separate copy when writes occur. Modern systems utilize an optimization called 'copy-on-write,' and I'll go into more detail on that later. The full documentation is much longer, but I pulled out one more note worth mentioning: 'The child inherits copies of the parent's set of open file descriptors. Each file descriptor in this child refers to the same open file description as the corresponding file descriptor in the parent.'

00:03:33.840

This is a little abstract but really important to how Fork operates in Unix. A file descriptor represents not only open files on the filesystem but also things like network sockets and pipes, which are input/output streams used to communicate between processes. This is really powerful but also dangerous, as using Fork effectively in more complex use cases usually requires carefully controlling these inherited file descriptors. I'll get more into that later, but first, I'd like to cover the most common and basic use of Fork, which is just to spawn a brand new process that executes another program.

00:04:15.780

For this, you follow Fork with the exec function. Here's the man page for exec: 'The exec family of functions replaces the current process image with a new process image. There shall be no return from a successful exec, because the calling process image is overlaid by the new process image.' So, Fork exec is how you spawn a subprocess with a new program in Unix. Then, if you want the parent process to wait for the child to finish before continuing—if you don't want them to run concurrently—then you can call wait.
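The fork, exec, wait sequence can be sketched in Ruby roughly as follows. This is an illustration rather than anything shown in the talk: `run_and_wait` is a hypothetical helper, and the 127 exit code for a failed exec is only a shell-style convention chosen here.

```ruby
# Spawn a program in a child process and block until it finishes.
def run_and_wait(*command)
  pid = fork do
    begin
      exec(*command)             # replaces the child's process image; no return on success
    rescue SystemCallError
      exit!(127)                 # exec itself failed (for example, command not found)
    end
  end
  _, status = Process.wait2(pid) # suspend the parent until the child terminates
  status.exitstatus
end

puts run_and_wait("sh", "-c", "exit 3")
```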

00:04:51.660

The wait system call suspends execution of the calling thread until one of its children terminates. Now, I want to take a little digression here to point out one weird quirk of the Unix process model that you might have heard of or come across: the zombie.

00:05:04.320

Here they say, 'A child that terminates but has not been waited for becomes a zombie.' The kernel maintains a minimal set of information about the zombie process in order to allow the parent to later perform a wait to obtain information about the child. As long as a zombie is not removed from the system, it will consume a slot in the kernel's process table, and if this table fills, it will not be possible to create further processes.
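In Ruby, one way to make sure a child is always waited for, without blocking the parent, is Process.detach, which starts a background thread that reaps the child. A minimal sketch, with a made-up helper name:

```ruby
# Fork a child and let a watcher thread reap it so it never lingers as a zombie.
def run_and_reap
  pid = fork { exit!(3) }
  watcher = Process.detach(pid)  # background thread that waits on this pid
  watcher.value.exitstatus       # the thread's value is the child's Process::Status
end

puts run_and_reap
```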

00:05:28.320

If a parent process terminates, then its zombie children (if any) are adopted by the init process, which automatically performs a wait to remove them. One real-world example of zombies came a couple of years ago, when Puma had a regression in how it waited on terminated worker processes, which then showed up in the ps utility as 'defunct.' This bug caused a zombie invasion in Rails servers everywhere. All right, back to Fork. Here's a simple example of the C interface of Fork.

00:05:46.920

In this example, we check the return value of the Fork call to run different code paths in the child and parent, and the child branch calls exit here to terminate its own process. It actually calls _exit, which avoids invoking any existing exit handlers that you might not want to run when the child process exits.

00:06:07.260

Then, the parent branch calls waitpid, which is a variant of wait to wait for the child process to finish before continuing. You could also replace the child's printf call here with a call to exec if you wanted it to run another program in the subprocess, for example. So that's the basic C API. Now let's go into a bit of Unix history to understand the fundamental significance of Fork and its expected behavior in systems.

00:06:30.360

The computer science term 'Fork' was coined just a few years before Unix, in a 1963 paper by Melvin Conway, as a model for structuring multiple processes and programs. This paper influenced the designers of a 1964 computer research project at UC Berkeley called Genie, which in turn served as inspiration for the original Unix developed around 1969. I'd like to point out how fundamentally significant the Fork call was and still is to the Unix operating system, as well as how much of a hack it was and still is.

00:07:14.160

Dennis Ritchie, who co-designed Unix along with Ken Thompson, wrote that the PDP-7's fork call required precisely 27 lines of assembly code. I can't say I went through the trouble of deciphering it, but Ritchie described it as follows: 'There was a process table, and the processes were swapped between main memory and the disk. The initial implementation of Fork required only one expansion of the process table and the addition of a Fork call that copied the current process to the disk swap area using already existing swap I/O primitives and made some adjustments to the process table.'

00:07:53.640

So that's it! Just those 27 lines of code. He went on to write how it's easy to see how some of the slightly unusual features of the design are present precisely because they represented small, easily coded changes to what existed. A good example is the separation of the Fork and exec functions, which exists in Unix mainly because it was easy to implement Fork without changing much else.

00:08:07.500

Fork was one of the fundamental primitive operations in Unix from its very first version. Here's an early Unix man page for Fork from 1971, which states, 'Fork is the only way new processes are created.' The original Fork worked well enough, but its implementation was relatively slow because it had to copy the entire process into a separate address space. This was not only slow for forking large processes but was also mostly redundant, especially when followed by exec for the common use case of launching a new program in a subprocess.

00:08:35.100

To address this common use case, in 1979, 3BSD added a vfork system call as an optimization specific to Fork exec. Instead of copying the address space, it shared the existing one with the parent process since it was going to be replaced by exec anyway. To avoid memory contention, it suspended the parent process until the child called exec. This was efficient for a simple Fork exec, but it's pretty dangerous for any code that might happen to write to memory in between. vfork had lots of other race condition issues to deal with, like bad interactions with signal handlers or other threads in the parent process, and so on.

00:09:14.760

In 2001, the POSIX standard introduced posix_spawn, which wraps up the Fork and exec pattern into a single call so that it can be as efficient as vfork while avoiding all of the different safety and race condition issues. If you can use it, this is the recommended API for launching new processes today.

00:09:42.260

The next important optimization to Fork was copy-on-write, which various Unix systems implemented in the 80s, for example AT&T System V Release 2, a popular Unix system at the time. Copy-on-write leverages features of the Memory Management Unit (MMU) of a CPU architecture like x86_64, and it needs that hardware support to function. An MMU typically uses a page table to map pages in the process's virtual address space to physical memory and handles the translation back and forth.

00:10:07.920

After a Fork, the page table is copied to the child process, and then the OS kernel marks all the pages as read-only, so that any future writes to the page from either the parent or child trigger a page fault. When a write occurs, the page fault is trapped by the operating system, which can then copy the page to a new location in memory, and the page table is updated. This makes Fork both faster and more memory efficient since it doesn't have to copy any memory pages until they're actually modified.

00:10:40.080

This optimization makes a normal Fork nearly as fast as vfork, but since Fork still copies page tables, vfork is still slightly faster for very large processes. Finally, beyond Fork, there are threads. Unix originally used only processes to run programs in parallel on multiple CPUs and to provide general concurrency. However, processes were traditionally relatively heavyweight and took a long time to spin up.

00:11:00.000

Other systems implemented threads, which are sequential flows of control like processes but that share a single address space instead of copying it. Kernel-level threads were added to various Unix systems in the 90s, and a POSIX thread standard called Pthreads was published in 1995. This standard defines that a process contains one or more threads, and for every process, there is an implicit main thread. When a process calls the exit function to terminate, all of the other threads are terminated as well.

00:11:35.640

The standard also states that on Fork, only the calling thread is running in the child process, so no other running threads are copied over. In general, there are three models for mapping user-level threads—threads presented to user programs through an API like Pthreads—to kernel-scheduled threads running under the hood. The first model is many-to-one or pure user-level threads: all of these run on a single OS thread and all the context switching between threads is handled by the program itself.

00:12:29.880

This model can be very fast since there's no overhead of entering and exiting the kernel for a system call, but the threads can't run in parallel on multiple CPUs, they aren't visible to OS utilities or profilers, and a blocking system call can stop all threads if it's not handled properly. The next model is one-to-one, where the kernel-level threads or native threads are mostly passed through directly to the user-level API. This is the model you find in most Pthread implementations today because it performs well and is relatively simple to implement.

00:13:06.840

Finally, there is the many-to-many or hybrid threads model: theoretically, you can get the best of both worlds, where your program can spin up M threads and then the mapping figures out the best way to multiplex them onto N threads in the kernel that can run most efficiently. This model was more popular for Pthread implementations in older Unix systems. Despite the overhead in creating new kernel threads, the one-to-one model has effectively won out in terms of performance and simplicity.

00:13:46.380

That said, the M-to-N model can still be the Holy Grail of performance, not for Pthreads directly, but as a construct within a programming language framework. For example, goroutines in Go are a mature concurrency model with a scheduler that efficiently maps a large number of user-level goroutines onto the minimum number of OS threads necessary to utilize all CPU cores on the system. In modern Linux, threads and processes are actually two types of tasks: there's a more abstract, generic clone call that underlies all task creation, and processes and threads differ only in their degree of resource sharing.

00:14:37.140

So, while traditionally threads are considered lightweight compared to processes, in modern Linux, their performance is quite similar, as they share a good chunk of implementation under the hood. They are also scheduled exactly the same. All right, so that's everything on Fork in Unix. Next up is the Ruby and Rails deep dive.

00:15:21.480

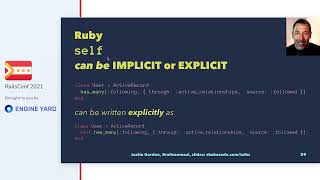

Here's a basic concurrent programming example that creates an array of 10 Ruby threads that each do some heavy work and then waits for all the threads to finish. Ideally, this code would run in parallel on a powerful machine with multiple CPUs, and on some Ruby implementations, it does. Unfortunately, it doesn't in CRuby thanks to the GVL, or Global VM Lock.

00:15:37.900

The one exception to this is when the GVL is explicitly released by a function in Ruby's C API, which can happen for all IO operations, many system calls, and some C extensions. However, since separate processes will have their own separate GVLs, Fork can actually allow similar Ruby code to run in parallel even on CRuby.
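The slide's code isn't reproduced in this transcript, so here is a rough sketch of the kind of threaded example being described. The names `heavy_work` and `run_in_threads` and the sum-of-squares workload are placeholders, not the talk's code.

```ruby
# Placeholder CPU-bound workload.
def heavy_work(n)
  (1..n).reduce(0) { |sum, i| sum + i * i }
end

# Start `count` threads and wait for all of them to finish.
def run_in_threads(count, n)
  threads = Array.new(count) { Thread.new { heavy_work(n) } }
  threads.map(&:value)           # value joins each thread and returns its result
end

results = run_in_threads(10, 1_000)
puts results.size
```

On CRuby the threads take turns holding the GVL while running this pure-Ruby loop, so the work is concurrent but not parallel.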

00:15:55.800

Here's Ruby's API for Fork. There are two ways to call it: with or without a block. If a block is specified, it runs in the subprocess (child process), while calling without a block is similar to the C API, where the caller returns twice: the child's process ID is returned to the parent and nil is returned to the child. I find the block version a little clearer and easier to use, so that's what I tend to use in practice.
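The two calling styles look roughly like this (an illustrative sketch with made-up helper names; each child exits immediately):

```ruby
# Block form: the block runs only in the child process.
def fork_with_block
  pid = fork { exit!(0) }
  Process.wait(pid)
  pid
end

# No-block form: fork returns twice, nil in the child and the child's pid in the parent.
def fork_without_block
  pid = fork
  if pid.nil?
    exit!(0)                     # child branch
  else
    Process.wait(pid)            # parent branch
    pid
  end
end

puts fork_with_block
puts fork_without_block
```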

00:16:13.680

Here's a parallel processing example using Fork. The Fork block is executed 10 times, creating 10 child processes. Each child process does work and then prints a line to standard out with the unique process ID. The parent process waits for all the children to finish. Note that the order in which each child process finishes and writes to standard out is not fixed, so the final printed output is also unordered.
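A sketch along the lines of that example (the slide itself isn't in the transcript, so the random sleep standing in for the work and the name `run_children` are assumptions):

```ruby
# Fork `count` children that each print one line, then wait for all of them.
def run_children(count)
  $stdout.flush                  # avoid duplicating the parent's buffered output in children
  count.times do |i|
    fork do
      sleep(rand * 0.05)         # stand-in for real work
      puts "child #{i} finished in process #{Process.pid}"
      $stdout.flush              # exit! does not flush Ruby's IO buffers
      exit!(0)
    end
  end
  Process.waitall.map { |_pid, status| status.exitstatus }
end

run_children(10)
```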

00:16:55.020

Additionally, all the child processes' output will print to the same terminal, which may seem straightforward, but how are these streams getting mixed? The answer is that standard output is a standard IO stream represented by a fixed file descriptor number (1 by convention), and one of the properties of Fork is that child processes inherit copies of the parent's file descriptors.

00:17:09.840

So the output is already directed into the same stream connected to the terminal, which is the standard out of the parent process. The next example shows how the streams and file descriptors work a bit more explicitly: an anonymous pipe is created at the start before any Forks to pass the output messages from children to the parent.

00:17:29.880

Noting that the child inherits copies of the parent's open file descriptors, the parent reads the messages from the read end of the pipe and then prints them to standard out. This gives the same output and it's still not in order. A further modified example prints the output in order by creating a separate read-write pipe for each child and then reading and printing the output from each pipe in the original order in the parent process.
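The ordered variant, with one pipe per child, might look something like this. It is a sketch under the same assumptions as before: a random sleep stands in for the work, and `ordered_outputs` is a made-up name.

```ruby
# Give each child its own pipe, then read the pipes in creation order.
def ordered_outputs(count)
  children = Array.new(count) do |i|
    reader, writer = IO.pipe
    pid = fork do
      reader.close
      sleep(rand * 0.05)         # children finish in arbitrary order
      writer.puts "result from child #{i}"
      writer.close
      exit!(0)
    end
    writer.close                 # parent keeps only the read end
    [pid, reader]
  end

  children.map do |pid, reader|
    line = reader.read           # blocks until this particular child closes its pipe
    reader.close
    Process.wait(pid)
    line.chomp
  end
end

puts ordered_outputs(5)
```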

00:17:48.300

Finally, the existing gem parallel already wraps up this pipe-Fork pattern into a simple API. All right, so for this next section, we'll look at some more complex use cases. First up is Spring, the Rails application preloader.

00:18:11.340

The way Spring works is that the first time Spring is run, it creates a background server process that initializes your Rails application for a particular environment and then waits for a signal from another process to quickly serve up a new pre-loaded Rails process—for example, to run a console or test command.

00:18:43.400

Here's a process diagram of a Spring server process running, hosting a single Spring app process where the Rails app was fully initialized and two Rails console child processes below it. You can see two bin/rails console commands running, which are the original command processes that the user invoked via the command line interface.

00:19:13.320

These processes must somehow be directly connected to the two Rails console processes. Let's see what exactly is happening and how Fork fits into this picture. Here, we see that the code in Spring invokes Fork in the application class.

00:19:43.459

It receives the three standard streams on a client socket through the recv_io method, then replaces its standard streams with the received ones through the reopen method. It reads some JSON containing command arguments and environment values, and calls Fork. At the end of the Fork block, still within the child process, it calls the provided command.

00:20:08.760

When a new client connects to this Spring app process through a Unix socket, it passes along its standard input, output, and error file descriptors to the Spring app using a special feature of Unix sockets. The system call is sendmsg/recvmsg, and it's wrapped up as send_io/recv_io in Ruby. These streams are then reopened in the Spring app process, and the reopen method in Ruby corresponds to the Unix dup2 system call, which simply copies one file descriptor over another.
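To make the descriptor-passing step concrete, here is a small self-contained sketch. It is not Spring's code: it uses a forked child and UNIXSocket.pair in place of a separate client and server, passes only one stream, and `run_with_passed_stdout` is a made-up name.

```ruby
require "socket"

# Pass the write end of a pipe to another process over a Unix socket,
# and have that process adopt it as its standard output.
def run_with_passed_stdout(message)
  reader, writer = IO.pipe
  client_sock, server_sock = UNIXSocket.pair

  pid = fork do
    client_sock.close
    reader.close
    writer.close                          # drop the inherited copy; use the received one
    received = server_sock.recv_io        # recvmsg under the hood
    $stdout.reopen(received)              # dup2 the received descriptor over fd 1
    $stdout.sync = true
    puts message
    exit!(0)
  end

  server_sock.close
  client_sock.send_io(writer)             # sendmsg under the hood
  writer.close
  client_sock.close
  output = reader.read                    # whatever the child printed to its new stdout
  Process.wait(pid)
  output
end

print run_with_passed_stdout("hello from the other process")
```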

00:20:42.780

This allows the new process to behave as though it magically became a Fork of the existing pre-loaded application, even though it's still an entirely separate process. This diagram shows how the different Spring processes relate to each other. At the bottom left, the Rails console commands run in processes that pass their I/O streams to the server at the top left.

00:21:12.960

The server manages a set of application manager objects, one corresponding to each environment. These spawn separate Spring app processes that load the Rails app from scratch, and the manager passes the streams into these processes. Then, the application class within those Spring app processes calls Fork to create a copy of the pre-loaded app process and passes the streams onto them where the provided command is then executed.

00:21:54.240

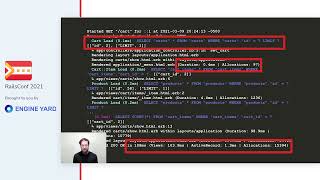

Next up is Puma, a Ruby web server built for concurrency. Here's what Puma's cluster mode looks like, running with eight workers. On the right is the process tree where all eight cluster workers are forked from the parent process.

00:22:12.600

Here's the code from the cluster mode of Puma that calls Fork to create these separate worker processes. To understand this better, here’s a diagram of how the main server loop works in Puma and how its hybrid model of threads and processes works together.

00:22:44.160

When the parent process running in cluster mode first boots up, it loads the Rack application, executes the listen system call, which creates a TCP server socket bound to an IP address and port number, and then calls Fork to create a number of cluster worker processes, each of which runs a server loop.

00:23:04.680

This server loop calls select, which waits for incoming data on their socket, and then accept, which opens a TCP connection that gets passed to one of several threads in a thread pool, one for each worker. In the thread pool, the HTTP request is processed, the Rack application is called, and then the response is written back to the TCP connection.
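A stripped-down sketch of this listen, fork, select, accept structure is shown below. It is not Puma's implementation: there is no thread pool or HTTP handling, and `start_cluster` and `stop_cluster` are hypothetical helpers.

```ruby
require "socket"

# Bind and listen once in the parent, then fork workers that all accept
# from the same inherited listening socket.
def start_cluster(worker_count)
  server = TCPServer.new("127.0.0.1", 0)  # port 0 lets the OS pick a free port
  port = server.addr[1]
  pids = Array.new(worker_count) do
    fork do
      loop do
        IO.select([server])               # wait for a pending connection
        client = server.accept_nonblock(exception: false)
        next if client == :wait_readable  # another worker won the race
        client.puts "handled by worker #{Process.pid}"
        client.close
      end
    end
  end
  server.close                            # workers keep their own inherited copies
  [port, pids]
end

# Abrupt teardown for the sketch; a real server would shut down gracefully.
def stop_cluster(pids)
  pids.each { |pid| Process.kill("KILL", pid) }
  pids.each { |pid| Process.wait(pid) }
end

port, pids = start_cluster(2)
socket = TCPSocket.new("127.0.0.1", port)
puts socket.read
socket.close
stop_cluster(pids)
```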

00:23:38.160

This model of multiple threads on top of Fork processes makes Puma particularly effective for I/O-bound loads compared to single-threaded servers because it can handle multiple requests on the same process. This benchmark shows multi-threaded Puma handling almost twice as many responses per second as single-threaded Passenger, and more than three times as many as single-threaded Unicorn.

00:24:15.780

However, for a long time, there was a tricky performance issue when running multiple threads in Puma. This other benchmark at the top shows multi-threaded Puma's performance falling behind Unicorn and Passenger in a database query test. These graphs are from a performance regression I observed when I first migrated Code.org's Rails app from Unicorn to Puma.

00:25:05.100

You can see the 99th percentile latency had much higher spikes in the Puma graph than in the Unicorn graph, which is a lot more stable overall. A similar issue was reported by an engineer at GitLab, where this same performance regression was preventing them from migrating their apps from Unicorn to Puma safely.

00:25:45.840

This graph shows the percentage of time waiting on a database request was much higher in their Puma app server compared to their Unicorn one. It turns out that this performance issue had to do with how connections on the socket, shared through Fork, were being unevenly routed across processes, causing contention among multiple threads in a process.

00:26:24.600

To illustrate this, all four workers have a thread actively waiting to accept new connections from the socket through those inherited file descriptors. If one worker is busy processing a single connection but has an additional thread available for more work, when the next connection arrives, there's a race among all workers to get it.

00:26:57.360

The first thread that makes it from getting woken up to calling accept wins the race. If that's worker 3, it starts processing the connection in its thread pool, and the two requests in the system are handled in parallel. However, if the next connection gets accepted by worker 1, which is already busy, it adds the connection to its thread pool but might run slower due to contention from thread mutexes or the GVL.

00:27:26.699

In order to run Puma most efficiently, it should route new connections in a balanced way across all processes while still allowing busy workers to accept new connections. One possible approach is to accept connections before the Fork and use a feature of Unix domain sockets to pass file descriptors from the parent process to its children, similar to what we saw in Spring.

00:28:00.030

The actual Unix calls are a bit complicated, but Ruby wraps them up nicely in the send_io and recv_io methods that we saw. The trade-off here is that there's some overhead in having the workers report to the parent their current load, and there would now be a critical thread in the parent responsible for all incoming connections.

00:28:30.120

If that thread gets blocked or crashes for some reason, the whole system could become unresponsive. Another approach is to have the parent do nothing and let each worker bind their own separate socket. It's possible for them to bind to the same port if you use the SO_REUSEPORT option available in the Linux kernel.

00:29:08.940

While this would distribute connections nicely, the implementation of SO_REUSEPORT distributes connections based on a hashing function that wouldn't know how to distribute based on the current load of worker threads in the process. Therefore, there would still be a possibility of connections getting unevenly bunched up on the same process.

00:29:43.920

The approach that was finally adopted is clever and relatively simple. It was contributed by Kamil from GitLab, and it's what current versions of Puma use to address this performance issue. All it does is inject an extra tiny wait in between the select and accept calls when a worker is busy with another connection. This way, any idle workers are more likely to pick up the connection first, and if they're all busy, then a lightly loaded worker will still pick up the connection after a small delay.
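The idea can be sketched in a few lines. This is an illustration only, not Puma's code: `accept_politely` is a made-up name, `busy` is a hypothetical callable reporting whether the worker already has a connection in progress, and the 5 ms default is an assumed value.

```ruby
require "socket"

# Wait for a pending connection, but give idle workers a head start
# when this worker is already busy.
def accept_politely(server, busy, delay: 0.005)
  IO.select([server])                       # wake up when a connection is pending
  sleep(delay) if busy.call                 # tiny wait between select and accept
  server.accept_nonblock(exception: false)  # a socket, or :wait_readable if another worker took it
end

server = TCPServer.new("127.0.0.1", 0)
client = TCPSocket.new("127.0.0.1", server.addr[1])
connection = accept_politely(server, -> { true })
puts connection.class
[connection, client, server].each(&:close)
```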

00:30:31.680

Now let's move on to some tips, tricks, and experiments. As we saw, the earliest and most common use case of Fork is to spawn a brand new process using the Fork exec pattern. However, in modern Unix systems, you should really use spawn instead of doing a Fork exec yourself to avoid dealing with various race condition headaches.

00:30:50.940

You can either use the posix_spawn function directly, or use Ruby's Process.spawn method, which provides a similar API. Even though it still internally does Fork exec, it can handle all the tricky race conditions on your behalf.
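For instance, launching a program and capturing its output with Process.spawn could look like this (a minimal sketch; `capture_spawn` is a made-up helper and `echo` is just a convenient command):

```ruby
# Launch a program with Process.spawn and capture its standard output.
def capture_spawn(*command)
  reader, writer = IO.pipe
  pid = Process.spawn(*command, out: writer)  # redirect the child's stdout into the pipe
  writer.close
  output = reader.read
  _, status = Process.wait2(pid)
  [output, status.exitstatus]
end

output, code = capture_spawn("echo", "hello")
puts "#{output.chomp} (exit #{code})"
```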

00:31:14.640

Besides launching a new process, the next general use case of Fork is to run code in parallel across CPUs. This model is great when you want to run code that's fully isolated by default, since there's no need for mutexes or generally any thread-safe code.

00:31:41.520

This is particularly important for Ruby because it lets you bypass the GVL, which gives it a great advantage versus running threads concurrently. Finally, another use case for Fork is to take advantage of how it efficiently copies the entire memory address space of a process using copy-on-write.

00:32:04.920

This is what lets you do things like preload a large application in the Fork server process, as we saw in Spring. This same technique is also heavily used in other real-world systems, such as a fuzzer like AFL or the Android process launcher called Zygote.

00:32:36.400

Fork can also be used to freeze a consistent snapshot of fast-changing data in memory to run a kind of background save process. This is how Redis provides data persistence—by taking point-in-time snapshots at regular intervals with minimal impact on the main process that continues to serve requests.
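A toy version of this snapshot technique in Ruby might look as follows. It is only loosely modeled on the idea, not on how Redis is implemented; `snapshot_in_background` and the Marshal file format are choices made for this sketch.

```ruby
require "tmpdir"

# Fork a child that writes out the data as it existed at fork time,
# while the parent is free to keep mutating its own copy.
def snapshot_in_background(store, path)
  fork do
    File.binwrite(path, Marshal.dump(store))  # the child sees a frozen point-in-time copy
    exit!(0)
  end
end

Dir.mktmpdir do |dir|
  path = File.join(dir, "snapshot.bin")
  store = { "hits" => 1 }
  pid = snapshot_in_background(store, path)
  store["hits"] = 2                           # parent keeps serving writes
  Process.wait(pid)
  puts Marshal.load(File.binread(path)).inspect
end
```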

00:33:04.800

Here are a couple of tips on how to design a good Fork server. The main piece of advice is to preload as much as possible into the Fork server process before forking, which would be in your initializers in Rails. This can be a balancing act because you want to make sure you don't preload anything too unique that isn't used often by all Fork processes.

00:33:28.920

You also want to avoid preloading data that might change too frequently, which would make it obsolete in a long-running process and take up memory. Beyond that, specifically for Ruby, make sure to perform what's called a nakayoshi fork to optimize memory pages for copy-on-write.

00:33:57.900

More accurately, this is a workaround for a copy-on-write regression introduced with the generational garbage collector in Ruby 2.2. Unfortunately, the attribute that marks an object's age is stored directly with the object instead of in a separate copy-on-write-friendly bitmap. Thus, running garbage collection after a fork that updates an object's age will cause the memory page to be written and no longer shared across processes.

00:34:26.880

By running garbage collection four times in a row, which exceeds the max age of three, it marks all existing objects in memory as old; consequently, their age won't be updated anymore, hence they remain copy-on-write friendly. It's worth mentioning that GC.compact before a fork might theoretically assist with copy-on-write efficiency by reducing fragmentation of Ruby's heap, making existing objects less likely to need to be moved around later.
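The core of the trick fits in a couple of lines. This is a sketch of the idea as described here, not the nakayoshi_fork gem's actual code, and `fork_after_aging` is a made-up name:

```ruby
# Age all surviving objects to "old" before forking so later GC runs
# in the children don't rewrite their age bits.
def fork_after_aging(&block)
  4.times { GC.start }           # four runs exceed the max age of three
  fork(&block)
end

pid = fork_after_aging { exit!(0) }
Process.wait(pid)
```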

00:34:48.360

However, I haven't observed any significant effects in my testing so far with GC.compact; perhaps future profiling work on this can improve memory compaction for copy-on-write efficiency, or I simply haven't tested the right scenarios. Finally, transparent huge pages can destroy copy-on-write efficiency because minor changes in memory may cause larger memory chunks to be copied. Thankfully, Ruby disables this feature automatically.

00:35:22.380

For using Fork for parallel processing, the big general tip is to Fork one process per CPU to maximize performance and memory usage. This way, each process has a full CPU to itself and you can theoretically hit 100% CPU utilization with a heavy workload. However, for I/O-heavy workloads, even more processes or multiple threads per process, as we saw in the Puma server, could work even better.
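As a rough sketch of the one-process-per-CPU idea, the helper below splits an array across Etc.nprocessors forked workers and combines their partial sums. `parallel_sum` is a made-up example, not code from the talk.

```ruby
require "etc"

# Split the work into one slice per worker process and add up the partial results.
def parallel_sum(numbers, workers: Etc.nprocessors)
  return 0 if numbers.empty?
  slice_size = (numbers.size / workers.to_f).ceil
  children = numbers.each_slice(slice_size).map do |slice|
    reader, writer = IO.pipe
    pid = fork do
      reader.close
      writer.write(slice.sum.to_s)  # each child computes its slice independently
      writer.close
      exit!(0)
    end
    writer.close
    [pid, reader]
  end

  children.sum do |pid, reader|
    partial = reader.read.to_i
    reader.close
    Process.wait(pid)
    partial
  end
end

puts parallel_sum((1..1_000).to_a)
```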

00:35:56.460

Beyond this, running multiple fibers in a process can even be more efficient than threads because it reduces OS context switching overhead by handling all scheduling at the user level. Finally, to maximize performance in Ruby 3 and onwards, you might want to start experimenting with Ractors instead of Fork processes whenever possible.

00:36:35.760

From my understanding, Ractors use kernel threads under the hood just like Ruby's Thread object, but they manage separate VM locks, thus enabling multiple Ractors to run in the same process and allowing Ruby code to run in parallel. The trade-off, unlike with threads, is that any object a Ractor touches needs to be marked as immutable or specifically owned by that Ractor, which is its concurrency model.
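A small Ractor sketch, assuming Ruby 3.0 or later (where Ractor is still marked experimental): the workload and the names `sum_of_squares` and `run_in_ractors` are placeholders, and the result is fetched with `value` where available and `take` otherwise, since the method name differs between Ruby versions.

```ruby
# Placeholder CPU-bound workload.
def sum_of_squares(n)
  (1..n).sum { |i| i * i }
end

# Run one Ractor per input; each gets its argument passed in explicitly,
# because a Ractor block cannot reference outer local variables.
def run_in_ractors(sizes)
  ractors = sizes.map { |n| Ractor.new(n) { |limit| sum_of_squares(limit) } }
  ractors.map { |r| r.respond_to?(:value) ? r.value : r.take }
end

puts run_in_ractors([1_000, 2_000, 3_000]).inspect
```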

00:37:06.360

That's not to say that it will be easy to run an entire Rails app across multiple Ractors instead of multiple processes very soon; however, for small chunks of isolated code, this could still be a good alternative to using processes for parallel processing.

00:37:37.860

The main advantage of Ractors over Forking processes is not actually performance; it's that they can improve memory efficiency, since copy-on-write memory isn't 100% efficient in sharing memory due to fragmentation and the movement of objects around both in the Ruby heap and the low-level memory allocator.

00:38:19.200

Message passing between Ractors could theoretically perform better than IPC like pipes communicating between processes; however, I haven't seen any benchmarks confirming this advantage yet, so that's still pending validation.

00:38:49.560

Looking toward the future, if and when Ractors become more mature, using many fibers per Ractor and one Ractor per CPU could potentially reach that M-to-N threads Holy Grail of performance I mentioned earlier.

00:39:12.060

Here's my final set of tips to help make your code Fork safe. First, remember that exit handlers are copied to the child and run in both processes, so you need either to add logic in the handler to ensure the process ID still equals the parent before executing, or you should call exit! when terminating the child to bypass any handlers registered by the parent.
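One way to write such a guard is a small wrapper that remembers which process registered it. This is a sketch; `parent_only` is a made-up helper, and the pipe is only there to show which process ran the cleanup.

```ruby
# Wrap a cleanup block so it only runs in the process that created it.
def parent_only(owner_pid = Process.pid, &cleanup)
  -> { cleanup.call if Process.pid == owner_pid }
end

reader, writer = IO.pipe
guard = parent_only { writer.syswrite("cleanup ran in #{Process.pid}\n") }

pid = fork do
  guard.call                     # no-op: this is not the owning process
  exit!(0)                       # exit! also bypasses any registered exit handlers
end
Process.wait(pid)

guard.call                       # runs: this is the owning process
writer.close
print reader.read
```

In real code the guard would be registered with something like `at_exit(&parent_only { ... })` rather than called by hand.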

00:39:35.060

Next, be careful about sharing open file descriptors across processes. Make sure to protect any open network connections by closing them in the child process to avoid accidental usage by both processes simultaneously.
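As a rough sketch of that advice, the example below uses a `UNIXSocket.pair` as a stand-in for a real network connection (a database client, for instance). The names `conn` and `remote` are hypothetical.

```ruby
require "socket"

# Stand-in for a connection the parent opened before forking.
conn, remote = UNIXSocket.pair

pid = fork do
  # The child inherited copies of both descriptors. Closing them here only
  # drops the child's copies; the parent's connection is unaffected.
  conn.close
  remote.close
  # A real child would open its own fresh connection at this point.
  exit!(0)
end
Process.wait(pid)

# The parent's connection still works after the child closed its copies.
conn.write("ping")
reply = remote.recv(4)
puts reply
```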

00:40:04.580

Finally, remember that background threads aren't copied to the child after a Fork. You'll need to restart any important threads in the child after the Fork or simply design your system with the expectation that they'll only be running in the parent process and make sure to clean up any associated state afterwards.
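Here's a small sketch of what that looks like in practice. The `start_heartbeat` helper is made up for the example; it stands in for any background thread your app depends on.

```ruby
# Hypothetical background worker, e.g. a metrics reporter or heartbeat.
def start_heartbeat
  Thread.new { loop { sleep 0.05 } }
end

heartbeat = start_heartbeat
reader, writer = IO.pipe

pid = fork do
  reader.close
  # Only the thread that called fork survives in the child.
  writer.puts("inherited thread alive: #{heartbeat.alive?}")
  writer.puts("threads in child: #{Thread.list.size}")
  # Restart anything the child still needs.
  heartbeat = start_heartbeat
  writer.puts("restarted thread alive: #{heartbeat.alive?}")
  writer.close
  exit!(0)
end

writer.close
report = reader.read.lines.map(&:chomp)
Process.wait(pid)
puts report
```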

00:40:24.720

For the last part of this talk, I have a couple of Fork-related experiments I'd like to share. The first one is a Puma Fork eval server, which is a simple minimal Fork server interface I've written as a Puma plugin.

00:40:50.880

The idea of this plugin is to quickly and efficiently run code in your pre-loaded Rack app. The general use cases are to spin up an interactive console like Rails console, run one-off or scheduled rake tasks that might need access to the Rack application, or even to spin up asynchronous job processors running on a separate process while still referencing parts of the Rails app in their application logic.

00:41:20.540

I like to think of this experiment as a kind of Spring for production. It doesn't create any extra application copies in memory like a separate Spring server would. Instead, it Forks to run commands from the existing pre-loaded Puma process.

00:41:45.840

There's no risk of having stale app versions or keeping the Spring process in sync with Puma. Instead, it simply runs the exact code already being served to your users by Puma, allowing you to manage updates in line with your production deployment lifecycle.

00:42:10.740

This is the bulk of the plugin code. On the left is the server code that runs in a background thread on the Puma parent process, and on the right is the client code invoked by a command-line script.

00:42:42.600

The server just listens on a Unix domain socket and runs Fork for each new socket connection it receives. Within this Fork, the child process replaces its standard input, standard output, and standard error streams by calling reopen with the three file descriptors it receives through recv_io.

00:43:20.040

It then reads a string from the socket until the client closes the connection and subsequently calls eval on the string it received. The client code is even simpler; it opens a connection to the server's Unix domain socket, sends its three standard streams using send_io, and writes the code it wants to eval to the socket. That's it!
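The flow just described can be approximated in a single script. This is a sketch under assumptions, not the plugin's actual code: the socket path, the method names `start_fork_eval_server` and `fork_eval`, and the choice to run server and client in one process are all made up for the demonstration. It relies only on Ruby's standard `UNIXServer`, `UNIXSocket`, `send_io`, and `recv_io`.

```ruby
require "socket"
require "tmpdir"

# Hypothetical socket path, for illustration only.
SOCKET_PATH = File.join(Dir.mktmpdir("fork-eval"), "eval.sock")

# Server side: in the plugin this would run in a background thread of the parent.
def start_fork_eval_server(path)
  server = UNIXServer.new(path)
  Thread.new do
    loop do
      conn = server.accept
      pid = fork do
        # Swap the child's standard streams for the three descriptors the client sent.
        [$stdin, $stdout, $stderr].each { |stream| stream.reopen(conn.recv_io) }
        code = conn.read   # returns once the client closes its write side
        eval(code)         # runs inside a copy of the already-loaded process
      end
      conn.close           # the parent keeps no copy of this connection
      Process.detach(pid)  # reap the child in the background
    end
  end
end

# Client side: send three streams, then the code, then wait for the child to finish.
def fork_eval(path, code, stdin: $stdin, stdout: $stdout, stderr: $stderr)
  UNIXSocket.open(path) do |sock|
    [stdin, stdout, stderr].each { |stream| sock.send_io(stream) }
    sock.write(code)
    sock.close_write
    sock.read # blocks until the child exits and the server side of the socket closes
  end
end

start_fork_eval_server(SOCKET_PATH)

# Demo: capture the child's standard output through a pipe.
out_reader, out_writer = IO.pipe
File.open(File::NULL) do |null_in|
  fork_eval(SOCKET_PATH, "puts 6 * 7", stdin: null_in, stdout: out_writer)
end
out_writer.close
captured = out_reader.read
puts captured
```

Because eval runs whatever string arrives on the socket, anything along these lines should only listen on a socket that trusted local users can reach.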

00:43:51.300

This is basically just a stripped-down version of the core logic that Spring uses to run its own Fork server that can run commands in a pre-loaded app. It’s not completely bulletproof yet; it doesn't forward signals to the forked process like Spring does, and it hasn't been tested on all the command types that Spring supports. Nevertheless, I thought it would be an interesting start to share.

00:44:25.320

The next experiment is also based on Puma. This one's not a plugin, but a new feature that was merged as a pull request last year and released as part of Puma 5. The general idea here is that it's possible to optimize copy-on-write efficiency on long-running servers, on the assumption that as a server handles live traffic, the app grows and accumulates additional objects in memory that could be shared across all the running worker processes.

00:45:00.240

By reforking workers from a single running process, it's possible to reshare the larger application and then re-optimize the copy-on-write memory pages shared across them. To make reforking possible, I had to change the process hierarchy around a bit. Instead of forking all of the workers directly from the parent, when the fork_worker option is enabled in Puma, only worker zero Forks directly from the parent.

00:45:31.440

All other workers Fork off of that worker after the app is loaded in the server. Reforking can happen automatically after a certain number of requests, or via a control signal sent to the parent process. When it does, all workers greater than zero get shut down and are recreated from worker zero again by Fork.
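For reference, enabling this in a Puma 5 config file looks roughly like the fragment below. The worker count and request threshold are illustrative values, not recommendations.

```ruby
# config/puma.rb (fragment)
workers 4          # illustrative worker count
fork_worker 1000   # refork automatically after this many requests (illustrative value)
```

As far as I know, a manual refork can be triggered by sending SIGURG to the Puma parent process or by running `pumactl refork`; check the documentation for your Puma version before relying on either.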

00:46:10.680

Here's what the process tree looks like with fork_worker enabled. For testing the performance of refork, I wrote a simple contrived example Rack app that lazy loads a really big object as a global variable. In the real world, ideally, we could refactor this app to preload this object outside the request handler, so it gets loaded before the initial Fork. However, this is a contrived example, and sometimes this kind of refactoring isn't as straightforward for large, complex apps.
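A contrived app along those lines might look like the sketch below. The names `APP`, `$big_object`, and `BIG_OBJECT_SIZE` are hypothetical, and the size is kept tiny here; the benchmark would use something far larger.

```ruby
# Hypothetical size; kept small so the sketch runs instantly.
BIG_OBJECT_SIZE = 1_000

APP = lambda do |_env|
  # Lazy load: the object is built on the first request a worker handles,
  # which is after the initial fork, so each worker ends up with a private copy.
  $big_object ||= Array.new(BIG_OBJECT_SIZE) { |i| "item-#{i}" }
  [200, { "content-type" => "text/plain" }, ["#{$big_object.size} items loaded\n"]]
end

# In a config.ru this would be wired up with `run APP`.
status, _headers, body = APP.call({})
puts body.first
```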

00:46:38.880

For this benchmark, I launched the Puma server, ran the wrk load testing tool against it for about 20 seconds, then used a function called pss to check the memory usage. I ran the refork command to trigger a refork, ran wrk again, and then ran pss again to check the final memory usage and see the difference. PSS, or Proportional Set Size, is the best measurement of memory usage for a process that shares copy-on-write memory.

00:47:10.920

PSS is defined as the process's unshared memory (USS) plus a proportion of each shared memory chunk, split evenly across all the processes sharing it. To accurately measure copy-on-write optimized processes, you must use PSS. If you measure RSS (Resident Set Size) instead, the shared memory would be counted in full once for every process sharing it. You can measure PSS using the smem utility or by directly reading a special path on the filesystem.

00:47:50.880

For this test, I used a Ruby script to read the process IDs of the Puma workers, read their PSS values, and then summed them all together. Here are the final results of the test. Before the refork, our memory usage was almost 5 gigabytes, and after the refork, it was reduced to around 1 gigabyte.
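A script like that can be quite short. The sketch below is not the one used in the test; it assumes Linux, where per-mapping `Pss:` lines are exposed in `/proc/<pid>/smaps`, and the method names are made up. The parsing is kept separate from the file read so it can be tried on sample text.

```ruby
# Sum every "Pss:" line (reported in kB) from the text of an smaps file.
# Related fields such as "Pss_Dirty:" are deliberately not matched.
def pss_kb_from_smaps(text)
  text.each_line.sum { |line| line[/\APss:\s+(\d+) kB/, 1].to_i }
end

# Linux only: read one process's PSS from procfs.
def pss_kb(pid)
  pss_kb_from_smaps(File.read("/proc/#{pid}/smaps"))
end

# Total PSS across a list of worker PIDs.
def total_pss_kb(pids)
  pids.sum { |pid| pss_kb(pid) }
end

# Try the parser on a small, made-up smaps excerpt.
sample = <<~SMAPS
  Rss:                 120 kB
  Pss:                  40 kB
  Pss_Dirty:             8 kB
  Rss:                   4 kB
  Pss:                   2 kB
SMAPS
sample_total = pss_kb_from_smaps(sample)
puts "sample PSS: #{sample_total} kB"
```

Because each shared page is split across the processes sharing it, summing PSS over all workers counts every page once, which is why the total is a fair estimate of the cluster's real footprint.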

00:48:35.280

Here’s another view of that, using smem to delve into more detail. This is the cluster after the first round of work, with each worker's PSS at about 726 megabytes, most of which was unshared (USS). The view immediately after running refork shows the RSS still just as high, but the PSS drops to around 100 megabytes per process, and the unshared amount (USS) is tiny, only about 5 megabytes per process.

00:49:22.080

After subsequent load, there's about 100 megabytes of unshared memory per process, and the total PSS is now a little under 200 megabytes per process. This is still much lower than the original PSS of about 750 megabytes per process, even though the RSS remains high at about 750 megabytes as well.

00:50:06.040

Using refork with Puma can reduce your memory usage by up to 80%. This result is quite dramatic. I wanted to show you what this might look like for a real production Rails app. This is a comparison test that I ran on two production front-end servers running Code.org's Rails application—both servers serving live traffic and both on AWS EC2 instances with 40 CPU cores.

00:50:32.280

This graph shows total memory usage as a percentage on the left Y-axis. The orange line indicates the baseline instance, while the blue line reflects the instance where I invoked the refork command a couple of times. You can see two spikes in the graph where I triggered the command. The green line shows the calculated difference as a reduction percentage—the Y-axis on the right.

00:51:24.780

You can see that immediately after the refork, the memory usage drops significantly from 40% down to around 20%, but then it creeps back up again as memory gets modified, and the processes start to share less total memory. Nevertheless, the refork server has consistently used significantly less memory than the original server—around 20-30% less in this app.

00:52:06.000

This is a real production app, so whether this technique is beneficial or not likely depends on your app's unique workload and how important memory efficiency is in your environment. In any case, I hope this experiment was interesting to some of you.

00:52:50.040

That's everything I have to share about Fork. Thank you very much for listening. I hope you learned something from my talk, and I'd like to thank the RailsConf organizers for giving me the opportunity to speak and present on this topic. To all the Rails developers, organizers, and maintainers, thank you for fostering such a great community and delivering excellent open-source software.

00:53:06.060

I look forward to more conversations in our RailsConf Q&A sessions and beyond! Thank you very much.