00:00:10.480

Thank you very much. We are very excited to present this workshop to you today.

00:00:15.759

It's a bit of an experiment in and of itself. We have a vast body of knowledge to cover.

00:00:21.760

We’ve distilled that down to what we think are the fundamentals for you as software engineers.

00:00:28.720

As Penelope said, this is a very interactive workshop, so we're going to have you turn and chat with people in your groups.

00:00:33.920

Then we have Daniella and Ty who will be running some microphones around at the outset.

00:00:38.960

I wanted to start off by getting a sense of who is in the room.

00:00:44.960

We do have to do this little dance of not getting too close to each other.

00:00:51.840

So, is anyone here a site reliability engineer or an on-call engineer?

00:00:57.600

Okay, well raise your hand if you've ever been on-call.

00:01:02.879

This will work; this is going to work.

00:01:07.920

It's fine if you haven't been on-call. That's totally cool.

00:01:14.240

I just wanted to get a sense—do we have any marketing, product, or customer folks in the room?

00:01:20.799

Great! We have a representative voice. Excellent!

00:01:27.119

All right, sweet. Well, let's get into it.

00:01:34.960

So, who are we? I am a researcher with Jeli.io.

00:01:40.560

I've been working in high-risk, high-consequence industries for most of the last 15 years.

00:01:46.079

My interest in software came about during my doctoral program at The Ohio State University.

00:01:52.079

I came from an industry where things were very dramatic. There was a lot of high speed, high pressure, and adventure.

00:01:58.240

When I was first told I was working in software, I was like, 'What? Really?'

00:02:03.920

As I started to get more involved in understanding what you all do, I realized that this is actually the quintessential occupation.

00:02:09.119

It’s the place to really understand cognitive work, and to really understand what we’re going to talk about here today: joint cognitive systems.

00:02:15.760

I’m going to pull in examples from different industries and kind of draw that through line for you.

00:02:22.400

My name is John Allspaw. As Laura mentioned, I became addicted to, or really enamored with, seeing connections.

00:02:29.040

I come from a software background with an infrastructural bent.

00:02:34.400

In my early career, I worked in a bunch of different places.

00:02:41.040

None of that background is particularly interesting, so I don't want to spend too much time on it.

00:02:47.280

The point I want to get across is that workshops like this, and talks that touch on similar themes, are all part of what I'm pretty confident is a slow but needed shift in the industry.

00:02:53.680

What we will talk about today is so closely related to what you all experience that we sometimes don't pay much attention to it.

00:02:58.959

I will describe that later.

00:03:04.959

I think we should also mention that we met at Lund University in the Human Factors and Systems Safety Design program.

00:03:10.879

I was struck by the similarities between the work I was doing and the things John was seeing in the software world.

00:03:16.879

This was almost ten years ago, and we are both still continually learning.

00:03:22.239

We are continually surprised by what we find when we actually look closely at people doing real work in real-world contexts.

00:03:28.560

It’s remarkably sophisticated, and it is fascinating.

00:03:34.400

But it is a long journey. Our goal here today is to act as coaches.

00:03:40.800

If you spent two hours on the pitch with a coach, you might learn some of the movements and some of the plays, but you won't necessarily bend it like Beckham.

00:03:46.800

So, we want to set that expectation right off the bat.

00:03:52.320

What we are doing here is challenging some of the paradigms, some of the ways you've been thinking about your work.

00:03:58.800

That includes how you develop technology, how you deploy it into fields of practice, how your users interact with it, and how you interact with the tools you have.

00:04:05.680

At the end of the day, we hope you walk away with questions and a lot of interest in pursuing this further.

00:04:10.720

This is an experiment that we see as a mission.

00:04:16.000

We have two hours to talk about a core concept that touches every part of an entire field of engineering.

00:04:23.360

The concept is not very well known, yet the bar is significantly high.

00:04:30.080

If we do our job here, and you all are into it, you might actually leave somewhat disappointed or slightly unsatisfied.

00:04:38.559

That is, you will want to know more than we can possibly get to today.

00:04:45.440

Still, I believe that’s a win for us.

00:04:53.440

One of the things I want to clarify is that we are going to talk about multiple interacting fields.

00:05:00.800

You may have heard of some of them, and others you may not have.

00:05:07.440

So, how many people in the room actually know what resilience engineering is, or have at least heard the term?

00:05:14.080

Okay, how many of you have seen something on Twitter, or have heard some talks at different conferences?

00:05:22.239

What we're going to do is break down the connections between these different fields and show how they relate to software engineering.

00:05:29.840

As we were discussing this, we thought about how to make these connections for people.

00:05:36.480

We want to avoid getting into nerdy academic talk.

00:05:43.200

John had a really good example.

00:05:50.560

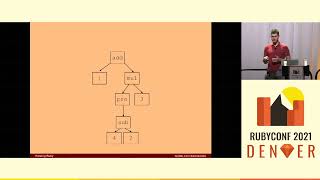

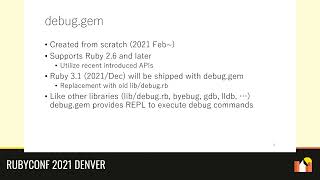

So, see this green bit here? This smaller circle—joint cognitive systems—is a core idea.

00:05:57.680

Everything within the multidisciplinary field of cognitive systems engineering relates and connects to it.

00:06:02.400

Think of it a little bit like the field of statistics, if you’re familiar with it.

00:06:10.640

Is all of it about normal distributions, averages, mode, and median? No.

00:06:15.200

It’s certainly a core part of it.

00:06:22.880

It would be very weird to talk about statistics without having that as a bit of a framing.

00:06:29.760

This workshop isn't about cognitive systems engineering as a whole, because we don’t have time for that.

00:06:36.160

Laura just wrote a dissertation on it.

00:06:41.120

So we’re just going to focus on this little bit.

00:06:46.960

Does that make sense?

00:06:54.000

We wanted to give you the bottom line up front.

00:06:59.440

At a very high level, these are the core concepts we will discuss.

00:07:05.680

The first point is that all work is cognitive work.

00:07:12.639

We will break this down a little bit more and discuss what it means to perceive changes and events in your world.

00:07:18.320

What does it mean to make sense of those changes?

00:07:24.560

How do you assess the meaning and implications of that change as it's happening?

00:07:30.080

The second core point is that work is always distributed across different agents.

00:07:36.640

In the joint cognitive systems world, we will predominantly discuss how cognitive work is shared.

00:07:42.080

This thinking involves both machine agents and human agents.

00:07:48.320

So, when I talk about machines, I am using that interchangeably with automation or various forms of artificial intelligence.

00:07:54.320

The level of analysis we will look at is the cognitive work shared across these agents.

00:08:01.080

The third piece is that these machine coworkers can sometimes be unhelpful.

00:08:07.200

They don’t always help you, and they can be anything from slightly frustrating to catastrophic.

00:08:13.439

Lastly, we can design and develop these systems of work for greater safety and productivity, and for more resilient and robust systems overall.

00:08:19.680

I think we are at a disadvantage because I didn’t manage to get the computer to show me the upcoming slides.

00:08:26.240

So now I have no idea what’s going to come next.

00:08:34.480

Well, we wanted this to be an interactive workshop, so we have a series of exercises.

00:08:40.160

First, we want to understand what a joint cognitive system is.

00:08:46.640

Imagine that it’s the end of your day.

00:08:51.200

You know the dog has been taken for a walk, or you’ve finished dinner.

00:08:57.760

You’re all set to settle down and go to bed.

00:09:03.080

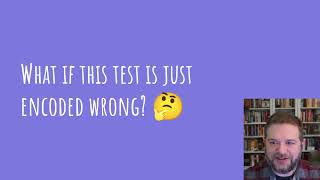

Then you receive an alert of some sort.

00:09:10.320

You look at it and it looks something like this.

00:09:15.680

I want to ask you a question, but first, I want to see if you will humor us.

00:09:22.240

We would love for every two tables to form a group.

00:09:29.600

We could have one, two, three, four teams.

00:09:34.960

I want you to spend a couple of minutes talking about this question.

00:09:40.160

You just received this page alert.

00:09:47.520

What do you think you would do next?

00:09:52.560

That’s it! Yes, granted, there is lots in here.

00:09:59.680

We want you to talk with your neighbors about what might occur to you in this hypothetical world.

00:10:05.680

So, spend two minutes talking about anything that comes to mind.

00:10:10.240

Sounds like you all have some ideas to discuss.

00:10:18.160

Of course, you might be saying, 'I don’t know what the hell is happening or what the story is here.'

00:10:25.040

How do we want to do this? We will have Daniella and Ty in the front and back.

00:10:32.480

If you have something to say, raise your hand, and they will run over.

00:10:38.000

What would you do next? What’s an idea that came to you?

00:10:44.800

A brave soul in the back goes first.

00:10:51.200

I'd check for urgency. How quickly has this become a problem? How quickly is it going to become a bigger problem?

00:10:58.480

Can I ask you just real quick, how do you check for urgency?

00:11:05.280

Hopefully, I’ve got something with charts showing how much free space is left, or other issues.

00:11:10.480

Excellent! What else? Any other ideas?

00:11:17.000

Being a DevOps engineer, maybe I would just go back to sleep.

00:11:23.520

Excellent! Legit! We have someone in the back.

00:11:29.760

I would ask why we are getting this notification at 99%, not sooner.

00:11:35.520

I’d probably recalibrate that.

00:11:41.760

There’s never been a film where you’re like, 'Why is this not fitting?'

00:11:48.080

You said that historical context might matter, like if we’ve seen this alert before.

00:11:56.000

We'd react differently depending on our history with it.

00:12:02.160

I actually thought the same thing, because right now it's a warning.

00:12:08.400

We have a warning, which means this may be something we don’t care enough to do anything about.

00:12:14.640

Or someone has been ignoring it for too long.

00:12:20.320

So, do you just go back to bed?

00:12:26.480

Probably.

00:12:33.760

This is excellent! Thank you! Anyone else?

00:12:39.360

I would probably log into Web 121 and make sure it’s not a false positive.

00:12:45.840

Some form of verification, yes.

00:12:53.920

This is excellent. You all did a great job.

00:12:59.760

So, I have a question for you.

00:13:05.600

If this was your human colleague who phoned you up in the middle of the night and just told you this and then hung up, how happy would you be with them?

00:13:14.320

This is fundamentally what we’re talking about.

00:13:21.119

When we discuss joint cognitive systems, the question is: is your colleague, your machine colleague, performing well?

00:13:27.839

It can be both irritating and confusing.

00:13:33.840

Sometimes, it's a mild inconvenience, while at other times it can be deadly.

00:13:39.199

This stuff truly matters, and it is growing in importance.

00:13:45.440

We keep increasing the speed and scale of critical digital infrastructure and moving more of society's core functions into the cloud.

00:13:52.640

So, here's where we will go.

00:14:01.280

We've already gone through one of the exercises.

00:14:07.760

Now John is going to talk a little about how this thinking developed and give you some background.

00:14:14.080

There are 40 years of literature and studies in high-risk, high-consequence domains.

00:14:21.440

That’s where we draw a lot of this theory and knowledge from.

00:14:28.320

We will deep dive into what cognitive work is, so we can break that down a little more.

00:14:35.200

We will do another exercise there and then explore what happens when we have one machine and one human.

00:14:41.680

Is that a different interaction than one with multiple machines and humans?

00:14:46.960

Spoiler alert: it is!

00:14:53.680

Then, we’ll have you undertake a little activity.

00:14:59.760

We want to understand how we think about distributed cognition in these types of scenarios.

00:15:06.720

Now, I'll give an extremely abbreviated and cherry-picked history, starting around World War II.

00:15:12.440

In 1943, the US Army was like many large organizations.

00:15:18.880

It was fashionable to focus on hiring the right people.

00:15:24.560

You could, in the process of hiring, work out what they were good at.

00:15:31.280

So, you could fit the person to the job.

00:15:38.480

At that time, the most advanced piece of military equipment was the B-17, known as the 'Flying Fortress'.

00:15:44.000

The issue in '43 was that they were crashing.

00:15:51.840

This was not a case of being shot down or damaged in battle.

00:15:58.239

They would land on runways, and about halfway down, the landing gear would retract, and they would belly flop.

00:16:06.560

This was a significant issue, often with bombs still on board.

00:16:13.040

This was happening with enough frequency that the army was concerned.

00:16:20.160

It couldn't be the planes because they were amazing.

00:16:26.560

It had to be something about the pilots.

00:16:34.560

I'll show you a picture of what these planes looked like.

00:16:41.320

They brought in Alphonse Chapanis, one of the first engineering psychologists.

00:16:48.960

The army said, 'We have all the pilots here for you to interview.'

00:16:55.680

He asked to see where they work.

00:17:01.600

They brought him to a cockpit.

00:17:08.000

When he arrived, he pointed out a design flaw.

00:17:14.080

The flap control, which is what you need for slowing the plane, was installed right next to the landing gear control.

00:17:22.640

He asked about the other planes, like the P-47.

00:17:27.680

Its controls were in different places entirely.

00:17:33.440

As it turned out, most accidents happened with pilots who had previously flown the P-47.

00:17:39.440

So this finding informed design changes to prevent these accidents.

00:17:45.040

To this day, you can see the distinctions in flap controls and landing gear controls.

00:17:52.720

As James Reason later put it, we can't change the human condition.

00:17:58.640

However, we can change the conditions under which humans work. This was groundbreaking.

00:18:05.200

This perspective enabled future studies of human factors.

00:18:12.080

At the same time, a set of assumptions took hold: safety can be encoded into technology.

00:18:19.440

Accidents can be avoided with more automation.

00:18:25.600

Procedures can be precisely specified.

00:18:32.800

Operators need to follow the procedures to get work done.

00:18:40.400

In aviation and nuclear power, there’s a running joke that it only takes a dog and a human to run an aircraft carrier.

00:18:48.000

The human feeds the dog, and the dog makes sure the human doesn't touch anything.

00:18:53.440

This notion persisted: that humans are better at certain tasks, while machines are better at others.

00:19:01.600

This lasted until March 28, 1979, a significant date.

00:19:09.040

Those who know about the Three Mile Island accident know how pivotal it was.

00:19:16.480

This completely flipped our understanding of how humans make decisions.

00:19:22.000

I won't go into the details of the accident, but it changed the game.

00:19:28.320

Safety and cognitive work are better understood through the lens of automation.

00:19:34.240

Automation introduces challenges and risks but is necessary.

00:19:41.120

Rules and procedures can’t guarantee safety by themselves.

00:19:47.840

Raise your hand if you work somewhere that has runbooks.

00:19:53.920

Raise your hand if you think you could follow these procedures exactly and not have a bad time.

00:20:00.400

Many won’t, as the procedures rarely match reality.

00:20:06.320

Methods for assessing risk rely heavily on human error categories, which often fail to represent how things actually are.

00:20:14.560

Linear models of accidents, like dominoes falling, also fall short, leading to misunderstandings.

00:20:23.280

In the mid-80s, scholars came together following the fissures revealed by Three Mile Island.

00:20:30.640

They articulated the concept of a joint cognitive system.

00:20:37.840

The kernel of this involves two perspectives.

00:20:44.560

First, people using technology have a mental model of the technical system.

00:20:51.680

They understand its functions, limitations, how it fails, and how to respond.

00:20:58.080

In turn, the technical system also has a model of the user.

00:21:05.040

That model dictates how well that system supports the user.

00:21:12.080

This perspective shift is pivotal.

00:21:18.960

This brings us to today’s landscape.

00:21:26.000

But frankly, I have a qualm with how this is often presented.

00:21:32.640

That's why I'm standing here: there's a lot of legacy residue from these old models.

00:21:39.840

As a researcher, I have many opinions about where the industry is today.

00:21:47.920

I would like to survey you in the room about how you feel your organization handles these concepts.

00:21:54.640

How many believe their company thinks accidents can be avoided through more automation?

00:22:01.200

Kind of maybe a few on the fence.

00:22:07.680

How many feel your company thinks it is necessary but also introduces new challenges?

00:22:13.120

Great! That's inspiring to see!

00:22:18.760

What about the idea that everything can be put in runbooks if the documentation is up to date?

00:22:26.080

I want to talk to you later.

00:22:32.879

Remember this is your company's view, not your own.

00:22:39.120

Rules and procedures are always underspecified.

00:22:46.919

What about engineers having to just follow the procedures?

00:22:53.679

Nice! Okay, a few in the back.

00:23:01.279

As we are discussing these views, I think it’s important for us to recognize that every organization faces these gaps.

00:23:06.879

What kinds of support do you have? It’s crucial to recognize them.

00:23:15.919

We will begin to make the case for addressing those gaps and nurturing those opportunities.

00:23:20.960

We want to take a look at broadening our perspectives on joint cognitive systems.

00:23:28.160

We also want to broaden our understanding of the complex nature of systems, which have many inherent challenges.

00:23:34.960

This further emphasizes the coordination needed among multiple interacting agents.

00:23:40.880

If we know who is engaged in this interaction, we can bring in others to support the system.

00:23:49.480

No single agent has to bear all the load.

00:23:55.919

One takeaway from today is recognizing the role of different agents in problem-solving.

00:24:03.039

The collaboration of multiple actors brings numerous benefits.

00:24:09.760

The performance of the system improves through their interactions.

00:24:16.080

Collaboration helps bridge gaps between humans and machines.

00:24:24.080

As we explore lessons learned from both successful and unsuccessful events, we recognize that improvement is necessary.

00:24:30.800

Persistent gaps highlight the need for adaptive solutions.

00:24:38.240

We can enhance cognitive dynamics in shared systems.

00:24:45.440

To thrive, we must strategize to achieve collaborative comprehension.

00:24:51.920

We cannot ignore the realities we face.

00:25:00.080

Understanding the substantial nature of our challenges can lead to better outcomes.

00:25:06.239

In articulating what we must overcome, our explanations become more joyful.

00:25:12.240

Presenting the costs of cognitive work can help us understand its complexities.

00:25:18.080

Being conscious of workloads helps us delegate.

00:25:23.680

That awareness iteratively helps improve performance across an organization.

00:25:30.080

In moments of uncertainty, learning about others’ experiences is vital.

00:25:43.040

So, at the end of the day, what are some general conclusions?

00:25:48.960

Recognizing the models we engage with in shared cognitive systems is incredibly valuable.

00:25:55.760

You can now see the importance of collaboration in the systems we engage with.

00:26:03.760

As you move forward in your work, we encourage you to bring this new understanding to how you approach problem-solving.

00:26:12.000

Thank you. We welcome any follow-up questions or requests for clarification.

00:26:20.000

Feel free to reach out to us regarding any concepts we covered in today’s workshop.

00:26:29.000

Thank you once again for your participation!