00:00:00.900

Hello everyone, it's a pleasure to be here. My name is Cristian Planas, and I’m excited to share some insights on performance in Rails.

00:00:24.199

I've been in the audience for many years, watching presentations from my home in Spain. So, being here is truly a dream come true for me. One thing I've noticed while watching countless conferences is that great speakers often provide a quick summary in their first two slides, which allows the audience to catch key points before moving on to the next talk.

00:00:32.340

With that in mind, I’ve tried to summarize my ideas on this topic in just two slides. The first slide presents the main idea, while the second slide follows closely. Performance is a topic I think about very often, and I find it intriguing because while Ruby has its own unique challenges, many of them are shared with other programming communities like Python and Django.

00:00:49.500

In fact, I've developed microservices in Java, and ultimately, you’ll discover similar bottlenecks across languages. This led me to develop a comprehensive explanation regarding the origins of the myths surrounding Ruby’s performance issues. But to understand this, you’ll need to accompany me on a bit of time traveling. Let’s set the scene and travel back to 2010.

00:01:19.560

This is me back then, sporting a rather fabulous jumper, I must say. I was working on a photo site for movie magazines, and I wanted the critics to be able to write their own reviews, which meant I had to implement user authentication.

00:01:37.380

Authentication is a tough problem, as many of you already know. I rolled up my sleeves and conducted thorough research into this issue, eventually finding a solution. You might know the popular gem called Devise. I installed it quite simply, and although I didn’t fully understand how it worked, the point is, it worked.

00:01:58.259

For me, back when I was 22, it didn’t feel like such a hard problem at all. Fast forward a bit to 2012. I'm quite proud of this period; I started my own startup and found myself as the CTO, also known as the only engineer on the team.

00:02:11.580

We achieved success, but with that came the need to scale our application, a challenge I believe is the reason you're all here today. Again, I dove deep into researching this scaling issue before discovering a gem called ActiveRecord.

00:02:28.800

I assume everyone here is familiar with it. I initialized ActiveRecord with sufficient parameters and, surprisingly, everything just worked beautifully without it being overly complicated.

00:02:41.099

But scaling is quite a tricky topic; it feels like you might encounter a scenario where you just copy-and-paste some Rails screenshots, tweaking names here and there. The reality of scaling is that there are no free lunches, which is something I learned during my experience.

00:03:00.000

Scaling is deceptive: it feels easy until you hit a wall. Once you run into real scaling challenges, it turns out to be one of the significant hurdles in software engineering.

00:03:17.280

Now, before delving into the main body of my presentation, I’d like to share some general thoughts about the audience for this talk. The primary purpose is to introduce problems associated with scaling applications.

00:03:39.120

I want to provide some overarching ideas, particularly revolving around issues we’ve faced while scaling to a significant degree. Unfortunately, I won’t have time to go into specific details, but if you’d like to discuss more later, I’d be happy to chat.

00:03:56.700

Importantly, when it comes to scaling, there are typically no silver bullets that resolve every issue in your application. To paraphrase Tolstoy, every poorly performing system is slow in its own way: each has its own bottleneck, and without examining your application, you won't know why it's slow.

00:04:12.480

For instance, you might be facing an N+1 problem, or perhaps you're simply trying to return too much data. Another common difficulty is that the number of performance improvements you could implement is nearly infinite; there are innumerable strategies to speed up your application.

00:04:31.800

However, we must also recognize the limitations we face: the time available to implement all those improvements, and perhaps more importantly, the constraints imposed by acceptable trade-offs.

00:04:49.560

As I've often stated, if a feature is too slow and you can't change it, dropping it will make your application faster. That's typically not a viable option. Or maybe it is: if a feature is barely used and is hurting your database, it might be sensible to drop it.

00:05:07.860

The reason I’m sharing all of this with you today is that I believe the size of the problems we face when managing data becomes incredibly relevant, particularly in the context of a Rails application.

00:05:22.680

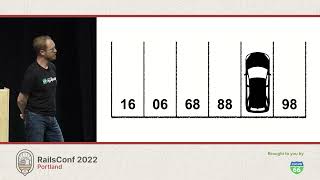

At Zendesk, for example, our basic unit of data is the customer ticket. Think of someone complaining, for instance, that their Uber ride never arrived. To date, we've logged a staggering 16.3 billion tickets, which is more than double the current global population.

00:05:39.360

To put it simply, this data keeps growing: a third of it was created in the last year alone. That growth carries over to the relational databases behind our models, which now hold around 400 terabytes.

00:05:53.160

These tables also have many associations, which adds to our database's complexity. For example, the largest ticket table alone is 41 gigabytes, and, interestingly, our events table in one shard amounts to two terabytes.

00:06:07.559

All these numbers illustrate the challenges we face. They are not trivial, but they do pose intriguing problems to solve.

00:06:23.499

Finally, I want to touch on what I consider the right mindset for a performance engineer. Don't worry, this isn’t going to be a lecture about LEDs or hardware specifics. My belief is that performance engineering should be enjoyable.

00:06:38.160

If you don’t feel a sense of joy when making an endpoint 100 milliseconds faster, perhaps you should explore different avenues in the field. This work should indeed feel rewarding.

00:06:57.720

In summary, I'd say a performance engineer genuinely thrives on seeing the fruits of their labor, such as in this graph, which shows a real optimization I achieved some months ago. It evokes a very satisfying feeling, a sense of achievement.

00:07:19.680

The first element I want to discuss is perhaps the most fundamental: monitoring. Without awareness of the problems at hand, you can’t fix them.

00:07:37.260

Monitoring can be expensive, and you might run the risk of over-monitoring, leading to a flood of data that you cannot effectively analyze. However, when working in performance contexts, it’s often sufficient to sample data instead of collecting every single piece of information.

00:07:56.520

For many types of data, I’ve found that a one percent sample is adequate to reveal where the inefficient queries lie and identify the underlying issues.
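As a rough illustration of sampling, here is a minimal Ruby sketch that times only about one percent of calls and then ranks the recorded labels by total time. The class name `SampledQueryLog`, its methods, and the labels are all hypothetical; a real application would more likely lean on its monitoring vendor's sampling settings.

```ruby
# Minimal sketch of sampled timing (all names are hypothetical).
class SampledQueryLog
  attr_reader :entries

  def initialize(sample_rate:, rng: Random.new)
    @sample_rate = sample_rate
    @rng = rng
    @entries = []
  end

  # Runs the block and records its duration for roughly
  # sample_rate of all calls. Always returns the block's result.
  def measure(label)
    sampled = @rng.rand < @sample_rate
    started = Process.clock_gettime(Process::CLOCK_MONOTONIC) if sampled
    result = yield
    if sampled
      elapsed_ms = (Process.clock_gettime(Process::CLOCK_MONOTONIC) - started) * 1000.0
      @entries << { label: label, ms: elapsed_ms }
    end
    result
  end

  # Labels ordered by total sampled time, slowest first.
  def slowest(limit = 5)
    @entries.group_by { |e| e[:label] }
            .map { |label, es| [label, es.sum { |e| e[:ms] }] }
            .sort_by { |_, total| -total }
            .first(limit)
  end
end

log = SampledQueryLog.new(sample_rate: 0.01, rng: Random.new(42))
10_000.times { |i| log.measure(i.even? ? "tickets.list" : "users.show") { i * 2 } }
puts "recorded #{log.entries.size} of 10000 calls"
```

Because unsampled calls skip the clock reads and the bookkeeping, the overhead stays close to zero for the other 99 percent of traffic.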

00:08:16.020

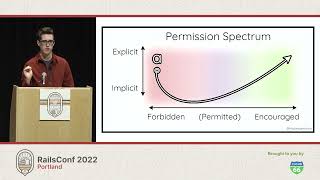

Next, I want to talk about error budgets. Most of you may associate error budgets with uptime, tracking how many times your application might fail. However, you can also establish error budgets based on latency.

00:08:33.780

It's essential to recognize that while returning a 200 response is nice, taking 30 seconds to do so isn't acceptable. In the accompanying slide, the left column outlines uptime error budgets, while the right column defines acceptable and unacceptable latencies.

00:08:51.540

Defining what constitutes acceptable and good latency is completely customizable, and it serves two important roles: first, to provide a north star for performance goals, and second, to help detect any changes that negatively impact performance without affecting uptime.
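A latency error budget can be sketched in a few lines of Ruby. The thresholds (300 ms for "good", 1000 ms for "acceptable") and the 99 percent target are made-up values for illustration, not the numbers on the slide, and `LatencyBudget` is a hypothetical name.

```ruby
# Hypothetical thresholds: a request is :good under good_ms,
# :acceptable under acceptable_ms, and :unacceptable beyond that.
LatencyBudget = Struct.new(:good_ms, :acceptable_ms, :target_ratio) do
  def classify(ms)
    return :good if ms < good_ms
    return :acceptable if ms < acceptable_ms

    :unacceptable
  end

  # Summarizes a window of latencies (in milliseconds) and reports how
  # many more unacceptable requests the budget still allows.
  def report(latencies_ms)
    counts = latencies_ms.map { |ms| classify(ms) }.tally
    total = latencies_ms.size
    bad = counts.fetch(:unacceptable, 0)
    allowed_bad = (total * (1 - target_ratio)).floor
    {
      counts: counts,
      within_ratio: total.zero? ? 1.0 : (total - bad).to_f / total,
      budget_remaining: allowed_bad - bad
    }
  end
end

# 99% of requests must finish under the acceptable threshold.
budget = LatencyBudget.new(300, 1000, Rational(99, 100))
window = [120] * 950 + [450] * 45 + [30_000] * 5
p budget.report(window)
```

A negative `budget_remaining` would flag a regression even if every one of those requests returned a 200.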

00:09:10.920

This brings us to the core of my discussion: database query performance. Although it dominates much of my daily work, I want to examine performance from a broader standpoint.

00:09:31.200

N+1 queries are likely the first performance issue that comes to mind: one query loads N records, and then N more queries load an association for each of them. It's a foundational problem, yet it is still easy to overlook, and it can slow down our applications dramatically unless addressed.
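To make the N+1 pattern concrete without a database, here is a small Ruby simulation. `FakeDb` is a hypothetical stand-in that only counts round trips; in Active Record terms, the batched version is roughly what `includes` or `preload` does for an association.

```ruby
# FakeDb stands in for a real database and counts round trips.
class FakeDb
  attr_reader :query_count

  def initialize(tickets, users)
    @tickets = tickets
    @users = users
    @query_count = 0
  end

  def recent_tickets
    @query_count += 1
    @tickets
  end

  def user_by_id(id)
    @query_count += 1
    @users.find { |u| u[:id] == id }
  end

  def users_by_ids(ids)
    @query_count += 1
    @users.select { |u| ids.include?(u[:id]) }
  end
end

# N+1: one query for the tickets, then one more per ticket.
def requester_names_n_plus_one(db)
  db.recent_tickets.map { |t| db.user_by_id(t[:user_id])[:name] }
end

# Batched: one query for the tickets, one for all their users.
def requester_names_preloaded(db)
  tickets = db.recent_tickets
  users = db.users_by_ids(tickets.map { |t| t[:user_id] }.uniq)
  by_id = users.to_h { |u| [u[:id], u] }
  tickets.map { |t| by_id.fetch(t[:user_id])[:name] }
end

users = [{ id: 1, name: "Ana" }, { id: 2, name: "Ben" }]
tickets = Array.new(100) { |i| { id: i, user_id: (i % 2) + 1 } }

slow = FakeDb.new(tickets, users)
requester_names_n_plus_one(slow)
fast = FakeDb.new(tickets, users)
requester_names_preloaded(fast)
puts "N+1: #{slow.query_count} queries, batched: #{fast.query_count} queries"
```

The query count of the first version grows with the number of tickets, while the second stays at two no matter how many rows come back.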

00:09:51.780

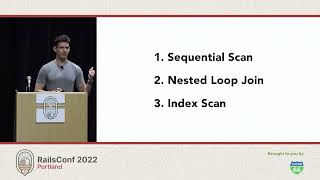

The next point, which I believe is even more crucial than N+1 issues, is the use of database indexes. I rarely see a good reason not to create carefully crafted indexes for specific queries.

00:10:13.380

While writes may become marginally slower, it’s usually a worthwhile trade-off, as applications are generally read-heavy, and having well-tuned indexes benefits overall performance.

00:10:32.880

Now, one prevalent misconception is that the database's query planner is some kind of omniscient mechanism that automatically selects the best index. Unfortunately, this is often not the case. You need to validate your indexes with tools such as EXPLAIN, which Active Record exposes through `explain` on a relation.
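The difference an index makes can be shown with a toy model in plain Ruby. `ToyTable` is a hypothetical class, not a database: it only counts how many rows a lookup has to examine, which is the kind of number a real EXPLAIN plan lets you check.

```ruby
# Toy model of a table with optional hash indexes (hypothetical names).
class ToyTable
  attr_reader :rows_examined

  def initialize(rows)
    @rows = rows
    @indexes = {}
    @rows_examined = 0
  end

  # Builds a hash index: column value => matching rows.
  def add_index(column)
    @indexes[column] = @rows.group_by { |row| row[column] }
  end

  # Equality lookup. Uses the index when one exists for the column,
  # otherwise falls back to scanning every row.
  def where(column, value)
    if @indexes.key?(column)
      matches = @indexes[column].fetch(value, [])
      @rows_examined += matches.size
      matches
    else
      @rows_examined += @rows.size
      @rows.select { |row| row[column] == value }
    end
  end

  # Rough analogue of an EXPLAIN plan for the same lookup.
  def explain(column)
    @indexes.key?(column) ? "index lookup on #{column}" : "full scan"
  end
end

rows = Array.new(100_000) { |i| { id: i, account_id: i % 1_000 } }

unindexed = ToyTable.new(rows)
unindexed.where(:account_id, 7)
puts "#{unindexed.explain(:account_id)}: #{unindexed.rows_examined} rows examined"

indexed = ToyTable.new(rows)
indexed.add_index(:account_id)
indexed.where(:account_id, 7)
puts "#{indexed.explain(:account_id)}: #{indexed.rows_examined} rows examined"
```

Both lookups return the same rows; only the amount of work differs. The model ignores the cost of keeping the index up to date on writes, which is the trade-off mentioned earlier.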

00:10:51.960

Another frequent issue in Rails, particularly with Active Record, is what I call greedy selects. This occurs when you needlessly select every column from a table using SELECT * when only a few attributes are necessary.

00:11:09.600

This approach can lead to significant problems on various levels, affecting both performance in the application layer and the database layer itself.

00:11:28.800

When you have a large table with many columns, especially those that store large data types, and you fetch all available data at once, this can result in your database having difficulties allocating enough memory, thus leading to increased latency.
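A rough way to see the cost of a greedy select is to compare payload sizes in plain Ruby. The rows below are invented, with `:description` standing in for a large text column; in Active Record, the narrow version corresponds to restricting columns with `select` or `pluck`.

```ruby
# Hypothetical ticket rows; :description stands in for a large text column.
TICKETS = Array.new(1_000) do |i|
  { id: i, subject: "Ticket #{i}", status: "open", description: "x" * 10_000 }
end

# Equivalent of SELECT *: every column of every row is copied out.
def select_all(rows)
  rows.map(&:dup)
end

# Equivalent of SELECT id, subject: only the requested columns are copied.
def select_columns(rows, *columns)
  rows.map { |row| row.slice(*columns) }
end

# Approximate payload size: bytes of all values rendered as strings.
def payload_bytes(rows)
  rows.sum { |row| row.values.sum { |v| v.to_s.bytesize } }
end

all_bytes = payload_bytes(select_all(TICKETS))
narrow_bytes = payload_bytes(select_columns(TICKETS, :id, :subject))
puts "SELECT *:           #{all_bytes} bytes"
puts "SELECT id, subject: #{narrow_bytes} bytes"
```

In this sketch almost all of the payload comes from a column the caller never reads, which is the situation where trimming the select list pays off most.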

00:11:46.920

Earlier I showed you an example of an optimization that we achieved using caching, which resulted in noteworthy improvements in response times.

00:12:05.080

With regard to caching, it's critical to remember that although caches can enhance performance, they also add another layer to our application architecture.

00:12:27.280

Therefore, if used incorrectly, the complexity of caching can introduce unforeseen issues into your application. I call this the 'Doraemon Paradox', after the widely popular character from the 22nd century.

00:12:44.640

Doraemon is characterized by his infinite pocket, where he can seemingly pull out any gadget to help solve problems. Some of us fall into that same mindset of thinking that we can pull out any solution without considering its implications.

00:13:02.420

In that light, while caching can be a powerful solution, it should not be approached all too casually. You need to carefully assess and choose the right moment to implement such solutions.
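The extra complexity is easy to see in a minimal time-to-live cache. `TtlCache` is a hypothetical class written for this sketch (in Rails, `Rails.cache.fetch` with `expires_in` plays a similar role), and the injected clock exists only so the example can move time forward by hand.

```ruby
# Minimal TTL cache sketch (hypothetical class, not a Rails API).
class TtlCache
  def initialize(ttl_seconds:, clock: -> { Process.clock_gettime(Process::CLOCK_MONOTONIC) })
    @ttl = ttl_seconds
    @clock = clock
    @store = {}
  end

  # Returns the cached value for key while it is fresh;
  # otherwise runs the block, stores the result, and returns it.
  def fetch(key)
    entry = @store[key]
    return entry[:value] if entry && @clock.call < entry[:expires_at]

    value = yield
    @store[key] = { value: value, expires_at: @clock.call + @ttl }
    value
  end

  # Explicit invalidation, to be called whenever the source data changes.
  def delete(key)
    @store.delete(key)
  end
end

now = 0.0
cache = TtlCache.new(ttl_seconds: 60, clock: -> { now })
open_tickets = 10

puts cache.fetch("open_tickets") { open_tickets } # computed from the source
open_tickets = 11                                 # the source data changes
puts cache.fetch("open_tickets") { open_tickets } # served from cache, now stale
cache.delete("open_tickets")                      # invalidate explicitly
puts cache.fetch("open_tickets") { open_tickets } # recomputed from the source
```

The second read is fast but wrong until the entry expires or someone remembers to invalidate it, and that is the price the cache adds on top of the speedup.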

00:13:20.400

Now, I'd like to pivot to the final part of my talk: special interventions. In many contexts, we don't always scale things effectively.

00:13:38.880

Often, we can adopt a more manual approach, seeking to optimize and achieve considerable performance gains for certain use cases without losing sight of quality.

00:13:55.800

One method we’ve implemented at Zendesk is splitting user accounts. When power users outgrow our original setups, we enable them to operate separate systems designed to handle their specific workloads more effectively.

00:14:14.160

Finally, I want to leave you with some key takeaways regarding performance tuning in your applications. In this realm, trade-offs are inevitable.

00:14:33.840

You must recognize that there’s no perfect system; rather, the optimal design will be the one that aligns best with the core needs of your business.

00:14:53.160

In our community, an understanding of performance and its trade-offs is critical, and I urge you to apply this knowledge to build solutions that balance immediate user needs with system stability.

00:15:12.840

As we move forward, please consider the factors that impact the performance of your applications, always keeping trade-offs in mind.

00:15:30.000

Thank you for your time today! Let's continue to strive for performance that optimizes both system capabilities and user satisfaction.