00:00:16.880

Welcome to the talk on geolocation, where we will explain how we can determine the location of someone visiting our site and do it quickly. Today, we have about 200 slides to cover, so I hope you're ready to have some fun as we explore this topic.

00:00:25.019

The idea for this talk originates from recent work I did at Home Chef, where I am part of the engineering team. As you may know, Home Chef is a meal kit delivery company. Each week, we release a menu with various meals from which customers can order.

00:00:39.540

These meals come with pre-portioned ingredients that are ready to cook. We ship orders from three facilities located across the United States. Until recently, all customers had access to the same set of meals, regardless of their location.

00:00:58.620

However, we wanted to make a change. The product team aimed to offer additional meal options specifically to customers serviced by our West Coast facility. This process was similar to conducting a digital A/B test, as we wanted to test the impact of different meal offerings.

00:01:12.000

This change introduced several challenges, primarily regarding how to determine which meals were eligible for a customer. For example, if I order from our West Coast facility, I would have access to all the meals, while customers ordering from the Midwest or East Coast facilities wouldn't have access to the test meals offered only in the West.

00:01:40.079

For logged-in customers, it was relatively simple to figure this out since we had their delivery addresses and could identify which facility would ship their order. However, the experience became more complicated for logged-out visitors.

00:02:07.560

Even though we had no address information for guests visiting the site, our marketing team wanted to conditionally display the different meal offerings. We aimed to ensure that visitors from within the service range of our West Coast facility could see those test meals, whereas those outside of that range would not.

00:02:21.420

Given that we lacked shipping address information for logged-out customers, we needed to find a way to locate individuals visiting our site. That's the focus of my talk today: how to geo-locate site visitors efficiently.

00:02:45.060

To reiterate our challenge: when someone visits our website, we want to find their geographic location swiftly and conditionally display content based on that location. Since we are dealing with display logic, it’s crucial to determine that location as quickly as possible.

00:03:19.500

We began by exploring a few options. Our first thought was to leverage a third-party service with an API, but this was quickly ruled out as it would add too much latency to our web requests. The time taken to make a request and await a response from a third party is simply a non-starter.

00:03:41.500

Next, we considered Cloudflare, which manages our DNS. A request to our site first passes through them, and they provide geolocation features as part of their analytics platform. We explored whether that information could be forwarded from Cloudflare to our application.

00:03:57.720

Unfortunately, they only provide country-level data, which isn't granular enough for our needs. Then we discovered a service called MaxMind that provides geolocation data, allowing us to find a location based on an IP address.

00:04:16.860

Rails gives us easy access to a visitor's connection address, making MaxMind a suitable fit. The geolocation data consists of two tables: one for IP data and another for location data, linked by a foreign key. Each network block record corresponds to a network location, and a network location can have multiple network blocks.

00:04:34.699

Looking closely at the network address field, it resembles an IP address. However, the slash and the number that follows represent Classless Inter-Domain Routing (CIDR) notation—a way to specify how many bits of the address are used for the network portion and the range of IP addresses assignable from that network.

00:05:15.480

To understand this better, let’s break down what an IP address actually is. An IPv4 address is composed of 32 bits divided into four 8-bit segments, often referred to as octets, each written as a number between 0 and 255.
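
To make the 32-bit structure concrete, here is a minimal Ruby sketch that packs four octets into a single integer and cross-checks the result against the standard library's `IPAddr`. The helper name `ipv4_to_i` and the sample address are illustrative assumptions, not something taken from the slides.

```ruby
require "ipaddr"

# Pack a dotted-quad IPv4 string into one 32-bit integer.
# Each octet contributes 8 bits, most significant octet first.
def ipv4_to_i(address)
  address.split(".")
         .map { |octet| Integer(octet, 10) }
         .reduce(0) { |acc, octet| (acc << 8) | octet }
end

sample = "192.168.1.10" # illustrative address
as_integer = ipv4_to_i(sample)

# Print the 32 bits behind the address, then confirm the stdlib agrees.
puts as_integer.to_s(2).rjust(32, "0")
puts as_integer == IPAddr.new(sample).to_i
```

Storing addresses as integers like this is what makes "is this IP between a start and an end address" a plain numeric comparison.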

00:05:56.279

The number following the slash in CIDR notation indicates how many bits make up the network portion. For example, when the first 24 bits identify the network, clients on that network will have IP addresses whose last segment ranges between 0 and 255.
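
Taking a /24 block as an illustration, Ruby's standard `IPAddr` class can answer both questions CIDR notation raises: how many addresses the block holds, and whether a given IP belongs to it. The helper names `address_count` and `in_block?` are hypothetical, and the networks come from the reserved documentation ranges rather than real data.

```ruby
require "ipaddr"

# Number of addresses in an IPv4 CIDR block, derived from its prefix length:
# whatever bits are not network bits are left over for clients.
def address_count(cidr)
  2**(32 - IPAddr.new(cidr).prefix)
end

# True when the given IP falls inside the CIDR block.
def in_block?(cidr, ip)
  IPAddr.new(cidr).include?(IPAddr.new(ip))
end

puts address_count("203.0.113.0/24")
puts in_block?("203.0.113.0/24", "203.0.113.77")
puts in_block?("203.0.113.0/24", "203.0.114.1")
```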

00:06:14.160

For instance, in a city like Chicago, numerous Internet Service Providers (ISPs) can have different network nodes handling their respective clients. Each node has its own network address and a range of IPs it can allocate, both of which are captured by CIDR notation.

00:07:05.280

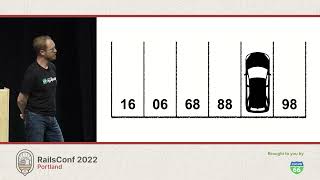

Considering network nodes, if we know the first x bits represent the network portion, the remaining bits are addressable. For instance, if the network portion for one node takes up 16 bits, that leaves the last 16 bits available for addressing clients.

00:07:29.640

When clients connect to a specific network node, they receive an IP address falling within the range of that node's CIDR block. Once we have established the bounds of a CIDR block, we can identify the starting and ending addresses and find out which block contains an incoming request.
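
The bounds of a CIDR block fall out of simple bit masking: zero the host bits to get the starting address, set them all to one to get the ending address. The sketch below assumes IPv4 only; `cidr_bounds` and `to_dotted` are names made up for this example.

```ruby
require "ipaddr"

# Return [first, last] addresses of an IPv4 CIDR block as integers.
def cidr_bounds(cidr)
  base, prefix = cidr.split("/")
  prefix = Integer(prefix, 10)
  mask  = (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF # ones over the network bits
  first = IPAddr.new(base).to_i & mask               # host bits all zero
  last  = first | (~mask & 0xFFFFFFFF)               # host bits all one
  [first, last]
end

# Turn a 32-bit integer back into dotted-quad form for display.
def to_dotted(int)
  IPAddr.new(int, Socket::AF_INET).to_s
end

first, last = cidr_bounds("10.20.0.0/16")
puts "#{to_dotted(first)} .. #{to_dotted(last)}"
```

With a 16-bit network portion, the remaining 16 bits give a block that runs from `10.20.0.0` through `10.20.255.255`.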

00:08:09.900

Our goal is to code this lookup efficiently. For each client visiting our site, we start by filtering our network blocks table to only those entries where the IP address falls between the starting and ending addresses.

00:08:54.300

Once we find that specific record, we can utilize the GeoName ID field as a foreign key to link to a record in the network locations table. This allows us to pull all necessary location data.
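
The two-step lookup can be sketched in plain Ruby without a database. Everything here is a stand-in: the struct and field names (`start_ip`, `end_ip`, `geoname_id`), the `locate` helper, and the sample rows are assumptions for illustration, and the city assignments are invented rather than real MaxMind data.

```ruby
require "ipaddr"

# Hypothetical stand-ins for the two tables; field names are assumptions.
NetworkBlock    = Struct.new(:start_ip, :end_ip, :geoname_id)
NetworkLocation = Struct.new(:geoname_id, :city, :region)

def build_block(cidr, geoname_id)
  range = IPAddr.new(cidr).to_range
  NetworkBlock.new(range.begin.to_i, range.end.to_i, geoname_id)
end

# Invented sample rows using reserved documentation networks.
BLOCKS = [
  build_block("198.51.100.0/24", 1),
  build_block("203.0.113.0/24", 2)
].freeze

LOCATIONS = {
  1 => NetworkLocation.new(1, "Chicago", "Illinois"),
  2 => NetworkLocation.new(2, "Portland", "Oregon")
}.freeze

# Step 1: find the block whose start/end range contains the IP.
# Step 2: follow geoname_id to the matching location record.
def locate(ip)
  ip_int = IPAddr.new(ip).to_i
  block = BLOCKS.find { |b| ip_int.between?(b.start_ip, b.end_ip) }
  block && LOCATIONS[block.geoname_id]
end

puts locate("203.0.113.9")&.city.inspect
puts locate("192.0.2.1").inspect
```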

00:09:55.740

In Active Record syntax, this isn’t complex. Although we apply a limit to our query results, we expect a unique match, which simplifies the interface within our application.

00:10:05.760

With our geolocation system set up, we noticed everything was functioning correctly, but it was slightly slower than anticipated. Monitoring performance through New Relic, we could see that our query appeared in the key transactions overview.

00:10:24.600

This list shows the longest-running transactions within the application, which is not a place you want to be. Diving deeper into the performance breakdown reveals that it takes about 200 milliseconds to find our network location.

00:10:53.699

Interestingly, this 200 milliseconds represents about 95 percent of our geolocation request time—definitely not optimal for end-users. Now we had the opportunity to see where we could make improvements.

00:11:36.300

Returning to our original query, while we could make assumptions, we need to examine why the time consumption is so high. Database technologies offer utilities to break down how queries will be executed.

00:12:29.759

By prefixing our query with an EXPLAIN statement, Postgres evaluates the request, considering the schema and various statistics on the database. The result is a set of nodes representing operations that will fulfill the query.

00:12:47.880

When Postgres is optimizing, speed is the main focus. It tries to provide a response as quickly as possible, making informed decisions based on the data available. Using EXPLAIN ANALYZE will execute the query and provide runtime data alongside the execution plan.
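
As a rough sketch of what that prefixing looks like, the Ruby below builds both forms of the statement as strings. The table and column names in `LOOKUP_SQL` and the `:ip` placeholder are assumptions, not the actual schema from the talk, and `explain` is a hypothetical helper.

```ruby
# Table and column names below are assumptions for illustration.
LOOKUP_SQL = <<~SQL.strip
  SELECT network_locations.*
  FROM network_blocks
  JOIN network_locations
    ON network_locations.geoname_id = network_blocks.geoname_id
  WHERE network_blocks.start_ip <= :ip
    AND network_blocks.end_ip >= :ip
  LIMIT 1
SQL

# EXPLAIN shows the plan only; EXPLAIN ANALYZE also runs the query
# and reports actual timings and row counts.
def explain(sql, analyze: false)
  prefix = analyze ? "EXPLAIN ANALYZE" : "EXPLAIN"
  "#{prefix} #{sql}"
end

puts explain(LOOKUP_SQL)
puts explain(LOOKUP_SQL, analyze: true)
```

In a Rails app the same information is usually reachable without hand-built SQL, since Active Record relations respond to `explain`.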

00:13:34.860

The output includes how long each node takes and how many rows it evaluates, giving us a roadmap to where the query creates bottlenecks. Understanding this takes practice, especially as we learn the terminology used in evaluating queries.

00:14:10.680

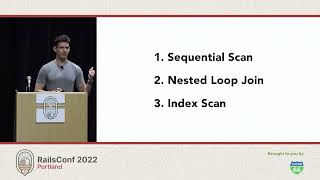

In examining query performance, there are three fundamental operations involved in optimizing our queries: sequential scan, nested loop join, and index scan. Sequential scans are linear operations, making their execution time tied directly to the data size.

00:15:01.200

In a worst-case scenario, if the queried item is at the end of our dataset, the time it takes to get there correlates to the dataset size. Understanding Big O notation (time complexity) helps us describe the performance of our database operations without being bogged down by raw numbers.

00:16:05.880

In practical terms, if we apply a WHERE clause that bounds an IP address in our geolocation query, it requires a full sequential scan, finding matches through a linear operation.

00:16:17.840

If the database needs to evaluate every entry to find the match, the query becomes needlessly slow. Postgres can optimize by anticipating what later steps require, for example stopping the scan once it finds the first matching record when only one row is needed.
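
The cost of a sequential scan, and the benefit of stopping early, can be shown by counting how many rows get examined. This is a toy model in plain Ruby, not how Postgres is implemented; `sequential_scan` and its `stop_at_first` flag are hypothetical names.

```ruby
# Scan rows in order, counting how many rows were examined.
# With stop_at_first: true the scan ends at the first match,
# which is the shortcut a one-row limit makes possible.
def sequential_scan(rows, stop_at_first:)
  examined = 0
  matches = []
  rows.each do |row|
    examined += 1
    next unless yield(row)

    matches << row
    break if stop_at_first
  end
  [matches, examined]
end

rows = (1..1_000).to_a
_, full  = sequential_scan(rows, stop_at_first: false) { |r| r == 10 }
_, early = sequential_scan(rows, stop_at_first: true)  { |r| r == 10 }
puts "full scan examined #{full} rows, early stop examined #{early}"
```

Stopping early only helps when the match sits near the front. If the matching row is last, both variants examine every row, which is the linear worst case.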

00:17:01.200

A nested loop is an algorithm that Postgres can employ when joining two tables. In its simplest form it works through sequential scans: for each row in the primary table, every row in the secondary table is evaluated for a match.

00:17:35.780

While this quadratic behavior can be slow, it shows why databases like Postgres index primary keys automatically, which makes joins more efficient. This is especially evident with joins that use foreign keys.
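
To see the quadratic cost, compare a naive nested loop join with one that reaches the inner table through a lookup structure. This is a simplified model with hypothetical names; a Ruby `Hash` stands in for the primary-key index, whereas Postgres primary-key indexes are B-trees, so a real index lookup is logarithmic rather than constant time.

```ruby
# Nested loop join: for every outer row, scan every inner row.
def nested_loop_join(outer, inner)
  comparisons = 0
  pairs = []
  outer.each do |o|
    inner.each do |i|
      comparisons += 1
      pairs << [o, i] if o[:geoname_id] == i[:geoname_id]
    end
  end
  [pairs, comparisons]
end

# Same join, but each outer row does a single keyed lookup
# instead of scanning the whole inner table.
def indexed_join(outer, inner_by_id)
  lookups = 0
  pairs = outer.filter_map do |o|
    lookups += 1
    i = inner_by_id[o[:geoname_id]]
    [o, i] if i
  end
  [pairs, lookups]
end

locations = (1..50).map { |id| { geoname_id: id } }
blocks    = (1..100).map { |n| { block: n, geoname_id: (n % 50) + 1 } }

_, comparisons = nested_loop_join(blocks, locations)
_, lookups     = indexed_join(blocks, locations.to_h { |l| [l[:geoname_id], l] })
puts "nested loop: #{comparisons} comparisons, indexed: #{lookups} lookups"
```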

00:18:41.880

By creating an index on our primary key, searching through our table becomes significantly quicker than scanning each entry linearly. Postgres typically seeks to return results as efficiently as possible, assessing how to locate entries rapidly. This motivates query performance optimizations.
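
A sorted array with binary search is a reasonable mental model for why an index beats a linear scan, even though a real B-tree index is more elaborate. The helpers below are hypothetical and simply count how many entries each approach has to look at.

```ruby
# Linear search: examine ids one by one until the target is found.
def linear_steps(sorted_ids, target)
  steps = 0
  sorted_ids.each do |id|
    steps += 1
    break if id == target
  end
  steps
end

# Binary search over sorted ids, counting comparisons. A B-tree index
# narrows the search in a comparable logarithmic fashion.
def binary_steps(sorted_ids, target)
  steps = 0
  sorted_ids.bsearch do |id|
    steps += 1
    id >= target
  end
  steps
end

ids = (1..1_000_000).to_a
puts "linear: #{linear_steps(ids, 1_000_000)} steps"
puts "binary: #{binary_steps(ids, 1_000_000)} steps"
```

For a million rows the linear search touches every entry in the worst case, while the binary search needs only around twenty comparisons.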

00:19:52.500

The goal here is to minimize runtime. Looking at how the query plan combines sequential scans with index scans, and where it could reach records through an index instead, points us to improvements. Understanding time complexity helps us compare the indexing options.

00:21:09.240

When exploring for a better query plan, it's helpful to check which indexes are in place. To optimize, suppose we add an ORDER BY clause to our query. Postgres recognizes that it needs to sort at some stage, and it can benefit from the order already stored in the index.

00:22:15.900

Moving from performing two tasks separately (scanning and sorting) to a single step removes that overhead and improves efficiency.
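
One way to picture scanning and sorting collapsing into a single step: if the blocks are already kept in order by starting address, the block with the greatest start at or below the visitor's IP can be found directly, with no separate sort. The sketch below is an assumption about the shape of such a lookup, not the exact query from the talk. It assumes non-overlapping blocks, and `RangeBlock`, `sorted_blocks`, and `find_block` are hypothetical names.

```ruby
require "ipaddr"

RangeBlock = Struct.new(:start_ip, :end_ip, :geoname_id)

# Blocks kept sorted by start_ip, the way an index on that column would be.
def sorted_blocks(cidrs)
  cidrs.each_with_index.map do |cidr, i|
    range = IPAddr.new(cidr).to_range
    RangeBlock.new(range.begin.to_i, range.end.to_i, i + 1)
  end.sort_by(&:start_ip)
end

# Find the block with the greatest start_ip <= ip (roughly what an
# ORDER BY start_ip DESC LIMIT 1 over an ordered index could return),
# then confirm the ip is not past that block's end.
def find_block(blocks, ip)
  ip_int = IPAddr.new(ip).to_i
  first_after = blocks.bsearch_index { |b| b.start_ip > ip_int } || blocks.size
  return nil if first_after.zero?

  candidate = blocks[first_after - 1]
  candidate if ip_int <= candidate.end_ip
end

blocks = sorted_blocks(["203.0.113.0/24", "198.51.100.0/24"])
puts find_block(blocks, "198.51.100.7").inspect
puts find_block(blocks, "200.0.0.1").inspect
```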

00:23:45.080

By switching from a sequential scan to an indexed one, we can substantially reduce processing times. Through testing, we observed the runtime for our geolocation request drop from 200 milliseconds to 0.05 milliseconds.

00:24:57.120

This reduction is a staggering improvement: more than 100 times faster. The results greatly improved overall system performance and will likely reflect positively on my performance review.

00:25:10.200

Thank you all for attending this talk. The slides are available at KevinLesh.com/RailsConf2022. If you have any questions or feedback for me, feel free to reach out on Twitter @KevinLesh. You can also follow my other endeavors at KevinLesh.com. Thank you!