00:00:12.860

Thanks for coming! My name is Jamie, and for the next 30 minutes I'm going to try to convince you that if you know Heroku, you can use Kubernetes. I know that sounds a lot like "if you can dodge a wrench, you can dodge a ball." I'm not going to throw any wrenches at anyone (I didn't bring any, so you'd have to ask nicely and supply your own), but I do hope to convince you that the title isn't too clickbaity.

00:00:46.920

So, Kubernetes is often abbreviated as K8s: the name starts with a 'K', ends with an 's', and has eight letters in between. You may have seen this naming convention with 'internationalization' abbreviated as i18n, or 'accessibility' abbreviated as a11y. It's kind of amusing, because the abbreviation quietly tells you that 'internationalization' is a 20-letter word.
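
The abbreviation rule is mechanical enough to write down. Here's a minimal Python sketch of it; the function name `numeronym` is my own label, not something from the talk.

```python
def numeronym(word: str) -> str:
    """Abbreviate a word as: first letter + count of letters in between + last letter."""
    if len(word) <= 3:
        # Nothing to gain by abbreviating very short words.
        return word
    return f"{word[0]}{len(word) - 2}{word[-1]}"


for word in ["kubernetes", "internationalization", "accessibility"]:
    print(word, "->", numeronym(word))
```

Running it prints `k8s`, `i18n`, and `a11y` for the three words.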

00:01:15.780

Now, what is Kubernetes? You may have heard it called a container orchestrator or a container scheduler, which sounds a lot like how functional programmers describe monads: "a monad is a monoid in the category of endofunctors." That doesn't really clarify much. Describing Kubernetes this way isn't incorrect, but it doesn't explain a whole lot either, and you can tell it's a poor explanation by how often people respond with, 'Okay, well, what's that?'

00:01:50.340

The purpose of explaining something is to help people learn. If your explanations aren’t doing that and you're using fancy terminology, then you’re not really teaching. So let's drop the fancy words and try to meet people where they are. Kubernetes is a platform for running containers. Orchestration and scheduling are definitely parts of that, but they refer to the 'how' rather than the 'what'.

00:02:24.000

You may have heard of Docker containers. Docker introduced many people to the idea of packaging your application and all its related resources into what we call a container. Essentially, a container image is like a tarball or a zip file: it can hold anything from a single binary to a complete Linux operating system minus the kernel. If you're curious about how containers work, Liz Rice has a great talk from Container Camp 2016 where she live-codes a primitive container in fewer than 100 lines of Go.

00:03:10.620

As a result, it doesn't matter which Linux distribution is running on the host or which packages it does or doesn't have installed. Your container carries all the binaries and supporting files it needs, so it runs effectively isolated from the host operating system, even though it still shares the host's kernel.

00:03:44.400

Containers are fundamental to scalable architectures, but creating a container is only part of the solution; we still need to run it. An application is only useful when it's running, and that's where Kubernetes comes in: it takes your container and actually runs it. How it does this is quite interesting, and surprisingly similar to how Heroku runs your dynos. In fact, if Heroku didn't exist today, Kubernetes is the kind of thing people might use to build it.

00:04:30.420

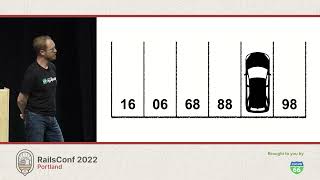

If you're familiar with Heroku, you likely already understand several Kubernetes concepts, despite the dramatically different vocabulary. For example, Heroku's unit of running software is called a dyno; in Kubernetes, the same concept is called a pod. There are slight differences between them, mostly because a pod can run more than one container, which matters in more complex Kubernetes architectures involving things like sidecar containers.
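
To make the "a pod can hold more than one container" point concrete, here is a minimal sketch of a pod manifest with an app container and a sidecar, built as a Python dict and printed as JSON. The pod name, image references, and the log-shipping sidecar are hypothetical placeholders; only the field names follow the Kubernetes Pod API.

```python
import json

# A minimal Pod with two containers: the app itself and a sidecar.
# Both image references are hypothetical placeholders.
pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "myapp-web", "labels": {"app": "myapp"}},
    "spec": {
        "containers": [
            {
                "name": "web",
                "image": "registry.example.com/myapp:v1",
                "command": ["bundle", "exec", "puma"],
            },
            {
                # Containers in the same pod are scheduled onto the same node
                # and share the pod's network namespace, so they can reach
                # each other on localhost.
                "name": "log-shipper",
                "image": "registry.example.com/log-shipper:v1",
            },
        ]
    },
}

# kubectl accepts JSON manifests as well as YAML.
print(json.dumps(pod, indent=2))
```

A Heroku dyno has no direct counterpart to that second container, which is where most of the dyno/pod differences come from.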

00:05:30.960

The most significant difference, however, is that Heroku provides the computing resources along with each dyno (you get CPU and RAM with it), while Kubernetes requires you to set up a pool of nodes beforehand for your pods to be assigned to. That assignment of pods to nodes is what the "scheduling" in "container scheduling" refers to. Nodes are just ordinary servers from your cloud provider, and most managed Kubernetes offerings will provision those servers and add them to your cluster automatically.
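
The idea of assigning pods to a pool of nodes can be sketched as a toy first-fit scheduler: each pod requests some CPU and memory, and it lands on the first node with enough unrequested capacity. This is a simplification; the real kube-scheduler filters out infeasible nodes and then scores the remaining ones, and the node sizes and pod requests below are made-up numbers.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Pod:
    name: str
    cpu_m: int    # requested CPU in millicores
    mem_mi: int   # requested memory in MiB


@dataclass
class Node:
    name: str
    cpu_m: int    # allocatable CPU in millicores
    mem_mi: int   # allocatable memory in MiB
    pods: List[Pod] = field(default_factory=list)

    def fits(self, pod: Pod) -> bool:
        used_cpu = sum(p.cpu_m for p in self.pods)
        used_mem = sum(p.mem_mi for p in self.pods)
        return used_cpu + pod.cpu_m <= self.cpu_m and used_mem + pod.mem_mi <= self.mem_mi


def schedule(pod: Pod, nodes: List[Node]) -> Optional[str]:
    """First fit: bind the pod to the first node with enough unrequested capacity."""
    for node in nodes:
        if node.fits(pod):
            node.pods.append(pod)
            return node.name
    return None  # nothing fits; a real cluster would leave the pod Pending


nodes = [Node("node-a", 2000, 4096), Node("node-b", 2000, 4096)]
for i in range(3):
    print(f"web-{i} ->", schedule(Pod(f"web-{i}", 1000, 1024), nodes))
```

The first two pods fill `node-a`, so the third lands on `node-b`. On Heroku this whole step is invisible because the capacity comes bundled with the dyno.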

00:06:53.040

Going back to the earlier point, a dyno on Heroku is roughly equivalent to a pod in Kubernetes. On Heroku, you define multiple entry points to your application with lines in a Procfile; for example, you might have entries for your web server and your background job runner. Kubernetes calls these deployments. However, Kubernetes doesn't nest deployments under an application the way Heroku groups your Procfile entries under a single app; each deployment simply stands on its own as one entry point into your application.

00:09:09.060

So your web server and your background job runner would be two completely separate but nearly identical deployments in Kubernetes, differing only in the command they run. Meanwhile, when you run `git push heroku`, Heroku executes a buildpack, which installs your gems, compiles your JavaScript and CSS assets for production, and performs any other necessary pre-deployment preparation.
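
The Procfile-to-deployments mapping can be sketched in a few lines of Python: parse each `process_type: command` line, then emit one Deployment per process that shares the image and differs only in name, labels, and command. The app name, image reference, and labeling scheme are hypothetical; the manifest fields follow the `apps/v1` Deployment API, and the result is a minimal manifest rather than a production-ready one.

```python
from typing import Dict, List


def parse_procfile(text: str) -> Dict[str, str]:
    """Parse `process_type: command` lines into {process_type: command}."""
    processes = {}
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        # Split on the first colon only, so commands may contain colons.
        name, _, command = line.partition(":")
        processes[name.strip()] = command.strip()
    return processes


def deployment_for(app: str, process: str, command: str, image: str) -> dict:
    """A minimal Deployment; only the name, labels, and command vary per process."""
    labels = {"app": app, "process": process}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": f"{app}-{process}"},
        "spec": {
            "replicas": 1,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": process,
                        "image": image,
                        # Run through a shell so shell syntax in the command still works.
                        "command": ["/bin/sh", "-c", command],
                    }]
                },
            },
        },
    }


procfile = """
web: bundle exec puma -C config/puma.rb
worker: bundle exec sidekiq
"""
image = "registry.example.com/myapp:v1"  # hypothetical image reference
deployments: List[dict] = [
    deployment_for("myapp", name, cmd, image)
    for name, cmd in parse_procfile(procfile).items()
]
for d in deployments:
    print(d["metadata"]["name"], d["spec"]["template"]["spec"]["containers"][0]["command"])
```

Both deployments point at the same image; scaling or restarting one doesn't touch the other, just like separate process types on Heroku.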

00:11:03.060

The container equivalent of a buildpack is a Dockerfile if you're building your containers with Docker; other build tools may call it a Containerfile instead. Whatever the file's name or the implementation, it serves the same purpose. After Heroku runs your buildpack, it packages the results into a deployable artifact called a slug, and every time you restart or scale your dynos, those dynos run a copy of that slug.

00:12:27.720

With Kubernetes, your Dockerfile produces a container image, which plays the role of the Heroku slug: every time you restart or scale your pods, each new pod pulls that container image (if the node doesn't already have it) and runs a copy of it. Deploying to Heroku with `git push` builds your slug and replaces your dynos with new ones running the new slug. That process is directly analogous to building a container image, pushing it to a container registry, and updating the relevant deployments with the new image tag from that registry.
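
The three deploy steps (build, push, update the deployments) can be written out as the shell commands they correspond to. This sketch only generates the command strings rather than running them; the registry host, app name, tag, and the mapping of deployments to container names are all hypothetical. `kubectl set image deployment/NAME CONTAINER=IMAGE` changes the pod template, which triggers a rolling update.

```python
from typing import Dict, List


def deploy_commands(registry: str, app: str, tag: str,
                    containers: Dict[str, str]) -> List[str]:
    """Return the shell commands for one deploy.

    `containers` maps each deployment name to the container inside it
    whose image should be updated.
    """
    image = f"{registry}/{app}:{tag}"
    commands = [
        f"docker build -t {image} .",  # build the image (the "slug")
        f"docker push {image}",        # push it to the container registry
    ]
    for deployment, container in containers.items():
        # Point the deployment at the new tag; this starts a rolling update.
        commands.append(f"kubectl set image deployment/{deployment} {container}={image}")
    return commands


for cmd in deploy_commands("registry.example.com", "myapp", "abc123",
                           {"myapp-web": "web", "myapp-worker": "worker"}):
    print(cmd)
```

Using something unique per build as the tag (a Git commit SHA is a common choice) makes it obvious which build each deployment is running.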

00:13:27.960

You scale up dynos on Heroku with `heroku ps:scale` in the Heroku CLI, or in the dashboard by dragging the dyno slider. With Kubernetes, you scale the number of pods with `kubectl scale deployment`. Some people pronounce `kubectl` 'kube control', but I prefer 'kube cuddle' because it's adorable. Either way, the two commands represent effectively the same operation.
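
Because the two scaling commands line up so closely, you can translate one into the other mechanically. This sketch assumes each deployment is named `<app>-<process>`, which is a hypothetical convention rather than anything Kubernetes requires, and it only handles absolute counts such as `web=3`.

```python
from typing import List


def heroku_scale_to_kubectl(args: str, app: str) -> List[str]:
    """Translate `heroku ps:scale` arguments such as "web=3 worker=2".

    Assumes deployments are named "<app>-<process>" (hypothetical). A dyno
    size suffix like "web=2:standard-2x" is dropped, because Kubernetes
    expresses size through resource requests on the pod instead.
    """
    commands = []
    for pair in args.split():
        process, _, rest = pair.partition("=")
        count = int(rest.split(":")[0])
        commands.append(f"kubectl scale deployment/{app}-{process} --replicas={count}")
    return commands


print(heroku_scale_to_kubectl("web=3 worker=2", "myapp"))
```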

00:14:46.380

When you need to restart your dynos for any reason, you run `heroku restart`. In Kubernetes, you get the same effect with `kubectl rollout restart deployment`. The main difference is that, by default, Kubernetes doesn't restart everything at once the way Heroku does. Instead, it spins up a few new pods, waits for them to become ready (including passing any readiness checks you've defined, such as successfully handling requests), and only then terminates some of the old ones. It repeats this in batches rather than all at once, and the process is highly customizable.
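
The batch-by-batch behavior can be simulated to show why capacity never drops during a rolling restart. Deployments expose this through `strategy.rollingUpdate.maxSurge` and `maxUnavailable`. The sketch below assumes `maxUnavailable` is 0 and that every new pod becomes ready immediately; the real controller waits on actual readiness and handles many more cases.

```python
from typing import List, Tuple


def rolling_restart(replicas: int, max_surge: int) -> List[Tuple[int, int]]:
    """Simulate a rolling restart with maxUnavailable = 0.

    Returns (old_ready, new_ready) after each step, so we can check that
    ready pods never drop below `replicas` or exceed `replicas + max_surge`.
    """
    if max_surge < 1:
        raise ValueError("max_surge must be at least 1 when maxUnavailable is 0")
    old, new = replicas, 0
    history = [(old, new)]
    while old > 0:
        batch = min(max_surge, old)
        new += batch   # start a batch of new pods (assumed to pass readiness)
        history.append((old, new))
        old -= batch   # only then terminate the same number of old pods
        history.append((old, new))
    return history


for old, new in rolling_restart(replicas=5, max_surge=2):
    print(f"old={old} new={new} total={old + new}")
```

With five replicas and a surge of two, the total number of ready pods stays between five and seven the whole time, whereas restarting everything at once would briefly take it to zero.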

00:16:40.920

One of Heroku's killer features is its add-on ecosystem, where you can provision anything from databases to video encoding for your application with just a few clicks. The Kubernetes ecosystem has a couple of analogs to this. Helm charts install things into your cluster much as a package manager would. Operators are a pattern in which you define custom resources and run one or more pods inside your cluster that use the Kubernetes API to watch for, and respond to, changes in those resources.

00:18:02.400

Kubernetes itself uses this pattern extensively. When you scale a deployment, a built-in controller compares the desired replica count against the number of running pods, and if there's any discrepancy, it creates or deletes pods as necessary. Helm charts and operators aren't mutually exclusive; you can even use a Helm chart to install an operator into your cluster. They aren't identical, though, and there are trade-offs when choosing one over the other.
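
The "compare desired with actual, then fix the difference" loop is the heart of both built-in controllers and operators. Here is a toy, in-memory version; the real ReplicaSet controller works against the API server and chooses which pods to delete more carefully, while this sketch simply deletes the newest.

```python
from typing import List


class ReplicaController:
    """Toy control loop that keeps len(pods) equal to the desired replica count."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.pods: List[str] = []  # observed state: names of running pods
        self._next_id = 0

    def reconcile(self, desired: int) -> None:
        # Compare desired state with observed state and act on the difference.
        while len(self.pods) < desired:
            self._next_id += 1
            self.pods.append(f"{self.name}-{self._next_id}")  # "create" a pod
        while len(self.pods) > desired:
            self.pods.pop()  # "delete" the most recently created pod


controller = ReplicaController("web")
controller.reconcile(3)          # scale up: web-1, web-2, web-3
controller.pods.remove("web-2")  # simulate a pod crashing
controller.reconcile(3)          # the loop notices and starts web-4
print(controller.pods)           # ['web-1', 'web-3', 'web-4']
```

Note that the loop never asks *why* a pod is missing; it only looks at the gap between desired and observed state, which is what makes the pattern robust.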

00:19:33.840

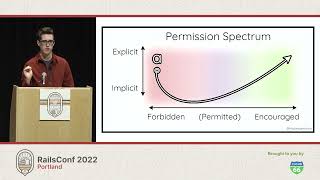

Trade-offs also show up in how far you can dig in. When so much of the operational side is abstracted away for you, you can end up with little or no visibility into what's happening. If you've used Heroku, you may have struggled to understand why something wasn't working and ended up restarting dynos and moving things around. Kubernetes, by contrast, can give you visibility into those operations, but that visibility can be overwhelming. Hiding that complexity is also one of the strengths of Heroku and similar platforms.

00:20:18.780

What about closing services off from the public internet? I'm not sure Heroku allows that; I'm seeing people shake their heads, so it may not. In other words, when you're running something on Heroku, you may not be able to restrict access from the public internet and keep a service available only to your own internal services. Pricing is another trade-off, and it can swing either way depending on how far you intend to go.
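
On the Kubernetes side of that comparison, an internal-only service is the default: a Service of type `ClusterIP` gets an address reachable from inside the cluster, and no public load balancer is created. This sketch builds such a manifest; the service name `billing-api`, its ports, and its selector are hypothetical, and `cluster.local` is the usual default cluster domain but can be configured differently.

```python
import json


def internal_service(name: str, namespace: str, port: int, target_port: int) -> dict:
    """A ClusterIP Service: reachable from inside the cluster, not from the internet."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            # ClusterIP is the default type; unlike LoadBalancer, it does not
            # ask the cloud provider for a public load balancer.
            "type": "ClusterIP",
            "selector": {"app": name},
            "ports": [{"port": port, "targetPort": target_port}],
        },
    }


def cluster_dns_name(name: str, namespace: str, domain: str = "cluster.local") -> str:
    """DNS name other pods in the cluster would use to reach the Service."""
    return f"{name}.{namespace}.svc.{domain}"


svc = internal_service("billing-api", "default", 80, 3000)
print(json.dumps(svc, indent=2))
print(cluster_dns_name("billing-api", "default"))
```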

00:22:32.760

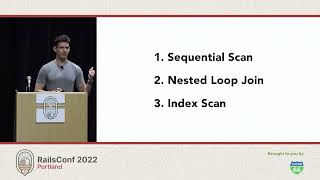

Now for a live demo: we're going to deploy a Rails app to Kubernetes from my iPad. It's going to get weird. I've already set up a Kubernetes cluster on DigitalOcean, since it's convenient and already running. The first thing we need inside the cluster is Ingress, which routes requests from the public internet into the cluster so that our application is reachable.

00:23:22.900

This part isn't strictly necessary, but for convenience I'm also installing cert-manager to provision TLS certificates automatically, along with some settings specific to DigitalOcean load balancers so that TLS certificate validation works. There are a few configuration details to work through, such as annotations, before we run the provisioning script.

00:24:12.280

The script installs a variety of components into the cluster, and it includes a pause while it waits for the load balancer to spin up. We'll keep an eye on that. As soon as the load balancer receives an IP address, its status should change from pending, confirming that it's operational.
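
The "pause until the load balancer has an IP" step is a polling loop. This is a sketch of one way to write it, not the demo's actual script. The IP lookup is passed in as a function so the loop can be tested without a cluster. The `kubectl get service ... -o jsonpath=...` call is real kubectl syntax, but the service name and namespace in the commented example are assumptions, and you should verify that your kubectl version prints empty output (rather than an error) while the IP is still pending.

```python
import subprocess
import time
from typing import Callable, Optional


def kubectl_lb_ip(service: str, namespace: str) -> Optional[str]:
    """Read a Service's load balancer IP via kubectl; empty output means still pending."""
    out = subprocess.run(
        ["kubectl", "get", "service", service, "-n", namespace,
         "-o", "jsonpath={.status.loadBalancer.ingress[0].ip}"],
        capture_output=True, text=True, check=True,
    ).stdout.strip()
    return out or None


def wait_for_ip(get_ip: Callable[[], Optional[str]], timeout_s: float = 600,
                interval_s: float = 10,
                sleep: Callable[[float], None] = time.sleep) -> str:
    """Poll `get_ip` until it returns an address or the timeout expires."""
    deadline = time.monotonic() + timeout_s
    while True:
        ip = get_ip()
        if ip:
            return ip
        if time.monotonic() >= deadline:
            raise TimeoutError("load balancer has no IP address yet")
        sleep(interval_s)


# Example (needs kubectl and a cluster; service and namespace are assumptions):
# ip = wait_for_ip(lambda: kubectl_lb_ip("ingress-nginx-controller", "ingress-nginx"))
```

The timeout matters: as the demo shows, cloud load balancers can take a while, and a script that waits forever is hard to debug.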

00:24:55.880

While we wait for the load balancer's IP address, let's review the annotations set on it. Annotations are simply key-value pairs; here, one of them specifies our hostname to make domain configuration easier. I'm going to keep filling time while everything deploys.
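
Here's what a hostname annotation on a load balancer Service might look like, as a Python dict. The annotation key is, to my knowledge, one that DigitalOcean's cloud controller reads for this purpose, but treat it as an assumption and check DigitalOcean's annotation docs; the Service name, namespace, selector, and hostname are placeholders.

```python
import json

# Annotations are string key/value pairs under metadata.annotations.
# The DigitalOcean key below is an assumption to verify against DigitalOcean's
# docs; the name, namespace, selector, and hostname are placeholders.
lb_service = {
    "apiVersion": "v1",
    "kind": "Service",
    "metadata": {
        "name": "ingress-nginx-controller",
        "namespace": "ingress-nginx",
        "annotations": {
            "service.beta.kubernetes.io/do-loadbalancer-hostname": "apps.example.com",
        },
    },
    "spec": {
        "type": "LoadBalancer",  # asks the cloud provider for a public load balancer
        "selector": {"app.kubernetes.io/name": "ingress-nginx"},
        "ports": [
            {"name": "http", "port": 80, "targetPort": 80},
            {"name": "https", "port": 443, "targetPort": 443},
        ],
    },
}

# Annotation values must be strings, even when they look like numbers or booleans.
annotations = lb_service["metadata"]["annotations"]
assert all(isinstance(v, str) for v in annotations.values())
print(json.dumps(lb_service, indent=2))
```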

00:26:29.340

The application definition we'll apply describes our Rails app using an abstraction that Kubernetes doesn't understand natively. That abstraction is provided by an operator I wrote, and it defines the parameters specific to our Rails app, such as its name, the container image to pull, and the environment variables it needs to run. We need to make sure all of that matches what we expect to deploy.
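
The talk doesn't show the operator's resource definition, so here is a purely hypothetical one to illustrate the idea: a `RailsApp` custom resource with an image, environment variables, and processes, plus the translation step an operator might perform to turn it into ordinary Deployments. The API group, kind, and every spec field are invented for this sketch. A real operator would also watch the API for changes and create or update these objects in the cluster.

```python
from typing import List

# A hypothetical custom resource; the API group, kind, and spec fields are
# invented for this sketch and are not the talk's actual operator.
rails_app = {
    "apiVersion": "example.com/v1alpha1",
    "kind": "RailsApp",
    "metadata": {"name": "blog"},
    "spec": {
        "image": "registry.example.com/blog:v1",
        "env": {"RAILS_ENV": "production", "RAILS_LOG_TO_STDOUT": "true"},
        "processes": {"web": "bundle exec puma", "worker": "bundle exec sidekiq"},
    },
}


def expand(resource: dict) -> List[dict]:
    """Turn one RailsApp into one Deployment per process, sharing image and env."""
    name = resource["metadata"]["name"]
    spec = resource["spec"]
    # Deployments expect env vars as a list of {"name": ..., "value": ...} objects.
    env = [{"name": k, "value": v} for k, v in sorted(spec["env"].items())]
    deployments = []
    for process, command in spec["processes"].items():
        labels = {"app": name, "process": process}
        deployments.append({
            "apiVersion": "apps/v1",
            "kind": "Deployment",
            "metadata": {"name": f"{name}-{process}"},
            "spec": {
                "selector": {"matchLabels": labels},
                "template": {
                    "metadata": {"labels": labels},
                    "spec": {"containers": [{
                        "name": process,
                        "image": spec["image"],
                        "command": ["/bin/sh", "-c", command],
                        "env": env,
                    }]},
                },
            },
        })
    return deployments


for d in expand(rails_app):
    print(d["metadata"]["name"])
```

The design point is that you write one short, app-shaped definition, and the operator fills in the repetitive Kubernetes details.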

00:28:01.060

Can you believe we still don't have an IP address on this? I hope this doesn't get embarrassing. Thankfully, the environment is robust, and we can double-check our load balancers. Even if it isn't working at this exact moment, I promise it will come together.

00:29:33.140

The point of this demonstration is that you can use abstractions like the one I built to simplify deploying to Kubernetes. Spending less time wiring up configuration details lets us focus on the application itself without losing sight of the underlying architecture and how it's deployed.

00:30:36.840

Now I'll take some questions. One attendee asked about the terminal dashboard I was using for Kubernetes. Yes, there was indeed a dog emoji representing nodes, a canine pun on the K8s naming convention. Another question was about the Sidekiq process and whether it needs health checks. If a process runs without health checks, Kubernetes assumes it's healthy as long as it's running.
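
For the Sidekiq question, here is a sketch of a worker container spec with an `exec` liveness probe, plus a toy function expressing the rule from the answer: with no probe, a running container counts as healthy. `bin/sidekiq-alive` is a hypothetical script standing in for whatever check you write, and the timing values are arbitrary examples. In real clusters, a failing liveness probe gets the container restarted, while a failing readiness probe removes the pod from Service endpoints.

```python
from typing import Optional

# Hypothetical container spec for a Sidekiq worker. `bin/sidekiq-alive` is a
# placeholder for a check you write yourself; it should exit 0 when healthy.
worker_container = {
    "name": "worker",
    "image": "registry.example.com/myapp:v1",
    "command": ["bundle", "exec", "sidekiq"],
    "livenessProbe": {
        "exec": {"command": ["bin/sidekiq-alive"]},
        "initialDelaySeconds": 15,
        "periodSeconds": 30,
        "failureThreshold": 3,
    },
}


def considered_healthy(running: bool, probe_passed: Optional[bool]) -> bool:
    """Toy model: `probe_passed` is None when no probe is defined."""
    if not running:
        return False
    if probe_passed is None:
        # No probe: Kubernetes only knows the process is still running.
        return True
    return probe_passed
```

Without a probe, a worker that's stuck but still running looks healthy, which is the main argument for adding one.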

00:31:23.940

The final questions were about encrypted storage for environment variables and the minimum needed to move a Heroku app onto Kubernetes. Encrypting environment variables typically involves a third-party solution like Vault, because Kubernetes Secrets aren't encrypted by default; their values are only base64-encoded.
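
Here's a quick demonstration of why base64 isn't protection: the values under a Secret's `data` field decode with a single standard-library call. The Secret name and the connection string below are made-up examples.

```python
import base64

# Values under a Secret's `data` field are base64-encoded, not encrypted.
# The name and the connection string are made-up examples.
secret = {
    "apiVersion": "v1",
    "kind": "Secret",
    "metadata": {"name": "myapp-env"},
    "type": "Opaque",
    "data": {
        "DATABASE_URL": base64.b64encode(b"postgres://user:pass@db/myapp").decode(),
    },
}

# Anyone who can read the Secret object can recover the plaintext.
decoded = base64.b64decode(secret["data"]["DATABASE_URL"]).decode()
print(decoded)
```

That's why access control on Secrets, encryption at rest in the cluster, or an external store like Vault matters if these values are sensitive.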

00:32:40.500

As for the second question, the Dockerfile you write is the key piece in moving a Heroku app to Kubernetes. Once you have a Dockerfile, you can build container images, and from there you can recreate your app's processes as deployments. With that said, thank you all for coming to the talk today!