00:00:11.179

All right, well thank you everyone. I'm glad you're here. I hope you enjoyed the cheesecake, and I hope it doesn't put you to sleep. I'm Amy, and I'm a back-end engineer at Heroku. Today, I will be talking about how you can add knobs, buttons, and switches to your application to help it alter its behavior when things go wrong.

00:00:21.720

We've all seen applications that can fail dramatically when a single, unimportant service is down, so let's not let that be you. Pilots operate their airplanes from the flight deck, and I have fond memories of Captain Kirk yelling every week to divert power to the shields. This talk is about what kinds of levers you should have for operating your application when the going gets tough.

00:00:41.910

I want you to feel that level of control over your application when you're on call. This talk is about application resilience, but it covers only one part of that topic. I call it the 'just right' talk: it's not about the catastrophic fires, and it's not about the casual, everyday failures, but about the failures in between.

00:01:07.500

No one action you take on behalf of a customer has a 100% chance of success. Maybe they provided bad data, maybe there's some conflicting state either between you and another service or between two dependent services.

00:01:19.020

It could also be that the customer has hit a particular race condition, or that you hit a network glitch. Whatever the reason, that request and many others like it may not succeed, but those failures are not what I'm talking about today. This talk assumes that you already have ways to retry requests and to unwind multi-step actions when needed. I've talked about those strategies at previous RailsConfs, and I want to highlight them because, depending on your current situation, they may give you more bang for your buck.

00:02:10.940

I'm also not talking about disaster recovery: the catastrophic events such as losing your database, losing all your backups, and aliens abducting everyone. Good luck with that! Even though this is the 'just right' talk, you may get more out of working on the failures happening quietly right now, or out of planning for failures you hope will never occur but that could end your business.

00:02:29.680

Now, this entire talk may not be 'just right' for you. I've been fortunate to work at companies that care deeply about providing a great, reliable, and resilient customer experience, and how we provide those services reflects our values. You must make difficult choices about what to do in bad situations, depending on the size of your application, your customer base, and your product.

00:02:59.660

You may end up asking your product people or even your business owners, 'What would you like me to do in this situation?' So, what am I talking about? I'm discussing strategies that help you shed load, fail gracefully, and protect struggling services. We'll go over seven tools that can help you do that. I'll provide some implementation details for each and offer some 'buyer beware' warnings at the end.

00:04:01.340

Let's jump right in. The first one I want to talk about is maintenance mode. Going into maintenance mode is your hard no. It should have a clear, consistent message with a link to your status page, and most importantly, it should be easy to switch on. At Heroku, we implement this as an environment variable.

00:04:14.330

The key thing here is that it's one button you can press, not a series of levers and dials. You should not have to follow a long playbook to make this work for you.
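To make the "one button" idea concrete, here is a minimal sketch of maintenance mode as Rack middleware. It assumes a hypothetical `MAINTENANCE_MODE` environment variable and a placeholder status page URL; it follows Rack's `call(env)` convention but doesn't need the rack gem to run.

```ruby
# Minimal sketch: one environment variable switches the whole app into
# maintenance mode. MAINTENANCE_MODE and STATUS_PAGE are hypothetical.
class MaintenanceMode
  STATUS_PAGE = "https://status.example.com" # placeholder URL

  def initialize(app, env_vars = ENV)
    @app = app
    @env_vars = env_vars # injectable so the switch can be tested
  end

  def call(env)
    return @app.call(env) unless enabled?

    body = "We're down for maintenance. Check #{STATUS_PAGE} for updates."
    # 503 tells clients the outage is temporary; retry-after hints when to return.
    [503, { "content-type" => "text/plain", "retry-after" => "600" }, [body]]
  end

  private

  # A single switch: "1", "true", or "on" turns maintenance mode on.
  def enabled?
    %w[1 true on].include?(@env_vars.fetch("MAINTENANCE_MODE", "").downcase)
  end
end

app = ->(_env) { [200, { "content-type" => "text/plain" }, ["OK"]] }
status, _headers, _body = MaintenanceMode.new(app, { "MAINTENANCE_MODE" => "true" }).call({})
puts status # => 503
```

In a Rails app, middleware like this could be registered near the top of the stack (for example with `config.middleware.insert_before 0, MaintenanceMode`), so that no request reaches a failing dependency while the switch is on.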

00:04:38.550

The next feature I want to discuss is read-only mode. Most pieces of software exist to effect some sort of change in another system. I'm guessing that for most of us at RailsConf, the work our applications perform is to alter a relational database.

00:05:01.410

Consider what your application does for users. It might store data in a database, transform files and upload them to a file server, or launch containers on EC2 instances. Once you understand what your application modifies, think about what you could still do if you couldn't modify it. What questions can you still answer? Some of you may be operating a narrowly scoped service, and the answer may be nothing. That's fine; this tool may not be for you.

00:05:41.490

If you have a service like a classic Rails blog, this can be very useful. Most people probably just want to read your blog; they don't want to alter it; they aren't publishing. My current job involves an application that has a variety of disparate services, so we need finer-grained tools. However, this is a good first step.

00:06:10.830

We typically implement read-only mode through an environment variable, to keep it consistent with maintenance mode. Whatever mechanism you choose, apply it consistently.
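A minimal sketch of read-only mode in the same style, assuming a hypothetical `READ_ONLY_MODE` environment variable. It treats HTTP methods that only read (GET, HEAD, OPTIONS) as safe and refuses everything else; an app whose reads have side effects would need a finer-grained check.

```ruby
# Minimal sketch: reads pass through, writes are refused while the
# hypothetical READ_ONLY_MODE environment variable is on.
class ReadOnlyMode
  SAFE_METHODS = %w[GET HEAD OPTIONS].freeze

  def initialize(app, env_vars = ENV)
    @app = app
    @env_vars = env_vars
  end

  def call(env)
    if read_only? && !SAFE_METHODS.include?(env["REQUEST_METHOD"])
      # 503 signals a temporary condition, so well-behaved clients can retry later.
      return [503, { "content-type" => "text/plain" },
              ["We're temporarily read-only; changes can't be saved right now."]]
    end
    @app.call(env)
  end

  private

  def read_only?
    %w[1 true on].include?(@env_vars.fetch("READ_ONLY_MODE", "").downcase)
  end
end

app = ->(_env) { [200, {}, ["OK"]] }
blog = ReadOnlyMode.new(app, { "READ_ONLY_MODE" => "on" })
blog.call({ "REQUEST_METHOD" => "GET" }).first  # => 200, readers still get the blog
blog.call({ "REQUEST_METHOD" => "POST" }).first # => 503, publishing is paused
```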

00:06:36.840

Next, let's talk about feature flags. Feature flags can be used for more than just new features; they can also give you control when part of your app isn't functioning properly. Imagine being able to switch off billing, or stop selling new products, with a flag when that part of your system is failing.

00:07:04.009

There are different levels of feature flags that may be useful. The first is the individual user flag, although it may not help you much during an incident, since incidents rarely affect just one user. The second type is the global, application-wide flag.

00:07:36.340

As mentioned before, a global flag could switch off billing, or freeze modifications to all the containers we run for customers. However, what we find most useful is the group level. At Heroku, we run user applications on our platform, so the most relevant groups typically consist of applications running in a particular region.

00:08:18.050

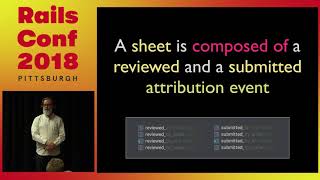

You should consider what groupings are meaningful for your business, since that depends heavily on who your users are and what you want to control. We implement this with a class that answers questions about the current application state. For us, this class is backed by our database.

00:08:53.759

However, that may not be the right choice for you. This model could also rely on Redis or an in-memory cache. With an in-memory cache, though, each web process would hold its own copy of the application state, which can complicate matters. One interesting option I can think of is curling a file named 'billing_enabled' in a specific S3 bucket.

00:09:26.600

You want to ensure that this check doesn't fail when you're handling the failure of another component. For groups, I recommend one switch per group, each identified by its own string. Having that many strings might sound silly, but during off-hours we find strings easier to copy and paste than instantiating an application setting model.

00:10:06.230

We can quickly toggle the enabled flag, which gives us more confidence that when we ask about the current application state, we know exactly what we're getting.
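Here is a minimal sketch of a class that answers questions about application state, with global and per-group flags named by plain strings. The Hash store stands in for a database table, and the flag and region names are hypothetical.

```ruby
# Minimal sketch: global and group-level flags keyed by plain strings.
# The Hash stands in for a database table; flag names are hypothetical.
class ApplicationState
  def initialize(store = {})
    @store = store # flag name (String) => true/false
  end

  # Global flag, e.g. "billing_enabled". A missing row means "on".
  def enabled?(flag)
    @store.fetch(flag, true)
  end

  # Group flag: one string per group, e.g. "billing_enabled:eu".
  # The global flag and the group's own flag must both be on.
  def enabled_for_group?(flag, group)
    enabled?(flag) && @store.fetch("#{flag}:#{group}", true)
  end

  def disable!(name)
    @store[name] = false
  end

  def enable!(name)
    @store[name] = true
  end
end

state = ApplicationState.new
state.disable!("billing_enabled:eu")               # easy to copy and paste at 3 a.m.
state.enabled_for_group?("billing_enabled", "eu")  # => false
state.enabled_for_group?("billing_enabled", "us")  # => true
```

Defaulting missing rows to "on" means a forgotten row never silently disables a feature; for risky new features you might prefer the opposite default.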

00:10:29.210

Next, I want to discuss rate limits. Rate limits protect you from disrespectful and malicious traffic, and they can also help you shed load. If you need to drop half of your traffic to remain operational, you should do that. Customers sending well-behaved traffic may need to try a couple of times to get a particular request through, but if they keep trying, they'll be able to achieve their goals.

00:10:56.590

This strategy is similar to what AWS does when it needs to reject a significant number of requests due to load. As AWS customers, once we understand that we're being throttled, we start behaving in a way that benefits both them and us.

00:11:17.250

We stop sending excess traffic and focus on repeating our most important requests to them. Eventually, those crucial requests get accepted without overwhelming them.
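One common way to stop sending excess traffic while still repeating the most important requests is exponential backoff with jitter, applied only to critical calls. This is a sketch of that general technique, not of Heroku's code; `ThrottledError`, the delays, and the `critical:` parameter are assumptions, and the `sleeper:` argument exists only so tests can skip real sleeping.

```ruby
# Minimal sketch of backing off when a dependency throttles us.
# ThrottledError and all delay values are hypothetical.
class ThrottledError < StandardError; end

# Critical requests retry with exponential backoff plus "full jitter";
# non-critical requests give up at the first throttle instead of adding load.
def with_backoff(critical:, max_attempts: 5, base_delay: 0.1, max_delay: 5.0,
                 sleeper: ->(seconds) { sleep(seconds) })
  attempt = 0
  begin
    attempt += 1
    yield
  rescue ThrottledError
    raise unless critical && attempt < max_attempts

    # Wait a random time up to the exponentially growing cap.
    cap = [max_delay, base_delay * (2**(attempt - 1))].min
    sleeper.call(rand * cap)
    retry
  end
end

calls = 0
result = with_backoff(critical: true, sleeper: ->(_s) {}) do
  calls += 1
  raise ThrottledError if calls < 3 # throttled twice, accepted on the third try
  :accepted
end
```

The random jitter keeps many clients from retrying in lockstep, which would otherwise recreate the very spike that caused the throttling.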

00:12:02.970

Rate limits can also help protect access to your application from other parts of the business that rely on you. Often, the single application a user interacts with is a confluence of different services all working together to present a unified user experience.

00:12:30.080

While it could be argued that you can make that internal system work even when other services are down, it can be easier to ensure that preferred traffic is prioritized. This approach helps maintain a unified front to customers and keeps you operational longer.

00:12:50.470

We implement rate limits as a combination of two types of levers: a single default and numerous modifiers for user accounts. This grants us the flexibility to provide different users the rate limits they need while retaining a single control over traffic processing.

00:13:13.680

For example, we may set a base rate limit of 100 requests per minute. Hopefully we can handle more than that; the number is just for easy math.

00:13:45.450

Our customer may start with a modifier of 1. To determine the customer's rate limit, we multiply the default of 100 by their modifier of 1, resulting in a rate limit of 100 requests per minute.

00:14:02.940

Let's say that same customer writes in and requests a temporary increase due to legitimate reasons, so we bump them up to a modifier of 2. Their new rate limit becomes 200 requests per minute.

00:14:31.800

Later, when we are under heavy load and need to reduce traffic, we can cut the default rate limit, say from 100 to 50. Every customer account's rate limit then drops automatically.

00:14:54.050

Thus, our customer drops back to a limit of 100 requests per minute. This approach allows us to quickly address increased loads without adjusting every single user’s rate limit.
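The arithmetic above, as a minimal sketch: the effective limit is the global default multiplied by a per-account modifier. The class and method names are hypothetical, and a real app would persist these values rather than keep them in memory.

```ruby
# Minimal sketch of "one default, many modifiers" rate limits.
# Names are hypothetical; values would normally live in a database.
class RateLimits
  attr_accessor :default_per_minute

  def initialize(default_per_minute:)
    @default_per_minute = default_per_minute
    @modifiers = Hash.new(1) # every account starts with a modifier of 1
  end

  def set_modifier(account_id, modifier)
    @modifiers[account_id] = modifier
  end

  # Effective limit = global default x per-account modifier.
  def limit_for(account_id)
    (default_per_minute * @modifiers[account_id]).floor
  end
end

limits = RateLimits.new(default_per_minute: 100)
limits.limit_for("acme")       # => 100 (100 x 1)
limits.set_modifier("acme", 2)
limits.limit_for("acme")       # => 200 (temporary increase)
limits.default_per_minute = 50 # shed load: one change affects every account
limits.limit_for("acme")       # => 100 (50 x 2)
```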

00:15:19.050

I should mention that depending on your application, you may want to consider cost-based rate limiting, which might be a better choice than request-based limiting. In such a case, you charge users a number of tokens based on the type of their requests.

00:15:42.559

This can prevent users from overwhelming slow endpoints while still allowing more traffic through cheaper, faster endpoints.
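A minimal sketch of cost-based rate limiting with a token bucket per account. The endpoint names and costs are hypothetical (you would derive real costs from your own measurements), and the clock is injectable so the refill behavior can be tested. Each check is constant-time, which matters given the denial-of-service concern below.

```ruby
# Minimal sketch: each request spends tokens according to its cost.
# Endpoint names and costs are hypothetical.
class CostBasedLimiter
  COSTS = { "GET /apps" => 1, "POST /builds" => 10 }.freeze
  DEFAULT_COST = 1

  def initialize(capacity:, refill_per_second:,
                 clock: -> { Process.clock_gettime(Process::CLOCK_MONOTONIC) })
    @capacity = capacity.to_f
    @refill_per_second = refill_per_second.to_f
    @clock = clock
    @buckets = {} # account_id => [tokens, last_refill_time]
  end

  # Charges the request's cost and returns true if it fits; otherwise false.
  def allow?(account_id, endpoint)
    cost = COSTS.fetch(endpoint, DEFAULT_COST)
    now = @clock.call
    tokens, last = @buckets.fetch(account_id, [@capacity, now])
    # Refill for the elapsed time, never above capacity.
    tokens = [@capacity, tokens + (now - last) * @refill_per_second].min
    allowed = tokens >= cost
    tokens -= cost if allowed
    @buckets[account_id] = [tokens, now]
    allowed
  end
end

t = 0.0
limiter = CostBasedLimiter.new(capacity: 20, refill_per_second: 1, clock: -> { t })
limiter.allow?("acme", "POST /builds") # => true  (20 -> 10 tokens)
limiter.allow?("acme", "POST /builds") # => true  (10 -> 0 tokens)
limiter.allow?("acme", "GET /apps")    # => false (no tokens left)
t += 1.0
limiter.allow?("acme", "GET /apps")    # => true  (1 token refilled)
```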

00:16:12.510

It's crucial to understand that the more complex your rate-limiting algorithm, the worse it holds up against denial of service attacks. The more computation it takes to reject a request, the more trouble you'll be in when a flood of requests arrives.

00:16:29.470

This does not mean you should shy away from complex rate limiting if necessary, but you should ensure you have other layers in place to fend off distributed denial of service attacks. This applies even to unintentional denial of service attacks.

00:16:58.950

Next, I want to discuss the importance of stopping non-critical work. If you’re hitting limits on your database, maxing out your compute resources, or reaching limitations of another dependent service, you should stop any non-urgent reports or jobs that don’t need to run immediately.

00:17:17.780

This could mean turning off jobs that don't need attention for the next hour, or perhaps the next four hours. How do we do this? With application settings: specifically, a model for report settings.

00:17:48.050

Every report and every job checks that it is enabled before it runs. For example, let's say we have a monthly user report with a run method.

00:18:06.600

Before doing any work, the run method checks the report settings to confirm that the monthly user report is enabled.

00:18:28.680

To make this more general, your monthly user report can inherit from a parent report class, and the parent class can wrap every run method with that enabled check.

00:18:56.210

This means that any time an engineer adds a new job, it can be enabled or disabled through one change in the database, Redis, S3, or whatever store you prefer.
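Here is a minimal sketch of the parent-class pattern. `ReportSettings` is a hypothetical stand-in backed by an in-memory Hash (in practice a database model, Redis, or an S3 object), and deriving the setting name from the class name is just one possible convention.

```ruby
# Minimal sketch: every report checks a setting before doing any work.
# ReportSettings is a hypothetical in-memory stand-in for a real store.
class ReportSettings
  @disabled = {}

  class << self
    def enabled?(name)
      !@disabled.fetch(name, false)
    end

    def disable!(name)
      @disabled[name] = true
    end

    def enable!(name)
      @disabled.delete(name)
    end
  end
end

class Report
  # Callers always use #run; subclasses implement #perform.
  def run
    return :skipped unless ReportSettings.enabled?(setting_name)

    perform
  end

  private

  # "MonthlyUserReport" -> "monthly_user_report"
  def setting_name
    self.class.name.gsub(/([a-z\d])([A-Z])/, '\1_\2').downcase
  end
end

class MonthlyUserReport < Report
  private

  def perform
    :generated # the real report work would go here
  end
end

MonthlyUserReport.new.run                      # => :generated
ReportSettings.disable!("monthly_user_report") # shed non-critical work
MonthlyUserReport.new.run                      # => :skipped
```

Any new subclass of `Report` picks up the check automatically, so it can be switched off the day it ships.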

00:19:37.090

Next, I want to address known unknowns. I am confident that none of you have ever shipped non-performant code, but I have. New code may not perform as expected for your biggest customers.

00:20:05.290

You might want a way to control that code if it gets out of hand. Many new features go live on the assumption that they will perform well, even when we have some doubts.

00:20:38.250

To take precaution, put a flag around new features. You could use feature flags or the 'scientist' gem for refactoring, allowing you to roll out changes gradually and quickly disable experimental code if issues arise.
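A minimal sketch of a percentage rollout around a new code path, with the old path kept as the fallback. `ROLLOUT`, the flag name, and both search methods are hypothetical; this is a plain feature-flag pattern, not the scientist gem's API.

```ruby
require "zlib"

# Hypothetical flag store: flag name => percentage of accounts (0-100)
# that get the new code path.
ROLLOUT = { "new_search" => 10 }

# Deterministic bucketing: an account always lands in the same bucket,
# so a customer doesn't flip between code paths on every request.
def rolled_out?(flag, account_id)
  percentage = ROLLOUT.fetch(flag, 0)
  Zlib.crc32("#{flag}:#{account_id}") % 100 < percentage
end

def search(account_id, query)
  if rolled_out?("new_search", account_id)
    new_search(query)
  else
    legacy_search(query)
  end
end

def new_search(query)
  "new:#{query}"    # stand-in for the new, unproven implementation
end

def legacy_search(query)
  "legacy:#{query}" # stand-in for the existing implementation
end

ROLLOUT["new_search"] = 0 # a hint of doubt during an incident: turn it off
search(42, "dynos")       # => "legacy:dynos"
```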

00:21:04.950

The great advantage of having many configurable items is that if you have even a hint of doubt, you can simply turn things off. This helps you avoid rabbit holes where an engineer spends hours during an incident trying to confirm whether the new code is the cause.

00:21:37.550

Next, I want to discuss circuit breakers. Circuit breakers let you treat dependent services well and avoid overwhelming them while they recover. They're typically automatic shut-offs that react to metrics such as the number of timeouts or the error rate.

00:22:08.060

When the thresholds are met, the circuit breaker can cut off calls, giving those services time to recover while allowing your web processes to focus on other requests.

00:22:39.260

Circuit breakers should function more rapidly than a monitoring service that pages your on-call engineer. The hope is that by the time you page the on-call engineer, the circuit breaker is already in effect, improving the failure mode.

00:23:04.599

Circuit breakers can also be tripped manually. This is useful when you want to temporarily keep traffic away from a struggling service, or when an internal service misbehaves.

00:23:51.440

These shut-offs work much like the way our monthly user report inherits from the report class: a parent service client class builds in automatic circuit breakers, and every child client, such as our billing service client, inherits them.
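A minimal sketch of both ideas: a circuit breaker that opens after consecutive failures, fails fast during a cooldown, then lets a trial call through ("half-open"), and that can also be forced open by hand; plus a parent `ServiceClient` that builds it in so child clients inherit it. All thresholds, `BillingServiceClient`, and its `charge` method are hypothetical, and the sketch is not thread-safe.

```ruby
# Minimal circuit breaker sketch; thresholds are hypothetical.
class CircuitBreaker
  class OpenError < StandardError; end

  def initialize(failure_threshold: 5, cooldown: 30,
                 clock: -> { Process.clock_gettime(Process::CLOCK_MONOTONIC) })
    @failure_threshold = failure_threshold
    @cooldown = cooldown
    @clock = clock
    @failures = 0
    @opened_at = nil
    @forced_open = false
  end

  # Manual controls for the on-call engineer.
  def force_open!
    @forced_open = true
  end

  def release!
    @forced_open = false
  end

  # Open means calls are blocked. After the cooldown, one trial call may pass.
  def open?
    return true if @forced_open

    !@opened_at.nil? && @clock.call - @opened_at < @cooldown
  end

  def call
    raise OpenError, "circuit open" if open?

    result = yield
    @failures = 0
    @opened_at = nil # a success closes the circuit again
    result
  rescue OpenError
    raise # failing fast is not a new failure of the service
  rescue StandardError
    @failures += 1
    @opened_at = @clock.call if @failures >= @failure_threshold
    raise
  end
end

# Parent class: every child client routes its calls through the breaker.
class ServiceClient
  attr_reader :breaker

  def initialize(breaker: CircuitBreaker.new)
    @breaker = breaker
  end

  private

  def request(&block)
    breaker.call(&block)
  end
end

class BillingServiceClient < ServiceClient
  def charge(account_id, cents)
    request { { account_id: account_id, charged: cents } } # stand-in for an HTTP call
  end
end

billing = BillingServiceClient.new
billing.charge("acme", 500)  # goes through while the circuit is closed
billing.breaker.force_open!  # manual shut-off: charge now raises CircuitBreaker::OpenError
```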

00:24:19.060

Build good tools for managing circuit breakers, so that flipping one never depends on a developer typing into a production console. It's also essential that the terminology is clear to your on-call engineers.

00:24:46.280

Everyone should understand the language the same way, especially at critical hours. With circuit breakers, for example, it's easy to forget that an 'open' circuit is the one blocking traffic.

00:25:02.750

Now, regarding implementation, with all these buttons and switches, you should consider how to design them and where to maintain their state.

00:25:35.330

There are a variety of options available; you could store them in a relational database, a cache layer, environment variables, or as a last resort even directly in your code.

00:26:06.690

Consider whether flipping a switch could require access to a component that is down, because that outage may be exactly when you need the switch.
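One possible way to keep a switch usable while its backing store is down, sketched under assumptions: the lookup checks a hypothetical `SWITCH_<NAME>` environment variable override first, then the primary store, and falls back to a hard-coded default if the store raises. This is an illustration of the concern above, not how Heroku stores its switches.

```ruby
# Minimal sketch: reading a switch must not depend on a component that may be down.
# The SWITCH_<NAME> override convention and all names are hypothetical.
class Switch
  def initialize(name, default:, store:, env: ENV)
    @name = name
    @default = default
    @store = store # anything responding to #fetch(name); may raise when down
    @env = env
  end

  def on?
    override = @env["SWITCH_#{@name.upcase}"]
    return %w[1 true on].include?(override.downcase) if override

    @store.fetch(@name)
  rescue StandardError
    @default # store unreachable or value missing: use the coded default
  end
end

# Simulates a database that is currently unreachable.
class DownStore
  def fetch(_name)
    raise IOError, "database unreachable"
  end
end

Switch.new("billing_enabled", default: true, store: DownStore.new, env: {}).on? # => true
Switch.new("billing_enabled", default: true, store: DownStore.new,
           env: { "SWITCH_BILLING_ENABLED" => "false" }).on?                    # => false
```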

00:26:44.370

Keeping mutable state in environment variables can make certain failure cases harder to manage. One reason we rely heavily on databases for our application state is our confidence in our database team's ability to help us.

00:27:07.090

Ultimately, when our application behaves poorly, our path to resolution often involves running SQL commands to toggle state in a database that is still operational.

00:27:34.020

Finally, when utilizing switches, it's important to consider the complexity in determining whether a switch is flipped. The fancier the switch, the more likely it is to become a part of your problem.

00:28:07.230

You trade knowledge for control: the more switches you have, the less predictable your application's behavior becomes. Have you tested how your application behaves with several switches flipped at once?

00:28:40.189

Keeping production, staging, and development environments consistent has been a challenge for many teams. I don't know of a definitive solution to that, but I'd rather have the control to mitigate issues in my application than know with confidence exactly how it's down.

00:29:08.859

Thank you for joining me today. I hope I have given you some ideas for improving your application's resilience to the inevitable fires you may encounter in the future. We have two excellent speakers coming up tomorrow morning after the keynote, so please check them out.

00:29:45.150

If you're interested in learning more about using Kafka with Rails or how Postgres 10 can improve your life, come by our booth tomorrow. I am happy to take questions for about seven minutes before we wrap this up.

00:30:15.150

Yes, please go ahead. The question is about when we start thinking about adding a new knob or switch.

00:30:27.820

I typically consider this after an incident. Some knobs are longer-term solutions, but many stem from situations where something went wrong, and we lacked the ability to control it.

00:30:47.469

Yes, regarding the training of new developers, I believe this ties into how we onboard people to be on-call.

00:30:54.229

We implement shadowing opportunities, allowing new hires to observe on-call engineers during the daytime when they aren't receiving pages.

00:31:06.590

We do have documentation, but it can be challenging to navigate while in a fatigued state, so ideally, we want them to know what to search for.

00:31:21.590

Encouraging engineers to reach out to others for clarification is crucial; I want new engineers to feel comfortable paging me if they need assistance.

00:31:32.769

As previously mentioned, we primarily store state in PostgreSQL and Redis due to the confidence in our data team’s infrastructure.

00:31:58.940

I see people queuing for the next talk, so thank you very much, everyone!