00:00:00.900

Hello, and welcome! My name is Stephen Prater, and I'm a Staff Engineer at Shopify. Today, I'm here to talk about performance tuning, specifically what peak performance looks like.

00:00:03.800

You may not like it, but this presentation is meant to help you understand that reasoning about the performance of your code doesn’t need to require a Ph.D. in computer science or specialized tools. This talk will not include a lot of complex math. If you’ve been through a technical interview in the last five years, you’ve likely been asked to write an algorithm and then explain its Big O complexity. However, if you've held a software engineering job in that time frame, you probably haven’t needed to do this at work.

00:00:32.880

In reality, your algorithms may not be so neatly contained. They are likely spread out across several methods in multiple classes, and attempting to calculate the Big O complexity by hand may seem like a waste of time, and you’d probably be right. In our daily work, we encounter three Big O complexities that we should be concerned with. There's O(1), O(n), and then there's O(#$*&!). I know that sounds flippant, but categorizing your runtime into these three buckets can help you understand why they exist and verify that you're extracting the maximum performance from your program.

00:01:05.760

It's quite simple to make these categories without requiring a ton of complicated math or even doing heavy reasoning about the algorithm in your head. So how do we do this? How can we categorize runtimes without relying solely on traditional tricks, such as identifying nested loops, especially when those tricks are not readily available?

00:01:32.700

It's challenging to see a nested loop when it exists deep within a different application or far up the stack trace, but we can use visualizations. OpenTelemetry offers a collection of tools, APIs, and SDKs that can be used to instrument, generate, collect, and export telemetry data such as metrics, logs, and traces. This data can help us analyze the performance and behavior of our software. These tools can provide us with traces and graphs of distributed algorithms, and learning to recognize performance characteristics by examining these graphs can shortcut the process of understanding the complexities within our systems.

00:02:08.880

We won't delve too much into how to instrument your code using OpenTelemetry, though I will share a few tips along the way. More comprehensive tutorials are available for those seeking deeper knowledge. Our focus today will be on how to interpret the data once your code is instrumented. We'll talk about how to spot and eliminate performance problems, earn a reputation as a wizard, and yes, even summon an army of cats to assist in your nefarious plans.

00:02:30.780

As I mentioned, we won't dive too deep into the instrumentation aspect at this moment. However, I do want to emphasize a cautionary note: performance can only be measured, never merely calculated. This is a great quote from a person I know on the internet. It follows that you can only improve the performance that you measure. Hence, how you instrument your code will significantly impact the problems you can visualize.

00:02:57.100

Today, we’ll look primarily at flame graphs and distributed tracing timelines from Jaeger. These traces are generated from code where I’ve instrumented every single method call using TracePoint or clever metaprogramming. I wouldn’t actually implement this in real life, as it would flood you with data that lacks actionable insights. Instead, the goal here is to illustrate the general principle of analyzing graph shapes within your everyday work.

00:03:15.000

In practice, you're more likely to encounter invisible complexities spread across multiple call sites and even various programs. Fundamentally, your program can slow down for two reasons: either there’s too much of something or too little. This means that when your program is waiting on something—be it itself to finish its tasks or another program's completion—that's when the slowness kicks in.

00:03:43.200

A common point of discussion regarding performance is whether your application is I/O-bound or CPU-bound. While those terms have their place, I believe it's more useful to evaluate the problem from a standpoint of what your program is waiting on.

00:04:12.300

Let's discuss waiting on external factors, as this type of slowness is the one you are most likely to encounter in modern applications. In contemporary architecture, you often wait for collaborating programs to return information you need. Waiting is when your beautiful software collides with the harsh realities of operations.

00:04:44.700

Executing processes on the CPU takes time; reading files from the disk takes time; interacting with that challenging API you dread takes time. All these slow functionalities are impacted by the physical world. Sometimes that sluggishness is unavoidable. I mean, what can you do if an API takes three seconds to respond to every call?

00:05:07.920

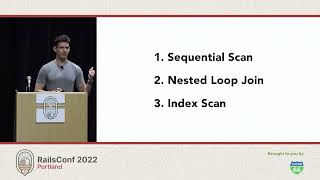

This brings us to our first flame graph. What do you think it shows? The picture represents a common performance issue in Rails known as the N+1 query problem. This occurs when you forget to eager load a one-to-many association. As a result, iterating through the association forces you to execute multiple database queries to fetch each object one at a time.

00:05:42.960

The solution to the N+1 query issue is widely known: eager load your associations. But why is the N+1 query slow? Each row fetched from the database needs to be converted into a Ruby object and then pushed onto the association. The number of trips to the database—especially if you are making multiple requests over the network—takes more time than processing iterations on the CPU. Thus, our first rule of performance is, 'Closer is better.' Move storage closer to your operations and leverage the CPU instead of the network or disk.

00:06:11.060

Now, let's discuss waiting on I/O, which is an inevitability. Every time you read from a non-local data source—like when making an API call or connecting to an external database—you are invariably going to engage in I/O wait. Each of these sequential operations requires waiting for the previous operation to complete.

00:06:41.940

Using our flame graph, we can find that a significant portion of our time is spent on activities like I/O wait. This is the most common performance bottleneck encountered within distributed systems. Tools like Jaeger and Tempo excel at revealing these issues.

00:07:06.000

A telltale sign of waiting on I/O is seeing suspiciously round numbers in your traces. If a call consistently takes precisely 30 seconds or almost exactly a thousand milliseconds, you should investigate whether there's a timeout or a sleep in the code. Another indicator is when you notice significant differences in call durations.

00:07:35.820

This leads us to our second rule of performance: don't wait around! For example, if your program retrieves cat images from an external API, while waiting for those images, it can perform other tasks instead. When we analyze our program, we can optimize the handling of those cat images.

00:08:02.640

In fact, we can optimize our programs to retrieve multiple cat images simultaneously rather than sequentially, effectively reducing the total wait time. This does not mean to write everything as a single sequential block, as that can result in extended wait periods.

00:08:30.720

At this point, I want to transition into JavaScript to discuss parallelism. JavaScript elegantly handles I/O wait with a native support for promises. Depending on your experience level, you might remember how promises are syntactic sugar over traditional async callbacks.

00:09:02.880

You may also be aware that they can be semantically equivalent to a callback function as shown in older examples. In these cases, we don’t need to wait until an asynchronous function finishes before moving on to execute the next task because those tasks can run concurrently.

00:09:33.420

Now, while Ruby's concurrency primitives aren’t as seamless as JavaScript's, it's important to remember that there's often no need to wait for one function to complete before starting another. This is about efficiently managing performance by utilizing concurrency.

00:10:06.060

This insight can yield significant performance benefits, as was demonstrated with the example of fetching cat images. Plus, we can further enhance this by implementing processes that will run in parallel without requiring cumbersome modifications to our code.

00:10:34.800

Imagine we're tasked with summoning a fleet of cats. Each cat requires two seconds to summon, and petting them takes an additional ten seconds—totaling twelve seconds per cat if done sequentially. However, if we summon and pet two cats at once, the total time drops to six seconds.

00:11:08.280

If we summon many cats simultaneously, we can achieve a significant reduction in the time taken. But, while adding more hands seems beneficial, we encounter the limitations outlined by Amdahl's Law.

00:11:36.300

Amdahl's Law implies that the overall speedup of a system is limited by the sequential portion of its workload. Therefore, while we can summon and pet cats at a higher rate, we cannot exceed certain performance thresholds.

00:12:02.640

For any task that remains fundamentally sequential, such as waiting for a cat to be both summoned and petted, there will be a natural limit to the maximum speed achievable.

00:12:33.480

This alludes to one of the core observations in algorithm analysis: the fastest you can go is defined by the time taken to perform a single operation. In Big O notation, we eliminate constants, so an operation taking twelve seconds can still be considered O(1) if it always takes the same time.

00:13:07.200

Remember, most interesting programs don’t deal with singular operations. Instead, programming tends to revolve around collections of objects, e.g., summoning and petting each cat. This cumulative process introduces predictable timelines that we can assess and optimize.

00:13:39.780

The methodology for handling multiple object interactions follows linear time complexity. Consequently, as the number of cats grows, so does the time required for each task.

00:14:12.960

These linear operations might reveal hidden slowdowns, especially when working with larger collections. Are you performing additional linear operations that compound with the original task?

00:14:44.100

Introducing sorting functionality presents algorithmic complexities with increased overhead. Sorting requires interacting with all elements methodically, generating different operating complexities depending on implementation.

00:15:15.720

While it’s obvious that sorting introduces complexity, additional operations like aggregating or bucketing further complicate matters. Understanding that these operations significantly affect computational efficiency leads us to our fourth rule: doing something with everything is complicated.

00:15:49.380

Most algorithmic complexities present challenges, especially as you work with various collections of items. Manipulating entire sets of inputs, such as sorting or executing operations on groups, results in further overhead. The canonical example of this is sorting, which serves as a key focus during performance assessments.

00:16:15.000

A prime example is the BOGO sort algorithm: arranging all cats in every possible combination to find a sorted order. This is an O(n!) operation, driven by factorial time complexity due to the n factorial permutations.

00:16:48.120

In my demonstration using a coding environment, a very similar factorial operation can cause delays to the degree that sorting would be impossible for more than ten objects. This immense time requirement becomes clear when visualizing performance data.

00:17:22.680

Unfortunately, performance bottlenecks grow dramatically with factorial problem complexity due to the rapid increase of permutations as inputs scale. If you come across this shape after visualizing your performance metrics, take it as a signal that something's gone wrong.

00:17:58.440

As we consider sorting algorithms, a selection sort may arise. Here’s how it works: two arrays work together to repeatedly find the smallest element in the unsorted array, placing it into a sorted array. It's pleasing from a conceptual perspective, but overall, this method is less efficient.

00:18:32.040

With selection sort implemented using recursion and no looping, the overhead becomes more manageable but demonstrates the increased complexity. In everyday programming tasks, your performance issues can stem from multiple factors.

00:19:01.620

Our task is to recognize shapes predictably in performance metrics. For nested loops and polynomial time complexity, a common visual representation is the sawtooth pattern. The growth associated with polynomial algorithms follows predictable phenomena but still requires careful oversight.

00:19:31.920

Taking that analogy further, as we organize our collections, the time taken grows polynomially. More input results in proportionately more output. Sorting or aggregating completes in O(n²) for larger collections and is an essential consideration when designing applications.

00:20:04.260

A key improvement in algorithmic design is merging, which works at a logarithmic level. Merger sorts generally function well as they leverage recursive divisions to minimize operations needed for organizing data.

00:20:39.360

If the logarithmic approach observes the performance trends, we find success in categorizing these more effectively. Logarithmic algorithms trend in terms of execution time, often producing an upside-down triangle in visual graphs after an analysis.

00:21:12.960

While parallelization strategies yield different results, mainly as a factor of workload being applied, employing merging techniques is vital to reducing overall complexity when sorting collections.

00:21:50.400

As we strive towards performance pruning, we encounter the universal scalability law which guides the limits of our pursuits. A big takeaway—especially when addressing performance needs—is recognizing how competition for resources can compromise the advantages of parallelization.

00:22:29.100

Balancing the increase in available resources with costs is crucial. This balance weighs against physical realities when scaling successful applications. Performance hinges on allocating resources effectively within a defined budget.

00:23:07.500

Taken as a whole, effective engineering applies sound logic to ensure that we parse results, identifying potential bottlenecks efficiently while fostering ongoing performance improvements.

00:23:30.740

We recognize the necessity for performance testing to avoid future degradation. Whether by managing algorithmic efficiency gains or monitoring rate limits, we can stop any performance regressions before they arise. Measurements taken over time will help you identify your program’s needs and adjust accordingly.

00:24:14.500

In conclusion, we have discussed rules that can aid your performance optimization journey. Rules such as keeping things close, avoiding wait states, recognizing your upper limits, and acknowledging the complexities involved in working with collections.

00:24:48.080

Today's lesson comes back full circle to understanding that delivering fair performance doesn’t always equate to diving headfirst into continuous improvements. You’ll be faced with budget constraints and practicality considerations that require careful assessment of the code you develop and deploy for production.

00:25:23.000

Be mindful that performance is one important aspect, but ultimately, the usability of the program to your users holds the most weight. As we navigate the world of software engineering, always measure your progress and remember to evaluate when it’s time to stop optimizing!