00:00:05.299

Thank you for coming to my session. The theme of this session is scaling Rails API to handle write-heavy traffic.

00:00:12.660

First, I will introduce myself.

00:00:19.199

I am Takumasa Ochi, a software engineer and product manager at DeNA.

00:00:25.800

I am responsible for developing back-end systems for mobile game apps. I love both developing and playing games.

00:00:31.920

I also work with Ruby and Ruby on Rails. This is our company, DeNA, whose mission is to delight people beyond their wildest dreams.

00:00:41.340

We offer a wide variety of services, including games, sports, live streaming, healthcare, automotive, e-commerce, and more.

00:00:48.920

We started using multiple databases for e-commerce in the 2000s.

00:00:57.780

Today, I will discuss games and multiple databases. This is the agenda for today's session.

00:01:05.960

First, I will present an explanation of mobile game API backends and the characteristics of mobile game apps. We will also have a quick overview of scalable database configurations. Then, we will move on to API designs for write scalability, exploring application API response design and sharding.

00:01:26.880

After that, we will discuss some pitfalls around multiple databases and testing with multiple databases. Finally, I will share considerations about the past, present, and future of multiple databases at our game servers.

00:01:58.500

Let's start with mobile game API backends.

00:02:10.979

Generally speaking, mobile game API backends can be split into two categories: real-time servers and non-real-time servers. In this session, we will focus on non-real-time servers. The objectives of these servers are to provide secure data storage, manage transactions, and enable multiplayer or multi-device communications.

00:02:29.280

For example, non-real-time servers handle inventories, friends, daily bonuses, and other transactions. This figure illustrates an example of API calls: a client consumes energy and helps a friend, while the server conducts a resource exchange transaction and returns a successful response.

00:02:41.340

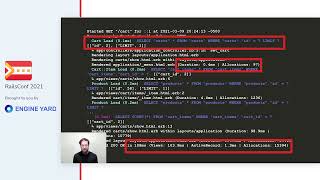

This slide explains the system overview of our mobile game API backends. We started developing our game API server in the mid-2010s. The system runs on Ruby on Rails 5.2 and primarily operates on Infrastructure as a Service (IaaS).

00:02:55.980

The main API is a monolithic application, and there are other components such as proxy servers, management tools, and HTML servers for web views. We utilize the API mode to minimize controller modules and overhead.

00:03:06.360

Additionally, we employ schema-first development using protocol buffers, and we utilize MySQL, Memcached, and Elasticsearch. Now, let's examine the characteristics of mobile game apps.

00:03:15.180

How do you try a new game app? You tap the install button, wait a minute, and start playing. It's super easy for players; however, it is also super easy for the servers to face write-heavy traffic. This slide outlines the reasons for the write-heavy traffic.

00:03:41.040

First, as mentioned earlier, easy installation leads to easy write access. Downloading is very simple, and mobile app distribution platforms ensure installations from all around the globe. There is no need to create an account, and the first in-game action equates to the first write operation. Secondly, various in-game actions trigger accesses, especially writes, such as receiving daily bonuses, engaging in battles, and purchasing items. Generally speaking, the order of magnitude for mobile apps ranges from 10,000 requests per second to 1,000,000 requests per second. Therefore, scaling the API to handle write-heavy traffic is essential.

00:05:06.900

Now, let's take a quick overview of scalable database configurations. This slide provides an overview of our database configurations. From the application side, we use vertical splitting, horizontal sharding, and read-write splitting. On the infrastructure side, we conduct load balancing on readers and automatic writer failover. Let's look at each of these components one by one.

00:05:53.419

The first technique is vertical splitting, where we divide the databases into two parts. The first part is common: it contains one writer node and is optimized for read-heavy traffic, and we prioritize consistency over scalability here. For example, game setting data and pointers to shards are stored here. The other part is sharded, which is the main focus of today's session. It has multiple writer nodes optimized for write-heavy traffic, and we prioritize scalability over consistency in this section. Player-generated data is primarily stored in this database.

00:07:34.560

In horizontal sharding, we consider data locality concerning each player and partition it based on player ID rather than resource types. This architecture is effective in ensuring consistency for each player and is efficient for player-based data access. We maintain a mapping table called player_shards to assign each shard. We determine a shard based on a player's first action using weighted sampling. This dynamic shard allocation is flexible for adding new shards and balancing the load among them.
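The allocation step described here can be sketched in plain Ruby. This is a minimal illustration, not the speaker's implementation: the `ShardAllocator` class, the shard names, and the in-memory hash standing in for the player_shards table are all assumptions made for the example.

```ruby
# Hypothetical in-memory stand-in for the player_shards mapping table.
class ShardAllocator
  # weights: { shard_name => non-negative integer weight }
  def initialize(weights, rng: Random.new)
    @weights = weights
    @rng = rng
    @player_shards = {} # player_id => shard name
  end

  # Returns the player's shard, assigning one on the player's first action.
  def shard_for(player_id)
    @player_shards[player_id] ||= sample
  end

  private

  # Weighted sampling: a shard with weight w is chosen with probability w / total.
  def sample
    total = @weights.values.sum
    point = @rng.rand(total) # integer in 0...total
    @weights.each do |shard, weight|
      return shard if point < weight
      point -= weight
    end
    raise "unreachable: weights must be non-negative integers"
  end
end

# A weight of 0 takes a shard out of rotation for new players;
# raising the weight of a newly added shard steers new players toward it.
allocator = ShardAllocator.new({ "shard_1" => 1, "shard_2" => 3, "shard_full" => 0 })
puts allocator.shard_for(42)
```

Because the assignment is stored rather than computed from the player ID, changing the weights later affects only players who have not yet been assigned.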

00:08:41.460

One limitation of this architecture is the maximum number of inserts per second into the mapping table; for MySQL, the limit is on the order of thousands or tens of thousands of queries per second. If we hit this limit, we can utilize a range mapping table or distributed mapping tables. We also employ manual read-write splitting. This method is complex but effective: API servers access writer nodes to retrieve data used for transactions and the requesting player's own data, while they access reader nodes for non-transactional data and other players’ data.

00:09:17.460

From the infrastructure side, we implement various multiple database systems. The key concept here is to do that outside of Rails, which increases flexibility and achieves separation of concerns. In our case, we use a DNS-based service discovery system with a custom DNS resolver. Load balancing is conducted on the weighted sampling of IP addresses, and automatic writer failover is executed by changing the IP address to a new writer node.

00:09:56.030

Thus, every aspect of multiple databases is essential for scalability. Let's delve deeper into some critical features.

00:10:15.160

Now we are going to explore the API design for write scalability.

00:10:12.740

Let's start with traffic offload by replication. Replication is an effective technique to offload read traffic. Here, we replicate DDL and DML from writers to readers, meaning that almost the same data is available on reader nodes. By offloading read traffic to these readers, we can reduce the load on writers, making the system more scalable.

00:10:41.360

However, there is a replication delay. Because of it, the latest data is guaranteed to be available only on the writer node. So, how do we balance scalability with consistency?

00:11:13.260

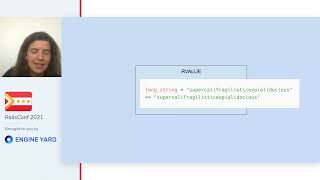

The solution is read-your-writes consistency, which guarantees that a client always sees its own writes. This slide shows read-your-writes consistency in Rails 6. It uses time-based connection switching: when there is a recent write, the request handler connects to the writer. Rails 6 ships this connection switching, and by default it tolerates a 2-second replication delay. Using this architecture, we gain high scalability for read-heavy applications while ensuring consistency for each client's own writes.
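To make the time-based rule concrete, here is a simplified model in plain Ruby. It is a sketch of the idea only, not the Rails middleware: the `TimeBasedResolver` class and its method names are hypothetical, and the 2-second default mirrors the delay mentioned in the talk.

```ruby
# Simplified model of time-based connection switching (not the Rails middleware itself).
class TimeBasedResolver
  def initialize(delay: 2.0)
    @delay = delay
    @last_write_at = {} # session id => time of that session's last write
  end

  def record_write(session_id, now: Time.now)
    @last_write_at[session_id] = now
  end

  # Reads go to the writer while a recent write may not have replicated yet.
  def role_for_read(session_id, now: Time.now)
    last = @last_write_at[session_id]
    return :reading if last.nil?

    (now - last) < @delay ? :writing : :reading
  end
end

resolver = TimeBasedResolver.new
resolver.record_write("session-1")
puts resolver.role_for_read("session-1") # a read right after a write uses the writer
```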

00:11:56.880

On the other hand, there are two main concerns with this architecture. First, it is not ideal for write-heavy applications: the proportion of requests routed to the writer is high, which makes offloading less effective. Second, we must maintain a delicate balance between replication delay and the share of traffic sent to the writer. When the replication delay increases, the switching window must grow, so more reads go to the writer, leading to scalability issues. Therefore, we must further improve this for write-heavy traffic.

00:12:57.420

This slide explains read-your-writes consistency for write-heavy applications. The strategy here is resource-based: a player's own data and critical data are read from writers, while other players' data and less critical data are read from readers. The advantage of this architecture is its effectiveness for write-heavy traffic, because much longer replication delays, such as tens or hundreds of seconds, are tolerated. Such replication delays often occur due to cloud infrastructure or request surges.
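The resource-based rule can be written as a small decision function. This is an illustrative sketch: the function name, its keyword arguments, and the `:writer` / `:reader` symbols are assumptions for the example, not names from the speaker's codebase.

```ruby
# Chooses a database role from data ownership instead of write recency.
def role_for(requester_id:, owner_id:, critical: false, in_transaction: false)
  return :writer if in_transaction           # data used inside a transaction
  return :writer if requester_id == owner_id # the requesting player's own data
  return :writer if critical                 # critical data, regardless of owner

  :reader                                    # other players' or less critical data
end

puts role_for(requester_id: 1, owner_id: 1) # own data
puts role_for(requester_id: 1, owner_id: 2) # another player's data
```

Since the decision never depends on elapsed time, a long replication delay only makes other players' data staler; it does not push more traffic to the writers.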

00:13:59.800

This architecture effectively offloads inter-player API traffic, as fewer connections to writers are required. However, the drawbacks include complicated business logic and ineffectiveness at offloading intra-player API traffic, since reads of a player's own data still go to writers. In essence, this architecture significantly enhances scalability, but further improvement is necessary, particularly for intra-player APIs.

00:14:52.440

Let's now examine traffic offload by resource-focused API design. Let's conduct a case study. The scenario involves buying hamburgers and adding them to an inventory system, where there is an infinite supply of hamburgers. In this scenario, player A buys hamburgers, decreasing their wallet balance while increasing the items in their inventory.

00:15:33.320

What APIs are required for this scenario? There are three necessary APIs: create burger purchase, index items in the knapsack, and show wallet. On your right-hand side, you can see the Rails routes for these resources. The API call sequence is illustrated on the left. Initially, a client calls the index items in the knapsack API and the show wallet API. The player then decides what to purchase, calling the create burger purchase and index items in the knapsack APIs again.

00:16:23.280

As the figure shows, each of these API calls accesses the writer. With time-based switching in Rails 6, this is caused by the recent writes; with resource-based read-your-writes consistency, it is because players read their own resources. So, how do we reduce these accesses to the writer?

00:17:18.000

The question arises: why do we fetch data from a writer? This is necessary because the items in the wallet and inventory are being modified. Those modified items are tracked within the controller as ActiveRecord instances.

00:17:54.840

Thus, the solution is to include all the affected resources in the API response. In this scenario, the response contains two types of resources: the primary target resource, which is the burger purchase, and the affected resources, which are the items in the inventory and the wallet.

00:18:30.720

In the JSON sample on the right, all resources are accounted for in the response. As illustrated, the client initiates the index items in the knapsack and show wallet call in the first action. After deciding what to buy, the client calls only create burger purchase, since all required resources are included in this response. Consequently, there are no read API requests aside from the initial call, and there are no direct accesses to the writer database save for the transaction itself.
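A response of this shape might be assembled as follows. This is a plain-Ruby sketch under stated assumptions: `Wallet` and `Item` are hypothetical structs standing in for the Active Record instances mentioned in the talk, and the JSON key names (`burger_purchase`, `affected_resources`) are illustrative rather than taken from the slide.

```ruby
require "json"

# Hypothetical in-memory models standing in for Active Record instances
# that were already loaded and modified inside the transaction.
Wallet = Struct.new(:player_id, :balance)
Item = Struct.new(:name, :quantity)

def create_burger_purchase(wallet, inventory, quantity:, unit_price:)
  cost = quantity * unit_price
  raise ArgumentError, "insufficient balance" if wallet.balance < cost

  wallet.balance -= cost
  burger = inventory.find { |item| item.name == "burger" } ||
           Item.new("burger", 0).tap { |item| inventory << item }
  burger.quantity += quantity

  {
    burger_purchase: { quantity: quantity, cost: cost }, # primary target resource
    affected_resources: {
      wallet: wallet.to_h,  # modified by the purchase
      items: [burger.to_h]  # only the items that changed
    }
  }
end

response = create_burger_purchase(Wallet.new(1, 100), [], quantity: 2, unit_price: 30)
puts JSON.generate(response)
```

The client can update its local copy of the wallet and inventory from this one response, so it has no reason to call the read APIs again after the purchase.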

00:19:20.040

This approach benefits both the databases and the clients. Some resources may be duplicated across responses, which adds complexity, but the key point is that the writer database can focus on transactions, the only work that readers cannot take over.

00:20:01.440

This slide summarizes the mixed results of replication and resource-focused API design. On one hand, read-your-writes consistency based on data ownership proves effective for write-heavy traffic and inter-player APIs. On the other hand, including affected resources in write API responses effectively minimizes unnecessary read API requests and database accesses.

00:20:54.040

As a mixed result, we have achieved the following outcomes: first, we have developed a complex yet adaptive architecture thanks to manual read-write switching; second, we maximized traffic offload by including affected resources in write API responses; third, we improved robustness against replication delay, tolerating significantly longer replication delays. Thus, we accomplished both consistency and write scalability while also improving the robustness of our application.

00:21:52.680

We have now successfully redirected writer databases to focus on transactions. However, what happens if the number of transactions exceeds the capacity of the writer databases? The answer lies in sharding.

00:22:07.200

Let's explore scalable data locality in the context of sharding. Generally, multi-tenant sharding involves splitting data by ownership, ensuring minimal interaction between shards.

00:22:46.380

This architecture is scalable; simply adding another shard is sufficient to achieve desired performance. Moreover, sharding represents an efficient architecture that guarantees optimal data locality.

00:23:27.280

However, in single-tenant multiplayer games, interactions between shards become crucial. Players in one large game world must interact with each other, so sharding by itself does not guarantee optimal data locality.

00:23:47.760

Moreover, sharding can lead to consistency issues or failures over the network, which necessitates careful management. The objective of data locality is to enhance performance and consistency by optimally placing data. The basic strategy involves minimizing operational costs while maintaining access ends to shared resources.

00:24:37.020

Let's conduct some case studies to illustrate this architecture.

00:25:10.500

The first scenario presents a situation where player A and player B train themselves, while player C calls one of them for assistance. In this case, obtaining the latest training state is unnecessary, and the request mix is 95% training requests and only 5% help requests. The solution is a pull architecture, as illustrated in the accompanying figure.

00:25:51.660

Here, we locate data according to ownership, indexed by player ID. The 95% of requests that are training writes involve only one writer, while the 5% that are help requests read from one reader in addition to one writer. This architecture performs well for most write requests.

00:26:39.480

The next scenario is about sending gifts, listing gifts, and receiving them. In this context, player A and player B send gifts to player C, who lists them chronologically. The requests consist of 30% gift sending requests and 70% gift listing or receiving requests. The proposed solution is a push architecture, where data is located at the receiver's end, indexed by the receiver's player ID.

00:27:23.760

Consequently, 30% of write requests involve two writers, whereas 70% involve only one writer. Although there is an added cost for sending gifts, this architecture proves effective for the majority of read-write requests, eliminating the need for costly merge sort operations due to already-indexed data.
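The push placement can be illustrated with in-memory shards. This is a sketch only: `GiftStore`, its methods, and the shard names are hypothetical, and nested hashes stand in for real databases.

```ruby
# Hypothetical in-memory shards: shard name => { receiver_id => [gifts] }.
class GiftStore
  def initialize(shard_of)
    @shard_of = shard_of # player_id => shard name
    @shards = Hash.new { |h, k| h[k] = Hash.new { |g, id| g[id] = [] } }
  end

  # Push: the gift is written to the receiver's shard at send time.
  def send_gift(sender_id:, receiver_id:, item:, sent_at:)
    shard = @shard_of.fetch(receiver_id)
    @shards[shard][receiver_id] << { sender_id: sender_id, item: item, sent_at: sent_at }
  end

  # Listing touches only the receiver's shard; no cross-shard merge is needed.
  def list_gifts(receiver_id)
    shard = @shard_of.fetch(receiver_id)
    @shards[shard][receiver_id].sort_by { |gift| gift[:sent_at] }
  end

  # Names of shards that currently hold at least one gift.
  def shards_holding_gifts
    @shards.select { |_, gifts| gifts.any? { |_, list| !list.empty? } }.keys
  end
end

store = GiftStore.new({ "A" => "shard_a", "B" => "shard_b", "C" => "shard_c" })
store.send_gift(sender_id: "B", receiver_id: "C", item: "potion", sent_at: 2)
store.send_gift(sender_id: "A", receiver_id: "C", item: "sword", sent_at: 1)
puts store.list_gifts("C").map { |gift| gift[:item] }.inspect
```

In a real database the per-receiver ordering would come from an index on the receiver's shard rather than the in-memory sort used here.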

00:28:13.959

The third scenario involves players becoming friends and listing them. Here, player A, player B, and player C list friends, with player C linking with player B. The request comprises 95% listing requests and 5% occasional linking requests. The solution here is a double-write architecture, enabling data to be correctly indexed by both player ID and friend player ID.

00:28:55.180

Thus, 5% of write requests will involve at most two writers, while 95% of read requests involve only one reader. This proves efficient for most read requests. Now, let’s move on to a more complex scenario.
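The double-write layout for friendships can be sketched the same way. The `FriendStore` class and shard names are hypothetical, and the two writes in `link` are not atomic across shards in this sketch; handling that is the subject of the consistency discussion later in the session.

```ruby
require "set"

# Hypothetical in-memory shards: shard name => { player_id => Set of friend ids }.
class FriendStore
  def initialize(shard_of)
    @shard_of = shard_of # player_id => shard name
    @shards = Hash.new { |h, k| h[k] = Hash.new { |f, id| f[id] = Set.new } }
  end

  # Double write: one row per direction, each stored on the shard of the
  # player it is indexed by. At most two writers are involved.
  def link(player_id, friend_id)
    @shards[@shard_of.fetch(player_id)][player_id] << friend_id
    @shards[@shard_of.fetch(friend_id)][friend_id] << player_id
  end

  # Listing reads only the player's own shard.
  def list_friends(player_id)
    @shards[@shard_of.fetch(player_id)][player_id].to_a.sort
  end
end

friends = FriendStore.new({ "A" => "shard_1", "B" => "shard_1", "C" => "shard_2" })
friends.link("C", "B")
puts friends.list_friends("B").inspect
puts friends.list_friends("C").inspect
```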

00:29:45.460

In this scenario, players A, B, and C join a guild to combat a raid boss. They share a limited number of consumable weapons, and both ACID guarantees and performance are critical when updating player health states and remaining weapon states. The solution here is partial resharding.

00:30:36.580

From a database perspective, the players belong to different shards, yet they are part of the same guild, and we need both ACID guarantees and performance. The first step is selecting another shard for the guild using weighted sampling. We then locate the resources essential to the raid boss battle in the guild’s shard.

00:31:26.020

This arrangement ensures both ACID guarantees and performance since only one shard is involved per transaction. Non-critical resources remain on their original shards. This methodology allows guild-based transactions and APIs to be handled efficiently, ensuring reliable ACID transactions.

00:32:14.700

We have effectively enhanced data locality while achieving scalability. This data locality approach benefits not only conventional databases but also some distributed databases, considering their underlying architecture.

00:32:54.580

However, significant consistency issues due to sharding still need to be addressed. Keeping consistency over multiple databases presents considerable challenges, and it did so particularly in the mid-2010s, when we started developing our game server.

00:33:50.960

Some claimed that two-phase commits, such as XA transactions, could ensure atomicity across multiple databases.

00:34:32.420

This slide provides an overview of the two-phase commit for comparative study. There are two participant types: transaction managers (here the API server) and resource managers (the MySQL databases). The procedure initiates with the transaction manager requesting resource managers to update records. Upon completion, resource managers inform the transaction manager whether they’re ready to commit.

00:35:27.180

If all resource managers are ready to commit, the transaction manager instructs them to proceed; if not, all are triggered to roll back. At least that is straightforward for nominal cases.
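The nominal flow of a two-phase commit can be modeled in a few lines. This is an in-memory illustration, not MySQL XA: the `ResourceManager` class and the `two_phase_commit` function are hypothetical names, and failures between the phases are deliberately not modeled.

```ruby
# Minimal in-memory model of two-phase commit; not MySQL XA itself.
class ResourceManager
  attr_reader :committed

  def initialize(can_prepare: true)
    @can_prepare = can_prepare
    @pending = nil
    @committed = []
  end

  def update(record)
    @pending = record
  end

  # Vote: true means "ready to commit".
  def prepare
    @can_prepare && !@pending.nil?
  end

  def commit
    @committed << @pending
    @pending = nil
  end

  def rollback
    @pending = nil
  end
end

# The transaction manager role, played by the API server in the talk.
# updates: { resource_manager => record to write }
def two_phase_commit(updates)
  updates.each { |rm, record| rm.update(record) } # request the updates
  votes = updates.keys.map(&:prepare)             # phase 1: collect votes
  if votes.all?
    updates.each_key(&:commit)                    # phase 2: commit everywhere
    :committed
  else
    updates.each_key(&:rollback)                  # any "no" vote rolls back all
    :rolled_back
  end
end

shard_a = ResourceManager.new
shard_b = ResourceManager.new
puts two_phase_commit({ shard_a => "consume item", shard_b => "add gift" })
```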

00:36:19.360

However, prior to MySQL 5.7, XA transactions were not safe to use with replication. Moreover, two-phase commits such as XA transactions introduce scalability issues. To prioritize scalability, we must relax consistency while still maintaining practically acceptable levels.

00:37:23.560

Next, we will conduct a case study focusing on consistency. Consider a scenario where player A consumes an item within their knapsack and player B receives the consumed amount as a gift. While this may seem simple, the implementation of relaxed consistency within Rails 6 is, in fact, quite intricate.

00:38:19.640

Rule one is to reduce the risk of cross-shard transaction anomalies. Before proposing this rule, we have to verify preconditions for relaxed cross-shard transactions. First, all resource managers should have the same underlying architecture, such as MySQL, and they need to be resilient to failures that may occur during continuous periods.

00:39:04.560

Let's analyze the figures on your right side; two MySQL databases are displayed. The top part illustrates a conventional two-phase commit, while the lower part demonstrates proposed relaxed transactions. In the latter case, the transaction manager directly instructs resource managers to update their records. They respond affirmatively, indicating that they’ll likely commit, which allows the transaction manager to proceed with the commitment commands.

00:39:57.920

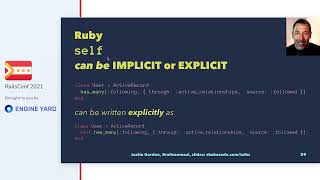

Next, we'll explore the Rails code that implements relaxed transactions. First, two nested transaction blocks commit consecutively at the end of their scopes. At the beginning of the outer transaction block, we call Player.lock.find(player.id) to lock the resource and prevent race conditions, which gives a higher success rate.

00:40:59.760

The primary business logic is omitted in this example. Near the end of the transaction blocks, the save methods are invoked consecutively, followed immediately by the consecutive commits. This structure is integral to relaxed transactions, as it minimizes the window of uncertainty in both the transaction manager and the resource managers.
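The slide's code is not reproduced in this transcript, so the following is only a stand-in that shows the commit ordering of two nested transaction blocks. `FakeShard` is a hypothetical in-memory class, not Active Record; row locking is not modeled, and the keys and values are made up for the example.

```ruby
# In-memory stand-in for a database shard. `transaction` commits the staged
# writes when its block finishes and discards them if the block raises.
class FakeShard
  attr_reader :name, :rows

  def initialize(name, log)
    @name = name
    @log = log
    @rows = {}
    @staged = {}
  end

  def transaction
    @staged = {}
    yield self
    @rows.merge!(@staged)
    @log << "COMMIT #{@name}"
  rescue StandardError
    @log << "ROLLBACK #{@name}"
    raise
  ensure
    @staged = {}
  end

  def save(key, value)
    @staged[key] = value
    @log << "UPDATE #{@name}"
  end
end

log = []
shard_a = FakeShard.new("shard_a", log)
shard_b = FakeShard.new("shard_b", log)

shard_a.transaction do |a|
  shard_b.transaction do |b|
    # ... business logic omitted ...
    a.save("item:player_a", 9) # the saves run back to back at the very end
    b.save("gift:player_b", 1)
  end                          # inner block commits shard_b here
end                            # outer block commits shard_a right after

puts log.inspect
```

Keeping the saves and the two commits adjacent leaves only a short window in which one shard has committed and the other has not.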

00:41:51.800

However, rare anomalies still occur, including a failure of the transaction manager (the Rails process) midway through the consecutive commits, a network failure between the consecutive commits, or a failure of a shard node before the final commit. Here, we aimed for a success rate comparable to XA in nominal cases, setting aside the difference that XA adds a prepare phase before the commits.

00:42:37.680

However, challenges may arise if we lack persistent logs within resource managers. Thus, we attain a reasonable success rate for nominal cases.

00:43:11.720

Rule two is to ensure that inconsistencies are observable and acceptable. Before establishing this rule, we should understand the three transaction states: none of the shards having committed (the failure state), some but not all shards having committed (the anomaly state), and all shards having successfully committed (the success state). Given an anomalous state, conventional two-phase commits will require all participating shards to commit.

00:43:53.760

First, we serialize the commits to control their order, which reduces the chance of reaching an anomalous state. Second, any inconsistency should be observable by the transaction manager. From this viewpoint, the sender becomes the trigger for retry and recovery.

00:44:27.920

This necessitates tolerating differences in perceived outcomes while ensuring that the next action does not interfere with subsequent recoveries. This practice helps to maintain a non-blocking characteristic.

00:45:43.800

Finally, rule three emphasizes maintaining idempotency and achieving eventual consistency. The proposed solution utilizes retry mechanisms, the simplest yet most reliable method for recovering from failures, including network failures outside server systems. To make retries safe, we must employ an idempotency key that identifies the resources involved; this may be referred to as a transaction ID, idempotency key, or request ID.
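A retry is only safe if repeating a request has no additional effect, which is what the key makes possible (such a key is commonly called an idempotency key). The sketch below is a plain-Ruby illustration: `GiftBox`, `with_retry`, the `request_id` parameter, and the use of `IOError` to model a lost response are all assumptions for the example.

```ruby
class GiftBox
  attr_reader :gifts

  def initialize
    @gifts = []
    @processed = {} # request_id => stored result; stands in for a history table
  end

  # Applying the same request twice has the same effect as applying it once.
  def receive(request_id:, item:, amount:)
    return @processed[request_id] if @processed.key?(request_id)

    @gifts << { item: item, amount: amount }
    @processed[request_id] = { status: :created, gift_count: @gifts.size }
  end
end

# Retries the block on a transient error (modeled here by IOError).
def with_retry(attempts: 3)
  tries = 0
  begin
    tries += 1
    yield
  rescue IOError
    retry if tries < attempts
    raise
  end
end

box = GiftBox.new
calls = 0
result = with_retry do
  calls += 1
  response = box.receive(request_id: "req-1", item: "potion", amount: 1)
  raise IOError, "response lost" if calls == 1 # the write succeeded, the reply did not
  response
end

puts result.inspect
puts box.gifts.size
```

The second attempt returns the stored result instead of adding another gift, so the client can retry until it sees a response.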

00:46:30.780

We structure the business logic by incorporating the inconsistency history into tables, allowing identification of initial conditions conveniently. Upon review of Rails code, we see that all resources persist if the associated transaction history is intact. The scenario describes how to handle anomalies by adjusting existing gifts.

00:47:24.750

This demonstrates how introducing idempotency and eventual consistency enables us to handle both database anomalies and failures outside our servers. As a result, we not only secure eventual consistency but also enhance scalability. I believe this approach is practically sound. If your requirements are stricter, rule four advises using an at-least-once message queue to guarantee completion.

00:48:15.240

The prerequisites for this rule are that the business logic should already comply with rules one, two, and three. This architecture is divided into two phases: enqueue and dequeue. In the enqueue phase, data is recorded into a single shard database where ACID is guaranteed. A message is committed along with data within the transaction.

00:49:15.180

This ensures that at least one message exists, and messages are processed only after transaction completion. We use timeouts within Rails and the associated databases, and perform_later with a delay for the queue operations.

00:49:53.400

Meanwhile, during the dequeue phase, we retrieve messages from the queue and ascertain the existence of corresponding data in the database. If it doesn’t exist, the transaction is rolled back. Should the data exist, we proceed with the transaction to completion.
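The two phases can be sketched with plain hashes and an array. This rests on one reading of the description, flagged as an assumption: the message is published before the commit, so a message may exist for a transaction that was rolled back, but a committed transaction never lacks its message. `Message`, `enqueue_phase`, and `dequeue_phase` are hypothetical names.

```ruby
Message = Struct.new(:transaction_id, :receiver_id, :amount)

# Enqueue phase. Assumption: the message is published before the commit, so a
# message can outlive a rolled-back transaction, never the reverse.
def enqueue_phase(sender_shard, queue, message, commit: true)
  queue << message
  sender_shard[message.transaction_id] = { amount: message.amount } if commit
  commit
end

# Dequeue phase: a message may be delivered more than once; every outcome is safe.
def dequeue_phase(sender_shard, receiver_shard, message)
  return :discarded unless sender_shard.key?(message.transaction_id)  # sender rolled back
  return :already_done if receiver_shard.key?(message.transaction_id) # duplicate delivery

  receiver_shard[message.transaction_id] = {
    receiver_id: message.receiver_id,
    amount: message.amount
  }
  :completed
end

sender_shard = {}
receiver_shard = {}
queue = []

committed = Message.new("tx-1", "player_b", 3)
rolled_back = Message.new("tx-2", "player_b", 1)
enqueue_phase(sender_shard, queue, committed)
enqueue_phase(sender_shard, queue, rolled_back, commit: false)

# Deliver every message, then redeliver the first one to mimic at-least-once delivery.
outcomes = (queue + [committed]).map { |m| dequeue_phase(sender_shard, receiver_shard, m) }
puts outcomes.inspect
```

Keying the receiver's row by the transaction ID is what makes duplicate delivery harmless here.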

00:50:41.060

This methodology guarantees at-least-once job execution, though care must also be taken to handle Ruby process crashes. With this configuration, we aim for theoretically guaranteed completion of cross-shard transactions.

00:51:33.650

In summary, we have acquired practically acceptable consistency without sacrificing scalability. These considerations are essential for sharded databases, and ideally they should be resolved by the databases themselves.

00:52:26.740

Yet, there are additional aspects to consider regarding write scalability, particularly the pitfalls concerning multiple database configurations.

00:53:10.140

The primary goal of sharding is to achieve horizontal write scalability for databases. Specifically, given n as the number of shards, we aim to keep time and space complexity near O(1) or O(log n). Although this sounds trivial, it is achievable through sharding.

00:54:02.460

Nonetheless, we encounter various pitfalls, such as the number of connections per database. The connection pool within Rails caches and reuses established database connections for performance, presenting limitations since the cached pool cannot be shared across processes.

00:54:57.320

Additionally, CRuby's GVL (Global VM Lock) permits only one thread to run Ruby code at a time per process. Together, these two limitations mean we often run more processes than CPU cores, and each API server connects to a multitude of databases.

00:55:44.460

For example, with 1,000 servers of 32 CPU cores each, and each process holding up to 5 connections per database, the product can easily exceed the maximum connection limit of a database.
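The multiplication can be worked through directly. The sketch assumes exactly one process per CPU core, which is a lower bound given that the process count often exceeds the core count; the function name is hypothetical.

```ruby
# Upper bound on connections a single database may see from the API fleet.
def max_connections_per_database(servers:, processes_per_server:, pool_size:)
  servers * processes_per_server * pool_size
end

total = max_connections_per_database(
  servers: 1_000,
  processes_per_server: 32, # assumption: one process per CPU core
  pool_size: 5              # max pooled connections per process per database
)
puts total # 160000
```

Against a per-database limit in the hundreds or low thousands, 160,000 potential connections is off by roughly two orders of magnitude.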

00:56:34.860

This scenario leads to deteriorating database performance stemming from high connection volumes. Under typical conditions with cloud computing providers, connection limits lie in the hundreds or low thousands, necessitating strategic targeting of connection volumes.

00:57:34.040

Strategies to reduce connection volume include assuming an average of one active connection per database request, followed by efficient release management. The solutions involve multiplexing connections with proxies or periodic flushing of stale database connections.

00:58:24.560

An example of the first solution is PgBouncer for PostgreSQL, which reduces connection overhead. However, this may disable specific features of some databases, and connection pooling may require clearing out connections after each request.

00:59:14.560

Active Record has a function for refreshing connections for this purpose. Although reconnecting adds overhead, it isn't a significant issue for MySQL, so this resolves the connection volume concern effectively.

01:00:02.540

The next pitfall concerns the connection reaper thread assigned per API server, which naturally flushes idle database connections. This was once enabled by default in Rails 5,

01:00:53.780

but resulted in connection surges when numerous threads were created for each database connection. As a result, there were numerous reaper threads and performance degradation ensued.

01:01:47.920

To summarize the pitfalls, we learned that even well-constructed architecture can be challenged by substantial numbers of databases, with the potential for more risks emerging.

01:02:35.380

To avoid pitfalls, thorough testing with multiple databases is essential. If it isn’t tested, it should be assumed to be broken.

01:03:30.440

Recommendations for testing include real remote databases in the test environment, employing actual multi-database configurations, avoiding mocks, and utilizing proper toolset, while ensuring multiple sharding options.

01:04:30.840

We also recommend using real transactions during tests, simulating partial transactional failures to ensure robust error handling algorithms.

01:05:31.140

Additionally, anomalous situations should be injected in sandbox environments to assess system performance under failure conditions.

01:06:32.180

Lastly, conducting load tests before service launches is crucial to understand system performance under variable loads.

01:07:27.190

Now we can relax about multiple databases and their impact on our game server as we assess the past, present, and future of multiple database configurations.

01:08:30.440

When we initiated our project, Rails 2 was the latest version, lacking any inherent support for multiple databases. As a result, we began extending Rails using various gems and implementing multiple monkey patches. Our focus was to prioritize performance over complexity, as many features were implemented successfully for our games.

01:09:39.920

Currently, we find ourselves working across two different worlds: Rails 5.2 with extended gems and monkey patches, and Rails 6 and later with native support for multiple databases.

01:10:21.400

Common issues are being resolved in upstream Rails, and new features continue to be implemented, although discrepancies in codebases remain. Many complex solutions being proposed are being adjusted as user needs evolve.

01:11:16.480

Moving forward, we plan to simplify our multiple database strategy, aligning it with upstream developments. This will allow us to continuously evolve while contributing our technical expertise back to Rails, converting technical debts into capital.

01:12:23.580

Now, let’s summarize today’s session. We have explored various techniques to scale Rails API to handle write-heavy traffic. First, we utilized all aspects of multiple databases, including vertical splitting, horizontal sharding, replication, and load balancing.

01:12:55.200

Next, we allowed writer databases to focus on transactions, while replication efforts handled read traffic more efficiently through resource-focused API response designs that minimized unnecessary access to the writers.

01:13:48.740

Afterward, we optimized data locality to reduce the overhead of accessing multiple shards. We assessed different architectural models, such as pull, push, double writes, and partial resharding, to achieve optimal performance.

01:14:50.560

Finally, we discussed practical considerations for achieving acceptable consistency, navigating risks, and pitfalls that still exist in the ecosystem. Testing with multiple databases is essential to validate our implementations and ensure smooth functioning.

01:15:17.440

Contributing upstream also helps transform technical debt into assets that benefit the broader community.

01:15:55.920

Thank you for listening. DeNA loves Rails!