00:00:10

All right, I guess we'll go ahead and get started. I see a few less people trickling in, but this is a pretty full room. I'm excited!

00:00:17

Hey there, everybody! Welcome to this talk about the weird things that happen in production.

00:00:22

My name is Ryan Laughlin, or rofreg if you know me from the internet.

00:00:27

I am one of the co-founders of Splitwise, which is an app to split expenses with other people.

00:00:35

Right out of the gate, I want to say I'm really excited to be giving this talk. This is actually not only my first RailsConf, but this is the first time I've ever given a conference talk.

00:00:40

Thank you! In particular, I'm really excited to talk about what I think is an important gap in how we think about testing and debugging our applications, both in the Rails community and beyond.

00:00:47

If you want to follow this talk at your own pace or look back at it later, all the slides and the presenter notes I'm reading are up at rofreg.com/talks. So with that out of the way, let's get right into it.

00:01:11

Let's say you're building a new feature for your app. You plan out all the details with your team about how the feature should work. You think through all the edge cases and the possible issues you might encounter.

00:01:38

Then you sit down to actually start writing the code. Maybe you write tests to help ensure that the feature works as intended. You might do code reviews so that your fellow developers can help you spot potential bugs and fix them.

00:01:51

Perhaps you have a staging server or a formal QA process to help catch bugs before things go out the door. So you take your time and fix every single bug you can find. Now it’s time to actually deploy your code to production for the whole world to see.

00:02:07

And congrats! You’re done! You shipped it! Everything's great! Probably. I mean, maybe you’re done. I don’t know about you, but I am a person who makes mistakes. Quite often, when I make an update to an app, I miss some minor bug or another and I end up deploying that bug to production. I’ve worked on the same Rails app for seven years and in that time, I’ve probably shipped several hundred bugs.

00:03:08

So this is a question I ask myself a lot: if my code has bugs in it, how would I even know? I want to be specific here. Note that I’m not asking how we prevent bugs from happening in the first place—that’s not what I’m asking. What I’m asking is how we detect those bugs when they happen.

00:03:44

We have to expect bugs to happen in production. We should expect to make mistakes and we should expect to make them in production. A quick show of hands: raise your hand if you’ve ever deployed something. Okay, raise your hand if you’ve ever deployed a bug. Everybody, right? Like, this is something that happens.

00:04:04

All the best engineers I know have made these kinds of mistakes and that’s not something to be afraid of. It’s not something to be ashamed of. It’s part of being an engineer. Making a mistake is a chance to learn and grow by figuring out what went wrong, and your systems need to accommodate that.

00:04:31

Now you might think, 'This is what tests are for!' This is why we started doing tests as an industry in a more concentrated way over the last 10 to 15 years. Tests catch bugs so that we can fix those bugs before we ship them, and testing is a super important part of this process.

00:05:00

Tests are really good at ensuring that our code generally works as expected, and they’re really good at protecting our code against regressions when we’re making updates to existing code. But tests do not catch everything, and in fact, it’s sort of tautologically impossible for tests to catch everything because we’re the ones who write the tests.

00:05:29

Most of the tests we write are not exhaustive; they test a handful of cases, so if there’s an important edge case we didn’t think about in advance, there may not be any test for that edge case.

00:06:04

You can improve your chances by including other people in this process, whether it's via code reviews or quality assurance. Other people can help you spot issues and problems that you might have missed by yourself. This is a really important part of development. In my experience, two heads are always better than one, and you’re going to have better results as more people look at something before it goes out the door.

00:06:31

But it has the same problem: even a room full of very smart people is occasionally going to miss something. It’s hard to hold an entire system in your head and to think about all the different parts of your app, how they might interact with each other, and how they might interact with this new piece of code.

00:07:03

That brings me to my second idea: your production environment is unique. Your production environment is different than your test environments, it’s different than your development environment, and it’s different than your staging environment. That means you may have bugs that are unique to production that you do not see anywhere else.

00:07:51

Let me give you a quick example. If your app uses a database, which most apps do, I bet that most or all of your tests assume that that database is empty at the start of the test with no pre-existing data.

00:08:03

That is not what your app experiences in production. In production, you’re working with months or even years of pre-existing data, which can leave edge cases that you completely overlooked in your test environment.

00:08:26

And that’s just one way that these two environments differ. There are always going to be differences between your local environment and your production environment, no matter how much effort you put into making them the same.

00:08:40

So if we know we’re going to have bugs and we know that production is a unique environment, then it’s pretty logical that we should be on the lookout for bugs that happen specifically in production.

00:09:05

That means we need to monitor our production environment. There are a few existing tools for doing this, but they’re not perfect, and I think they’re a little bit incomplete for a lot of apps.

00:09:33

The first line of defense here is exception reporting. These are things like Rollbar, Sentry, or Airbrake. You can use the standalone exception notification gem if you don’t want to use a third party. These are tools that help you by sending you an alert any time an unexpected exception bubbles up in your app.

00:10:03

And this is really great! If my app explodes in some unexpected way, I want to know. However, there are really big weaknesses to exception reporting. First of all, exception reporting can be really noisy, especially if you’re running a big app at scale.

00:10:36

You will get a lot of errors that are not your fault. People will submit requests with invalid string encodings or dates that don’t exist, or they will scan your app for vulnerabilities and submit tons of garbage data—just lots of really odd stuff that happens when you’re a real app in the real world.

00:11:06

While you can tune your exception reporting to screen out a lot of these false alarms, in my experience, there will always be new and exciting exception types caused by really odd, unimportant user behavior. The consequence of this is that because there are so many unimportant alerts, the signal-to-noise ratio is really low.

00:11:52

When you have one critical exception in the middle of 20 false alarms, it’s easy to overlook it. It’s like the boy who cried wolf, right? When something seriously happens and you need to pay attention to it, you might not be because you’ve filtered out in your brain the 20 other false alarms that came beforehand.

00:12:16

Also, very importantly, exception reporting can only catch exceptions. So if you’re only looking for exceptions, there are entire categories of bugs that you might miss where the code runs without crashing but returns the wrong result.

00:12:51

Here’s a very simple example: a typo in string interpolation. If you put parentheses around a name instead of curly brackets, the code still runs and still returns a string. This won’t trigger any exception reporting, but it’s very clearly doing the wrong thing.
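
A minimal sketch of that kind of typo (the method names are hypothetical and not taken from the talk's slides). Both methods run without raising, but only one of them interpolates the name:

```ruby
# Intended version: curly braces trigger string interpolation.
def greeting(name)
  "Hello, #{name}!"
end

# Typo version: parentheses are just literal characters in the string,
# so nothing is interpolated and no exception is raised.
def greeting_with_typo(name)
  "Hello, #(name)!"
end

puts greeting("Ada")           # prints "Hello, Ada!"
puts greeting_with_typo("Ada") # prints "Hello, #(name)!"
```

An exception tracker would stay silent here, because from Ruby's point of view nothing went wrong.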

00:13:14

This is a contrived example, but it’s surprisingly easy for this kind of issue to slip by in production. So what else do we have besides exception reporting? We have bug reports.

00:13:29

This is often the last line of defense in real production apps—reports that come directly from your users. If you break something hard enough, your users will tell you about it. But there are big problems with this approach, too, for kind of obvious reasons.

00:13:59

First of all, it’s a really bad experience. Bugs make people frustrated, angry, and confused, and it makes them lose trust in the thing that you’ve built. Nobody likes using buggy software; it’s just a bad experience.

00:14:14

Second, a lot of people won’t bother to report issues. It takes time to write somebody an email. If I see an obvious problem with your app or your website, nine times out of ten, I’m just going to leave your site. I’m not necessarily going to spend the time to write you a nice long bug report with full repro steps, especially if I’m a non-technical person.

00:14:56

It’s a lot to ask from your users, and not all of them are going to do it. Finally, users can only report the problems that they actually see. If you have a bug in an internal system or a background job or something like that, it’s very possible that no one will notice for quite a long time.

00:15:12

The bug could cause a lot of damage before anyone is even aware of its existence. So if something wasn’t caught by tests, wasn’t caught by QA, wasn’t caught by exception reporting, and wasn’t caught by users’ bug reports, how the heck are we supposed to know about it at all?

00:15:27

How can we catch silent bugs? The very simple answer is that you can’t. You cannot fix something that you don’t know about. So the question is not how do we catch silent bugs, the question is: how do we turn silent bugs into noisy bugs?

00:16:02

How do we make the low-level problems that we don’t know about actually make a noise? We need a system that makes noise. We need a system that tells us when something unexpected happens so that we can investigate what went wrong.

00:16:41

We’ve gotten pretty good at this in development; this is where test suites really shine. When you make a change to your app and suddenly a dozen tests fail, you know that something unexpected has gone wrong and you need to look into it further so that you can fix it.

00:17:29

What would be really useful is something that’s like a test suite but focused on production—something that doesn’t test specific edge cases but monitors your app for the existence of issues in general.

00:17:50

And that’s where checkups come in. Checkups are tests for production in the same way that a test suite tells you when something is broken in development.

00:18:01

A checkup suite tells you when something has broken in production. Let me walk you through how it works.

00:18:29

First of all, to write a checkup, we need to declare some expectations about how our app is supposed to behave when everything's going well. For example, in my app, I expect every user to have a valid email address.

00:18:46

If I were to write a checkup, it would be a block of code that helps me verify: does every user have a valid email address? I don't actually know unless something checks this.

00:19:13

The checkup then runs on a regular basis, many times per day, checking to see if anything unusual has happened. This is important in production because maybe all my users had valid email addresses at 2:00 p.m. and again at 3:00 p.m., but something may happen between 3:00 and 4:00.

00:19:34

Even if I haven’t deployed anything new recently, it's possible that a new bug may have bubbled to the surface for the first time since my last deploy, and a checkup can help you detect when that happens.

00:20:00

Finally, if your checkup fails, then you need to be alerted so that you can investigate what happened and fix the underlying bug. Once you get that alert, you can start to figure out what the problem is, and that’s the whole idea.
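
The three parts described above (an expectation, a recurring run, and an alert) can be sketched in plain Ruby. This is only an illustration under stated assumptions: `User`, `EMAIL_PATTERN`, and `run_email_checkup` are hypothetical names, the data is in memory rather than in a database, and the alert is any callable you pass in.

```ruby
# Hypothetical stand-in for a user record; a real app would query the database.
User = Struct.new(:id, :email)

# Deliberately loose pattern, for illustration only.
EMAIL_PATTERN = /\A[^@\s]+@[^@\s]+\z/

# Expectation: every user has a valid email address.
# Returns the users that violate it.
def users_with_invalid_email(users)
  users.reject { |user| user.email.to_s.match?(EMAIL_PATTERN) }
end

# Runs the checkup and hands any failures to an alert callable
# (for example, something that emails the team or posts to a chat channel).
def run_email_checkup(users, alert:)
  failures = users_with_invalid_email(users)
  unless failures.empty?
    alert.call("#{failures.size} user(s) with an invalid email: #{failures.map(&:id)}")
  end
  failures
end

users = [User.new(1, "ada@example.com"), User.new(2, "not-an-email"), User.new(3, nil)]
run_email_checkup(users, alert: ->(message) { warn message })
```

Scheduling is left out on purpose: the same method could be called from a cron job, a callback, or a background job.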

00:20:21

It’s pretty simple, but it’s really, really powerful because what checkups do is help you detect symptoms so that you can fix the cause. They are the number one tool I know for discovering issues you didn’t even know about.

00:21:10

It’s a lot like getting a check-up with a doctor in real life. You go not expecting to find anything wrong, but if you find something, detecting that problem early and fixing it before it becomes a bigger issue makes a huge difference and is way less painful.

00:21:34

An ounce of prevention is worth a pound of cure. To illustrate, let me give you a real example that we had a couple of years ago when we added multiple email support for our users.

00:22:22

At Splitwise, we have a user model. Surprise! For a long time, it was a really simple user model; we made it with Authlogic. A user had one email address—simple enough.

00:22:47

But then, we decided to add multiple email address support because that’s a useful feature people would take advantage of. We created a new email address model and added a has_many relationship so one user could have many email addresses.

00:23:06

As we polished up this feature and started writing tests, we realized we needed to ensure that all users have at least one email address—that's important. I didn't want any users without email addresses.

00:23:44

We added the validation to ensure every user has at least one email address. This worked great! Our test passed, and everything was perfect—it’s a very straightforward piece of code.

00:24:10

I checked before this talk; if you Google 'Rails has at least one,' this is the first Stack Overflow result. It's the standard way to do it if you’re stumbling around on the internet trying to figure out what to do.

00:24:44

We wrote a whole bunch of tests to ensure this worked the way we intended. If you tried to delete a user’s last email address, the validation wouldn’t let you continue. So this was well-tested.

00:25:11

Now, I want you to think about this code for a few seconds, and consider what might go wrong. And again, let me be specific: I’m not asking you to figure out what the bug is, I’m asking you to think about what might happen if there is a bug.

00:25:37

If there is a bug, how will we find out? What is the thing that we will notice in production? Checkups are really good when you have a hunch that something might go wrong. You think your code’s fine, but you want some extra insurance to be sure.

00:26:17

This is the same reason we write tests: when I write code in general, I’m pretty confident that I’ve written my code correctly. However, tests give me more confidence. They double-check to ensure I haven’t missed something.

00:26:37

In this case, we thought, 'Well, we had that temporary bug where someone ended up with no email addresses till we wrote tests for it, so maybe we should write a checkup for that.'

00:27:06

We wrote a checkup to fetch all users who had recently updated their accounts and then check to see if there are any users with zero email addresses. We run this once per hour. If we find any users who don’t have email addresses, then this checkup sends an alert.

00:27:30

This checkup is literally just five lines of code. It’s very simple! It’s not some crazy complicated technical magic; it’s the kind of thing you would write from the Rails console if you wanted to check this yourself.
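
The slide code is not reproduced in this transcript, so the following is only a sketch of the same idea in plain Ruby. `UserRecord` and `users_without_email` are hypothetical names, and the in-memory array stands in for a query over recently updated users.

```ruby
# Hypothetical in-memory stand-in for a user and its email addresses.
UserRecord = Struct.new(:id, :email_addresses, :updated_at)

# Returns recently updated users that have no email addresses at all.
def users_without_email(users, since:)
  users
    .select { |user| user.updated_at >= since }
    .select { |user| user.email_addresses.empty? }
end

now = Time.now
users = [
  UserRecord.new(1, ["ada@example.com"], now - 600),
  UserRecord.new(2, [], now - 1200), # updated recently, no emails: should be flagged
  UserRecord.new(3, [], now - 7200)  # outside the one-hour window, so skipped
]

broken = users_without_email(users, since: now - 3600)
warn "Checkup failed, users without email: #{broken.map(&:id)}" unless broken.empty?
```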

00:28:01

So we deployed our new feature and included this checkup to make sure we hadn’t missed anything. For the first day or two, everything was great. After a few days, sure enough, our little checkup sent us an alert.

00:28:34

Someone had slipped through the cracks; there was a user who had ended up with zero email addresses despite our tests, our conscious attention, and all of our best planning.

00:29:09

We investigated, looked through our logs for this user, and realized something interesting: this user had two email addresses. They had added a second email address and then tried to delete both of them at the same time.

00:29:29

We realized there was a race condition that we hadn’t anticipated during our initial testing. If you have a user with two email addresses and they submit two different requests at the same time, each request tries to delete a different email address.

00:29:46

Both requests pass validation: from the point of view of each request, the user still has one email address left, so Rails thinks both requests are totally valid. And because both pass validation, both email addresses get fully deleted.

00:30:23

As a result, you end up with an invalid user with zero email addresses—that's a really obvious bug now that we know it's there, but we missed it in our first pass.
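
The interleaving can be reproduced deterministically in plain Ruby. This is a simulation rather than real concurrent Rails requests: `UserWithEmails`, `can_delete?`, and `delete!` are hypothetical names, and the two "requests" are simply run step by step in the unlucky order.

```ruby
# Hypothetical in-memory user; the array stands in for the email addresses table.
class UserWithEmails
  attr_reader :emails

  def initialize(emails)
    @emails = emails.dup
  end

  # Mirrors an "at least one email address" validation: deleting is allowed
  # only if at least one address would remain afterwards.
  def can_delete?(email)
    (@emails - [email]).size >= 1
  end

  def delete!(email)
    @emails.delete(email)
  end
end

def simulate_simultaneous_deletes
  user = UserWithEmails.new(["a@example.com", "b@example.com"])

  # Step 1: both requests validate against the same starting state.
  request_one_ok = user.can_delete?("a@example.com") # true, "b" would remain
  request_two_ok = user.can_delete?("b@example.com") # true, "a" would remain

  # Step 2: both requests then perform their delete.
  user.delete!("a@example.com") if request_one_ok
  user.delete!("b@example.com") if request_two_ok

  user.emails
end

puts simulate_simultaneous_deletes.size # prints 0: each check passed, yet the result is invalid
```

Run one after the other instead, the second `can_delete?` would return false, which is why tests that exercise requests sequentially never see the problem.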

00:30:46

Thankfully, because we’d written a checkup, we were able to discover this bug quickly the first time it happened. Because we discovered it so quickly, we could fix it right away.

00:31:11

This is an example of how a few lines of code running occasionally on a repeated basis can make a difference—help you spot something that otherwise you would have missed that could significantly impact user behavior and the function of your app.

00:31:52

For example, a user with zero email addresses wouldn’t be able to log back into their account, and depending on what you do, that could be really bad.

00:32:14

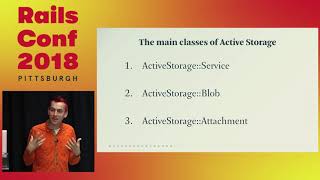

So how do you write a checkup? How do you apply this? Here’s the same little short code snippet from before, and there are a couple of ways we can turn this into a fully functional checkup.

00:32:33

Method number one is by turning it into a rake task. This is how we do most of our checkups. It’s pretty easy to set up a rake task as a recurring cron job, so that it gets called on a regular and repeating basis.
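
As a rough sketch of the rake-task approach, written as a standalone script so it can run outside a Rails app: the task name, the placeholder data, and the `CHECKUP_ALERTS` collector are all assumptions for illustration. In a Rails app the task would normally live under `lib/tasks`, declare `:environment` as a prerequisite so the models load, and query the database instead of a hard-coded array.

```ruby
require "rake"

# Hypothetical collector so the sketch has somewhere to send alerts.
CHECKUP_ALERTS = []

namespace :checkups do
  desc "Alert if any user has zero email addresses"
  task :email_addresses do
    # Placeholder data; a real task would query the database here.
    users = [{ id: 1, emails: ["ada@example.com"] }, { id: 2, emails: [] }]
    broken = users.select { |user| user[:emails].empty? }
    CHECKUP_ALERTS << "Users without email: #{broken.map { |u| u[:id] }}" unless broken.empty?
  end
end

# Invoke the task directly, as `rake checkups:email_addresses` would.
Rake::Task["checkups:email_addresses"].invoke
puts CHECKUP_ALERTS
```

A scheduler then only needs to run the rake command at whatever interval suits the check.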

00:33:04

We use Heroku, so we use Heroku Scheduler for this, which makes it easy to configure a rake task to get called once a day, once an hour, or once every ten minutes.

00:33:37

Another good option is an after_commit hook or an after_save hook. This is an Active Record callback that executes after your model has been fully written to the database. The after_commit hook, in particular, is a good place to catch this.

00:33:58

If you’ve accidentally written something incorrect to your database, like a user with zero email addresses, this is an excellent place to catch it. I should note that this approach does add some overhead every time you save an Active Record object.

00:34:22

However, it gives you immediate feedback about any unusual errors. This can be a really good option if you’re writing a checkup about a mission-critical part of your app.

00:34:58

You can also take a split-the-difference approach and perform checkups in background jobs. This is a great way to perform checkups on demand in response to a specific user action like updating a record or making some other change, without slowing down the actual request too much.

00:35:23

You can verify that everything’s in order just a few seconds later, and if it’s not, you can respond and fix your code.

00:35:46

Honestly, that’s just the beginning. Checkups are intentionally a pretty general idea, and there are lots of places where you can use this concept. We have places where we run inline checks in our controller actions to ensure that things progress the way they’re expected.

00:36:08

We’ve used them in service objects to encapsulate them nicely and understand exactly what’s going on. It’s a very broad concept that can be used in many different places.

00:36:41

Okay, a different question: when should I write a checkup? What kinds of problems can checkups catch? Developing an intuition for this can be tough, like when you started writing tests for the first time. Should you test everything? Maybe not.

00:37:03

As we’ve seen, checkups are really good at sniffing out race conditions, which I want to highlight. I think race conditions are probably the best example of a problem that is really rare in development and testing but absolutely common in production.

00:37:46

If you’re like me, you probably find thinking about race conditions really hard. My brain is not built to think in parallel threads, but in production, that’s what my app faces all the time.

00:38:04

It’s extremely common not only to see many users trying to use your app at the same time, but to see an individual user submitting multiple simultaneous requests. Checkups can help you detect if something weird happens due to this.

00:38:34

Invalid data is another issue that comes up pretty commonly in production. The longer you run an app in production, the more likely you are to accumulate some weird, malformed, improper records in your database, whether it's MySQL or other data stores.

00:39:02

Think about caching layers, Redis, Memcache, even static files in S3. As you accumulate stuff, the likelihood of some of it being in a weird format that you didn’t expect just keeps growing.

00:39:33

Going back to the zero email addresses problem, we found the bug, wrote some tests, fixed it, and deployed. However, the problem was only fixed going forward.

00:40:09

If we wanted to solve this problem completely, we needed to go back and fix the existing invalid records that were now in our database. There were still several users who didn’t have email addresses.

00:40:31

Just because our test suite passed doesn’t mean it’s impossible for old users’ records to still be invalid. We had to hand-fix those records before the issue was fully resolved.

00:41:03

This is a common pattern and a difference between development, testing, and production. In development, on purpose, you generally work with a clean slate; you're encouraged to clean out your database very regularly.

00:41:33

But that’s not how production works. In production, you might have malformed records caused by bugs that happened months or years ago. Most of the time, that ends up being okay.

00:42:00

Almost every app has a couple of weird bits of data floating around somewhere. But sometimes that malformed data is really important to catch and fix, and checkups are an excellent tool for sniffing that out.

00:42:34

I also want to highlight this method: raise your hand if you’ve ever used the update_column method in Active Record or the update_columns method. So, you might have invalid data in your database.

00:43:09

This skips validations, which is kind of the point. There are times when you want to bypass validations, but if you've ever used this—even once from a console, not in code that you ship—it’s possible that your data might not check out.

00:43:39

Checkups are also a really good tool for when you know there's a bug but have no idea how to fix it yet. You can use a checkup to gather additional diagnostic information about a bug that you don't understand.

00:44:16

For example, say there’s a model that does something weird one time in a thousand, and you don’t know what’s causing it. If we run a check every time we make a change to this model, we can record which types of users and interactions are causing it.

00:44:56

Depending on the bug, we might even be able to paper over it in real time. If there's a programmatic way to resolve it once discovered, we can write a checkup that detects the issue, tells us, and actually fixes it, minimizing impact in production.

00:45:36

Finally, checkups are really valuable for anyone doing ops work in production. To be explicit, the whole idea of a checkup is borrowed from ops—checking if systems are healthy.

00:46:15

So if you're someone doing ops, this is useful. It can also be useful even if you’re not in charge of ops for your application because checkups can alert you to unexpected changes in behavior caused not by external circumstances, but by your code.

00:46:42

For instance, if your app usually processes a thousand background jobs a day and suddenly starts processing a hundred thousand background jobs a day, that could indicate a bug in your code. You may have an infinite loop somewhere.
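
A volume check like this can be very small. The sketch below is an assumption-laden illustration: `job_volume_unusual?` is a hypothetical helper, and the factor of 10 is an arbitrary threshold rather than a recommended value.

```ruby
# Returns true when today's job count is far outside the usual daily volume,
# in either direction. `factor` is an arbitrary illustrative threshold.
def job_volume_unusual?(todays_count, typical_count, factor: 10)
  todays_count > typical_count * factor || todays_count * factor < typical_count
end

puts job_volume_unusual?(100_000, 1_000) # prints true
puts job_volume_unusual?(1_200, 1_000)   # prints false
```

Checking both directions also catches the case where jobs quietly stop being processed.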

00:47:26

An early warning about these types of things gives you a chance to say, 'Okay, wait a minute, did we change something? Why is this happening?' I know a lot of Rails developers can relate to this.

00:48:00

Rails allows small teams to do a lot, and I know, personally, I'm someone who didn’t have ops experience when I started my job, and Rails made that part of the job easy to learn. I know a lot of small apps where developers wear many hats.

00:48:40

At Splitwise, we have an entire suite of checkups; as I said, we think of it like a test suite. It's not just where's the test—it's where's the checkup? Some checkups run daily, some every hour, and some every few minutes.

00:49:12

Some are exhaustive, meaning they check every single record that’s been recently updated because we don’t want to miss a single problem. This is a really good approach if you have something mission-critical.

00:49:47

Other times, they’re just spot checks. They’re not meant to catch every single error that happens, but they let us know if an error is happening frequently enough that it could become a big problem. They give us some sense of whether something is beginning to degrade, and whether that degradation is within acceptable parameters.

00:50:14

I want to share another example where a checkup saved my bacon. My company, Splitwise, helps people share expenses with each other, and one of the most important things we do is calculate balances.

00:50:40

For example, you owe Ada $56. It’s crucial that we get this calculation right. We have tests to validate that everything adds up correctly in every possible edge case; this is the bread and butter of what we do.

00:51:09

One random Tuesday, everything went wrong. Suddenly, our code started returning different answers for the same calculation. When I asked, 'How much do I owe Ada?' our Rails app might reply $56, but it might also reply $139.

00:51:42

The result was completely random; it was like flipping a coin. You would randomly get one of these two possible answers back every time you called user.balance.

00:52:01

This was obviously a huge user-facing problem. It’s massively confusing, and seeing the wrong balance would destroy a user’s faith in our app. This is literally our one job: to keep track of your expenses for you.

00:52:30

If we can’t do that, why would you use Splitwise at all? Here’s the kicker: we hadn’t deployed anything new that day. In fact, we hadn’t touched anything related to this balance calculation code in weeks.

00:53:02

Nothing had changed at all; we had no reason to expect that anything should go wrong. And yet, at 1 p.m. on that Tuesday, our checkup went off. We had a checkup that said, 'Hey, you use caching in your balance layer.'

00:53:29

It’s crucial to ensure cached values are correct, so why don’t we grab everyone who’s been updated recently and check that cached balance value against what the value would be if we re-calculated from scratch?

00:53:56

By continuously comparing these two values, we could verify that our cache-optimized balance method is working as expected. If anything went wrong, we could not only raise an alarm about it, but we could clear the cache and get rid of the incorrect value.
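
The compare-and-clear idea can be sketched in plain Ruby. Everything here is hypothetical: `Ledger`, its hash-based cache, and `check_cached_balances` are illustrative names, and amounts are integer cents. It is not Splitwise's actual implementation.

```ruby
# Hypothetical ledger: `expenses` is the source of truth, `cache` holds
# precomputed balances keyed by user id.
class Ledger
  attr_reader :cache

  def initialize(expenses)
    @expenses = expenses # { user_id => [amounts in cents] }
    @cache = {}
  end

  def recalculated_balance(user_id)
    @expenses.fetch(user_id, []).sum
  end

  def cached_balance(user_id)
    @cache[user_id] ||= recalculated_balance(user_id)
  end
end

# Compares cached and recalculated balances. On a mismatch it alerts and
# clears the stale entry so the next read recomputes it.
# Returns the ids of the users whose cached balance was wrong.
def check_cached_balances(ledger, user_ids, alert:)
  user_ids.select do |id|
    cached = ledger.cached_balance(id)
    fresh = ledger.recalculated_balance(id)
    next false if cached == fresh

    alert.call("Balance mismatch for user #{id}: cached #{cached}, recalculated #{fresh}")
    ledger.cache.delete(id)
    true
  end
end
```

Clearing the entry is what turns the checkup from a detector into a mitigation: the alert still fires, but the next read is already correct.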

00:54:22

And holy crap, that literally fixed the problem! Not only did this checkup alert us about the issue right away, but it actually mitigated the problem in real-time while we scrambled to figure out the cause and fix it.

00:54:45

In the end, something that could have affected thousands of users and generated angry emails ended up affecting zero. Nobody noticed; nobody contacted us. If you’re curious about the actual underlying details, this turned out to be a critical infrastructure problem with our third-party caching provider.

00:55:15

They had an issue with a cluster. We detected it so fast that we alerted them instead of them alerting us. What could have been one of the worst days turned into one of the best days.

00:55:37

This is a day I’m really proud of—one where we not only had the foresight to fix our own problems, but helped this third party get on the problem faster. As an engineer, that’s all I can ask for: building something stable that helps not only us, but helps others.

00:56:25

So I want to wrap up with a few final thoughts about checkups. First, I want to be very explicit: this is a work in progress. Checkups are literally an idea that I came up with and that we use internally at Splitwise.

00:56:44

As I mentioned at the start, this is my first big public talk, and this is my first time trying to spread this idea outside my own workplace. I know for a fact this is a common issue because I’ve talked to friends at different companies, and many have internal systems that double-check their production environment.

00:57:15

The problem is that almost no one talks about those systems in public; they’re very siloed. If you’re a Rails developer building a new app, the way you learn this stuff is through trial and error and painful experience.

00:57:38

It’s not yet part of the standard discussion about things that happen when you’re building and deploying an app. I think it’s because we don’t have words for it yet. We don’t have a pre-existing vocabulary about how to double-check our production systems.

00:58:10

Because we don’t have that vocabulary, we don't have best practices either. We’re not thinking about this problem in a communal way; we’re not learning from each other yet. I have years of battle-tested experience, and if it weren’t for this talk, I don’t know how I would share it.

00:58:44

There are ways, but there’s not an ongoing conversation about how to sniff out production-specific issues and make that a healthy part of your standard workflow. My hope is that the idea and concept of a checkup can be a starting point.

00:59:14

I think it’s a good intuitive framing for how to sniff out unexpected bugs in production. If you think about your own apps through this lens, you will begin to see how checkups can help you build something more robust and healthy.

01:00:00

I honestly believe that every app should have a checkup suite once it’s big enough, just like a test suite. You can deploy a successful app without tests or without checkups, but you’re leaving yourself blind to a lot of potential problems and headaches.

01:00:34

With that in mind, I know that building a whole checkup suite sounds pretty intimidating, so here’s my suggestion for one specific small place to start: Active Record has this method called valid?. It runs your validations and returns true or false.

01:01:48

You can easily write five lines of code that grab all the recent records in your database, iterate through them, and call valid? on each one to ensure the persisted data still passes validation.
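
A plain-Ruby sketch of that sweep, under stated assumptions: `Expense` is a hypothetical stand-in that only needs to respond to `valid?`, `id`, and `updated_at`, and `invalid_recent_ids` is an illustrative helper. With Active Record, the same loop would iterate over a query of recently updated records and call the model's own `valid?`.

```ruby
# Hypothetical stand-in for a model with validations.
Expense = Struct.new(:id, :amount_cents, :updated_at) do
  def valid?
    amount_cents.is_a?(Integer) && amount_cents.positive?
  end
end

# Returns the ids of recently updated records that fail their own validations.
def invalid_recent_ids(records, since:)
  records
    .select { |record| record.updated_at >= since }
    .reject(&:valid?)
    .map(&:id)
end

now = Time.now
records = [
  Expense.new(1, 5600, now - 60),
  Expense.new(2, -100, now - 120), # invalid and recent: should be reported
  Expense.new(3, nil, now - 86_400) # invalid but older than the window
]

puts invalid_recent_ids(records, since: now - 3600).inspect # prints [2]
```

Widening the `since` window to cover all historical records turns the same loop into a one-off audit.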

01:02:21

That’s literally five lines of code; it’s a pretty easy place to start. If you run this on all your Active Record models, I’m confident you’ll find some invalid records that managed to weasel their way into your database.

01:02:47

You will be surprised at what you find, especially if you go back in time and run this on all your historical records, not just what happened in the last hour. Finding problems is just the start of the battle—once you find a problem, you can start to fix it.

01:03:24

Again, my name is Ryan Laughlin. I’m rofreg on Twitter. All these slides are at rofreg.com/talks if you want to reference them. I care a lot about this idea, so I’d love to answer questions and talk about this in general during the conference. Come find me.