00:00:10

So I guess we'll start. It's my first time at RailsConf, and it's also the first time I'm seeing snow in my life. This is the warmest set of clothes that I could find because back where I'm from, even if you don't have a t-shirt, it's fine because it's hot and humid. I'm from India, by the way. So just a shout out to my company and my team back home, Right Panthers. We are a Ruby on Rails shop based in Cochin, India. We have clients all around the world and we help companies scale their dev teams when they need it, and also help build MVPs. So if you need any help, just come talk to me.

00:00:39

This is me everywhere on the web; I'm known as Code Wretches. If you find some place where Code Wretches is not registered, please let me know so I can get it registered there too. I organize a lot of Ruby events back home in my state and I recently just organized Rails Girls Cochin. Thank you! I'm a Ruby developer mainly, but I do flirt with Elixir, and I'm one of the crazy people who have a Crystal application in production. If you guys are into learning something new, try out Crystal. We can definitely run it in production. Another thing you may notice about me is that I love stickers. This is my old laptop, and it's my new laptop, so you guys have to help me fill this laptop as well. I'll give you free branding across the world. I'm going to tie one off this. If you need stickers, I have a few with me, so please meet me after the talk.

00:01:39

This is where I'm from; you can see a lot of elephants there, and that's a real picture. My city is a coastal city in the south of India. If you guys ever come to India and happen to be around this place, just tweet at me, and you'll have a lot of fun-friendly Ruby developers that you can hang out with. There's a fun fact about India that I always like mentioning: we have 22 official languages and thousands of spoken languages with fifty thousand dialects. I know three of them, so if you see two Indians talking to each other in English, don't be surprised.

00:02:40

My talk is actually a story about me, a novice developer who wrote the first lines of code for a web application. Everyone was saying that it was only going to be a thousand rows of data with about ten users. However, it grew so big that we ended up processing gigabytes of data every hour. This was me back in 2014. So this talk is about the last four years of my journey, and I'm giving it to you guys for free. All these learnings have helped me, and they helped our client as well because we didn't have to invest a lot in hardware since we didn't have that much money.

00:03:16

We are all craftsmen, and we can't do anything without our tools. I have to give due credit to my tools. I call them the “oranges”, and they're really good. They work together like superheroes: Ruby is Captain America, Elixir is Ironman, and the database is the Hulk. The problem is that we make the Hulk carry our suitcases, which is the cost of everything. We shouldn't be afraid to use it.

00:04:10

Now, this talk is about how we can offload a lot of tasks we do with our Rails code to our database, so that we are actually making full use of the stack we have. I have done all this in production, so you don’t have to feel great about doing it. We'll talk about several concepts related to the database such as query planner, indexing, attribute pre-loading, views, generating JSON, and asynchronous commits.

00:05:10

Let's start with the brain of the database. As we all know, the database is general-purpose software. It wasn't built for a single industry's use; it’s built for healthcare, aviation, banking, and even used by Twitter. The question is, how does it handle all these scenarios? A general-purpose software does nothing more than define a schema and takes care of data for all industries. The truth is, it doesn't. The database doesn’t know what scenarios it'll be put under, and it's up to us to optimize for it.

00:06:05

There’s cool syntax that most of you might be hesitant to use; it defines what we need in terms of data but doesn’t dictate how we should retrieve that data or how fast to retrieve it. I’m not telling it that if it doesn’t find it in time, I’m going to lose my job. So where are the decisions made? That’s where the query planner comes in; it’s the brain of your database. We need to understand how it works to optimize our systems.

00:07:14

The plan is created by the database whenever we run a query. The plan literally represents the cost of running that query. The last line I want to mention is that this plan assumes it has the best one. However, we as humans know there are oftentimes better options. Therefore, we need to check what the query plan knows to change it and actually improve performance.

00:08:02

Since version 4.2, PostgreSQL has included the EXPLAIN method, which gives us the plan for our queries. Here are two examples from my production environment. We can see that the cost of one query is more than the other, and it also mentions whether it will perform a sequential scan or an index scan. It has assumed that the best way is to do a sequential scan rather than an index scan because there is no index. We check the query plan to identify bottlenecks and fix them to make the plan choose faster methods.

00:09:02

A simple tip here is that, where you saw SQL, you can get the same format in XML, JSON—whichever you're comfortable with. The golden rule is to index everything, so we do index scans. However, I found out that it's not better to index everything. Indexing is a special lookup table that the database can use to speed up data retrieval. An index is like a pointer that points to the actual row where our data is.

00:09:45

Something interesting I found is that databases are incredibly smart, and even if you have indexes, they might not actually use them. They may determine the cost of performing a sequential scan is lower. Imagine you have a hundred million rows; in that case, if you have not just a unique item but multiple rows with the same item, the database prefers to perform the sequential scan to fetch data faster. If you have indexed a column that returns a short, medium, and long size and you are searching for one of them, it may opt for a sequential scan.

00:10:38

The things that you should index are the primary key and the foreign key. The primary key is indexed by Rails by default. Additionally, index all the columns that are going into a WHERE clause, index join tables, and also index date columns to speed up your reports. If you have a condition in your WHERE clause, such as wanting entries with a price greater than $90 or less than $30, you can build a partial index. Do not index fields that you are going to read and write frequently, or tables that will remain small forever, like a settings table.

00:11:26

Next, let's discuss attribute pre-loading. Most databases come with arrays; PostgreSQL is widely used. I’m going to explain how we do this in Rails, particularly when we need to find all tasks. This includes attacks that run two queries together, and we get objects for each task. What I suggest is writing a bit of SQL to gather everything into an array so that we end up with a single object, which can run three times faster. Imagine populating a report view—it doesn’t make sense to have dozens of objects you won’t work on. You just need the data to populate your view; if you need reports or if you’re printing PDFs, having too many objects isn’t useful, particularly with large databases.

00:12:30

So we gather everything in an array, leading to just one object. We then loop through that single object after the query runs. This is an efficient use of arrays to make your system faster. Next, let’s talk about views. We all know that views are essential because the design pattern of Model-View-Controller can be easily implemented with Rails. Back when I started, learning MVC seemed like a lot, but Rails simplified it for me so that I was only following a pattern.

00:13:19

However, it’s crucial not to run business logic within the view. If you find yourself doing this, don't! A view should solely display what we need. For instance, imagine you have a report of all transactions that occur in a day. To generate that view, you may need to run three different queries: one for orders, one for products, and one for deliveries. Each time you visit that particular page, you are running all these queries, leading to performance issues.

00:14:08

In databases, if you have a frequently run query, it's worthwhile creating a view so that you don’t have to run the query repeatedly. Views are much like HTML views, but we do it that way because it simplifies things. We can implement an alternative, but keeping everything separate makes life easier. For instance, if you need to find all the managers in your company, you simply create a view to select the ID, name, and email of all managers, allowing you to pull that information effortlessly.

00:15:05

One excellent aspect of views is that they don't take up physical space on your disk; they reside in the RAM of your database server. The speed gained here is that once you create a view, the system doesn’t need to recalculate costs again. The plan to run it has been established beforehand, and since views are virtual tables—often called pseudo tables—they don’t take up actual storage space.

00:15:58

So, all the prior steps that the database takes to run the query are pre-established, allowing for direct execution without extra overhead. Given that views are pseudo tables, they do not occupy storage, but this has led to the introduction of materialized views, where actual data is stored in physical space. Initially, this concept was introduced by Oracle and has now been implemented in PostgreSQL, Microsoft SQL Server, IBM DB2, and more.

00:16:42

The question then arises: how do you use materialized views in Ruby? I know I'm at RailsConf talking more about databases than Ruby code, but thanks to Active Record, you don't need fancy gems to create such views. You simply write a bit of SQL here within a migration to create the view, and then create a model for it. Set the table name to the name of your pseudo table, and that’s it! You can perform all the usual actions like selects, where, and finds. Everything happens on the pseudo table with data from your view, allowing speedier processing and filtering.

00:17:42

When you have common queries and you want to run filters, the system becomes faster. You won’t need to run eight queries just to find someone who made the maximum number of sales. This simplifies everything. You need to define the ID of this table because it doesn’t have its own ID, meaning you must designate how it works. The benchmark shows a dramatic improvement in performance.

00:18:31

The takeaway is that fetching data from your views is significantly faster. You can capture commonly-used joins and filters while transferring data-intensive processing to the database instead of Ruby. We've traditionally placed a lot of the filtering on the Ruby side; now we can offload that work to the database side which makes live filtering of complex associations feasible. Not only that, but these views can also be indexed due to their physical storage.

00:19:25

The downside is that using views will consume more RAM and storage—it is something manageable nowadays since you can easily pay for the added capacity. Another concern is that if the data gets refreshed daily, certain older versions may lead to a table lock where no one else can read from it. Recent improvements in PostgreSQL have implemented parallelism, allowing access during refreshes and making everything run smoother.

00:20:31

The best use cases for these indexed materialized views are historical data because this data is static and won’t change, unlike the future. For example, sales data from seven days ago or sales data from a month ago can be stored using this technique. This allows your system to work efficiently while providing live results such as graphs, all while keeping current data retrieval practices intact.

00:21:28

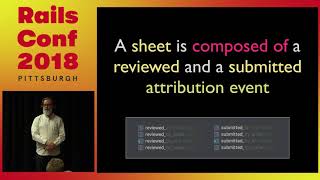

One critical takeaway from my talk today is that we moved our APIs to the database! Yes, we indeed did this as JSON has become essential, acting as a glue between your front-end and back-end. All modern JS frameworks and APIs are utilizing JSON. Thus, it's a natural evolution for databases to support such a format. Databases now even support formats like XML and allow for custom data types.

00:22:24

If you want to convert a row in your table to JSON, you simply call row.to_json in Ruby, and it handles the conversion. In practice, we don’t convert the entire row; instead, we utilize our queries to receive IDs and emails from users, and return that as the response. This is more efficient than converting the entire object, and we can include more complex logic if needed. If you have a view, passing it through becomes simple for the user as they can access their projects all at once.

00:23:45

Even if a schema doesn’t exist within the database, we can generate random data to assist in creating our JSON responses. We use methods that don’t require existing schemas to build structures, effectively filling in the gaps as needed. However, there are several gems that can help format JSON for your models properly, so explore options like Sours if you're looking for straightforward helpers.

00:24:45

However, for more complex cases, SQL will inevitably come into the mix. No matter who claims to avoid it, understanding how SQL functions is essential. I came from a PHP background originally, and Rails initially presented SQL as if I didn’t have to write it—just a simple where condition, and that was mind-blowing. However, as systems expand, complexities arise, necessitating a return to SQL.

00:25:45

Like me, if you wish to retain as much functionality in Ruby, then create a view for it and utilize the gem to generate JSON. The benchmarks show that transitioning our API requests from two seconds down to two hundred milliseconds is extremely desirable, leading to high-traffic capabilities with everything shooting up.

00:26:41

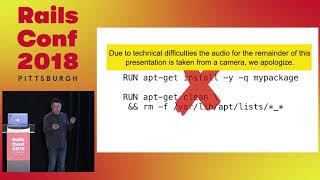

The last topic I want to cover is synchronous commits for systems managing large writes. When persisting data into a database, we often leave non-essential operations to something like Kafka and logs. But if you need to maintain all transactions within the database itself, remember that databases prioritize reliability. Data must be stored adequately, and this reliability sometimes sacrifices writing speed.

00:27:60

Most databases, such as PostgreSQL, are known for their slower writes and faster reads due to their waiting for confirmations from the hard disk. Disabling this confirmation can indeed speed up your system if data loss isn’t critically concerning. It allows a higher throughput, which can be essential for applications like IoT devices collecting large amounts of non-valuable data.

00:28:38

Disabling synchronous commits in select transactions can yield faster results. Of course, the trade-off is that if the database crashes, the last unwritten data may be lost. However, in specific circumstances where data value is minimal—like log data—it may not be significantly damaging. Keeping in mind factions on RAM and storage capacity can also enhance system throughput.

00:29:31

To summarize, all the methodologies I discussed about indexing, array usage, creating views for frequent reports, moving your APIs to the database, and selectively disabling synchronous commits can significantly improve performance. The pivotal takeaway is to truly know your tech stack. As Rails developers, remember you aren’t solely using Rails; you’re employing various tools. It’s crucial to maintain control over your ecosystem; otherwise, your system may reach a bottleneck.

00:30:25

SQL has been a go-to for the last 40 years, and it’s not disappearing. Invest time in learning it; there's no universal rule that works for everyone. These strategies can profoundly help scale monolithic systems. I’ve detailed more on my company blog, so feel free to check that out. Thank you! And don't forget about the 22 languages!