00:00:15.500

Thank you all for coming to my talk. I really appreciate it. I know there are multiple tracks and many ways to spend your time, so I truly value each one of you for choosing to be here. Thanks also to RubyConf and our fantastic sponsors, as well as the venue. I've been in Los Angeles for about three years and don't often go downtown. LA is somewhat like a peculiar archipelago; when you're on your own little island, you tend to stay there. This venue is fantastic, so thank you so much. Let's get started.

00:00:33.960

I tend to speak quickly, and I'm working hard right now to avoid talking a mile a minute. My pace usually picks up when I'm excited, and software engineering, Ruby, and ethical programming all excite me. If at any point I start going too fast, feel free to make a visible gesture, and I'll slow down. I'll also check in a couple of times to make sure this pace works for you.

00:01:13.619

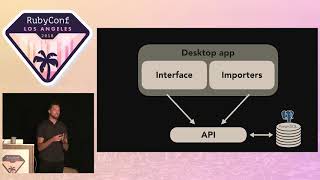

I will talk for about 35 minutes, leaving some time at the end for questions. If I go a little longer than planned, feel free to seek me out after the show—I’m happy to engage in these discussions indefinitely. My name is Eric Weinstein, and I’m a software engineer based in LA. I’m currently the CTO of a startup I co-founded, called Aux, which is developing auction infrastructure for blockchain technology. Some people in other programming communities may wonder if I've gone a bit crazy for diving into blockchain, but it's genuinely fascinating. I welcome further discussions about it after the show.

00:01:46.259

My undergraduate work was in philosophy, mostly in the philosophy of physics, along with some applied ethics. My graduate work focused on creative writing and computer science. The topic of ethics in software engineering has been at the forefront of my thoughts for a while. For those interested, I created a 'human hash' that I will share at the end; you can find all my contact information there. I encourage you to reach out to me, especially on Twitter—for friendly chats about ethical programming or anything else!

00:02:40.500

A few years ago, I published a book titled 'Ruby Wizardry' that teaches Ruby to children aged eight to eleven. If you want to discuss that, I’m happy to chat! The good folks at No Starch Press often create discount codes, so if you're interested in the book but find it financially challenging, please reach out, and we can make it work.

00:03:09.210

Though this is not a long talk, I think we still benefit from an agenda. We'll start by exploring what it means to be 'good' in the context of software. The notion of being good can be rather fuzzy, right? We want our software to be ethical, so we will examine three mini case studies in robo-ethics and then three in machine ethics. Robo-ethics concerns how humans ought to behave ethically when building robots and other systems; I'll be a bit loose with the term 'robot' and include programs of any kind.

00:03:36.750

In other words, robo-ethics is about the ethical choices we make while developing these machines. Machine ethics leans more toward the realm of science fiction: it focuses on how we design ethical artificial moral agents. If a machine makes a decision, how do we know whether that decision rests on sound ethical principles? How can we evaluate the ethics of a machine's decisions compared to human decision-making?

00:04:00.050

As I mentioned, we will hopefully have a bit of time at the end for questions. Please note that this talk contains stories about real-life people. I won’t specifically name anyone who has been injured or killed, and there will be no images or detailed descriptions of death or gore; however, we will discuss how software and hardware can fail. We will touch on some potentially upsetting topics for the audience, including a couple that are medically oriented. I want to be transparent about the content and the potential stakes.

00:04:31.389

The stakes are very personal for me. This is my son, who was born in March, three months prematurely. We decided to skip the third trimester, which I do not recommend, but luckily, we were blessed with remarkable professionals in the NICU where he spent seven weeks. The staff—doctors, nurses, and therapists—were hardworking, kind, talented individuals whose contributions were phenomenal. He weighed only 2 pounds and 13 ounces at birth. If you look closely at this picture, you can see my wife's hand, indicating how tiny he was. I plan to show this picture at every family gathering forever because of the comical look he has as I take his picture.

00:05:24.880

If you're curious, he's wearing a bilirubin mask, which was part of his treatment. He needed exposure to very bright lights to help break down excess bilirubin in his blood, and the mask protected his eyes from those lights. On a lighter note, I like to think of it as a kind of spa treatment. For those concerned, I promise he is now a happy and healthy 17-pound, eight-month-old baby! He has finally surpassed our chihuahua mix in weight, which is great.

00:06:22.479

The team at Mattel Children's Hospital in Los Angeles gave us a gift I cannot overstate; they treated him as if he were their own child. However, I often reflect not just on the people in the room but also on those outside of it. Every metric, from his heart rate to his oxygen saturation, was monitored by software and hardware designed by engineers, and every treatment he received relied on that hardware and software functioning properly.

00:06:50.000

It's easy to overlook just how much software and hardware is running in the background during a medical emergency. The existence of reliable technology is vital to maintaining the well-being of patients and saving lives. This talk is, in part, about that!

00:07:07.000

So, as mentioned, the stakes are high in more ways than one. One source of fuzziness I wanted to clear up early is what it means to be good. There are many ethical theories; in this talk we will focus on applied ethics, which is concerned with making ethical decisions in concrete situations and living ethical lives.

00:07:54.509

Rather than discussing abstract situations or thought experiments, I’ll narrow down to three main schools of thought. The first is utilitarianism, which aims to produce the most good for the most people—whatever that might mean. If we could measure goodness and determine how much good each person receives, we could optimize our ethical decisions for the benefit of the majority. However, there are certainly scenarios where this approach falters.

00:08:39.690

Next up are deontological ethical theories, which are rule-based: think of the Code of Hammurabi, the Ten Commandments, or Kant's categorical imperative. These theories hold that rules or laws should guide our actions across broad circumstances. An example might be the principle that killing is never acceptable except in self-defense.

00:09:31.290

The third approach I want to focus on in this talk is casuistry. Interestingly, 'casuistry' is also used pejoratively to mean clever-sounding reasoning that is actually specious, which is rather contradictory. In our context, casuistry means case-based ethical reasoning: by evaluating specific cases in a dialogical way, we can attempt to extract rules and formulate a broader ethical system.

00:10:10.620

As you can see, my approach will be somewhat casuistical. For the purposes of this talk, being good means safeguarding the well-being of the moral agents who interact with our software, and we will derive best practices for doing so from specific cases. That is why we will examine case studies to identify what we should be doing.

00:10:46.740

We'll begin with robo-ethics, which explores how humans can behave ethically while designing robots or software systems. I will share three case studies: the Therac-25, the Volkswagen emissions scandal, and the Ethereum DAO hack. The last of these may seem different from the first two, which bear more directly on human health, but it still illustrates the range of ethical dilemmas we should be considering.

00:11:19.600

How many of you are familiar with the Therac-25? If you are, please raise your hand. If not, please indulge me as I briefly explain this important story. The Therac-25 was a radiation therapy machine for cancer patients, introduced in 1982. It operated in two modes: megavolt X-ray (often referred to as photon therapy) and electron beam therapy. Earlier models had hardware interlocks, but the Therac-25 relied on software checks instead.

00:12:00.230

Unfortunately, between 1985 and 1987, the machine was involved in at least six accidents in which patients received massive radiation overdoses, and several of those patients died from their injuries. What went wrong? We had a machine designed to heal, but instead it caused harm.

00:12:14.279

Investigators attributed the problems largely to concurrent programming errors, also known as race conditions: a reminder that concurrency is not only hard to get right but can have lethal consequences. If a technician selected one mode and then quickly edited the entry to switch to the other mode within an eight-second window, the software's safety checks failed to engage correctly, and patients received radiation doses roughly 100 times greater than intended.
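To make the failure mode concrete, here is a hypothetical Ruby sketch of a check-then-act race in the spirit of the Therac-25 bug. It is not the real software, which was written in assembly; the class names, the two-mode setup, and the `Queue` standing in for the eight-second setup window are all illustrative assumptions. The second class adds a final software consistency check, the kind of safeguard the hardware interlocks had provided on earlier models.

```ruby
# Hypothetical sketch, not the real Therac-25 code (which was assembly):
# a check-then-act race where slow setup acts on a stale copy of shared state.
class UnsafeConsole
  attr_reader :mode, :beam_setup

  def initialize
    @mode = :xray     # what the operator currently has selected
    @beam_setup = nil # what the hardware was actually configured for
  end

  def operator_edit(new_mode)
    @mode = new_mode
  end

  # Reads the mode once, then waits; `window` stands in for the slow
  # hardware setup, during which the operator can still edit the entry.
  def configure_beam(window)
    selected = @mode       # check
    window.pop             # ...setup delay...
    @beam_setup = selected # act, using a value that may now be stale
  end

  # No final consistency check: fires whatever was configured.
  def fire
    "firing #{@beam_setup} beam for #{@mode} prescription"
  end
end

# A software stand-in for an interlock: refuse to fire unless the hardware
# configuration matches the current prescription. The mutex keeps an edit
# from slipping in between the check and the firing.
class InterlockedConsole < UnsafeConsole
  def initialize
    super
    @lock = Mutex.new
  end

  def operator_edit(new_mode)
    @lock.synchronize { super(new_mode) }
  end

  def fire
    @lock.synchronize do
      unless @beam_setup == @mode
        raise "interlock: beam set for #{@beam_setup}, prescription is #{@mode}"
      end
      super()
    end
  end
end

# Forces the bad interleaving deterministically: the operator switches
# from :xray to :electron while setup is still in progress.
def run_scenario(console)
  window = Queue.new
  setup = Thread.new { console.configure_beam(window) }
  Thread.pass until setup.status == "sleep" # setup is now blocked mid-window
  Thread.new { console.operator_edit(:electron) }.join
  window << :done
  setup.join
  console.fire
end

puts run_scenario(UnsafeConsole.new) # prints "firing xray beam for electron prescription"
```

The unsafe console fires a beam configured for the mode it read at the start of setup, not the mode the operator ended up with; the interlocked version raises instead of firing. Forcing the interleaving with a queue keeps the demo deterministic, whereas a real race surfaces only occasionally, which is one reason such bugs are so hard to reproduce.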

00:12:49.629

This tragedy was made possible because the hardware interlocks designed to protect against such mishaps were replaced with software ones, leading to a less effective safety mechanism. Compounding these issues, the code was not independently reviewed and was primarily written in assembly language, lacking the safety nets of higher-level programming languages.

00:13:37.430

Additional factors included an inadequate understanding of potential failure modes, inadequate testing, and the fact that the particular combination of hardware and software was not tested until the machine was assembled on site at the hospital. All of these factors contributed to the injuries and deaths of the mid-1980s.

00:14:02.949

What can we learn from this case? There's a parallel between the medical profession's code of conduct and our engineering practices. In medicine, there exists a standard of care that guides treatment processes. Deviations from this standard can lead to malpractice suits; similar deviations in engineering can endanger the people who depend on our technology. The lesson is clear: when we deviate from our standard of care—be it failing to test code or being less than fully confident in a software function—we risk endangering lives.

00:14:39.910

Our second case study is the Volkswagen emissions scandal. In 2015, it was revealed that Volkswagen had been equipping its diesel vehicles, dating back to the 2009 model year, with what are referred to as defeat devices: software that detected emissions testing and adjusted the engine's behavior to meet emissions standards, while the cars behaved very differently in real-world driving. The consequences of this scandal are harder to quantify than the very clear deaths associated with the Therac-25.

00:15:14.259

Financially, the fallout was severe, amounting to billions of dollars in fines and legal penalties. One study estimated that the excess emissions from affected cars in the United States would cause approximately 59 premature deaths from the resulting air pollution. This case illustrates the danger of ignoring ethical considerations in favor of financial gain.

00:15:53.410

The defeat devices used various signals, such as steering wheel position and ambient air pressure, to detect a test and switch the vehicle into test mode. As software engineers, we know that writing code to verify that our products comply with regulations isn't inherently harmful. The moral hazard arises when a company decides to evade stringent regulations to avoid financial loss, believing nobody will get hurt.

00:16:31.420

This case teaches us that we must ask ourselves about the potential and actual uses of our code. It’s our obligation to refuse to write programs that will harm people or other moral agents. I will delve deeper into this topic later, but the takeaway here is that software developers need to assert their moral stance, and there are serious consequences if we ignore this responsibility.

00:17:13.400

Now, moving to our third case study in robo-ethics, let’s discuss the Ethereum DAO hack. The DAO, which launched in 2016, aimed to function as a decentralized venture capital vehicle. People invested Ether, and members could vote on how to allocate those funds, driving various project initiatives.

00:17:49.620

Unfortunately, an attacker exploited a vulnerability in the smart contract to siphon off about 3.6 million Ether, worth roughly 50 million dollars at the time and several hundred million dollars at today's prices. The incident sparked significant discussion about liability on the blockchain and the difficulty of identifying whom to hold accountable.

00:18:36.400

Ethereum's smart contracts are Turing-complete, which allows them to perform almost any computation. The DAO's vulnerability was a reentrancy bug caused by the order of operations: the contract sent funds to the caller before updating the caller's balance, so the attacker could call back into the withdrawal logic and drain funds repeatedly. This could have been caught and fixed with better testing practices and a more robust programming language.
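The ordering problem can be sketched in Ruby. This is a hypothetical simulation, not Solidity or the DAO's actual code: `VulnerableVault`, `SaferVault`, and `Attacker` are made-up names, and the `receive` callback stands in for the recipient code that runs when a contract sends Ether. The safer version follows the commonly recommended checks-effects-interactions pattern, updating the balance before handing control to the recipient.

```ruby
# Hypothetical Ruby simulation of reentrancy; the real DAO was a Solidity
# contract on the EVM, and none of these names come from it.
class VulnerableVault
  attr_reader :reserves

  def initialize(reserves)
    @reserves = reserves    # funds already held for other members
    @balances = Hash.new(0) # per-depositor balances
  end

  def deposit(who, amount)
    @balances[who] += amount
    @reserves += amount
  end

  # Interaction before effect: pays out first, zeroes the balance last.
  def withdraw(who)
    amount = @balances[who]
    return if amount.zero? || amount > @reserves
    @reserves -= amount
    who.receive(self, amount) # recipient's code runs here and may call back
    @balances[who] = 0        # too late: re-entrant calls saw the old balance
  end
end

# Checks-effects-interactions: update state before handing over control.
class SaferVault < VulnerableVault
  def withdraw(who)
    amount = @balances[who]
    return if amount.zero? || amount > @reserves
    @balances[who] = 0
    @reserves -= amount
    who.receive(self, amount)
  end
end

# A malicious recipient that re-enters withdraw a limited number of times.
class Attacker
  attr_reader :stolen

  def initialize(max_reentries)
    @stolen = 0
    @reentries_left = max_reentries
  end

  def receive(vault, amount)
    @stolen += amount
    return if @reentries_left.zero?
    @reentries_left -= 1
    vault.withdraw(self)
  end
end

vault = VulnerableVault.new(100)
thief = Attacker.new(5)
vault.deposit(thief, 10)
vault.withdraw(thief)
puts thief.stolen # prints 60, from a 10-unit deposit
```

In this sketch the attacker deposits 10 units and walks away with 60, because every re-entrant call still sees the original balance. Against `SaferVault`, the second call sees a zero balance and stops.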

00:19:26.300

In response, the Ethereum community implemented a hard fork of the blockchain without, in my view, sufficient reflection on responsibility or on what to learn from the event. The fork split Ethereum into two chains: one continuing the original, unaltered chain (now Ethereum Classic) and the other in which the theft was reversed. While some investors benefited financially, the moral implications of the attack, and the subsequent lack of accountability, were concerning.

00:20:06.640

I believe these cases hold significant lessons about how we assess responsibility and moral obligation in our programming practices. They show that we need to evaluate our decisions rigorously, especially as technology and its ethical implications evolve.

00:20:50.000

Transitioning to machine ethics, I want to introduce three more hypothetical case studies based on currently existing technologies. This discussion will cover facial recognition technology, the use of police data in predictive policing, and autonomous vehicles. You’ll notice a recurring theme from 'Minority Report,' which somewhat ominously predicted many modern technologies.

00:21:25.200

In machine learning, we're focused on developing systems that can perform tasks without explicit programming, mainly through methods of pattern recognition. For example, a program can learn to recognize objects through supervised learning techniques, which include decision trees and neural networks. Let’s first consider Apple’s Face ID—a system that utilizes biometric data to grant access.

00:21:58.450

This raises critical questions about what it means when a machine can recognize us. Our biometric data, including our facial data, is valuable; who owns it? What happens if my biometric information is used without my consent? What if a massive privacy breach occurs?

00:22:32.490

The main difference between biometric authentication and traditional password systems is that the machine makes a judgment call based on complex data inputs. We can reason precisely about why a password was rejected, but biometric authentication is far less transparent. Misrecognition can happen for a variety of reasons: lighting, camera angles, or biases in the model itself.
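A hypothetical Ruby sketch of that contrast follows. It is not how Face ID or any real system works; the three-number "feature vectors", the cosine-similarity score, and the 0.95 threshold are assumptions chosen for illustration. A password check is an exact comparison, while a biometric-style check reduces to whether a similarity score clears a tuned threshold, so a rejection carries no human-readable reason.

```ruby
# Hypothetical contrast, NOT how Face ID or any real system is implemented.
def password_ok?(stored, attempt)
  stored == attempt # exact match: a rejection has one clear explanation
end

# Cosine similarity between two feature vectors (1.0 = same direction).
def cosine(a, b)
  dot = a.zip(b).sum { |x, y| x * y }
  norm = ->(v) { Math.sqrt(v.sum { |x| x * x }) }
  dot / (norm.(a) * norm.(b))
end

# Biometric-style check: accept if the score clears a tuned threshold.
# A rejection only tells you the score was too low, not why.
def face_ok?(enrolled, probe, threshold: 0.95)
  cosine(enrolled, probe) >= threshold
end

enrolled      = [0.8, 0.1, 0.6]    # made-up features captured at enrollment
good_lighting = [0.79, 0.12, 0.61] # same person, similar conditions (assumed)
dim_lighting  = [0.5, 0.4, 0.7]    # same person, poor lighting (assumed)

puts password_ok?("hunter2", "hunter2") # prints true
puts face_ok?(enrolled, good_lighting)  # prints true
puts face_ok?(enrolled, dim_lighting)   # prints false (score is about 0.90)
```

Here the same hypothetical face is rejected under dim lighting simply because its score falls below the threshold; nothing in the result explains why, which is the transparency gap described above.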

00:23:21.030

This leads us to consider the moral implications of machine decisions. We are starting to entrust machines with decision-making capabilities that come with weighty moral considerations, not because these machines ‘think’ in a traditional sense, but due to their increasing functionality.

00:23:57.520

Using policing data in predictive policing creates additional concerns, as I previously discussed in a separate talk. Using rich data sets from police records can help manage resources, but it also risks perpetuating systemic biases and unfair targeting based on historical data.

00:24:37.960

The reality is that teaching machines to recognize likely offenders, especially when they are trained on discriminatory data, will entrench those same biases in automated systems. The critical concern is that when a machine learns from biased data, its output will reflect those biases, mirroring society's prejudices.
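A toy Ruby model shows how this kind of feedback can entrench bias. Everything here is a hypothetical assumption, not a model of any real predictive policing product: two neighborhoods have identical true offense rates, historical records slightly over-represent one of them, and the system patrols whichever area has the most recorded incidents, so offenses are only recorded where it looks.

```ruby
# Hypothetical toy model, not any real predictive policing product.
# Both neighborhoods have the SAME true number of daily offenses.
TRUE_DAILY_OFFENSES = { north: 5, south: 5 }.freeze

# Each day, patrol the area with the most recorded incidents; offenses are
# only recorded where the patrol is, so the records feed back on themselves.
def simulate_patrols(records, days)
  records = records.dup
  days.times do
    hotspot, _count = records.max_by { |_area, count| count }
    records[hotspot] += TRUE_DAILY_OFFENSES[hotspot]
  end
  records
end

history = { north: 12, south: 10 } # small historical skew toward :north
result = simulate_patrols(history, 30)
puts "north: #{result[:north]}, south: #{result[:south]}"
# prints "north: 162, south: 10"
```

Although both neighborhoods have the same underlying rate, the small initial skew in the records decides everything: the model never looks at `:south` again, so its data can never correct itself.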

00:25:07.570

Lastly, autonomous vehicles pose significant ethical dilemmas, especially after high-profile accidents involving companies like Uber and Tesla. This leads us to ponder liability: when a vehicle makes poor decisions, who is responsible? The software engineers? The car? The companies producing these vehicles? Or the individuals who trained them extensively?

00:25:50.330

Once again, these machines lack the ability to provide explanations for their actions, which makes trusting them in crucial situations extremely concerning. This highlights our need for a framework where we can evaluate the moral dimensions of machine-based actions.

00:26:25.080

The trolley problem, which examines moral dilemmas in life-and-death situations, provides a framework for evaluating programmatic decisions in these autonomous technologies. We must consider how to design ethical mechanisms and reward functions for our AI, focusing not just on outcomes but on the reasoning behind decisions.

00:27:28.740

As we explore the humanization of robots, we must recognize the critical importance of accountability. The ability to explain decisions in human terms—and to accept blame—is essential in ensuring ethical AI systems.

00:28:12.600

During my talks, I've noticed a disparity in how many people have a formal computer science education. By show of hands, how many of you have taken coursework that included an ethics component? It seems essential to supplement computer science programs with ethics education alongside traditional coding skills.

00:28:47.000

Now let me summarize the key concepts: we need a clear standard for ethical conduct in coding practices, akin to a Hippocratic oath in medicine. Developers must know what's acceptable behavior within their field, allowing for a framework to address ethical concerns during development and in the field.

00:29:18.230

Furthermore, we should promote an organized system of support for software developers who wish to stand against unethical practices. There is considerable pressure to compromise on ethical standards, especially for those without significant organizational power.

00:29:53.830

Historical examples show the stark reality: people can be physically harmed by poor programming practices, and engineers can even face incarceration for the software they write. Adopting a united stance with strong guidelines against unethical software decisions is critical.

00:30:28.300

This all leads me to a quote from Holocaust survivor Elie Wiesel: 'We must always take sides. Neutrality helps the oppressor, never the victim.' If we want to change our industry, we must speak out.

00:31:05.950

If we remain silent, we allow ethical failures to perpetuate. Thus, my call to action extends to our community of developers—it is our responsibility to prioritize ethical software development.

00:31:41.730

In conclusion, we should operationalize ethics within our daily processes. A code of conduct outlining our ethical standards can create a foundation that allows everyone to thrive. Through encouraging candid discussions, documenting experiences, and learning from past mistakes, we can progress towards stronger ethical practices in software development.

00:32:30.400

I've settled on calling this initiative the 'Legion of Benevolent Software Developers.' This new organization would support those seeking to resist writing unethical software. Input on this name is welcome!

00:33:15.100

Thank you so much for attending my talk! I'd love to answer any questions you might have. For me, this isn't just a discussion; it's a call for all of us to actively participate in developing ethical software.