00:00:17.119

Thank you all for coming. It's going to be an awesome talk, quite possibly the best talk you'll ever hear in your life. But, I actually have a built-in escape hatch here, so if things start going wrong, I might just bail.

00:00:28.160

A couple of weeks ago, I was having a bit of a panic attack about this presentation. As a programmer, my natural habitat is in some dark place, looking at a screen; it's not talking to a bunch of people under bright lights. What's up with this?

00:00:39.600

During this panic attack, I went for a walk to clear my mind. I was listening to my favorite podcast called Hardcore History. I highly recommend it. This particular episode was about the Punic Wars.

00:01:06.080

In case you haven't heard about the Punic Wars, let me give you a brief rundown. A long time ago, there was this place called Rome, and they decided they should take over the world, which they largely achieved. However, there was this one country that was a thorn in their side for a long time: Carthage.

00:01:30.640

They fought back and forth in a stalemate, and then one day, Hannibal had this brilliant idea. He decided he was going to lead a sneak attack on Rome by marching his army through the Alps. That's pretty cool, right? But the most awesome part of the story, at least for me, is that he had war elephants.

00:01:56.399

Some people have doubted this story over the years. In 1959, a British engineer borrowed a circus elephant named Jumbo and marched it across the Alps as part of a bar bet. I guess the moral here is not to underestimate elephants; they don't like it, and they have long memories.

00:02:40.879

Now, this talk is really about biggish data. So, what is biggish data? It's not about big data or Hadoop clusters; that's way over my head. It's also not about fancy architectures. Instead, I want to discuss something that I find more practical and interesting: how to keep your app working as your production dataset grows into biggish data territory.

00:03:57.200

This issue can arise even if you don't have a fire hose of data coming in. For example, if you run a popular e-commerce site or a site with many users, you can accumulate a ton of data over the years. As this data accumulates, you may find that your site's performance gradually declines in ways you don't quite understand.

00:04:12.560

This talk is based on my experience at Honeybadger. In case you haven't heard of us, we provide exception performance and uptime monitoring. Right now, we have about a terabyte of errors in our database, and we acquire roughly two gigabytes of new errors per day. All of this goes into a plain vanilla PostgreSQL database behind a pretty basic Rails application.

00:04:38.080

The good news is that PostgreSQL can handle this pretty easily. The bad news is that if your app isn't engineered to handle this level of data, you're kind of screwed. A 100 megabyte database behaves fundamentally differently than a one terabyte database.

00:04:54.400

Many of the normal conventions we use in Rails apps just stop working when you have this much data.

00:05:00.720

For example, I learned that pretty much every pagination system breaks after page 2000. Even if you try to delete half your data, deleting can take a lot of time.

00:05:08.159

I will explain why this happens, how you can work around it, and how to optimize your queries in your database. To truly understand this, we need to go back in time to the summer of 1978, a summer filled with disco and the VT-100 terminal.

00:05:30.080

The VT-100 was the first computer terminal you could buy from a company and set on your desk to prove you were the alpha nerd of the office. It was also the time when Oracle 1.0 was being developed, one of the first databases to use SQL. This was written in PDP-11 assembly language.

00:06:00.800

Now, in our modern times, marketers tend to throw around buzzwords like cloud computing and platform as a service, but back then the buzzword was 'real-time computing,' which meant generating your reports in five minutes instead of five days.

00:06:14.240

While that was impressive at the time, it’s not the kind of thing I’d want to build a web app on top of. But over the past 36 years, something funny happened: Moore's Law transformed this idea into a web stack.

00:06:30.360

Most people know vaguely what Moore’s Law is, but in technical terms, it means that computers get more awesome as time goes forward, defined as being able to do more stuff faster and process more data efficiently. I’d like to present, for the first time in public, Starr’s corollary to Moore’s Law: as database growth outpaces Moore's Law, you literally travel back in time.

00:07:10.320

I can already see your minds being blown; it sounds like bubble wrap popping. To survive in this new time period, you need to understand the methods and motivations of the people from that era. We need to start thinking like our ancestors, who were very interested in hardware.

00:07:25.120

If you happen to be experiencing database scaling issues where your app is slowing down due to the growing size of your database, you can probably solve it right now by purchasing a real computer. A real computer can provide the two critical resources databases need for high performance: a lot of RAM and fast disk I/O.

00:07:44.480

Virtual servers tend to lack both of these capabilities, so investing in a physical machine can be a game-changer. While you're at it, make sure to use multiple disks. It’s not just about having a RAID array; you want to separate your operating system, database data, and logs onto different hard drives to enable better I/O scheduling.

00:08:05.600

If solving your issues with a real computer has worked, great! If you want to stay, I won’t be offended. However, if that didn't work or if you want to ensure that everything is optimal, you should next examine your queries.

00:08:30.760

Most of us develop against small datasets, so inefficient queries often go unnoticed. Books have been written on query optimization, and I can't cover everything in 30 minutes, but I’ll point out one vital tool: EXPLAIN.

00:08:43.840

PostgreSQL offers an incredible feature called EXPLAIN, where you pass a query and it shows you the query plan. This can look overwhelming, but for the purposes of this talk, we only need to focus on one metric: rows.

00:09:02.240

The more rows you touch in a query, the more data your system has to process to provide the answers you want. The key to query optimization is limiting the amount of rows touched. For example, if you run an inefficient COUNT query, PostgreSQL will literally count every row one by one; this is not efficient.

00:09:51.199

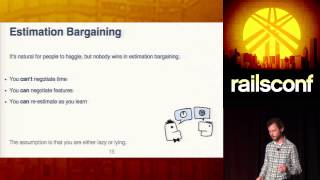

Pagination systems tend to break around page 1000 or 2000 because of how offset and limit work, which is similar to COUNT. They can lead to PostgreSQL counting many unnecessary rows.

00:10:04.080

The solution to the inefficiency is to use range queries. Range queries are faster because they leverage indexes, resulting in fewer rows being touched compared to offset and limit operations. A well-structured query can see performance improvements.

00:10:45.120

Sorting is another tricky element; it can be very fast if done correctly, but it can also lead to performance issues if the data isn't sorted the way the index is configured. Understanding how to structure your sorts in conjunction with your database indexes is crucial for performance.

00:11:00.160

As a recap for query optimization, you should develop against a real dataset and utilize the EXPLAIN tool often. Always strive to minimize the number of rows your queries process because touching more rows generally leads to slower performance.

00:11:45.119

Now, back to the pacing of our talk. For the second half, we’ll cover infrastructure issues related to biggish data, including workflows for handling large datasets. Here’s our plan: we will have cute pictures representing various topics and links if you want to do further reading.

00:12:06.079

Our ancestors were deeply involved in the creation of disk operating systems. It seems like they were developing new systems every couple of seconds, yet today we find ourselves with only a few main choices. It’s interesting how times change.

00:12:34.079

The first recommendation for Linux users is to increase your read-ahead cache. Many people aren't aware of this, but making this change can lead to a doubling of read throughput. Linux pre-fetches the next set of blocks into RAM when it sees consecutive requests.

00:12:53.679

The default setting for the read-ahead cache is typically around 256k, but increasing it to 2 or 4 megabytes can substantially improve performance. Additionally, use a modern file system; ext3 is not recommended, and if you're using ext4 or XFS, you may want to tweak journaling settings.

00:13:21.679

You'll want to adjust PostgreSQL settings based on the RAM available in your machine. An easy way to do this is with a tool called pgTune, which analyzes your setup and configures PostgreSQL with sensible values.

00:13:46.400

Another critical aspect is the VACUUM command in PostgreSQL. It’s essential for cleanup because queries and updates can leave behind a mess, and VACUUM goes and removes unnecessary data. However, this operation can be resource-intensive.

00:14:01.120

If your server is heavily loaded and your VACUUM operations are taking a hit, you might be tempted to reduce how often VACUUM runs. However, this can lead to problems, as we learned the hard way. Generally, the solution to VACUUM issues is to run it more frequently.

00:14:24.759

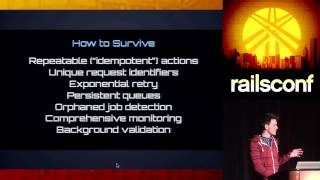

Next, I'm going to touch on velocity. If you have a huge influx of data or many read requests, managing database connections becomes vital. Each connection in PostgreSQL is a separate process that consumes its own RAM, making it crucial to limit your connections.

00:14:43.920

Implementing connection pooling is a great way to address this challenge. In Ruby, there are libraries to handle this, but you can also use a tool like PgBouncer, which serves as a proxy between your Ruby application and PostgreSQL.

00:15:01.759

Another common issue is dealing with locks. When updating rows within a transaction, locks are placed until the action is completed, preventing other operations on the same rows. Avoiding situations where a record is updated continuously in quick succession helps mitigate locking issues.

00:15:14.240

For intensive queries, consider creating read-only replicas using PostgreSQL's streaming replication. You can direct heavy queries to the replica, ensuring that the primary database remains responsive for your users.

00:15:36.240

Partitioning is an excellent practice for managing large datasets. PostgreSQL allows you to set up partitioning schemes where data is organized into separate tables based on different criteria, which aids in significantly speeding up data culling and archiving.

00:15:57.799

This way, deleting old data becomes a matter of dropping a table instead of running lengthy delete queries that can freeze your system, especially when dealing with two terabytes of data.

00:16:15.520

Lastly, managing backups is a significant concern for large datasets. Using traditional cron jobs to dump a two-terabyte database into S3 doesn't scale well. Instead, taking advantage of PostgreSQL's streaming replication allows for incremental backups.

00:16:36.759

Tools such as WAL-E make it simple to upload these incremental backups to S3, providing a straightforward route for point-in-time restores.

00:16:58.559

As I've worked on my slides, I realized there's a lot of information here. This can be overwhelming, but remember that the issues with biggish data tend to present themselves one at a time. You don't have to know everything at once.

00:17:20.480

You can tackle each challenge as it arises, and I have faith that you all can handle this because you're incredible. None of us expected we could turn transistors into cat memes, but we did it, and that’s the type of people we are.

00:17:43.920

If you’re interested in discussing this further, please come up to me after the talk. I have delicious, spicy Mexican candy as an incentive. If you'd like to learn more, all the referenced links from today's talk will be available online.

00:18:06.320

Also, if you're looking for better visibility into production errors, check out Honeybadger. We love Rails developers. And that brings me to the end of the show.

00:18:22.960

Thank you!