00:00:21.170

Welcome everyone to "Cache is King." My name is Molly Struve, and I am the lead site reliability engineer at Kenna Security. If you came to this talk hoping to see some sexy performance graphs, I’m happy to say you will not be disappointed. If you’re the kind of person who enjoys an entertaining GIF or two in your technical talks, then you’re in the right place. If you came to this talk to find out what the heck a site reliability engineer does, well, that's a bingo. And finally, if you want to learn how you can use Ruby and Rails to kick your performance up to the next level, then this is the talk for you.

00:00:37.199

As I mentioned, I’m a site reliability engineer. Most people, when they hear site reliability engineer, don't necessarily think of Ruby or Rails; instead, they think of someone who's working with MySQL, Redis, AWS, Elasticsearch, Postgres, or some other third-party service to ensure that it’s up and running smoothly. For the most part, this is a lot of what we do. Kenna's site reliability team is just over a year old, but when we first formed, you can bet we did exactly what you'd expect. We searched for those long-running, awful MySQL queries and optimized them by adding indexes where they were missing, using select statements to avoid those N+1 queries, and by processing things in small batches to ensure we were never pulling too many records from the database at once.

00:01:06.780

We had Elasticsearch searches that were constantly timing out, so we rewrote them to ensure that they could finish successfully. We even overhauled how our background processing framework, Sidekiq, was communicating with Redis. All of these changes led to significant improvements in performance and stability. But even with all of these optimizations, we were still seeing high loads across all of our data stores, and that’s when we realized something else was going on. Rather than tell you what was happening, I want to demonstrate, so for this I need a volunteer.

00:01:38.150

Come on up! Awesome, thank you! Okay, what’s your name? Shane Smith? Let's remember that! Last time, what’s your name? Shane Smith. Alright, how annoyed are you right now? Okay, how annoyed would you be if I asked you your name a million times? Aren't you glad I didn’t say banana? No? Okay, really nice! What is one easy thing I could have this person do so I don’t have to keep asking for their name? Shout it out! Even simpler, just write it down! If you could write your name down there—your first name will work—great! Now that I have Shane’s name written on this piece of paper, I no longer have to keep asking Shane for it; I can simply read the piece of paper.

00:02:36.170

This is exactly what it's like for your Rails applications. Imagine I’m your Rails application, and Shane is your data store. If your Rails application has to ask your data store millions and millions of times for information, eventually it’s going to get overwhelmed, and it's going to take your Rails application a long time to get the response. If instead your Rails application makes use of a local cache, which is essentially what this piece of paper is doing, it can get the information it needs a whole lot faster and save your data store a whole lot of headache. Thank you!

00:03:01.140

The moment at Kenna when we realized it was the sheer quantity of our data store hits that was wreaking havoc on our data stores was a huge aha moment for us. We immediately started trying to figure out all the ways we could decrease the number of data store hits we were making. Now, before I dive into the awesome methods we use in Ruby and Rails to achieve this, I want to give you a little background on Kenna to provide you with some context regarding the stories I’m about to share.

00:03:28.150

Kenna Security helps Fortune 500 companies manage their cybersecurity risk. The average company has 60,000 assets; you can think of an asset as basically anything with an IP address. Additionally, the average company has 24 million vulnerabilities, which are potential paths through which an attacker could compromise an asset. With all this data, it can be extremely difficult for companies to know what they need to focus on and fix first, and that’s where Kenna comes in. At Kenna, we take all this data and run it through our proprietary algorithms, which then inform our clients about the vulnerabilities that pose the biggest risk to their infrastructure, so they know what they need to focus on fixing first.

00:04:06.440

When we initially gather all this asset and vulnerability data, the first thing we do is put it into MySQL, which serves as our source of truth. From there, we index it into Elasticsearch; this allows our clients to slice and dice their data any way they need to. To index assets and vulnerabilities into Elasticsearch, we have to serialize them, and that is what I want to cover in my first story. Serialization, particularly the serialization of vulnerabilities, is what we do the most at Kenna.

00:04:45.520

When we began serializing vulnerabilities for Elasticsearch, we were using Active Model Serializers to do it. Active Model Serializers hook right into your Active Record objects, so all you have to do is define the fields you want to serialize, and it takes care of the rest. It’s super simple, which is why it was naturally my first choice for a solution. However, it became a less effective solution when we started serializing over 200 million vulnerabilities a day. As the number of vulnerabilities we were serializing increased, the rate at which we could serialize them dropped dramatically.

00:05:09.870

We began maxing out CPU in our database, and the caption for this Slack screenshot was '11 hours and counting.' Our database was literally on fire all the time. Some people might look at this graph, and their first inclination would be to say, 'Why not just beef up your hardware?' Unfortunately, at this point, we were already running on the largest RDS instance AWS had to offer, so increasing our hardware was not an option.

00:05:30.490

My team and I looked at this graph and thought, 'Okay, there’s got to be some horrible, long-running MySQL query.' Off we went hunting for that elusive horrible MySQL query. Much like Liam Neeson in Taken, we were determined to find the root cause of our MySQL woes, but we never found any long-running horrible MySQL queries, because they didn’t exist. Instead, we discovered many fast, millisecond-long queries that were happening again and again. All these queries were lightning-fast, but we were making so many of them that our database was being overloaded.

00:06:13.170

We immediately started trying to figure out how we could serialize all this data and make fewer database calls. What if, instead of making individual calls to MySQL to get the data for each individual vulnerability, we grouped all the vulnerabilities together and made one call to MySQL to get all that data? From this idea came the concept of bulk serialization. To implement this, we started with a cache class. This cache class was responsible for taking a set of vulnerabilities and a client, and then running all the MySQL lookups for them at once.

00:06:38.700

We then passed this cache class to our vulnerability serializer, which still held all the logic needed to serialize each individual field. However, now instead of communicating with the database, it would simply read from our cache class. Let’s look at an example of this: in our application, vulnerabilities have a related model called custom fields that allows us to add any special attribute we want to a vulnerability. Prior to this change, when we would serialize custom fields, we had to talk to the database. Now, we could simply refer to our cache class.
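As a rough illustration of the bulk-serialization idea described above, here is a minimal plain-Ruby sketch. It is not Kenna's actual code: `FakeDatabase`, `BulkVulnerabilityCache`, and `VulnerabilitySerializer` are hypothetical names, the database is an in-memory stand-in that only counts queries, and the client argument mentioned in the talk is left out for brevity.

```ruby
# Hypothetical stand-in for MySQL that counts how many queries it receives.
class FakeDatabase
  attr_reader :query_count

  def initialize(custom_fields)
    @custom_fields = custom_fields # rows: { vulnerability_id:, name:, value: }
    @query_count = 0
  end

  # One query that returns the custom fields for every requested vulnerability.
  def custom_fields_for(vulnerability_ids)
    @query_count += 1
    @custom_fields.select { |row| vulnerability_ids.include?(row[:vulnerability_id]) }
  end
end

# Runs the lookup for a whole batch up front and keeps the results in memory.
class BulkVulnerabilityCache
  def initialize(database, vulnerabilities)
    ids = vulnerabilities.map { |vuln| vuln[:id] }
    @custom_fields = database.custom_fields_for(ids).group_by { |row| row[:vulnerability_id] }
  end

  def custom_fields(vulnerability_id)
    @custom_fields.fetch(vulnerability_id, [])
  end
end

# Still owns the per-field logic, but reads from the cache, not the database.
class VulnerabilitySerializer
  def initialize(cache)
    @cache = cache
  end

  def serialize(vulnerability)
    fields = @cache.custom_fields(vulnerability[:id])
    {
      id: vulnerability[:id],
      custom_fields: fields.map { |row| { name: row[:name], value: row[:value] } }
    }
  end
end

database = FakeDatabase.new([
  { vulnerability_id: 1, name: "owner", value: "web team" },
  { vulnerability_id: 2, name: "owner", value: "ops team" }
])
vulnerabilities = [{ id: 1 }, { id: 2 }, { id: 3 }]

cache = BulkVulnerabilityCache.new(database, vulnerabilities)
serializer = VulnerabilitySerializer.new(cache)
serialized = vulnerabilities.map { |vuln| serializer.serialize(vuln) }
```

In this sketch the database sees a single query no matter how many vulnerabilities are in the batch, because the serializer only ever reads from the in-memory cache.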

00:07:14.730

The payoff of this change was big. For starters, the time it took to serialize vulnerabilities dropped dramatically. Here’s a console shot showing how long it takes to serialize 300 vulnerabilities individually: it takes just over six seconds, and that’s probably a generous benchmark considering it would take even longer when our database was under load. If instead we serialize those exact same 300 vulnerabilities in bulk, BOOM! Less than a second! These speedups are a direct result of the decrease in the number of database hits we had to make to serialize these vulnerabilities.

00:07:45.490

To serialize those 300 vulnerabilities individually, we had to make 2,100 calls to the database. To serialize those same 300 vulnerabilities in bulk, we now only have to make seven calls. As you can glean from the math here, that’s seven calls per individual vulnerability or seven calls for however many vulnerabilities you can group together at once. In our case, we’re serializing vulnerabilities in batches of a thousand, so we dropped the number of database requests we were making for each batch from 7,000 down to seven.

00:08:16.250

This large drop in database requests is plainly apparent on this MySQL queries graph, which shows the number of requests we were making before and after we deployed the bulk serialization change. With this significant drop in requests came a large drop in database load, as you can see on this RDS CPU utilization graph. Prior to the change, we were maxing out our database at 100%; afterward, we were sitting pretty at around 20-25 percent.

00:08:41.860

And it’s been like this ever since. The moral of the story here is that when you find yourself processing a large amount of data, try to find ways to use Ruby to help you process that data in bulk. We did this with serialization, but it can be applied anytime you find yourself processing data one-by-one. Take a step back and ask yourself if there’s a way to process this data together in bulk, because one call for a thousand IDs is always going to be faster than a thousand individual database calls.

00:09:06.570

Now, unfortunately, the serialization saga doesn’t end here. Once we got MySQL all happy and sorted out, suddenly Redis became sad. This, folks, is the life of a site reliability engineer: a lot of days we feel like this. You put one fire out, and yes, another starts somewhere else. In this case, we transferred the load from MySQL to Redis.

00:09:34.330

When we index vulnerabilities into Elasticsearch, we not only have to make requests to MySQL to get all their data, but we also have to make calls to Redis in order to know where to put them in Elasticsearch. In Elasticsearch, vulnerabilities are organized by client, so to know where a vulnerability belongs, we have to make a GET request to Redis to get the index name for that vulnerability. While preparing vulnerabilities for indexing, we gather up all their serialized vulnerability hashes, and one of the last things we do is make that Redis GET request to retrieve the index name for each vulnerability based on its client.

00:10:02.300

These serialized vulnerability hashes are grouped by client, so this Redis GET request often returns the same information over and over again. Now, keep in mind, all these Redis GET requests are super simple and very fast; they execute in about a millisecond. But as I stated before, it doesn't matter how fast your requests are—if you're making a ton of them, it’s going to take you a long time. We were making so many of these simple GET requests that they accounted for roughly 65% of our job runtime.

00:10:30.860

The solution to eliminate many of these requests was Ruby. In this case, we ended up using a Ruby hash to cache the Elasticsearch index name for each client. Then, when looping through those serialized vulnerability hashes, rather than hitting Redis for every single vulnerability, we could simply reference our client indexes hash. This meant we only had to hit Redis once per client instead of once per vulnerability.
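The hash cache described above can be sketched in a few lines of plain Ruby. This is an illustration under assumptions, not the code from the talk: `FakeRedis` is an in-memory stand-in that counts GET requests, and the `index_name:<client_id>` key format and `build_client_index_cache` helper are made up for the example.

```ruby
# Hypothetical stand-in for Redis that counts GET requests.
class FakeRedis
  attr_reader :get_count

  def initialize(data)
    @data = data
    @get_count = 0
  end

  def get(key)
    @get_count += 1
    @data[key]
  end
end

# Returns a hash whose default block runs only the first time a client ID is
# looked up; after that the hash answers from local memory.
def build_client_index_cache(redis)
  Hash.new do |cache, client_id|
    cache[client_id] = redis.get("index_name:#{client_id}")
  end
end

redis = FakeRedis.new({
  "index_name:1" => "vulnerabilities_client_1",
  "index_name:2" => "vulnerabilities_client_2"
})
client_indexes = build_client_index_cache(redis)

vulnerability_hashes = [
  { id: 10, client_id: 1 },
  { id: 11, client_id: 1 },
  { id: 12, client_id: 2 },
  { id: 13, client_id: 1 }
]

# Each vulnerability is tagged with its index name; Redis is hit once per client.
indexed = vulnerability_hashes.map do |vuln|
  vuln.merge(index: client_indexes[vuln[:client_id]])
end
```

With four vulnerabilities across two clients, the stand-in Redis receives two GET requests instead of four; the saving grows with the batch size.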

00:11:08.700

Let’s take a look at how this paid off. Given these three example batches of vulnerabilities—no matter how many vulnerabilities are in each batch, we only ever have to hit Redis three times to get all the information we need to know where they belong. As I mentioned before, these batches usually contain a thousand vulnerabilities apiece, allowing us to roughly decrease the number of hits we were making to Redis by a thousand times, which in turn led to a 65% increase in our job speed. Even though Redis is fast, a local cache is faster.

00:11:35.290

To put this in perspective, getting a piece of information from a local cache is like driving from downtown Minneapolis to the Minneapolis St. Paul Airport, which is about a 20-25 minute drive. Getting the same piece of information from Redis is like driving from downtown to the airport, and then flying all the way to Chicago to get it! Redis is so fast that it can be easy to forget you’re actually making an external request when you’re talking to it, and those external requests can add up quickly and impact the performance of your application.

00:12:05.190

With this in mind, remember that Redis is fast, but a local cache, such as a hash cache, is always going to be faster. We’ve just seen two ways we can use simple Ruby to replace our datastore hits. Next, I want to talk about how you can utilize your Active Record framework to replace your datastore hits.

00:12:33.700

This past year, at Kenna, we sharded our main MySQL database. When we did, we chose to do so by client, so each client’s data lives in its own shard database. To help accomplish this, we chose to use the Octopus sharding gem. This gem provides us with this handy-dandy `using` method, which, when provided with a database name, contains all the logic needed to communicate with that database.

00:13:04.320

Since our information is divided by client, we created a sharding configuration hash that tells us which client belongs on what sharded database. Each time we make a MySQL request, we take the client ID, pass it to that sharding configuration hash, and obtain the database name we need to communicate with. Given that we must access the sharding configuration hash every single time we make a MySQL request, our first thought was, 'Why not store it in Redis?' Redis is fast, and the configuration hash we want to store is relatively small.

00:13:37.370

Originally, that configuration hash was small, but it eventually grew as we added more clients. Now, 13 kilobytes might not seem like a lot of data, but if you're making requests for 13 kilobytes of data millions of times, it can add up. Additionally, we continually increased the number of background workers to improve our data throughput, eventually reaching 285 workers operating concurrently. Remember, every time one of these workers makes a MySQL request, it must first go to Redis to retrieve the 13 kilobyte configuration hash. This quickly added up until we reached the point where we were reading 7.8 megabytes per second from Redis.

00:14:06.600

We knew this was not sustainable as we continued to grow and add clients. When we began looking for a solution to this issue, we decided to check out the Active Record connection object. The Active Record connection object is where Active Record keeps all the information needed to communicate with your database. We hoped this might be a good place to store a configuration hash.

00:14:39.210

Upon diving into a console to investigate, we discovered something unexpected: instead of an Active Record connection object, we found an Octopus proxy object that our Octopus sharding gem had created. This was a complete surprise to us, so we began exploring our gem’s source code to figure out where this Octopus proxy object came from. Once we located it, we realized that it already had helper methods for us to access our sharding configuration.

00:15:08.730

Problem solved: rather than hitting Redis every single time we made a MySQL request, we simply needed to interact with our local Active Record connection object. One major takeaway from this whole experience is the importance of knowing your gems. It’s easy to include a gem in your gem file, but when you do, ensure you have a general understanding of how it works.
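The talk's fix relied on helper methods the Octopus proxy object already exposed, which are not reproduced here. The general idea, keeping the sharding configuration in process memory instead of fetching it on every request, can be sketched independently of any gem. Everything below is hypothetical: `FakeConfigStore` stands in for the remote store and `ShardLookup` is an illustrative name.

```ruby
# Hypothetical remote store holding the client-to-shard mapping.
class FakeConfigStore
  attr_reader :read_count

  def initialize(config)
    @config = config
    @read_count = 0
  end

  def fetch_sharding_config
    @read_count += 1
    @config.dup
  end
end

# Loads the mapping once per process and answers later lookups from memory.
class ShardLookup
  def initialize(store)
    @store = store
  end

  def shard_for(client_id)
    sharding_config.fetch(client_id)
  end

  private

  # Memoized: the remote store is only read on the first call.
  def sharding_config
    @sharding_config ||= @store.fetch_sharding_config
  end
end

store = FakeConfigStore.new({ 1 => "shard_a", 2 => "shard_b" })
lookup = ShardLookup.new(store)
shards = [1, 2, 1, 1, 2].map { |client_id| lookup.shard_for(client_id) }
```

One trade-off of this approach is that an in-memory copy goes stale if the mapping changes, so a real system would need some way to reload it, for example on deploy or process restart.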

00:15:40.230

I’m not saying that you need to read the source code for every gem, because that would be impractical and time-consuming. However, consider setting up each gem manually in a console the first time you use it, so you can see what's happening and how it’s being configured. If we had a better understanding of how our Octopus sharding gem was set up, we could have avoided a lot of headaches.

00:16:04.030

That said, once again, caching locally—in this case, using the Active Record framework as a cache—is always going to be faster and easier than external requests. These strategies can help replace your datastore hits. Now, I want to shift gears and discuss how you can use Ruby and Rails to avoid unnecessary datastore hits.

00:16:35.770

I’m sure some of you are looking at this and thinking, 'Duh, I already know how to do that!' But let’s pause for a moment, as it may not be as obvious as you think. For instance, how many of you have written code like this? Come on, I know you’re out there because I know I've written code like this.

00:17:02.470

Okay, this code looks pretty good, right? If there are no user IDs, we skip all this user processing, so it’s fine, right? Unfortunately, that assumption is incorrect. This is not fine! Let me explain why. It turns out if you execute this 'where' clause with an empty array, you're actually going to be hitting the database.

00:17:27.220

Notice this 'where 1 = 0' statement: this is what Active Record uses to ensure no records are returned. Sure, it’s a fast, one-millisecond query, but if you execute this query millions of times, it can easily overwhelm your database and slow you down. So how do we update this code to make our site reliability engineers love us? You have two options.

00:17:56.890

The first option is to avoid running that MySQL lookup unless you absolutely have to. You can do that with a simple Ruby expression. By doing this, you can save yourself from making a worthless datastore hit, ensuring your database won’t be overwhelmed with unnecessary calls. In addition to not overwhelming your database, this will also speed up your code.
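The slide code is not part of this transcript, so here is a plain-Ruby sketch of the kind of guard being described. `FakeUserTable` and `where_id_in` are hypothetical stand-ins for a model and its `where` lookup; the only point is that the guarded version never reaches the data store when the ID list is empty.

```ruby
# Hypothetical stand-in for a model class; counts every query it is asked to run.
class FakeUserTable
  attr_reader :query_count

  def initialize(users)
    @users = users
    @query_count = 0
  end

  # Like a real lookup, this costs a round trip even when ids is empty.
  def where_id_in(ids)
    @query_count += 1
    @users.select { |user| ids.include?(user[:id]) }
  end
end

# Always queries, even when there is nothing to look up.
def user_names_unguarded(table, user_ids)
  table.where_id_in(user_ids).map { |user| user[:name] }
end

# Returns early when there are no IDs, so no query is made.
def user_names_guarded(table, user_ids)
  return [] if user_ids.empty?

  table.where_id_in(user_ids).map { |user| user[:name] }
end

table = FakeUserTable.new([{ id: 1, name: "Ada" }, { id: 2, name: "Grace" }])

user_names_unguarded(table, [])
queries_without_guard = table.query_count

user_names_guarded(table, [])
queries_with_guard = table.query_count - queries_without_guard
```

Both methods return an empty array for an empty ID list; the difference is that only the unguarded one pays for a query to find that out.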

00:18:24.700

If you run this chunk of code 10,000 times, that useless MySQL lookup will cost you over half a second. If instead you add that simple line of Ruby to avoid making that MySQL request and run a similar block of code 10,000 times, it’ll take less than a hundredth of a second!

00:18:52.860

As you can see, there is a significant difference between hitting MySQL unnecessarily 10,000 times and running plain old Ruby to accomplish the same task 10,000 times. That difference can accumulate, significantly impacting your application’s performance. A lot of people look at that top chunk of code and their first inclination is to say, 'Ruby is slow.' But that couldn't be further from the truth. As we’ve seen, a simple line of Ruby is hundreds of times faster in this case. Ruby is not slow; hitting the database is what’s slow.

00:19:21.600

Be vigilant for situations like this in your code where it might make a database request you don’t expect. I’m sure some of you Rails developers are probably thinking, 'I’m not writing code like that; actually, I chained a bunch of scopes to my where clause, so I have to pass that empty array, otherwise my scope chain breaks.' Thankfully, while Active Record doesn’t handle empty arrays well, it does provide an option for handling empty scopes — and that is the 'none' scope.

00:19:46.790

None is an Active Record query method that returns a chainable relation with zero records. But more importantly, it does this without hitting the database! Let’s see this in action: from before, we know that if we execute that 'where' clause with our empty array, we’ll hit the database, especially with all our scopes attached. If instead we replace that where clause with the 'none' scope, boom! We’re no longer hitting the database, and all our scopes still chain together successfully.
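In Active Record itself, the call is simply `Model.none`. To show why that avoids the database while still chaining, here is a plain-Ruby sketch of the underlying null-object idea. It is not how Active Record is implemented: `CountingTable`, `Relation`, `NullRelation`, and `active_users` are all hypothetical names for illustration.

```ruby
# Hypothetical stand-in for a database table that counts queries.
class CountingTable
  attr_reader :query_count

  def initialize(rows)
    @rows = rows
    @query_count = 0
  end

  def run(filters)
    @query_count += 1
    @rows.select { |row| filters.all? { |filter| filter.call(row) } }
  end
end

# A lazily evaluated, chainable query: nothing runs until to_a is called.
class Relation
  def initialize(table, filters = [])
    @table = table
    @filters = filters
  end

  def where(&filter)
    Relation.new(@table, @filters + [filter])
  end

  def to_a
    @table.run(@filters)
  end
end

# Chains like a Relation but already knows the answer is empty, so it never
# touches the table.
class NullRelation
  def where(&_filter)
    self
  end

  def to_a
    []
  end
end

# Starts from a null relation when there are no IDs, then chains as usual.
def active_users(table, user_ids)
  base =
    if user_ids.empty?
      NullRelation.new
    else
      Relation.new(table).where { |row| user_ids.include?(row[:id]) }
    end
  base.where { |row| row[:active] }
end

table = CountingTable.new([{ id: 1, active: true }, { id: 2, active: false }])
empty_result = active_users(table, []).to_a
queries_after_empty = table.query_count
```

The caller chains `where` and calls `to_a` the same way in both branches; only the non-empty branch ever costs a query.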

00:20:16.270

Be on the lookout for tools like these in your gems and frameworks that will enable you to work smarter with empty data sets. Importantly, never assume your library, gem, or framework will not make a database request when processing an empty dataset. Remember what they say about assuming!

00:20:53.370

Ruby has many easily accessible libraries and gems, and their ease of use can lull you into complacency. When you work with a library, gem, or framework, make sure you have a general understanding of how it works under the hood. One of the simplest ways to gain a better understanding is through logging. Go ahead and log the debug information for your framework, every gem, and each of your related services. When you're done, load some application pages, run background workers, or even jump into a console and run some commands. Then look at the logs produced.

00:21:34.750

Those logs will reveal how your code interacts with your data stores, and some of that interaction may not be what you expect. I cannot stress enough how valuable something as simple as reading logs can be for optimizing your application and identifying those unnecessary datastore hits. This concept of preventing useless datastore hits doesn't just apply to MySQL; it can be applied to any datastore you're working with.
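How you turn on debug logging depends on each framework and gem, so the following is only a generic sketch using Ruby's standard `Logger`. It wraps a hypothetical data store client (`FakeStore`) in a hypothetical `LoggingClient` that logs every call, then tallies the log lines to surface requests that repeat.

```ruby
require "logger"
require "stringio"

# Hypothetical data store client.
class FakeStore
  def initialize(data)
    @data = data
  end

  def get(key)
    @data[key]
  end
end

# Wraps a client and writes a debug line for every request it forwards.
class LoggingClient
  def initialize(client, logger)
    @client = client
    @logger = logger
  end

  def get(key)
    @logger.debug("GET #{key}")
    @client.get(key)
  end
end

# Builds a debug-level logger with a compact "SEVERITY message" format.
def build_debug_logger(io)
  logger = Logger.new(io)
  logger.level = Logger::DEBUG
  logger.formatter = proc { |severity, _time, _progname, message| "#{severity} #{message}\n" }
  logger
end

log_output = StringIO.new
client = LoggingClient.new(
  FakeStore.new({ "index_name:1" => "vulnerabilities_client_1" }),
  build_debug_logger(log_output)
)
3.times { client.get("index_name:1") }

# Count identical log lines to see which requests are being repeated.
calls_per_line = log_output.string.lines.map(&:chomp).tally
```

Tallying identical lines like this is a quick way to spot the same key or query being requested over and over.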

00:22:03.700

At Kenna, we’ve ended up using Ruby to prevent datastore hits to MySQL, Redis, and Elasticsearch. Here's how we did it: every night at Kenna, we build intricate reports for our clients from their asset and vulnerability data. These reports begin with a reporting object that holds all the logic needed to determine which assets and vulnerabilities belong to a report.

00:22:39.830

Each night, to create that beautiful reporting page, we previously made over 20 calls to Elasticsearch and multiple calls to MySQL. My team and I did a lot of work ensuring these calls were fast, but it still took us hours every night to generate the reports. Eventually, we reached a point where we had so many reports in our system that we couldn't finish all of them overnight. Clients would literally wake up in the morning to find their reports weren’t ready, and that became a problem.

00:23:10.160

My team and I started brainstorming how to resolve this issue. The first thing we considered was looking at what data these reports contained. We asked ourselves, 'How many reports are in our system?' The answer was over 25,000! That was a solid number for us, especially since we had only had about 10,000 a few months earlier.

00:23:45.020

Next, we looked at the average asset count per report. Just over 1,600. If you remember, I mentioned earlier that the average client has 60,000 assets, so seeing that 1,600 number felt quite low. Then we examined how many of these reports had zero assets. To our shock, over 10,000 of them, which means more than a third of our reports contained no data.

00:24:11.130

If they contained no data, what was the point of making all these Elasticsearch, MySQL, and Redis calls, knowing they would return nothing? Lightbulb moment! We found we could skip the data stores for empty reports. By adding a simple line of Ruby to skip reports that had no data, we managed to reduce our processing time from over 10 hours to just 3!
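The guard for empty reports can be sketched the same way. This is an illustration, not the production code: `FakeDataStores` and `build_report` are hypothetical, and the sketch assumes the asset count is already known on the report record, which the talk does not spell out.

```ruby
# Hypothetical stand-in for the data stores a report has to query.
class FakeDataStores
  attr_reader :call_count

  def initialize
    @call_count = 0
  end

  def query(report_id)
    @call_count += 1
    "results for report #{report_id}"
  end
end

# Illustrative figure; the talk mentions over 20 Elasticsearch calls per report.
CALLS_PER_REPORT = 20

def build_report(report, stores)
  # The guard: a report with no assets has nothing to query for.
  return nil if report[:asset_count].zero?

  Array.new(CALLS_PER_REPORT) { stores.query(report[:id]) }
end

reports = [
  { id: 1, asset_count: 1_600 },
  { id: 2, asset_count: 0 },
  { id: 3, asset_count: 0 }
]
stores = FakeDataStores.new
built = reports.map { |report| build_report(report, stores) }
```

Here two of the three reports are skipped, so the stand-in data stores receive 20 calls instead of 60.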

00:24:36.310

That simple line of Ruby helped us avoid countless unnecessary data store hits, resulting in tremendous improvements in our processing time. I like to refer to this strategy of using Ruby to prevent useless datastore hits as 'using database guards.' It’s simple but often overlooked when writing code.

00:25:05.550

We’re almost there! This final story I have for you actually happened pretty recently. Remember those Sidekiq workers I talked about at the beginning of the presentation? As I mentioned earlier, one of the main things we use Redis for is to throttle these Sidekiq workers. Given our sharded database setup, we only want a set number of workers to operate on any database at a given time.

00:25:45.080

In the past, we’ve found that too many workers on a database lead to overwhelming it and slowing it down. So we started with 45 workers per database, but after implementing these improvements I just mentioned, our databases were feeling well-balanced. We thought, 'Why not try to increase the number of workers to boost our data throughput?' So we did just that, raising the number of workers to 70 for each database.

00:26:18.950

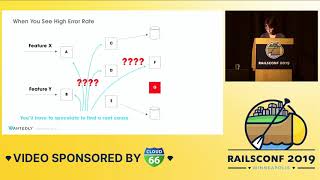

Of course, we kept a close eye on MySQL, and it seemed all our hard work paid off; MySQL was still happy as a clam! My team and I were feeling proud, enjoying our moment. But that celebration didn’t last long. As we learned earlier, often when you extinguish one fire, another one ignites elsewhere. MySQL was content, but then overnight, we received a Redis high traffic alert.

00:26:56.310

By examining our Redis traffic graphs, we saw times when we were reading over 50 megabytes per second from Redis. So that little 7.8 megabytes per second we noticed earlier doesn’t look so bad now. This load was caused by the hundreds of thousands of requests we were making to throttle the workers, which you can see reflected in this Redis request graph.

00:27:38.520

Before a worker can pick up a job, it must first check with Redis to see how many workers are currently operating on that database. If 70 workers are already on the database, the worker will not pick up the job. If there are fewer than 70, then it can jump in. All of these calls to Redis overwhelmed the system and resulted in numerous errors within our application.
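To make the cost of that check concrete, here is a simplified sketch of a counter-based throttle. It is not Kenna's implementation: `FakeCounterStore` stands in for Redis, the `workers:<database>` key is invented, and the check-then-increment shown here is not atomic, which a real throttle would need to address.

```ruby
# Hypothetical stand-in for Redis that counts every request it serves.
class FakeCounterStore
  attr_reader :request_count

  def initialize
    @counts = Hash.new(0)
    @request_count = 0
  end

  def get(key)
    @request_count += 1
    @counts[key]
  end

  def incr(key)
    @request_count += 1
    @counts[key] += 1
  end
end

WORKER_LIMIT = 70 # workers allowed per database, as in the talk

# Returns true if the worker may take the job, false if the database is full.
# Every attempt costs at least one request to the counter store.
def try_pick_up_job(store, database_name)
  key = "workers:#{database_name}"
  return false if store.get(key) >= WORKER_LIMIT

  store.incr(key)
  true
end

store = FakeCounterStore.new
attempts = Array.new(75) { try_pick_up_job(store, "shard_a") }
accepted = attempts.count(true)
```

Even in this toy version, 75 pickup attempts generate 145 requests to the counter store, and rejected workers still pay for the check each time they retry.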

00:28:10.560

Our application and Redis were literally dropping important requests because Redis was so swamped with throttling requests. Given our previous lessons learned about handling issues, our first thought was: how do we use Ruby or Rails to solve this problem? We considered caching the workers' state in Redis, but unfortunately, after brainstorming for a few days, no one on the team came up with any great suggestions.

00:28:47.910

So we decided to take the simplest route: we completely removed the throttling. When we did, the results were dramatic. There was an immediate drop in Redis requests, which was a huge win! More importantly, the Redis traffic spikes we had been observing overnight completely vanished. After removing those redundant requests, all the application errors we had been facing resolved themselves.

00:29:19.330

We kept monitoring MySQL closely afterward, and it remained happy as a clam. The moral of the story here is that sometimes you need to use Ruby or Rails to replace your datastore hits; other times, you may need to prevent unnecessary datastore hits. And occasionally, you might just need to completely remove outdated data store hits you no longer need.

00:29:53.540

This is particularly crucial for those of you with rapidly expanding and evolving applications. Ensure you're periodically auditing all the tools you're using to confirm they’re still necessary. If anything isn’t being utilized, get rid of it! Ultimately, this can save your data store from a great deal of headache.

00:30:22.910

As you build and scale your applications, keep in mind these five tips and remember that every data store hit counts. It doesn’t matter how fast it is; if you multiply it by a million, it’s going to hurt your data stores. You wouldn’t just toss dollar bills in the air because a single dollar bill is cheap, right? Don't throw around your datastore hits, no matter how fast they may seem. Make sure every external request your application is making is absolutely necessary, and I guarantee your site reliability engineers will appreciate it.

00:30:56.040

With that, my job here is done. Thank you all so much for your time and attention. Does anyone have any questions?

00:31:17.150

We’ve got five minutes for questions, and I’ll also be available afterwards if anyone wants to chat. Excellent! So the question was: were we able to downgrade our Redis or RDS instance after decreasing the number of hits we were making to MySQL? The answer is that we did not. We left it at its current size, knowing that, at some point, we may encounter another bottleneck and would have to repeat this entire exercise.

00:32:06.130

Oh, great question! So the question is whether we learned any cache-busting lessons from the Redis example. We did learn a lot from these examples, and one of the crucial lessons is to set a default cache expiration. Rails lets you do this in your configuration files. We initially neglected this and had keys that had been lying around for five-plus years. Set a default cache expiration so that every key will expire eventually.
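In Rails, cache stores accept an `expires_in` option for this. To show the behavior without a Rails app, here is a plain-Ruby sketch of a cache with a default expiration. `ExpiringCache` is a hypothetical class, and the injectable `clock` exists only so the example can move time forward.

```ruby
# A tiny in-memory cache where every key expires unless told otherwise.
class ExpiringCache
  def initialize(default_expires_in:, clock: -> { Time.now })
    @default_expires_in = default_expires_in # seconds
    @clock = clock
    @entries = {}
  end

  # Falls back to the default expiration when none is given.
  def write(key, value, expires_in: @default_expires_in)
    @entries[key] = { value: value, expires_at: @clock.call + expires_in }
  end

  # Returns nil for missing or expired keys, and drops expired entries.
  def read(key)
    entry = @entries[key]
    return nil if entry.nil?

    if @clock.call >= entry[:expires_at]
      @entries.delete(key)
      return nil
    end
    entry[:value]
  end
end

now = Time.at(0)
cache = ExpiringCache.new(default_expires_in: 3600, clock: -> { now })
cache.write("report:1", "cached page")
fresh = cache.read("report:1")

now += 3601 # a little over an hour later
stale = cache.read("report:1")
```

Because the default applies to every write, no key can linger indefinitely just because someone forgot to set an expiration on it.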

00:32:43.930

After that, figuring out how long a cache should remain active takes some tweaking. Set your best guess initially and then observe how your load looks. See how clients are reacting to the data — do they think it’s stale or not? From there, make necessary adjustments.

00:33:18.610

So the question is: did we face any issues transferring what we had stored in Redis to our local memory cache? The short answer is: no. In this case, the cache is so small; it’s literally mapping this client to this name, and most of the time, that hash was only five to ten keys. The payoff for this change was substantial.