00:00:16.720

Hello, and welcome to the very last session of RailsConf! When I leave this stage, they are going to burn it down.

00:00:24.640

I have a couple of items of business before I launch into my presentation proper. The first is turning on my clicker. I work for LivingSocial, and we are hiring. If this fact is intriguing to you, please feel free to come and talk to me afterwards.

00:00:36.399

Also, our recruiters brought a ton of little squishy stress balls shaped like little brains. As far as I know, this was coincidental, but I love the tie-in, so I brought the whole bag. If you would like an extra brain, please come talk to me after the show.

00:00:47.440

A quick note about accessibility: if you have any trouble seeing my slides, hearing my voice, or following my weird trains of thought—or maybe you just like spoilers—you can get a PDF with both my slides and my script at this URL: tinyurl.com/shorts-railsconf.

00:01:03.840

I also have it on a thumb drive, so if the conference Wi-Fi does what it usually does, please go see Evan Light in the second row.

00:01:14.880

I'm going to leave this up for a few more minutes. I also want to give a shout-out to the Opportunity Scholarship program here at RailsConf. This program is for people who wouldn't usually take part in our community or who might just want a friendly face during their first time at RailsConf.

00:01:34.960

I'm a huge fan of this program; I think it's a great way to welcome new people and new voices into our community. This is the second year that I've volunteered as a guide, and I've met someone with a fascinating story to tell. If you're a seasoned conference veteran, I strongly encourage you to apply next year.

00:01:53.600

Okay, programming is hard. It's not quantum physics, but neither is it falling off a log. If I had to pick just one word to explain why programming is hard, that word would be 'abstract.' I asked Google to define 'abstract,' and here's what it said: 'existing in thought or as an idea but not having a physical or concrete existence.'

00:02:13.760

I usually prefer defining things in terms of what they are, but in this case, I find the negative definition extremely telling. Abstract things are hard for us to think about precisely because they don't have a physical or concrete existence, and that's what our brains are wired for.

00:02:38.720

I normally prefer the kind of talk where the speaker just launches right in and forces me to keep up, but this is a complex idea and it's the last talk of the last day, and I'm sure you're all as fried as I am. So here's a little background.

00:02:50.480

I got the idea for this talk while listening to the Ruby Rogues podcast episode with Glenn Vanderburg. In that episode, Glenn said, 'The best programmers I know all have some good techniques for conceptualizing or modeling the programs that they work with.'

00:03:08.159

It tends to be sort of a spatial, visual model, but not always. He said that what's going on is that our brains are geared towards the physical world, towards dealing with our senses and integrating that sensory input, but the work we do as programmers is all abstract.

00:03:18.879

It makes perfect sense that you would want to find techniques to rope in the physical sensory parts of your brain into this task of dealing with abstractions. But we don't ever teach anybody how to do that, or even that they should do that.

00:03:31.920

When I heard this, I started thinking about the times that I've stumbled across some technique for doing something like this and I've been really excited to find a way of translating a programming problem into some form that my brain could really get a handle on.

00:03:56.000

I was like, 'Yeah, yeah, brains are awesome, and we should be teaching people that this is a thing they can do.' Then I thought about it sometime later and realized, 'Wait a minute, no, oops, brains are horrible, and teaching people these tricks would be totally irresponsible if we also didn't warn them about cognitive bias.'

00:04:10.319

I'll get to that in a little bit. This talk is in three parts: part one—brains are awesome, and as Glenn said, you can rope the physical and sensory parts of your brain, as well as a few others, into helping you deal with abstractions; part two—brains are horrible and they lie to us all the time; and part three—I have an example of an amazing hack that you just might be able to come up with.

00:04:42.080

Our brains are extremely well adapted for dealing with the physical world. Our hindbrains, which regulate respiration, temperature, and balance, have been around for half a billion years or so. But when I write software, I'm leaning hard on parts of the brain that are relatively new in evolutionary terms, and using some relatively expensive resources.

00:05:07.199

Over the years, I've built up a small collection of techniques and shortcuts that engage specialized structures in my brain to help me reason about programming problems. Here's the list: I'm going to start with a category of visual tools that let us leverage our spatial understanding of the world.

00:05:35.199

These tools allow us to discover relationships between different parts of a model or just to stay oriented when we're trying to reason through a complex problem. I'm just going to list out a few examples of this category quickly because I think most developers are likely to encounter these either in school or on the job.

00:06:03.840

They all have the same basic shape: they're boxes and arrows. There are entity-relationship diagrams that help us understand how our data is modeled. We use diagrams to describe data structures like binary trees, linked lists, and so on. For state machines of any complexity, diagrams are often the only way to make any sense of them.

00:06:35.639

I could go on, but as I said, most of us are probably used to using these at least occasionally. There are three things that I like about these tools. First, they lend themselves really well to standing up in front of a whiteboard.

00:06:55.679

Possibly with a co-worker. Just standing up and moving around a little bit helps get the blood flowing and gets your brain perked up. Second, diagrams help us offload some of the work of keeping track of different concepts by attaching those concepts to objects in two-dimensional space.

00:07:10.720

Our brains have a lot of hardware support for keeping track of where things are in space. Third, our brains are really good at pattern recognition, so visualizing our designs can give us a chance to spot certain kinds of problems just by looking at their shapes before we ever start typing code in an editor. I think that's pretty cool.

00:07:36.880

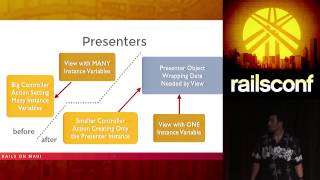

Here's another technique that makes use of our spatial perception skills. If you saw Sandi's talk yesterday, you'll know this one: it's called the 'squint test.' It's very straightforward. You open up some code, and you either squint your eyes at it or decrease the font size.

00:08:01.120

The point is to look past the words and check out the shape of the code. This is a pathological example that I used in a refactoring talk last year. You can use this technique as an aid to navigation as a way of zeroing in on high-risk areas of code or just plain to get oriented in a new codebase.

00:08:31.440

There are a few specific patterns that you can look for, and you'll find others as you do more of it. Is the left margin ragged as it is here? Are there any ridiculously long lines? There's one towards the bottom. What is your syntax highlighting telling you? Are there groups of colors or colors that sort of spread out?
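The squint test is meant to be done with your eyes, but a crude text version makes the idea concrete. The sketch below is a hypothetical helper (the name `silhouette` and the 80-column threshold are assumptions, not part of any tool mentioned in the talk). It keeps each line's spaces, turns every other character into `#`, and flags overly long lines, so a ragged left margin and the outliers stand out.

```python
def silhouette(source: str, long_line: int = 80) -> list[str]:
    """Return the 'shape' of each line of source code.

    Spaces (tabs are first expanded to 4 columns) are kept, every other
    character becomes '#', and lines longer than `long_line` are flagged.
    """
    shapes = []
    for line in source.expandtabs(4).splitlines():
        shape = "".join(" " if ch == " " else "#" for ch in line)
        if len(line) > long_line:
            shape += "  <- long line"
        shapes.append(shape)
    return shapes


if __name__ == "__main__":
    sample = "def f(x):\n    if x:\n        return 1\n    return 0\n"
    print("\n".join(silhouette(sample)))
```

Deep nesting shows up as a staircase of indentation, which is the same cue the squint test relies on.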

00:08:55.680

There's a lot of information you can glean from this. Incidentally, I’ve only ever met one blind programmer, and we didn't really talk about this stuff. If any of you have found that a physical or cognitive disability gives you an interesting way of looking at code or understanding code, please come talk to me.

00:09:14.560

I’d love to hear your story. Next, I have a couple of techniques that involve the clever use of language. The first one is deceptively simple but does require a prop. It doesn't have to be that big; you can totally get away with using the souvenir edition.

00:09:39.200

This is my daughter's duck cow bath toy. What you do is you keep a rubber duck on your desk. When you get stuck, you put the rubber duck on top of your keyboard and explain your problem aloud to the duck. It sounds absurd, right? But there's a good chance that in the process of putting your problem into words, you'll discover that there's an incorrect assumption you've been making.

00:10:07.920

You might think of some other possible solution. I've also heard of people using teddy bears or other stuffed animals. One of my co-workers told me that she learned this as the 'pet rock technique'; pet rocks were a thing in the 1970s.

00:10:32.959

She also finds it useful to compose an email describing the problem. So for those of you who, like me, think better when you're typing or writing than when you're speaking, that can be a nice alternative.

00:10:55.520

The other linguistic hack I got from Sandi Metz. In her book 'Practical Object-Oriented Design in Ruby' (POODR for short), she describes a technique for figuring out which object a method should belong to.

00:11:13.920

I tried paraphrasing this, but honestly, Sandi describes it much better than I could, so I'm just going to read it verbatim. She says, 'How can you determine if the Gear class contains behavior that belongs somewhere else? One way is to pretend that it’s sentient and interrogate it.'

00:11:35.360

If you rephrase every one of its methods as a question, asking the question ought to make sense. For example, 'Please, Mr. Gear, what is your ratio?' seems perfectly reasonable. But 'Please, Mr. Gear, what are your gear inches?' is on shaky ground.

00:11:58.720

'Please, Mr. Gear, what is your tire size?' is just downright ridiculous. This is a great way to evaluate objects in light of the Single Responsibility Principle, and I'll come back to that thought in just a minute.
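Here is a rough Python translation of the kind of design these questions push toward. It is loosely modeled on the bicycle example in Sandi Metz's book, whose code is Ruby. The `Wheel` class, the attribute names, and the numbers are illustrative assumptions. Once the rim and tire live on a wheel, asking the gear for its tire size no longer makes sense, because the gear no longer knows it.

```python
class Wheel:
    """Hypothetical extracted object: it can sensibly answer
    'what is your diameter?' and 'what is your tire size?'."""

    def __init__(self, rim: float, tire: float) -> None:
        self.rim = rim
        self.tire = tire

    def diameter(self) -> float:
        # The tire adds its height on both sides of the rim.
        return self.rim + self.tire * 2


class Gear:
    def __init__(self, chainring: int, cog: int, wheel: Wheel) -> None:
        self.chainring = chainring
        self.cog = cog
        self.wheel = wheel

    def ratio(self) -> float:
        # "Please, Mr. Gear, what is your ratio?" is a fair question.
        return self.chainring / self.cog

    def gear_inches(self) -> float:
        # The shaky question: the answer depends on the wheel, so Gear
        # only combines its own ratio with what the wheel reports.
        return self.ratio() * self.wheel.diameter()


if __name__ == "__main__":
    gear = Gear(chainring=52, cog=11, wheel=Wheel(rim=26, tire=1.5))
    print(round(gear.ratio(), 2), round(gear.gear_inches(), 1))
```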

00:12:05.040

But first, I've described the rubber duck and 'Please, Mr. Gear' as techniques to engage linguistic reasoning, but that doesn't quite feel right. Both techniques force us to put our questions into words, but words themselves are tools.

00:12:25.440

We use words to communicate our ideas to other people. As primates, we've evolved a set of social skills and behaviors for getting our needs met as part of a community.

00:12:45.760

So while these techniques do involve using language centers of your brain, I think they reach beyond those centers to tap into our social reasoning. The rubber duck technique works because putting your problem into words forces you to organize your understanding of a problem in such a way that you can verbally lead another mind through it.

00:13:15.760

'Please, Mr. Gear' lets us anthropomorphize an object and talk to it to discover whether it conforms to the Single Responsibility Principle. To me, the telltale phrase in Sandi's description of this technique is 'asking the question ought to make sense.'

00:13:40.880

Most of us have an intuitive understanding that it might not be appropriate to ask Alice about something that is Bob's responsibility. Interrogating an object or a person helps us use that social knowledge and gives us an opportunity to notice that a particular question doesn't make sense to ask of any of our existing objects.

00:14:00.160

This might prompt us to ask whether we should create a new object to fill that role instead. Now, personally, I would have considered POODR a worthwhile purchase if 'Please, Mr. Gear' were the only thing I got from it.

00:14:16.640

But in this book, Sandi also made what I thought was a very compelling case for UML sequence diagrams. Where 'Please, Mr. Gear' is a good tool for discovering which objects should be responsible for a particular method, a sequence diagram can help you analyze the runtime interactions between several different objects.

00:14:36.080

At first glance, this looks kind of like something in the boxes and arrows category of visual and spatial tools, but again, this feels more like it's tapping into that social understanding we have. This can be a good way to get a sense for when an object is bossy.

00:15:00.320

It can also show when performing a task involves a complex sequence of interactions, or when objects are playing too many different roles to keep track of. Rather than turn this into a lecture on UML, I'm just going to tell you to go buy Sandi's book.

00:15:28.640

And if for whatever reason you cannot afford it, come talk to me later and we'll work something out. Now, for the really hand-wavy stuff: metaphors can be a really useful tool in software.

00:15:46.960

The turtle graphics system in Logo is a great metaphor. Has anybody used Logo at any point in their life? About half the people; that's really cool! We've probably all played with drawing something on the screen at some point.

00:16:07.920

But most of the rendering systems that I've used are based on a Cartesian coordinate system, a grid. Logo's turtle metaphor instead encourages the programmer to imagine themselves as the turtle, and to use that understanding to figure out what to do next when they get stuck.

00:16:31.200

One of the original creators of Logo called this 'body syntonic reasoning.' It was specifically developed to help children solve problems, but the turtle metaphor works for everybody, not just for kids.

00:16:57.120

Cartesian grids are great for drawing boxes, mostly great. But it can take some very careful thinking to figure out how to use XY coordinate pairs to draw a spiral, or a star, or a snowflake, or a tree. Choosing a different metaphor can make different kinds of solutions easy where before they seemed like too much trouble to be worth bothering with.
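To make the contrast concrete, here is a minimal, hypothetical turtle written in Python. It is not Logo, and it is not Python's built-in `turtle` module (which needs a display). The turtle knows only its own position and heading and moves relative to itself, so a five-pointed star is just "go forward, turn right 144 degrees" five times. No one has to work out the star's vertex coordinates on a grid.

```python
import math


class Turtle:
    """A tiny turtle: it moves relative to its own heading and records its path."""

    def __init__(self) -> None:
        self.x, self.y = 0.0, 0.0
        self.heading = 0.0  # degrees; 0 points along +x
        self.path = [(self.x, self.y)]

    def forward(self, distance: float) -> None:
        rad = math.radians(self.heading)
        self.x += distance * math.cos(rad)
        self.y += distance * math.sin(rad)
        self.path.append((self.x, self.y))

    def right(self, degrees: float) -> None:
        self.heading = (self.heading - degrees) % 360


def star(turtle: Turtle, size: float) -> None:
    # Five "forward, turn 144" moves trace a five-pointed star.
    for _ in range(5):
        turtle.forward(size)
        turtle.right(144)


if __name__ == "__main__":
    t = Turtle()
    star(t, 100)
    for x, y in t.path:
        print(f"{x:8.2f} {y:8.2f}")
```

The 144 comes from the turtle's own point of view: the five turns must add up to two full rotations (720 degrees) for the path to close into a star, and 720 / 5 = 144.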

00:17:10.080

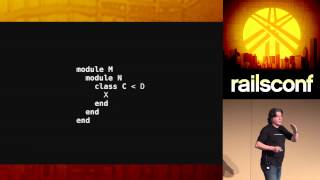

James Ladd, in 2008, wrote a couple of interesting blog posts about what he called 'East Oriented Code.' Imagine a compass overlaid on top of your screen. In this model, messages that an object sends to itself go south, and any data returned from those calls goes north.

00:17:48.960

Communication between objects is the same thing rotated 90 degrees: messages sent to other objects go east, and the return values from those messages flow west. What James Ladd suggests is that code that sends messages to other objects, where information mostly flows east, is easier to extend and maintain than code that looks at data and then decides what to do with it, where information flows west.

00:18:08.320

This is just the design principle known as 'tell, don't ask,' but the metaphor of the compass recasts this in a way that helps us use our background spatial awareness to keep this principle in mind at all times.
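Here is a small Python sketch of the difference. The `Order` and `Log` classes are hypothetical. Returning `self` from the "tell" method is one common reading of the East-oriented style, not a quote from James Ladd's posts. In the "ask" version, data flows west back to the caller, which then makes the decision. In the "tell" version, the caller just sends a message east and the order decides what to report.

```python
class Log:
    def __init__(self) -> None:
        self.lines: list[str] = []

    def write(self, text: str) -> "Log":
        self.lines.append(text)
        return self


class Order:
    def __init__(self, number: str, shipped: bool) -> None:
        self._number = number
        self._shipped = shipped

    # West-flowing ("ask"): expose state so the caller can decide.
    def is_shipped(self) -> bool:
        return self._shipped

    def number(self) -> str:
        return self._number

    # East-flowing ("tell"): the order decides what to say, and returns
    # itself rather than data, so information keeps moving east.
    def report_status(self, log: Log) -> "Order":
        status = "shipped" if self._shipped else "pending"
        log.write(f"{self._number}: {status}")
        return self


def report_by_asking(order: Order, log: Log) -> None:
    # The caller pulls data back (west) and makes the decision itself.
    status = "shipped" if order.is_shipped() else "pending"
    log.write(f"{order.number()}: {status}")
```

Both styles produce the same output. The difference is where the decision lives: if a new status is added, only `Order.report_status` changes, while every "ask" caller would have to be found and updated.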

00:18:30.560

In fact, there are plenty of ways we can use our background awareness to analyze our code. Code smells are an entire category of metaphors that we use to talk about our work, and the name 'code smell' itself is a metaphor for anything about your code that hints at a design problem, which makes it a meta-metaphor.

00:19:07.679

Some code smells have names that are extremely literal—duplicated code, long method, and so on—but some of these are delightfully suggestive: feature envy, refused bequest, primitive obsession. To me, the names on the right have a lot in common with 'Please, Mr. Gear.'

00:19:35.519

These names are chosen to hook into something in our social awareness to give a name to a pattern of dysfunction. By naming the problem, they suggest a possible solution.

00:19:55.839

So these are most of the shortcuts that I've accumulated over the years, and I hope that this can be the start of a similar collection for some of you. Now, the part where I try to put the fear into you: evolution has designed our brains to lie to us.

00:20:22.240

Brains are expensive: the human brain accounts for just 2 percent of body mass, but 20 percent of our caloric intake. That's a huge energy requirement that has to be justified. Evolution as a designer does one thing: it selects for traits that allow an organism to stay alive long enough to reproduce.

00:20:45.760

It doesn't care about getting the best solution, only one that’s good enough to compete in the current landscape. Evolution will tolerate any hack as long as it meets that one goal.

00:21:03.520

As an example, I want to take a minute to talk about how we see the world around us. The human eye has two different kinds of photoreceptors. There are about 120 million rod cells in each eye; these play little or no role in color vision and are mostly used for nighttime and peripheral vision.

00:21:29.680

There are also about six or seven million cone cells in each eye, and these give us color vision but require much more light to work. The majority of cone cells are packed together in a tight little cluster near the center of the retina.

00:21:55.680

This area is what we use to focus on individual details, and it’s smaller than you might think—only 15 degrees wide. As a result, our vision is extremely directional. We have a very small area of high detail and high color, and the rest of our field of vision is more or less monochrome.

00:22:17.520

So when we look at something, our eyes see something like this. In order to turn the image on the left into the image on the right, our brains are doing a lot of work that we’re mostly unaware of.

00:22:40.559

We compensate for having such highly directional vision by moving our eyes around a lot. Our brains combine the details from these individual points of interest to construct a persistent mental model of whatever we're looking at.

00:22:55.440

These fast point-to-point movements are called saccades, and they're actually the fastest movements that the human body can make. The shorter saccades that you make when you're reading last for 20 to 40 milliseconds, while longer ones that travel through a wider arc might take 200 milliseconds, or about a fifth of a second.

00:23:16.960

What I find fascinating about this is that we don't perceive saccades. During a saccade, the eye is still sending data to the brain, but what it’s sending is a smeary blur, so the brain just edits that part out.

00:23:40.240

This process is called saccadic masking. You can see this effect for yourself next time you're in front of a mirror; lean in close and look back and forth from the reflection of one eye to the other. You won't see your eyes move.

00:24:00.000

As far as we can tell, our gaze just jumps instantaneously from one reference point to the next. And here’s where I have to wait for a moment while everybody stops doing this.

00:24:17.520

When I was preparing for this talk, I found an absolutely wonderful sentence in the Wikipedia entry on saccades. It said, 'Due to saccadic masking, the eye-brain system not only hides the eye movements from the individual, but also hides the evidence that anything has been hidden.'

00:24:41.680

Our brains lie to us, and they lie to us about having lied to us. This happens to you multiple times a second, every waking hour, every day of your life.

00:25:01.440

Of course, there's a reason for this: imagine if every time you shifted your gaze around, you got distracted by all the pretty colors. You'd be eaten by lions! But in selecting for this design, evolution made a trade-off.

00:25:22.960

The trade-off is that we are effectively blind every time we move our eyes around, sometimes for up to a fifth of a second. I wanted to talk about this partly because it's a really fun subject but also to show just one of the ways our brains are doing a massive amount of work to process information from our environment.

00:25:52.640

As programmers, if we know anything about abstractions, it's that they're hard to get right, which leads me to an interesting question: does it make sense to use any of the techniques that I talked about in part one to try to corral different parts of our brains into doing our work for us if we don't know what kinds of shortcuts they're going to take?

00:26:06.720

According to the Oxford English Dictionary, the word 'bias' seems to have entered the English language around the 1520s. It was a technical term in the game of lawn bowling, referring to a ball constructed so that it would roll in a curved path instead of a straight line.

00:26:21.760

Since then, it’s picked up a few additional meanings, but they all have the same basic connotation of something that’s skewed or a little bit off. Cognitive bias is a term for systematic errors in thinking: patterns of thought that diverge in measurable and predictable ways from the answers that pure rationality might give.

00:26:53.760

When you have some free time, I suggest that you go check out the Wikipedia page called 'List of Cognitive Biases.' There are over 150 of them, and they're fascinating reading. This list of cognitive biases has a lot in common with the earlier list of code smells.

00:27:11.680

A lot of these names are very literal, but there are a few that stand out, like 'curse of knowledge' or the 'Google effect.' And I kid you not, the 'Ikea effect.' But the parallel goes deeper than that.

00:27:35.440

This list gives names to patterns of dysfunction, and once you have a name for a thing, it’s a lot easier to recognize it and figure out what to do about it. I do want to call your attention to one particular item on this list: it’s called 'the bias blind spot.'

00:27:56.960

This is the tendency to see oneself as less biased than other people or to be able to identify more cognitive biases in others than in oneself. Sound like anybody you know? Just let that sink in for a minute.

00:28:22.240

Seriously though, in our field, we like to think of ourselves as more rational than the average person, and that just isn’t true. Yes, as programmers, we have a valuable, marketable skill that depends on our ability to reason mathematically, but we do ourselves and others a disservice if we allow ourselves to believe that being good at programming means anything other than we're good at programming.

00:28:53.680

Because as humans, we are all biased; it’s built into us from our DNA. Pretending that we aren’t biased only allows our biases to run free.

00:29:17.840

I don't have a lot of general advice for how to look for bias, but I think an obvious and necessary first step is just to ask the question, 'How is this biased?' Beyond that, I suggest that you learn about as many specific cognitive biases as you can.

00:29:35.840

That way, your brain can do what it does: look for patterns, make associations, and classify things. I think everybody should understand their own biases, because only by knowing how you're biased can you decide how to correct for that bias in the decisions that you make.

00:29:49.520

If you're not checking your work for bias, you can look right past a great solution and never know it was there. So for part three of my talk, I have an example of a solution that is simple and elegant—just about the last thing I ever would have thought of.

00:30:06.720

For the benefit of those of you who have yet to find your first gray hair, Pac-Man was a video game released in 1980 that let people maneuver around a maze eating dots while trying to avoid four ghosts. Now, playing games is fun, but we’re programmers, we want to know how things work, so let's talk about programming Pac-Man.

00:30:28.080

For the purposes of this discussion, we'll focus on just three things: Pac-Man, the ghosts, and the maze. Pac-Man is controlled by the player, so that code is basically just responding to hardware events—boring. The maze is there so that the player has some chance of avoiding the ghosts.

00:30:57.840

But it's the ghost AI that's going to make the game interesting enough that people will keep dropping quarters into a slot. By the way, video games used to cost a quarter when I was your age! So to keep things simple, we'll start with one ghost. How do we program its movement?

00:31:23.760

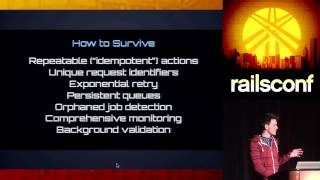

We could choose a random direction and move that way until we hit a wall, then choose another random direction. This is very easy to implement, but not much of a challenge for the player. Okay, so we could compute the distance to Pac-Man in X and Y and pick a direction that makes one of those smaller.

00:31:50.560

But then the ghost is going to get stuck in corners or behind walls because it won’t go around to catch Pac-Man. Again, it’s going to be too easy for the player. So how about instead of minimizing linear distance, we focus on topological distance?
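Both naive ghosts from this walkthrough fit in a few lines. This is a hypothetical Python sketch on a tiny made-up maze (`#` is wall, `.` is floor, cells are `(row, col)`). It does not reflect how the original arcade game worked. The greedy ghost at `(3, 1)` cannot reach Pac-Man at `(3, 3)`: the wall between them blocks the only move that shrinks the X/Y gap, so it stays put, even though a route around through the top row exists.

```python
import random

# Hypothetical U-shaped maze: '#' is wall, '.' is floor; cells are (row, col).
MAZE = [
    "#####",
    "#...#",
    "#.#.#",
    "#.#.#",
    "#####",
]
DIRECTIONS = [(-1, 0), (1, 0), (0, -1), (0, 1)]  # up, down, left, right


def is_floor(maze, cell):
    r, c = cell
    return maze[r][c] != "#"


def random_walk_step(maze, ghost, heading, rng):
    """Keep moving in `heading` until a wall blocks the way, then pick a
    random open direction. Assumes the ghost always has an open neighbor."""
    r, c = ghost
    ahead = (r + heading[0], c + heading[1])
    if is_floor(maze, ahead):
        return ahead, heading
    open_dirs = [d for d in DIRECTIONS if is_floor(maze, (r + d[0], c + d[1]))]
    heading = rng.choice(open_dirs)
    return (r + heading[0], c + heading[1]), heading


def greedy_step(maze, ghost, pacman):
    """Take the first open move that shrinks the X or Y gap to Pac-Man
    (a one-cell move changes only one of them); otherwise stay put."""
    r, c = ghost
    gap = abs(pacman[0] - r) + abs(pacman[1] - c)
    for dr, dc in DIRECTIONS:
        nxt = (r + dr, c + dc)
        if is_floor(maze, nxt) and abs(pacman[0] - nxt[0]) + abs(pacman[1] - nxt[1]) < gap:
            return nxt
    return ghost


if __name__ == "__main__":
    # The wall at (3, 2) blocks the only move that closes the gap.
    print(greedy_step(MAZE, (3, 1), (3, 3)))
```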

00:32:18.560

We can compute all possible paths through the maze, pick the shortest one that gets us to Pac-Man, and then step down it. This works fine for one ghost, but if all four ghosts use this algorithm, they’re going to wind up chasing the player in a tight little bunch instead of fanning out.
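A breadth-first search is one standard way to get the shortest topological path without literally enumerating every path. It explores the maze outward one step at a time and remembers how it reached each cell. Here is a hypothetical Python sketch on a tiny U-shaped maze. Unlike a ghost that only shrinks its X/Y distance, this ghost goes up and around the wall instead of getting stuck.

```python
from collections import deque

# Hypothetical U-shaped maze: '#' is wall, '.' is floor; cells are (row, col).
MAZE = [
    "#####",
    "#...#",
    "#.#.#",
    "#.#.#",
    "#####",
]
DIRECTIONS = [(-1, 0), (1, 0), (0, -1), (0, 1)]


def shortest_path(maze, start, goal):
    """Breadth-first search. Returns the cells from start to goal (inclusive),
    or None if goal is unreachable. Assumes the maze is enclosed by walls."""
    came_from = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            path = []
            while cell is not None:  # walk back to the start
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        r, c = cell
        for dr, dc in DIRECTIONS:
            nxt = (r + dr, c + dc)
            if maze[nxt[0]][nxt[1]] != "#" and nxt not in came_from:
                came_from[nxt] = cell
                queue.append(nxt)
    return None


def chase_step(maze, ghost, pacman):
    """Move one cell along a shortest path, or stay put if there is none."""
    path = shortest_path(maze, ghost, pacman)
    return path[1] if path and len(path) > 1 else ghost
```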

00:32:47.680

Okay, so each ghost computes all possible paths to Pac-Man and rejects any path that goes through another ghost. That shouldn't be too hard, right? I don't have a statistically valid sample, but my guess is that when asked to design an AI for the ghosts, most programmers would go through a thought process more or less like what I just walked through.
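The "reject any path through another ghost" refinement can be sketched by treating the other ghosts' cells as impassable during the search. This is again a hypothetical Python sketch. On a ring-shaped maze, the ghost at the top takes the left-hand route when it is alone and switches to the right-hand route when another ghost is in its way. Note the cost: every ghost reruns a full search on every step, and each one has to know where all the others are.

```python
from collections import deque

# Hypothetical ring-shaped maze: two routes from the top row to the bottom row.
RING = [
    "#######",
    "#.....#",
    "#.###.#",
    "#.....#",
    "#######",
]
DIRECTIONS = [(-1, 0), (1, 0), (0, -1), (0, 1)]


def shortest_path(maze, start, goal, blocked=frozenset()):
    """Breadth-first search that refuses to enter any cell in `blocked`."""
    came_from = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        r, c = cell
        for dr, dc in DIRECTIONS:
            nxt = (r + dr, c + dc)
            if maze[nxt[0]][nxt[1]] != "#" and nxt not in blocked and nxt not in came_from:
                came_from[nxt] = cell
                queue.append(nxt)
    return None


def chase_step(maze, ghost, pacman, other_ghosts=frozenset()):
    """One step along a shortest path that avoids the other ghosts."""
    path = shortest_path(maze, ghost, pacman, blocked=other_ghosts)
    return path[1] if path and len(path) > 1 else ghost
```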

00:33:17.120

So how is this solution biased? I don’t have a good name for how this is biased, so the best way I have to communicate this idea is to walk you through a very different solution. In 2006, I attended OOPSLA in Portland, a conference put on by the ACM.

00:33:48.640

As a student volunteer, I sat in on a presentation by Alexander Repenning of the University of Colorado. In his presentation, he walked through the Pac-Man problem more or less the way I just did, and then he presented this idea: you give Pac-Man a smell and model the diffusion of that smell throughout the environment.

00:34:24.960

In the real world, smells travel through the air. We certainly don't need to model each individual air molecule. What we can do instead is just divide the environment into reasonably sized logical chunks and model those. Coincidentally, we already do have an object that does exactly that for us: it's the tiles of the maze itself.

00:35:06.080

They're not really doing anything else, so we can borrow those as convenient containers for this computation. We program the game as follows: we say that Pac-Man gives whatever floor tile it’s standing on a 'Pac-Man smell value' of 1000.

00:35:30.400

The number doesn't really matter; that tile then passes a smaller value off to each of its neighbors, and they pass a smaller value off to each of their neighbors, and so on. Iterate this a few times, and you get a diffusion contour that we can visualize as a hill with its peak centered on Pac-Man.
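Here is one way to sketch the diffusion in Python. The talk leaves the exact rule open ("a smaller value"), so this sketch assumes a simple averaging rule. On each iteration, every tile other than Pac-Man's becomes one quarter of the sum of its four neighbors' values, with tiles off the edge of the grid counting as zero, and Pac-Man's tile stays pinned at 1000. The function name, grid size, and iteration count are all assumptions, and the original paper may use a different formula.

```python
def diffuse(width, height, pacman, iterations=100, source=1000.0):
    """Spread Pac-Man's scent over an open grid (no walls yet).

    Assumed rule: Pac-Man's tile is pinned at `source`; every other tile
    becomes a quarter of the sum of its four neighbors' previous values.
    Neighbors that fall off the edge of the grid count as zero.
    """
    scent = [[0.0] * width for _ in range(height)]
    for _ in range(iterations):
        new = [[0.0] * width for _ in range(height)]
        for r in range(height):
            for c in range(width):
                if (r, c) == pacman:
                    new[r][c] = source
                    continue
                total = 0.0
                for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                    nr, nc = r + dr, c + dc
                    if 0 <= nr < height and 0 <= nc < width:
                        total += scent[nr][nc]
                new[r][c] = 0.25 * total
        scent = new
    return scent


if __name__ == "__main__":
    for row in diffuse(5, 5, pacman=(2, 2)):
        print(" ".join(f"{value:7.1f}" for value in row))
```

Printed as a table, the numbers form the "hill" from the talk: highest at Pac-Man and falling off in every direction.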

00:35:55.680

So we’ve got Pac-Man, we've got the floor tiles, but in order to make a maze, we also have to have some walls. What we do is give the walls a 'Pac-Man smell value' of zero. That chops the hill up a bit.

00:36:17.840

Now all our ghost has to do is climb the hill. We program the first ghost to sample each of the floor tiles next to it, pick the one with the biggest number, and go that way. It barely seems worthy of being called an AI, does it?
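This sketch adds walls (pinned at zero) and the hill-climbing ghost. Everything here is a hypothetical Python illustration: the maze, the averaging rule, and the 50 iterations are assumptions. On this U-shaped maze, a wall separates the ghost at `(3, 1)` from Pac-Man at `(3, 3)`. That is exactly where a ghost that only shrinks its X/Y distance would get stuck, yet simply stepping to the highest-scented neighbor leads this ghost up and around.

```python
# Hypothetical U-shaped maze: '#' is wall, '.' is floor; cells are (row, col).
MAZE = [
    "#####",
    "#...#",
    "#.#.#",
    "#.#.#",
    "#####",
]
STEPS = ((-1, 0), (1, 0), (0, -1), (0, 1))


def diffuse(maze, pacman, iterations=50, source=1000.0):
    """Averaging-rule diffusion (an assumption). Walls stay at zero,
    which chops up the hill. Assumes the maze is enclosed by walls."""
    rows, cols = len(maze), len(maze[0])
    scent = [[0.0] * cols for _ in range(rows)]
    for _ in range(iterations):
        new = [[0.0] * cols for _ in range(rows)]
        for r in range(rows):
            for c in range(cols):
                if maze[r][c] == "#":
                    continue  # walls stay at zero
                if (r, c) == pacman:
                    new[r][c] = source
                else:
                    new[r][c] = 0.25 * sum(scent[r + dr][c + dc] for dr, dc in STEPS)
        scent = new
    return scent


def climb(maze, scent, ghost):
    """The whole ghost 'AI': step to the open neighbor with the most scent."""
    r, c = ghost
    options = [(r + dr, c + dc) for dr, dc in STEPS if maze[r + dr][c + dc] != "#"]
    return max(options, key=lambda cell: scent[cell[0]][cell[1]])


def chase(maze, ghost, pacman, max_steps=20):
    """Climb repeatedly (Pac-Man standing still) and return the cells visited."""
    scent = diffuse(maze, pacman)
    path = []
    while ghost != pacman and len(path) < max_steps:
        ghost = climb(maze, scent, ghost)
        path.append(ghost)
    return path
```

The ghost contains no pathfinding at all; the route is encoded in the floor.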

00:36:39.840

But check this out: when we add more ghosts to the maze, we only have to make one change to get them to cooperate. Interestingly, we don't change the ghost's movement behavior at all.

00:37:06.800

Instead, we have each ghost tell the floor tile that it's (I guess) floating above that the tile's 'Pac-Man smell value' is zero. This changes the shape of that diffusion contour.

00:37:26.480

Instead of a smooth hill that always slopes down away from Pac-Man, there are now cliffs where the hill drops immediately to zero. In effect, we turn the ghosts into movable walls.

00:37:45.920

So when one ghost cuts off another one, the second one will automatically choose a different route. This lets the ghosts cooperate without needing to be aware of each other.
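This is the same kind of sketch with the one change described above: any tile with a ghost on it is also pinned at zero. The maze is a hypothetical ring with Pac-Man at the bottom, and the averaging rule is an assumption. Ghost B at `(1, 2)` would normally follow ghost A down the shorter left-hand side. Once A's tile reads zero, the scent can only reach B from the right, so B heads the other way. Neither ghost's movement code knows the other exists.

```python
# Hypothetical ring-shaped maze; Pac-Man sits at the bottom, (3, 3).
MAZE = [
    "#######",
    "#.....#",
    "#.###.#",
    "#.....#",
    "#######",
]
STEPS = ((-1, 0), (1, 0), (0, -1), (0, 1))


def diffuse(maze, pacman, ghosts=frozenset(), iterations=50, source=1000.0):
    """Averaging-rule diffusion (an assumption). Walls AND ghost-occupied
    tiles are pinned at zero, so the ghosts act as movable walls."""
    rows, cols = len(maze), len(maze[0])
    scent = [[0.0] * cols for _ in range(rows)]
    for _ in range(iterations):
        new = [[0.0] * cols for _ in range(rows)]
        for r in range(rows):
            for c in range(cols):
                if maze[r][c] == "#" or (r, c) in ghosts:
                    continue  # stays at zero
                if (r, c) == pacman:
                    new[r][c] = source
                else:
                    new[r][c] = 0.25 * sum(scent[r + dr][c + dc] for dr, dc in STEPS)
        scent = new
    return scent


def climb(maze, scent, ghost):
    """Unchanged ghost behavior: step to the open neighbor with the most scent."""
    r, c = ghost
    options = [(r + dr, c + dc) for dr, dc in STEPS if maze[r + dr][c + dc] != "#"]
    return max(options, key=lambda cell: scent[cell[0]][cell[1]])


if __name__ == "__main__":
    ghost_a, ghost_b = (2, 1), (1, 2)
    plain = diffuse(MAZE, (3, 3))
    shared = diffuse(MAZE, (3, 3), ghosts={ghost_a, ghost_b})
    print("B without ghost walls:", climb(MAZE, plain, ghost_b))
    print("A with ghost walls:", climb(MAZE, shared, ghost_a))
    print("B with ghost walls:", climb(MAZE, shared, ghost_b))
```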

00:38:06.080

Halfway through the conference session where I saw this, I was like, 'What just happened?' At first, my surprise was just about how interesting this approach was.

00:38:30.080

But then I was completely stunned when I thought about how surprising that solution was. I hope that looking at the second solution helps you understand the bias in the first solution.

00:38:49.520

In his paper, Professor Repenning wrote, 'The challenge to find this solution is a psychological, not a technical one.' Our first instinct when we're presented with this problem is to imagine ourselves as the ghost.

00:39:11.520

This is the body syntonic reasoning that’s built into Logo, and in this case, it's a trap because it leads us to solve the pursuit problem by making the pursuer smarter.

00:39:30.320

Once we've started down that road, it’s very unlikely that we’re going to consider a radically different approach, even, or perhaps especially, if it’s a much simpler one.

00:39:51.520

In other words, body syntonic reasoning biases us towards modeling objects in the foreground rather than objects in the background. Okay, does this mean that you shouldn’t use body syntonic reasoning? Of course not! It’s a tool.

00:40:16.560

It's right for some jobs; it's not right for others. I want to take a look at one more technique from part one: what's the bias in 'Please, Mr. Gear, what is your ratio?'

00:40:37.760

Aside from the gendered language, which is trivially easy to address, this technique is explicitly designed to give you an opportunity to discover new objects in your model.

00:40:58.320

But it only works after you've given at least one of those objects a name. Names have gravity; metaphors can be tar pits. It’s very likely that the new objects that you discover are going to be fairly closely related to the ones that you already have.

00:41:23.760

Another way to help see this is to think about how many steps it takes to get from 'Please, Ms. Pac-Man, what is your current position in the maze?' to 'Please, Ms. Floor Tile, how much do you smell like Pac-Man?'

00:41:48.560

For a lot of people, the answer to that question is probably infinity. It certainly was for me. My guess is that you don’t come up with this technique unless you’ve already done some work modeling diffusion in another context.

00:42:12.240

This, incidentally, is why I like to work on diverse teams. The more different backgrounds and perspectives we have access to, the more chances we have to find a novel application of some seemingly unrelated technique because someone’s worked with it before.

00:42:36.960

It can be exhilarating and very empowering to find these techniques that let us take shortcuts in our work by leveraging these specialized structures in our brains.

00:42:59.920

But those structures themselves take shortcuts, and if you're not careful, they can lead you down a primrose path. I want to go back to that quote that got me thinking about all of this in the first place about how we don’t ever teach anybody how to do that, or even that they should.

00:43:16.720

Ultimately, I think we should use techniques like this despite the biases in them. I think we should share them, and I think to paraphrase Glenn: we should teach people that this is a thing that you can and should do.

00:43:39.200

I also think we should teach people that looking critically at the answers that these techniques give you is also a thing that you can and should do. We might not always be able to come up with a radically simpler or different approach.

00:44:01.920

But the least we can do is give ourselves the opportunity to do so by asking, 'How is this biased?' I want to say thank you real quickly to everybody who helped me with this talk or the ideas in it.

00:44:22.640

And also, thank you to LivingSocial for paying for my trip and for bringing these wonderful brains. They’re going to start tearing the stage down in a few minutes.

00:44:42.240

Rather than take Q&A up here, I'm going to pack up all my stuff, and then I'll migrate over there, and you can come and bug me or pick up a brain. Whatever! Thank you.