00:00:24.800

Alright, good morning! Thank you all for coming. This talk is going to cover geospatial analysis with Rails. We're going to do some interesting things with maps and geodata, so if you like maps and geospatial concepts, this talk is a great place for you to be. However, if you hate maps, this talk may make you dislike them even more. Fair warning: we'll be moving at a very fast pace and will be quite technical, so buckle your seatbelts.

00:00:44.850

Before we begin, here's a quick bio: My name is Daniel Azuma, and I'm from the Seattle area. I'm not a GIS professional, a geographer, or a cartographer; I'm a Ruby developer. Like many of you, I have been working with Rails since the 1.0 release in 2006. During that time, I've done a lot of work in location mapping, and I'm the author of the ActiveRecord PostGIS adapter, among several other tools that some of you may have used for geodata in Ruby. Currently, I serve as Chief Architect at Perk, a Seattle-area startup focused on mobile-based instant deals: no, not daily deals, but instant deals delivered directly to your smartphone. And of course, here's the obligatory commercial announcement: we are hiring! I noticed the bulletin board outside is filled with hiring notices, so I thought we'd throw our hat in the ring.

00:01:39.060

In this hour, we will discuss our approach at Perk. Our service is very hyper-local, so we curate and analyze a lot of location data. Many of us Rails developers have already completed projects that integrate with services like Google Maps, perhaps displaying a few pushpins to mark points of interest. But when your service is highly location-centric, you often have to go beyond that. There are many additional elements you may need to model and analyze: location affects people's lives in many ways and influences how they use your application. As you dig deeper, things can get quite challenging.

00:02:03.899

There are many concepts to understand: coordinate systems, projections, vector versus raster data, statistics, and computational geometry. All of these involve a learning curve. I could spend this talk, along with several more, trying to cover all this material, but in the interest of time, we're going to try something a bit ambitious. We'll set the learning curve aside and dive directly into examining some code for a few interesting projects.

00:03:04.109

Here’s our agenda: First, we will go over the software installation process for setting up a Rails application with geospatial capabilities. Afterward, we’ll look at code for a couple of projects. The first one is a tool that helps us visualize location activity. I collected about 50,000 location data points directly from Perk's activity logs for a specific time period, allowing us to see where users were most active with our app. The second project will be a service that identifies the timezone based on the provided latitude and longitude. Assuming we don't run too far over time, we will take a few questions at the end.

00:04:01.200

Our goal is to give you a high-level overview of how to go about writing spatial applications. The intent is to provide a general understanding of how all the components fit together. You may not catch all the details as we move quickly through this, but don't worry. At the end, I will provide a link where you can find many more resources to explore further. All the slides will be available online, including links to the software and a variety of further reading resources. So without further ado, let's get started.

00:04:34.600

First, we need to set up a Rails application to use geospatial features. When you work seriously with geodata in Ruby, there are a few pieces of software you need to install first. GEOS (libgeos) and Proj (libproj) are C libraries that perform most of the low-level math involved in geospatial analysis; you can probably get them from your package manager. PostGIS is a spatial database, and it is vital if you plan to do anything interesting with geodata, especially if you have a significant amount of it. Most relational databases lack adequate geospatial capabilities out of the box, so using a database designed for spatial data, such as PostGIS, is crucial.

00:05:02.080

PostGIS is a plugin for the PostgreSQL database and is the best open-source option for spatial data. It's worth making the switch to PostgreSQL, even if you're accustomed to using MySQL, which, while it has some spatial capabilities, falls short in comparison. For our Rails application, we need a few Ruby gems. First is RGeo, which provides spatial data types for Ruby—we'll see a bit of that in this talk. There are several other gems we will examine later.

00:05:59.470

Next, we'll modify the database.yml file to configure some settings for the PostGIS adapter. You can find the specifics of this configuration in the README for the ActiveRecord adapter. We will also add a few requires in the application.rb file, and that's it! With those steps done, we can create a development database. The PostGIS ActiveRecord adapter will automatically add the necessary geospatial capabilities to your database, creating the SQL functions, data types, and other objects this functionality depends on.

00:06:32.830

With our Rails application now set to support geospatial analysis, let's dive into our projects. For our first project, we'll visualize some location point data. These could represent check-ins or other types of activity from a mobile app. For our example, we’ll load our database with approximately 50,000 location points collected from Perk's activity logs over a specific timeframe. Our aim is to visualize this data, perhaps to determine where in Seattle most of our activity was occurring during that time.

00:07:41.980

We'll begin by generating a model to hold the activity data. Many of us have previously worked on Rails projects that store locations, typically using separate latitude and longitude columns in the database. That approach works, but it's akin to storing timestamps as strings: it's doable, yet it limits the database's ability to help you search, sort, and interpret that information. Most databases offer a timestamp data type for this reason; similarly, PostGIS provides spatial data types, such as the 'point' type, which we'll use. The 'point' type allows distance calculations, spatial searches, and other operations to happen directly within the database.

00:08:51.180

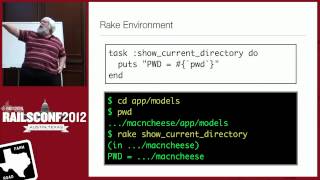

Here's our migration: we tell PostGIS which coordinate system to use to represent the data in the database. A coordinate system is typically identified by a standard ID number known as the SRID (Spatial Reference Identifier). For our project, we will use SRID 3785. This coordinate system is native to Google Maps and represents a Mercator projection; it's important to note that this is not the same as simple latitude and longitude. In other words, the database will store coordinates in this Mercator projection rather than raw latitude and longitude values, so whenever we query or insert data, we'll need to convert values accordingly.
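
For reference, the conversion this migration implies can be sketched in a few lines of Ruby. This is a minimal sketch assuming the spherical ("Google") Mercator formulas behind SRID 3785 (the same projection later standardized as EPSG:3857), with the WGS84 equatorial radius of 6,378,137 m used as the sphere radius. In the app itself the RGeo factory performs this transformation, so the helper names here are illustrative only.

```ruby
# Spherical ("Google") Mercator, the projection behind SRID 3785 / EPSG:3857.
# Assumption: sphere radius = WGS84 equatorial radius, in meters.
EARTH_RADIUS = 6_378_137.0

# Longitude/latitude in degrees -> projected x/y in meters.
def to_mercator(lon, lat)
  x = EARTH_RADIUS * lon * Math::PI / 180.0
  y = EARTH_RADIUS * Math.log(Math.tan(Math::PI / 4 + lat * Math::PI / 360.0))
  [x, y]
end

# Projected x/y in meters -> longitude/latitude in degrees.
def from_mercator(x, y)
  lon = x / EARTH_RADIUS * 180.0 / Math::PI
  lat = (2 * Math.atan(Math.exp(y / EARTH_RADIUS)) - Math::PI / 2) * 180.0 / Math::PI
  [lon, lat]
end

p to_mercator(-122.33, 47.61) # roughly downtown Seattle
```

Note the argument order: x comes from longitude and y from latitude, which is an easy thing to get backwards.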

00:10:15.100

Next, we'll add a database index to our point column. Indexes speed up searches; this particular index is marked as spatial (in PostgreSQL, a GiST index), which lets it handle two-dimensional geometric objects rather than simple scalar values. That's exactly what we need, since we'll be searching for points that fall within a region. Now, let's examine the model: the ActiveRecord adapter for PostGIS requires a little ceremony to specify how the spatial data should be represented in Ruby and which coordinate system you are using. You handle that by creating an RGeo factory and associating it with a specific column in your database.

00:11:23.020

In this case, we’ll use a smart factory, which understands both latitude and longitude as well as the Google Maps projection, and knows how to transform data between the two systems. You can read more about this, along with other issues surrounding coordinate systems and factories, in the resources I’ll provide at the end of the talk. Now we can rake db:migrate, and at this point, both the database and the model will be set up. Next, let’s acquire the data we want to analyze. I exported a significant number of latitude and longitude points from Perk's logs into a text file. This file contains approximately 50,000 records.

00:12:02.540

I wrote a small script to read that file and create corresponding records in our database. It's essential to note that while our input file contains standard latitude and longitude formats, our database is set up to use the Google Maps Mercator projection. Therefore, we must transform that data into the proper coordinate system before loading it into the database. Next, let’s take a look at how to query the data. For our application, we want to visualize our activity data on a map, specifically Google Maps. In general, we will query the database to select all activity residing within a defined rectangular window.
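
The loading script itself isn't shown, but a rough sketch of its parsing step might look like the following. It assumes a hypothetical input format of one `latitude,longitude` pair per line, skips malformed lines, and drops latitudes beyond about ±85.05°, where spherical Mercator is normally cut off. In the real script each projected pair would then be handed to the model (for example through the column's RGeo factory); that step is omitted so the sketch runs without Rails.

```ruby
EARTH_RADIUS = 6_378_137.0
MAX_MERCATOR_LAT = 85.0511287798 # spherical Mercator is usually cut off here

# Longitude/latitude in degrees -> spherical Mercator x/y in meters.
def to_mercator(lon, lat)
  x = EARTH_RADIUS * lon * Math::PI / 180.0
  y = EARTH_RADIUS * Math.log(Math.tan(Math::PI / 4 + lat * Math::PI / 360.0))
  [x, y]
end

# Parse "latitude,longitude" lines (hypothetical format) from anything that
# responds to each_line (a File or a String), returning projected [x, y] pairs.
def load_points(source)
  source.each_line.filter_map do |line|
    lat_s, lon_s = line.strip.split(",", 2)
    next if lon_s.nil? # blank or malformed line
    lat = Float(lat_s.strip, exception: false)
    lon = Float(lon_s.strip, exception: false)
    next if lat.nil? || lon.nil? || lat.abs > MAX_MERCATOR_LAT
    to_mercator(lon, lat)
  end
end

p load_points("47.61,-122.33\n47.66,-122.31\n")
```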

00:13:16.950

The plan is to write a scope in our ActiveRecord model that selects all records within that rectangular window. Two crucial points: first, remember that the data in the database is stored using the Google Maps Mercator projection, so our queries must use that same coordinate system. If the scope takes latitude and longitude as arguments, we need to convert them into the Mercator projection before executing the query. Second, the query itself is quite straightforward: we want to search within a rectangle, so we construct a bounding box and look for points that overlap it.

00:13:41.220

PostGIS provides a convenient operator for this, the double ampersand (&&), which tests whether the bounding boxes of two geometries overlap. With this operator, we can implement our scope directly. This illustrates one of the primary reasons we prefer the Google Maps coordinate system in our database over simple latitude and longitude: querying a rectangle is much simpler in this projection. If we considered the same region on a globe in latitude and longitude, it would be a tricky trapezoidal shape with curved boundaries. If your database used those coordinates and you wanted to run such a query, you would need to compute that shape, approximate it with a polygon, and have the database query against that polygon, which complicates the process.
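
A sketch of the SQL such a scope might generate is below. The column name `location` is hypothetical, and building the box with `ST_MakeEnvelope(xmin, ymin, xmax, ymax, srid)` (available since PostGIS 2.0) is an assumption about how to construct the rectangle; the talk's own code may build it differently. Windows that cross the 180° meridian are not handled.

```ruby
EARTH_RADIUS = 6_378_137.0
SRID = 3785

# Longitude/latitude in degrees -> spherical Mercator x/y in meters.
def to_mercator(lon, lat)
  x = EARTH_RADIUS * lon * Math::PI / 180.0
  y = EARTH_RADIUS * Math.log(Math.tan(Math::PI / 4 + lat * Math::PI / 360.0))
  [x, y]
end

# Build a WHERE fragment selecting rows whose (hypothetical) `location` column
# overlaps the map window given by its southwest and northeast corners.
def window_condition(sw_lat, sw_lon, ne_lat, ne_lon, column: "location")
  # Project the corners first: the stored data is in Mercator, not lat/lon.
  xmin, ymin = to_mercator(sw_lon, sw_lat)
  xmax, ymax = to_mercator(ne_lon, ne_lat)
  # && is PostGIS's bounding-box overlap operator, which a GiST index can serve.
  format("%s && ST_MakeEnvelope(%.3f, %.3f, %.3f, %.3f, %d)",
         column, xmin, ymin, xmax, ymax, SRID)
end

puts window_condition(47.5, -122.45, 47.75, -122.2)
```

Inside a model, the resulting string could be passed to `where` from a scope. Only formatted floats are interpolated, so the coordinates can't inject SQL, but the column name must come from trusted code.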

00:14:56.600

To summarize: since our queries are rectangular in Google Maps, it makes more sense to keep our database in the same coordinate system. That way, our queries stay rectangular and our code stays simple. The bottom line is that when choosing a coordinate system for your database, choose carefully; latitude and longitude might not be the right choice, depending on how you'll query and use the data. If you prefer not to write SQL strings directly, the Squeel gem by Ernie Miller is very helpful for constructing spatial queries: it lets you write SQL in Ruby using a DSL rather than interpolating strings.

00:16:33.990

For this query, it may not provide significant benefits yet, but it truly shines when we delve into more complex spatial queries. Now we'll wrap this up with a simple controller that renders out the data we've pulled in this query as pushpins on the Google Map. At this point, we have an awesome visualization that makes the activity within our app quite evident. Okay, maybe not—we have 50,000 data points, and rendering them with Google’s pushpins might not have been the most effective approach. Something like a heat map could provide a more meaningful representation.

00:17:49.090

A heat map displays data density based on colors, allowing us to visualize hotspots of activity around downtown Seattle and near the University of Washington. There are various ways to create heat maps. If you want real-time heat maps, you can consider JavaScript solutions. This particular solution I'm demonstrating uses Thermo.js, which I developed and uploaded to GitHub last week. Other established libraries include Heatmap.js and Heatcanvas.js. I'll provide the links to these resources later. Heat maps also necessitate careful consideration of your coordinate system. Depending on which library you use to create heat maps—especially if you decide to build your own—you may need to work in image pixel coordinates. A heat map is essentially an image overlay over a map, requiring you to convert between latitude and longitude and image coordinates correctly.

00:19:00.950

When doing this, ensure you use the right mathematics for a Mercator projection, or better yet, allow your tools to handle this for you. Google Maps provides an API for that purpose, and RGeo does the same on the Ruby side. Here’s a quick view of the JavaScript to construct a heat map using Thermo.js. It's quite simple: you set up your Google Map, create an overlay object, and pass a set of data points to it, which renders it using a heat map image. However, if you have numerous data points, JavaScript might struggle to render everything in real time. This dataset consists of 50,000 data points and took about five or six seconds on a fairly fast laptop to render on the client side.
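
The Mercator math for placing points on an image overlay can be sketched as below. It follows the tile-based "world coordinate" scheme that Google documents for its Maps JavaScript API (256-pixel tiles, scaled by 2^zoom); the function name is illustrative, and in practice you'd rely on the Maps API or RGeo rather than hand-rolling it.

```ruby
TILE_SIZE = 256

# Latitude/longitude in degrees -> global pixel coordinates at a zoom level,
# using Web Mercator (origin at the top-left of the world map).
def latlng_to_pixel(lat, lon, zoom)
  siny = Math.sin(lat * Math::PI / 180.0)
  siny = siny.clamp(-0.9999, 0.9999) # avoid infinities near the poles
  world_x = TILE_SIZE * (0.5 + lon / 360.0)
  world_y = TILE_SIZE * (0.5 - Math.log((1 + siny) / (1 - siny)) / (4 * Math::PI))
  scale = 2**zoom
  [world_x * scale, world_y * scale]
end

p latlng_to_pixel(47.61, -122.33, 10)
```

Subtracting the pixel coordinates of the overlay's northwest corner gives a point's position within the overlay image.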

00:20:43.640

When the number of data points grows into the millions, client-side rendering becomes impractical. A more efficient approach is to pre-render heat map images, or similar visualizations, on the back end: you create raster tiles, store those image tiles in a database, and serve them as images. This is essentially how Google Maps works; it pre-renders its map imagery into tiles and serves them efficiently. However, this pre-rendering process is more involved, so it's a topic for a future talk. For now, let's recap what we achieved in this first project.
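
As a rough illustration of the back-end approach, the first step of pre-rendering is bucketing points into tiles. The sketch below assumes the same 256-pixel Web Mercator tile scheme, uses a hypothetical 8-pixel bin size, and stops at per-tile count grids; turning each grid into a colored PNG would need an image library and is left out.

```ruby
TILE_SIZE = 256

# Latitude/longitude in degrees -> global Web Mercator pixel coordinates.
def latlng_to_pixel(lat, lon, zoom)
  siny = Math.sin(lat * Math::PI / 180.0).clamp(-0.9999, 0.9999)
  world_x = TILE_SIZE * (0.5 + lon / 360.0)
  world_y = TILE_SIZE * (0.5 - Math.log((1 + siny) / (1 - siny)) / (4 * Math::PI))
  [world_x * 2**zoom, world_y * 2**zoom]
end

# Count points per (zoom, tile_x, tile_y), as a grid of bin x bin pixel cells.
def tile_density(points, zoom, bin: 8)
  cells = TILE_SIZE / bin
  max_tile = 2**zoom - 1
  tiles = Hash.new { |h, key| h[key] = Array.new(cells) { Array.new(cells, 0) } }
  points.each do |lat, lon|
    px, py = latlng_to_pixel(lat, lon, zoom)
    tx = (px / TILE_SIZE).floor.clamp(0, max_tile)
    ty = (py / TILE_SIZE).floor.clamp(0, max_tile)
    cx = ((px - tx * TILE_SIZE) / bin).floor.clamp(0, cells - 1)
    cy = ((py - ty * TILE_SIZE) / bin).floor.clamp(0, cells - 1)
    tiles[[zoom, tx, ty]][cy][cx] += 1
  end
  tiles
end

p tile_density([[47.61, -122.33], [47.62, -122.35]], 10).keys
```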

00:21:25.840

In this project, we developed a model to hold location data, using Google Maps' native coordinate system for storage, and discussed the rationale for that design choice. We loaded a significant amount of data from Perk's activity logs and read it back with a rectangular query, implemented both in SQL and with the Squeel gem. Finally, we visualized that data with a heat map. Now, for our second project, we will look up time zones based on location. Conceptually, this task is quite straightforward: time zones correspond to physical regions of the world, each associated with a specific time policy.

00:22:20.580

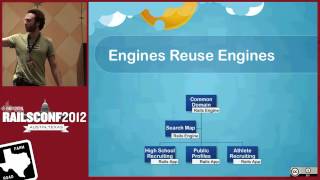

There is an open database of time zones known as the Olson tz database (sometimes called the zoneinfo database), which is widely used as a catalog of the world's time zones. Rails integrates with it directly through the TZInfo gem; in fact, you probably already have a copy installed on your computer. Polygons representing time zone boundaries are also available for download online. By loading these polygons into a PostGIS database, we can ask which polygon contains a given latitude and longitude, which tells us the corresponding time zone.

00:23:55.080

Now, let’s delve into the specifics: First, we'll generate our model. In our previous example, we used the point type since we were working with point data; this time, we’ll use the polygon type. Again, these are data types provided by PostGIS. Note that it can take multiple polygons to fully represent a single time zone's area; for instance, consider a coastline with multiple offshore islands. The Pacific time zone in the U.S. features several islands off the coast of Washington and Southern California; each island could be represented as a separate, disjoint polygon within the polygon dataset but would all belong to the Pacific time zone.

00:25:24.480

We will store time zones and time zone polygons in separate tables, with a one-to-many relationship between them. In the migration, as before, we will use the Mercator projection for the spatial data, since this will help with the geometric analysis later on. Again, we'll create indexes, including a spatial index on the polygon column. Once that's done, we run rake db:migrate as before. We'll also set up an RGeo factory for the ActiveRecord class, which requires some configuration before we can access the data.

00:26:12.850

Now we need to obtain the data. You can download time zone polygons from a website that provides them in shapefile format. The shapefile format was created by ESRI, a well-known player in the GIS field, and shapefiles have become the de facto standard for nearly all geodata available for download online, including datasets from government sources and other organizations. However, shapefiles are a binary format, so Ruby needs a parser to read them. A gem called RGeo::Shapefile does the heavy lifting for you.

00:27:16.280

This gem allows you to open the shapefile and gives you a stream of records, where each record comprises a geometric object (such as a polygon) and a set of associated attributes. For our application, we simply want to gather this information and populate our time zone database. In the particular shapefile for the time zone information, one of the attributes in those records will be the time zone name. We will use this time zone name to find or create a time zone object in our Active Record setup, then proceed to create the corresponding polygons.

00:28:33.860

It’s essential to acknowledge that some shapefiles may utilize various coordinate systems; while this particular one employs latitude and longitude, many others may use projections such as state plane or UTM. Regardless of the input data's system, we need to ensure that we convert everything to a Mercator projection before storing it in our database. Moreover, since a single time zone might consist of numerous polygons, we need to iterate through those and add each polygon individually to the database. Once again, this process will be fairly straightforward: we will read the file and add the polygons to our time zone database.
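
The loading loop might be organized roughly as follows. To keep the sketch runnable without the RGeo::Shapefile gem, a `Record` struct stands in for the gem's records, with geometry modeled as an array of polygons (a multipolygon) whose vertices are `[x, y]` pairs; the `TZID` attribute name is an assumption about this particular shapefile, and the projection to Mercator is omitted.

```ruby
# Stand-in for a shapefile record (hypothetical): geometry is an array of
# polygons, each an array of [x, y] vertices; attributes is a Hash.
Record = Struct.new(:geometry, :attributes)

# Group polygons by time zone name, flattening multipolygons so that each
# polygon can become its own row (one time zone has many polygons).
def polygons_by_zone(records, name_attr: "TZID")
  records.each_with_object(Hash.new { |h, k| h[k] = [] }) do |rec, zones|
    name = rec.attributes[name_attr]
    next if name.nil? || name.empty? # skip records with no zone name
    zones[name].concat(rec.geometry)
  end
end

square = [[0, 0], [1, 0], [1, 1], [0, 1]]
p polygons_by_zone([Record.new([square, square], { "TZID" => "America/Los_Angeles" })]).keys
```

In the Rails app, each key would be used to find or create the time zone row, and each polygon in its list would be projected and inserted as a separate polygon row.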

00:29:38.180

Next, we will outline how we can execute our queries. Remember that our goal is to identify the time zone corresponding to a given location. Therefore, for a given latitude and longitude, we will search for the polygon that contains that point, allowing us to backtrack to the appropriate time zone. We will write a query method on our model that checks which polygons contain the point. The core of our query will utilize the ST_Contains SQL function, built into the spatial capabilities of our database.
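
To show what the containment test decides, here is a pure-Ruby even-odd (ray casting) point-in-polygon check. This is a swapped-in illustration, not how PostGIS implements ST_Contains: it treats points exactly on an edge inconsistently, whereas ST_Contains returns false for a point on the boundary. In the real app the database does this work, with the spatial index narrowing the candidates first.

```ruby
# Even-odd rule: cast a ray to the right of (x, y) and count edge crossings.
def contains?(polygon, x, y)
  inside = false
  j = polygon.size - 1
  polygon.each_with_index do |(xi, yi), i|
    xj, yj = polygon[j]
    # Edge i-j straddles the ray's y, and the crossing lies to the right of x.
    if (yi > y) != (yj > y) && x < (xj - xi) * (y - yi) / (yj - yi).to_f + xi
      inside = !inside
    end
    j = i
  end
  inside
end

# zones: { name => [polygon, ...] }; returns the first zone containing the point.
def zone_for(zones, x, y)
  zones.each { |name, polys| return name if polys.any? { |poly| contains?(poly, x, y) } }
  nil
end
```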

00:30:56.850

Many spatial queries you write will involve function calls, and this is where the Squeel gem excels. It lets you write these SQL functions as ordinary Ruby method calls, and Squeel converts them into SQL for you. ActiveRecord on its own has little support for SQL function calls, but with Squeel it becomes easy to build complex queries in ActiveRecord without lengthy string interpolation. This capability is impressive; it would be fantastic if something like it became standard in Rails.

00:32:06.670

At this point, let's outline our controller, providing a web API interface to interact with our time zone service. Now that we have this full setup, it should be straightforward for us to retrieve our time zone based on location. However, let’s add an extra layer of complexity. Seattle is surrounded by water—specifically, Puget Sound to the west and Lake Washington to the east. When examining the polygons we've downloaded for our database, we find they cover land masses but exclude bodies of water. This means if I move out into the middle of Puget Sound, a query at that location won't align with any polygon. So, what happens then? Am I even in a time zone?

00:33:26.270

Under international convention, a ship in a country's territorial waters follows that nation's time zone. Territorial waters are generally defined to extend 12 nautical miles out from the coast. Consequently, if I'm not inside a time zone polygon but am within 12 nautical miles of one, I should still use that time zone. So if I'm on a ferry in the middle of Puget Sound, I'm still on Pacific Time because of my proximity to Seattle's shore. We therefore need to revise our query to handle this case: we first look for a polygon that directly contains the point, and if that returns nil, we run a second query for any nearby polygons within 12 nautical miles.
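
The fallback logic itself is small. In this sketch the two queries are passed in as hypothetical callables so the control flow can be shown without a database: `containing` returns the zone that contains the point or nil, and `nearest_within` returns the nearest zone within a given ground distance or nil. The 12 nautical mile limit uses the international nautical mile of 1,852 m.

```ruby
NAUTICAL_MILE_M = 1852.0
TERRITORIAL_LIMIT_M = 12 * NAUTICAL_MILE_M # 22,224 m

# Try the exact containment query first; only if it finds nothing, fall back
# to the nearest zone within territorial waters.
def lookup_zone(lat, lon, containing:, nearest_within:)
  containing.call(lat, lon) || nearest_within.call(lat, lon, TERRITORIAL_LIMIT_M)
end
```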

00:34:38.850

This second query is more intricate, so let's look at the specifics. We write a where clause that calculates the distance between the query point and each polygon in the database and checks that the distance falls within a given limit; here, we use the ST_Distance function. Because distances depend on the coordinate system, we must also convert the distance, 12 nautical miles in this case, into the equivalent value in Mercator units. That conversion depends on latitude, since the Mercator projection stretches distances by a factor of roughly 1/cos(latitude); the relevant formulas are easy to find online.
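
The latitude-dependent conversion can be sketched as follows, assuming spherical Mercator, where a short ground distance near latitude φ spans about distance / cos(φ) projected units. This is a local approximation that is reasonable over 12 nautical miles but not over long distances; the method name is illustrative.

```ruby
NAUTICAL_MILE_M = 1852.0

# Ground distance in meters near latitude `lat` (degrees) -> approximate
# spherical Mercator units (Mercator scale factor is 1 / cos(latitude)).
def ground_to_mercator(meters, lat)
  meters / Math.cos(lat * Math::PI / 180.0)
end

puts ground_to_mercator(12 * NAUTICAL_MILE_M, 47.6) # near Seattle
```

Near Seattle (about 47.6° N), 12 nautical miles comes out to roughly 33,000 Mercator units, about half again the 22,224 m ground distance.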

00:35:51.720

But what if there are multiple polygons within that 12-mile radius? In Puget Sound, for instance, many islands surround the query point. It makes sense to retrieve all those polygons and take the closest one, so we order the results by distance and take just the first. Fortunately, the Squeel gem handles ordering conditions just as easily as where clauses. On a side note, the last time I checked, ActiveRecord did not support placeholder substitution in the order clause, so without Squeel you would have to interpolate values into the SQL string yourself.
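
What the ordered query computes can be mirrored in plain Ruby: measure each candidate's distance from the point, discard those beyond the limit, and keep the closest, much like `ORDER BY ST_Distance(...) LIMIT 1`. This is a sketch with illustrative names that measures distance to polygon edges only, which is adequate here because the fallback runs only when the point lies outside every polygon.

```ruby
# Distance from point (px, py) to the segment from (ax, ay) to (bx, by).
def segment_distance(px, py, ax, ay, bx, by)
  dx = bx - ax
  dy = by - ay
  len2 = dx * dx + dy * dy
  # Parameter of the closest point on the segment, clamped to its endpoints.
  t = len2.zero? ? 0.0 : (((px - ax) * dx + (py - ay) * dy) / len2.to_f).clamp(0.0, 1.0)
  Math.hypot(px - (ax + t * dx), py - (ay + t * dy))
end

# Distance from a point to a polygon's boundary (point assumed outside).
def polygon_distance(poly, x, y)
  poly.each_index.map { |i|
    ax, ay = poly[i]
    bx, by = poly[(i + 1) % poly.size]
    segment_distance(x, y, ax, ay, bx, by)
  }.min
end

# candidates: [[zone_name, polygon], ...]; closest zone within max_dist, or nil.
def nearest_zone(candidates, x, y, max_dist)
  best = candidates
    .map { |name, poly| [polygon_distance(poly, x, y), name] }
    .select { |dist, _name| dist <= max_dist }
    .min_by(&:first)
  best&.last
end
```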

00:37:04.640

After setting up this query, we still need to look at performance, and the explain functionality added in Rails 3.2 helps here. Analyzing the query plan, we discover that PostGIS is not optimizing our ST_Distance query effectively: it's doing a full table scan and calculating the distance to every polygon in the database. So we need to approach this query differently.

00:38:06.220

Instead of filtering on ST_Distance, we can create a circle with a 12 nautical mile radius around the queried location, and filter for polygons that intersect this circle. PostGIS optimizes intersection queries significantly better than distance queries. We can rewrite our code to leverage this approach: using the ST_Buffer SQL function allows us to create that circle by establishing a buffer around the point, testing for intersection with the polygons within the database. The query optimizer will be much happier, and we're more likely to see improved performance results.
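
The sketch below shows why the buffer approach helps. It approximates the circle the way ST_Buffer does for a point, with 4 × quad_segs straight segments (quad_segs defaults to 8 in PostGIS), and shows the cheap bounding-box overlap test that a spatial index can answer before any exact geometry is examined. The function names are illustrative, not PostGIS APIs.

```ruby
# Approximate a circle as a polygon with 4 * quad_segs vertices.
def buffer_circle(cx, cy, radius, quad_segs: 8)
  n = 4 * quad_segs
  Array.new(n) do |i|
    angle = 2 * Math::PI * i / n
    [cx + radius * Math.cos(angle), cy + radius * Math.sin(angle)]
  end
end

# Bounding box [xmin, ymin, xmax, ymax]: what the index compares first.
def bbox(points)
  xs = points.map(&:first)
  ys = points.map(&:last)
  [xs.min, ys.min, xs.max, ys.max]
end

# Two boxes overlap unless one lies entirely to one side of the other.
def boxes_overlap?(a, b)
  a[0] <= b[2] && b[0] <= a[2] && a[1] <= b[3] && b[1] <= a[3]
end
```

Only polygons whose boxes pass this test need the exact (and expensive) intersection check, which is why intersection queries against a buffer can use the index while a bare distance filter cannot.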

00:39:12.190

We have just a few more minutes, so let's look at one more optimization technique. Spatial data can be quite large. Big data usually means many database rows, but with spatial data it's also common for individual rows to be large. Some polygons have boundaries that follow coastlines or rivers, giving them thousands or tens of thousands of sides, depending on the complexity of the geographic feature.

00:39:50.000

As a result, once the index has found a candidate polygon and the database computes its intersection with our buffer circle, that single test may take considerable processing time, and the index can't help with it. So what can we do? A common optimization is to split a large polygon into several smaller ones, increasing the number of rows but decreasing the size of each row. Instead of storing one massive polygon with, say, 50,000 sides, we break it into smaller pieces until each fits within a manageable size, which lets the database index do more of the work of speeding up the search.

00:40:47.020

To achieve this, we can use a 4:1 subdivision algorithm. This method divides a large polygon into four quadrants, each with significantly fewer sides. If those pieces are still too large, the subdivision is repeated recursively until every resulting polygon is within the desired size, leaving more, but cheaper, rows in the database. This keeps query performance consistently high regardless of how much the original polygon sizes vary.
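
One way to sketch the 4:1 subdivision in plain Ruby follows. It assumes polygons are arrays of `[x, y]` vertices, splits each oversized polygon at the center of its bounding box, and clips it to each quadrant with the Sutherland-Hodgman algorithm, a stand-in for the exact intersection RGeo would compute. For concave polygons, Sutherland-Hodgman can return pieces joined by zero-width slivers rather than separate polygons; areas stay correct, but the result is less tidy than a true intersection. The vertex limit of 24 and depth limit of 8 are arbitrary example values.

```ruby
# Sutherland-Hodgman: clip a polygon to an axis-aligned box, one side at a time.
def clip_to_box(poly, xmin, ymin, xmax, ymax)
  boundaries = [
    [->(p) { p[0] >= xmin }, ->(a, b) { cross_x(a, b, xmin) }],
    [->(p) { p[0] <= xmax }, ->(a, b) { cross_x(a, b, xmax) }],
    [->(p) { p[1] >= ymin }, ->(a, b) { cross_y(a, b, ymin) }],
    [->(p) { p[1] <= ymax }, ->(a, b) { cross_y(a, b, ymax) }]
  ]
  boundaries.reduce(poly) do |input, (inside, intersect)|
    next [] if input.empty?
    output = []
    prev = input.last
    input.each do |cur|
      if inside.(cur)
        output << intersect.(prev, cur) unless inside.(prev) # entering
        output << cur
      elsif inside.(prev)
        output << intersect.(prev, cur) # leaving
      end
      prev = cur
    end
    output
  end
end

# Where segment a-b crosses the vertical line x, or the horizontal line y.
def cross_x(a, b, x)
  t = (x - a[0]) / (b[0] - a[0]).to_f
  [x, a[1] + t * (b[1] - a[1])]
end

def cross_y(a, b, y)
  t = (y - a[1]) / (b[1] - a[1]).to_f
  [a[0] + t * (b[0] - a[0]), y]
end

# Recursively split at the bounding-box center until pieces are small enough.
def subdivide(poly, max_vertices: 24, max_depth: 8)
  return [poly] if poly.size <= max_vertices || max_depth.zero?
  xs = poly.map(&:first)
  ys = poly.map(&:last)
  mx = (xs.min + xs.max) / 2.0
  my = (ys.min + ys.max) / 2.0
  quadrants = [
    [xs.min, ys.min, mx, my], [mx, ys.min, xs.max, my],
    [xs.min, my, mx, ys.max], [mx, my, xs.max, ys.max]
  ]
  quadrants
    .map { |box| clip_to_box(poly, *box) }
    .reject { |piece| piece.size < 3 }
    .flat_map { |piece| subdivide(piece, max_vertices: max_vertices, max_depth: max_depth - 1) }
end

# Shoelace formula, used here to check that subdivision preserves area.
def area(poly)
  twice = poly.each_index.sum do |i|
    x1, y1 = poly[i]
    x2, y2 = poly[(i + 1) % poly.size]
    x1 * y2 - x2 * y1
  end
  twice.abs / 2.0
end
```

A 64-sided circle, for example, splits once into four pieces of roughly 20 vertices each, with the total area unchanged.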

00:41:20.000

I can assure you that the added complexity is not insurmountable. When we parse the shapefiles to populate the time zone database, instead of directly inserting large polygons, we will apply the 4:1 subdivision algorithm, producing smaller polygons to store in the database. The entirety of this segmentation algorithm can be coded succinctly in Ruby, relying heavily on the features provided by the RGeo gem. It provides a number of geometric utilities, allowing us to carry out necessary operations with ease.

00:41:50.000

So to summarize what we accomplished during this project: We created separate models for time zones and polygons that established a one-to-many relationship between them. We loaded data from a shapefile that we downloaded online. We wrote queries to find polygons. The first focused on retrieving the polygon that contained a specific geographical point, and the second queried for any polygons intersecting a circle around that point, extending to 12 nautical miles. Lastly, we further optimized our queries by subdividing polygons into smaller manageable sizes, allowing the database index to help accelerate our search process.

00:42:18.000

We went through this quickly, but I hope you gained a broad understanding of these techniques and how they're implemented in practice. If you wish to delve deeper into the coding and implementation techniques covered, all slides, along with extensive reading material, will be available online. You can write down the URL, which will be active later today. We postponed posting it live to avoid overwhelming our Wi-Fi with a hundred people trying to download large files simultaneously.

00:43:34.000

By the end of today, the page will be uploaded, including links to all the software used and a curated set of articles to assist you in your journey with geospatial projects. If you're interested in discussing geospatial tools and technologies further or if you'd like to network with others in the field, we're organizing a BOF (Birds of a Feather) session for the second annual GeoRails summit right here at RailsConf. Check the boards outside for more information on the schedule.

00:44:02.000

Thank you all for attending; I hope to see many of you at the summit. If you have any questions, feel free to ask!