Ruby

Heroku 2014: A Year in Review

Summarized using AI

Heroku 2014: A Year in Review

by Terence Lee and Richard SchneemanThe video titled Heroku 2014: A Year in Review presented by Richard Schneeman and Terence Lee during RailsConf 2014 focuses on the improvements and developments made by Heroku throughout the year 2014, with a particular emphasis on application performance and various Heroku features.

Key Points Discussed:

Deployment Speed Improvements:

- Heroku identified slower deployment times and conducted extensive profiling to accelerate the processes. After six months, deployment speeds were improved by 40% through several methods:

- Running code in parallel which reduces the time taken to install dependencies.

- Implementing strong caching strategies allowing asset reuse between deploys, significantly speeding up deployment times in Rails 4 compared to Rails 3.

- Optimizations in compressing application packages with the pixie command, reducing the wait time for app builds.

Application Speed:

- Emphasizes the importance of consistency in application response times. They introduced px dynos with more resources to help manage and process requests more efficiently.

- Discussed the advantages of using Puma web server over Unicorn for better resource management, especially in handling slow clients and processing requests efficiently since Puma allows threading.

Ruby and Community Engagement:

- The presentation highlighted the Ruby community’s evolution by emphasizing the end of life for older Ruby versions, urging developers to upgrade for security and performance reasons.

- Heroku's commitment to supporting the latest Ruby versions immediately upon release.

- Recognized the contributions of key Ruby core team members (e.g., Nobu and Koichi) who are committed to enhancing Ruby's performance and fixing bugs.

Heroku Features:

- Introduced features like HTTP Request IDs for better log tracking, and runtime metrics for performance monitoring, which assist in diagnosing slow requests and the overall performance of deployed applications.

- Noted the evolution of the asset pipeline in Rails, emphasizing improvements made that help in minimizing support tickets related to deployment issues.

Conclusions and Takeaways:

- As Heroku continues to enhance its platform, the improvements made in deployment speed, performance consistency, and the introduction of new Ruby features underscore a strong commitment to helping developers build and deploy Ruby applications more efficiently.

- Continuous community engagement and support for the latest technologies reflect Heroku's dedication to providing a robust development environment for Ruby on Rails applications.

- Developers are encouraged to stay updated with platform changes via Heroku's changelog and contribute to the Ruby community to aid in its advancement.

00:00:18.000

All right.

00:00:23.119

Okay, hello everyone! Thank you, thank you! Welcome to RailsConf.

00:00:28.560

Let me be the first to welcome you to our talk today about Heroku: 2014 A Year in Review.

00:00:35.040

This presentation will be a play in six acts featuring Terence Lee and Richard Schneeman.

00:00:41.520

As many of you might know, Heroku measures their years by RailsConf.

00:00:47.840

So this review will cover our journey from the Portland RailsConf to the Chicago RailsConf this past year.

00:01:00.879

We are members of the Ruby task force, and this year has been significant for us.

00:01:07.119

We will discuss app performance, some Heroku features, and community features.

00:01:12.320

First up to the stage, I would like to introduce the one and only Mr. Terence Lee.

00:01:20.320

You might recognize him from his other roles. He hails from Austin, Texas, known for having the best tacos in the world.

00:01:27.600

Now, those are fighting words, my friend!

00:01:34.320

He’s also known as the Chief Taco Officer—or CTO, depending on the context.

00:01:41.920

Something interesting about Terence is that he was recently inducted into Ruby core. So, congratulations to Terence!

00:01:48.479

Without further ado, let's move on to Act One: Deploy Speed.

00:01:54.079

Thank you, Richard. At the beginning of the Rails standard year, we focused on improving deployment speed.

00:02:01.759

We received a lot of feedback indicating that deployment was not as fast as it could be, and we were determined to enhance it.

00:02:08.640

Our first step was measurement and profiling to identify slow areas and find ways to improve them.

00:02:13.760

We aimed to gauge the before and after states to set a clear endpoint before moving on to other enhancements.

00:02:20.640

As you know, you can never be completely done with performance improvements.

00:02:26.239

After about six months of work, we succeeded in cutting down deployment speeds across the platform for Ruby by 40%.

00:02:34.400

We approached this by focusing on three key strategies for improvement.

00:02:40.080

Firstly, we implemented parallel code execution, allowing multiple processes to run at the same time.

00:02:47.920

With this strategy, if you catch something once, you won’t have to do it again.

00:02:53.440

Additionally, we worked closely with the Bundler team on Bundler 1.5 to add a parallel installation feature.

00:02:58.480

If you haven't upgraded yet, I highly recommend moving to Bundler 1.5 or later.

00:03:05.920

Bundler 1.5 introduced the '-j' option, which allows users to specify the number of jobs to run in parallel.

00:03:12.800

This option utilizes system resources effectively during bundle installation.

00:03:19.200

Dependencies are now downloaded in parallel, improving efficiency significantly.

00:03:26.000

This enhancement is especially beneficial when dealing with native extensions like Nokogiri.

00:03:31.200

In previous versions, such installations could stall the process.

00:03:36.319

Furthermore, Bundler 1.5 added a function that automatically retries failed commands.

00:03:41.760

Previously, if a command failed due to a transient network issue, users had to restart the entire process.

00:03:49.280

By default, Bundler now retries clones and gem installs for up to three attempts.

00:03:56.240

Next, I want to talk about the Pixie command.

00:04:01.360

Has anyone here heard of Pixie?

00:04:06.080

Pixie is a parallel gzip command that our build and packaging team implemented.

00:04:11.200

When you push an app to Heroku, it compiles the app into what we call "slugs."

00:04:18.080

Originally, we used a method that involved just tar files, but we found compression was a bottleneck.

00:04:23.520

By using Pixie, we significantly improved the slug compression time, speeding up the overall build process.

00:04:30.080

The trade-off was slightly larger slug sizes, but the performance gains made it worth the change.

00:04:36.639

Next, we turned our attention to caching.

00:04:43.680

In Rails 4, we were able to cache assets between deploys, a feature that was not reliable in Rails 3.

00:04:56.080

In Rails 3, caches would often become corrupted, necessitating their removal between deploys.

00:05:02.800

This inconsistency made it difficult to rely on cached assets.

00:05:08.200

Fortunately, Rails 4 fixed many of these issues, allowing reliable caching that improved deployment speed.

00:05:14.320

On average, deploying a Rails 3 app took about 32 seconds, while Rails 4 reduced this to approximately 14 seconds.

00:05:20.160

This significant improvement came from both caching and optimizations introduced in Rails 4.

00:05:27.040

We also focused on eliminating unnecessary code to speed up the build process.

00:05:33.200

One of our first improvements involved preventing Bundler from downloading itself multiple times.

00:05:40.160

Previously, we would download and install Bundler twice during the build process.

00:05:47.040

To address this, we implemented caching for the Bundler gem, reducing network I/O.

00:05:53.440

We also eliminated duplicate checks in determining the type of application being deployed.

00:05:58.720

Richard took the lead on refactoring the detection process, simplifying it significantly.

00:06:04.640

This allowed us to perform checks only once, leading to further efficiency.

00:06:10.480

For those interested, Richard has given a previous talk about testing and improving the build pack.

00:06:17.040

Now, let me introduce Richard, who will present the next section.

00:06:24.079

Richard loves Ruby so much that he married it—almost literally! He got married right before our last RailsConf.

00:06:31.360

He's also a member of the Rails issues team and is recognized as a top Rails contributor.

00:06:39.120

Many of you might know him for creating a gem called Sextant, which simplifies route verification.

00:06:45.840

Both Richard and I live in Austin, and we have Ruby meetups at Franklin's BBQ. If you're ever in town, let us know!

00:06:55.200

Now, for the first part of this act, we will discuss app speed.

00:07:02.720

Before we dive into that, let's talk about document dimensions.

00:07:11.760

Originally, our slides were designed for widescreen, but the displays here are standard.

00:07:17.440

You will be able to see all the slides without any cut-offs.

00:07:24.160

In talking about app speed, I first want to discuss tail latencies.

00:07:30.000

Is anyone familiar with tail latencies? Some of you are, great!

00:07:36.880

This graph shows a normalized distribution with the number of requests on one side and response time on the other.

00:07:43.680

The further out you go, the slower the response becomes.

00:07:49.600

Customers on either side of this distribution have very different experiences.

00:07:55.680

We can see the average response, which can be misleading.

00:08:02.400

We estimate that roughly fifty percent of your customers get a response time at or below this average.

00:08:08.160

When we move up to Percentile 95, we can see that 95% of customers receive responses faster.

00:08:14.560

While the average may look good, having an understanding of the distribution is crucial for app performance.

00:08:22.160

We must consider not only average response times, but also the consistency of each request.

00:08:28.400

So, how can we address these tail latencies?

00:08:35.200

One solution launched this year was PX Dynos.

00:08:41.520

Whereas typical dynos only have 512 MB of RAM, a PX dyno offers six gigabytes of RAM and eight CPU cores.

00:08:48.800

This allows for better management of high traffic loads and improved processing speeds.

00:08:55.840

Scaling can be done both horizontally and vertically, which adds to the flexibility.

00:09:02.720

When faced with more requests than can be processed, simply scaling up by adding more dynos is one approach.

00:09:09.440

However, effective use of resources is key.

00:09:15.120

In the previous 512 MB dyno, you might think you’re utilizing space well by adding a couple of Unicorn workers.

00:09:21.120

In a PX dyno, adding two Unicorn workers might not utilize the resources effectively.

00:09:27.920

I’ve fallen in love with Puma, a web server designed by Evan Phoenix.

00:09:34.800

It was originally designed for Rubinius, but it also works quite well with MRI.

00:09:41.760

Puma manages requests by utilizing multiple processes or threads and can run in a hybrid mode.

00:09:48.560

If one process crashes, it doesn't affect the entire server.

00:09:55.440

However, even with MRI's Global Interpreter Lock (GIL), many I/O operations can still efficiently run in threads.

00:10:01.440

By implementing multiple threads, we can maximize resource utilization.

00:10:07.680

Scaling effectively within a dyno ensures that we take advantage of the greater RAM capacity.

00:10:14.560

Nonetheless, we must be careful to avoid exceeding memory limits, as this would slow down our application.

00:10:22.000

It’s also critical to consider slow clients.

00:10:28.080

A client connecting via a slow connection may consume resources unnecessarily.

00:10:33.600

Puma can buffer these requests, offering a safeguard against performance degradation.

00:10:40.000

To clarify threading concerns: Ruby is not commonly viewed as a thread-safe environment.

00:10:47.200

However, you can configure Puma to utilize only one thread if desired.

00:10:53.120

You can gradually adapt your application to be thread-safe.

00:10:59.920

Working within the context of application speed, we’ve done significant work to improve routing efficiency.

00:11:07.680

Shared distributed state across multiple machines can be inefficient.

00:11:13.440

In-memory state within a single machine is inherently faster.

00:11:19.680

The Heroku router is designed to quickly route requests to the appropriate dyno.

00:11:25.680

It employs a random algorithm to deliver requests efficiently, minimizing delays.

00:11:31.760

Having intelligent in-memory routing allows for faster processing.

00:11:37.680

A graph produced by the Rap Genius team illustrates request queuing.

00:11:43.840

The goal is to minimize request queuing, ensuring minimal wait times for customers.

00:11:51.920

The top line in the graph illustrates current performance, while mythological scenarios display ideal response times.

00:11:57.920

As we improve our request handling, we aim to reduce queuing times and enhance customer satisfaction.

00:12:05.440

With additional resources, we can push our performance further.

00:12:10.640

Ruby 2.0 introduced optimizations such as garbage collection improvements that help make processes more efficient.

00:12:17.680

As a community, it’s essential to address memory allocation concerns.

00:12:24.000

Enhanced performance means increased consistency across application experiences.

00:12:30.800

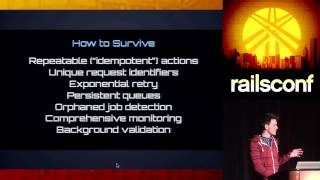

If you experience slow moments in your application, we rolled out a platform feature called HTTP request ID.

00:12:38.080

Each incoming request receives a unique identifier, which you can track in your logs.

00:12:45.920

This helps trace specific errors and debug runtime metrics in your Rails app.

00:12:52.000

If you’re encountering any performance issues, you can check the request ID to pinpoint bottlenecks.

00:12:58.880

Now, let's transition to Act Two, where Terence will discuss Ruby on the Heroku stack and community updates.

00:13:05.120

Thank you for your attention, everyone!

00:13:17.760

I will dive into some Ruby updates now.

00:13:23.360

Who's still using Ruby 1.8.7? Raise your hands!

00:13:30.000

Just one person; please consider upgrading.

00:13:35.520

And Ruby 1.9.2? A few more hands—good!

00:13:41.760

What about Ruby 1.9.3? I see a decent number of you.

00:13:48.480

Let’s not forget that Ruby 1.8.7 and 1.9.2 have reached end-of-life, and I strongly recommend upgrading!

00:13:56.800

As of this year, Ruby 1.9.3 will also reach end-of-life in February 2015, which is quite soon.

00:14:06.720

The minimum recommended Ruby version for Heroku apps is now 2.0.

00:14:12.320

You can declare your Ruby version in the Gemfile on Heroku to use this version.

00:14:21.920

We're committed to supporting the latest Ruby versions as they are released.

00:14:29.120

We roll out updates on the same day as new Ruby versions are released.

00:14:36.000

If any security updates are released, we ensure they are available as promptly as possible.

00:14:44.080

Only push your application again after updates; we avoid automatic upgrades to maintain your control over changes.

00:14:51.920

We publish all Ruby updates, especially security ones, through our changelog.

00:14:57.840

If you'd like to stay informed, consider subscribing to the dev center changelog.

00:15:05.760

You won’t receive excessive emails; it's low traffic.

00:15:11.680

Now, let’s discuss the Ruby team we have built.

00:15:18.080

In 2012, we hired three prominent members from Ruby core: Matsuda, Koichi, and Nobu.

00:15:24.720

Many attendees may not know who these figures are, so let me clarify their contributions.

00:15:31.280

If you look at the number of commits made to Ruby since 2012, you'll see significant activity from Nobu.

00:15:39.920

In fact, he’s the top committer, followed closely by Koichi.

00:15:48.640

I mistakenly included an entry for an unknown entity called 'svn' which isn't a real person.

00:15:56.000

I learned about this during my early contributions to Ruby.

00:16:03.360

Nobu, known as the 'Patch Monster,' is essential for fixing errors within Ruby.

00:16:09.920

Nobu consistently addresses various issues, making Ruby run much better.

00:16:14.160

To illustrate his impact, let’s discuss a couple of bugs he's resolved.

00:16:22.640

For example, the 'time.now equals an empty string' bug.

00:16:26.440

Nobu is also responsible for fixing bugs that involve segfaults in the Rational library.

00:16:31.680

The other key member is Koichi, who has made substantial contributions to Ruby's performance.

00:16:36.400

His work on the YARV virtual machine and performance optimization is commendable.

00:16:41.920

Recently, he has been focusing on the new garbage collection process.

00:16:48.800

The introduction of shorter GC pauses helps improve execution speed.

00:16:54.720

We appreciate the continuous enhancements to Ruby's performance.

00:16:59.440

Koichi has also been heavily involved in profiling the Ruby application experience.

00:17:06.800

Another important aspect is the infrastructure to support faster Ruby releases.

00:17:13.920

Improving release speed has been a high priority for us.

00:17:19.760

With that said, we are eagerly anticipating Ruby 2.2, and we’re keen to hear your ideas for enhancements.

00:17:27.600

We are now entering Act Three, where we will cover Rails 4.1 updates.

00:17:33.440

I hope everyone is enjoying RailsConf so far!

00:17:39.920

In Rails 4.1, we’ve made significant enhancements to Heroku.

00:17:47.200

We are focused on security by default.

00:17:53.120

The new secrets.yml file utilizes environment variables for configurations.

00:17:58.800

Upon deploying, this setup automatically generates a secure key.

00:18:05.760

You can modify the secret key base environment variable at any time.

00:18:11.680

Another essential improvement is support for the database URL environment variable.

00:18:16.800

This has been a long-standing feature but lacked reliable functionality.

00:18:22.480

Now, Rails 4.1 supports this feature out of the box, simplifying database connections.

00:18:29.680

If both the database URL and the database yaml file are present, their values will merge.

00:18:37.040

So now, ensuring proper database configuration has become more straightforward.

00:18:42.720

Additionally, we have made improvements to the Rails asset pipeline.

00:18:48.480

The asset pipeline has historically generated numerous support tickets for us.

00:18:55.680

To reduce confusion, Rails 4.1 will provide warnings in development if you forget to compile assets.

00:19:02.640

Rails will prompt you if you haven't added necessary files to the pre-compile list.

00:19:09.440

Thank you all for joining us today!

00:19:15.680

We'll have community office hours later where you can ask any Rails-related questions.

00:19:24.480

Richard will also be available for a book signing of his new Heroku book.

00:19:30.720

Thank you, everyone, and enjoy the rest of RailsConf!

Explore all talks recorded at RailsConf 2014

+129