00:00:10

Hey, am I miked? Cool. Uh, hi everybody!

00:00:15

Alright, I'm going to go off the title slide for a second and switch back to my code. I’m just going to put this slide up as my blank slide, but I thought you might want the title information to start with.

00:00:27

Alright, hi everybody! Is everyone having a good day so far here? Alright, that's good. That's encouraging.

00:00:34

Um, so I want to start with what happens when I get a new feature request. I want to talk a little bit about an application that I was building recently. To give you the details really quickly, it's an inventory management system.

00:00:48

It's a very simple one, and the feature that I was asked to create was an administrative feature where an administrator could verify the inventory of a set of items at a number of different locations in their larger site. They compare the recorded values against the observed values, update the values, and manage the inventory.

00:01:09

This was a relatively straightforward Rails feature, but because testing is part of my process, I started to think about how I was going to test it. There are a couple of options.

00:01:35

I could follow the classic RSpec TDD approach, starting with an end-to-end Capybara test, supporting that with various business logic tests, and focusing on smaller and smaller pieces of code before eventually writing unit tests. How many people does that describe? Is that the kind of testing practice you are familiar with?

00:01:58

Alright, maybe half of you. I could also follow the Rails core suggested testing practice, which is basically to write the code and then write a bunch of integration and system tests at the end. How many people does that describe? A few lonely people. I'm just curious.

00:02:35

Picking up on the last couple of talks: how many people here regularly run their entire test suite on their local machine before they check in? That's maybe a third? How many people's entire test suite runs only on a continuous integration server and never on anyone's local machine?

00:02:54

That is about two-thirds of the room. That is mildly terrifying to me. And that's okay; we're going to come back to that.

00:03:11

How many people here feel like they don't test enough? One, two, three, four, seven, twelve... Whatever the number is, testing less is actually an option too.

00:03:30

All of these choices come down to a decision I'm making: the process I choose and the way I test need to be worth it.

00:03:43

That raises the question: what does it mean for testing to be worth it? What does 'worth' mean in this situation? What does it all mean? More pointedly, how can I tell if testing is helping?

00:04:06

This talk is called 'High Cost Tests and High Value Tests.' My name is Noel Rappin. You can find me on Twitter at @noelrap. Feel free to message me during the talk; I won't see it until afterward, but I'm happy to answer questions. My DMs are also open.

00:04:27

If you want to share fantastic things about this talk on Twitter, you should consider yourself encouraged to do so. I work for a company in Chicago called Table XI. I also have a podcast called Tech Done Right.

00:04:51

We'll talk more about that at the end, but let's start with the main course. The question here is how you can measure the cost and the value not just of testing but of any software practice.

00:05:04

The honest answer is that we really don’t know. These things are complicated; there are a lot of factors. It's hard to say how much a given practice costs you and how much value a given practice brings.

00:05:27

Tests have an issue in that they serve many different purposes within a project. Tests are code, yes, but they also act as documentation. They also indicate how complete the code is, which makes them part of your process.

00:05:46

If you're doing TDD, tests also inform the design of your code. So they can have costs and provide value in a lot of different ways, and it's very hard to come up with a single way to measure that value.

00:06:06

But I'm going to do it anyway in the name of having something to discuss for the next 25 minutes. The metric I’m going to use here is time.

00:06:24

Time has a couple of advantages; it's super easy to measure, and we all have an intuitive sense that if you are building the right thing, the time you spend on something is correlated with its cost.

00:06:50

If you are not building the right thing, then none of the advice in this talk will help you. You should figure out why you’re not building the right thing and start building the right thing.

00:07:09

Also, most of the other positives of tests, like improving design and code quality, ideally pay off in saved time when building future features. Otherwise, you could argue that it's not worth doing.

00:07:35

So let's start with the fact that tests cost time. You hear this a lot: one of the problems with TDD is that it just takes too much time.

00:07:56

Let me break that down a little bit. What are the specific ways in which a test can cost you time over its lifecycle? First off, you have to write the test. That’s obviously time spent that you wouldn’t be spending if you weren’t testing.

00:08:12

There is a particular amount of time that you spend just typing or thinking about the test you’re going to write. Then the test runs many times, ideally on your local desktop machine, but for many of you it runs on a distant server.

00:08:28

This is a cost because, even if it's not a direct cost in your development process, it increases build time and limits how often you can run the suite.

00:08:46

A new person coming to the feature also needs to understand how the test works as part of their process of learning the code. Additionally, the test needs to be fixed when it breaks, whether or not it's breaking for a good reason.

00:09:11

Fixing a test that breaks for no good reason is a cost that comes specifically from bad testing, since a bad test will often fail intermittently.

00:09:31

On the other side, tests save time in a couple of different ways. They can save you time by improving the code design. A certain amount of time that you might spend whiteboarding or doodling can instead be spent writing tests.

00:09:50

A TDD advocate would say that tests actually improve the design of the code, saving you time in the future. One underrated point is that in development, it is often faster to run an automated test than to manually test the code.

00:10:05

For instance, testing that inventory management form manually may involve logging into the site, navigating to a particular page, inputting values into a form, and pressing submit. This could take a minute or two, whereas the end-to-end Capybara test runs in just a second or two.

00:10:24

I often find myself thinking that a task is too simple to need a test, and then I find myself repeating the manual process over and over, realizing I should have written that test a long time ago.

00:10:44

Tests also validate the code to some extent. While they don’t replace further QA testing, they can give you confidence that might not be present without them.

00:11:05

Finally, tests catch bugs faster. They can catch a bug before other code becomes dependent on the buggy behavior, which can save you time.

00:11:22

This can also save you money: catching a bug before it reaches production means it never gets the chance to lose you customers or run up server costs.

00:11:45

So we have four different kinds of costs: writing, running, understanding, and fixing. And we have four kinds of value: design, development speed-up, validation, and catching bugs.

00:12:04

One interesting point is that these costs and values happen at different times in the lifecycle of the test.

00:12:27

When you actually develop the test, you pay the cost of writing it. You gain some savings from the design and from not having to do a manual walkthrough, but then you need to live with the test forever.

00:12:47

From then on, you have the costs of running, understanding, and fixing, but the benefits of bug catching and validation also live in the codebase forever.
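To make the cost side of this concrete, here is a back-of-the-envelope sketch in Ruby of one test's lifetime time cost, split into the one-time writing cost and the ongoing costs of running, understanding, and fixing. The `TestCost` struct and its field names are hypothetical, and apart from the 30-minute writing time and the upper end of the system-test runtime range mentioned later in the talk, every number is made up for illustration. It also ignores the value side entirely.

```ruby
# Back-of-the-envelope model of one test's lifetime cost, in seconds.
# The struct and its field names are hypothetical; they map the four
# costs (writing, running, understanding, fixing) onto plain numbers.
TestCost = Struct.new(
  :write_seconds,      # time to write the test once
  :run_seconds,        # time for a single run
  :runs,               # how many times it runs over its lifetime
  :understand_seconds, # time for one person to learn what it checks
  :readers,            # how many people ever need to read it
  :fix_seconds,        # time to fix it once when it breaks
  :failures,           # how many times it breaks
  keyword_init: true
) do
  # Paid once, when the test is written.
  def upfront
    write_seconds
  end

  # Paid for as long as the test stays in the suite.
  def ongoing
    run_seconds * runs + understand_seconds * readers + fix_seconds * failures
  end

  def total
    upfront + ongoing
  end
end

# Illustrative system test: 30 minutes to write and 3 seconds per run are
# the talk's figures; the run, reader, and failure counts are made up.
system_test = TestCost.new(
  write_seconds: 30 * 60, run_seconds: 3, runs: 1000,
  understand_seconds: 300, readers: 2, fix_seconds: 900, failures: 1
)
puts system_test.total # prints 6300
```

The numbers are invented, but the shape is the point: the ongoing term keeps growing with `runs`, so a test that is slow to run can end up costing more than it took to write.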

00:13:05

Not only do these costs and values play out over time, they are also incurred by different people. The developer writing the test and the feature pays the writing cost and gets the up-front value, while the remaining costs and value are spread across the entire team throughout the project.

00:13:26

This structure makes it very challenging for any individual to confidently state whether this practice has value or this test was useful or not, as it can be very dependent on context.

00:13:50

Spoiler alert: I don’t really have an answer to how you can navigate this effectively. I’m not going to say what every single one of you should do right now, as you're all working in different contexts.

00:14:10

However, what I hope you'll gain in the next 20 minutes or so is a sense of how to think about these trade-offs in your own context and approach testing in terms of strategy rather than tactics.

00:14:31

I feel like a lot of test advice, including some I give, focuses too much on tactics. For example, how do I write this particular test? How do I use this specific RSpec matcher? Should I use Minitest instead?

00:14:58

We don't spend nearly enough time thinking about strategy: how many end-to-end tests should we write? When do I switch to a unit test? That's the kind of question I want to discuss.

00:15:13

I’m about 13 minutes in, and I’m glad you all are here. Forget everything I said up until now; I was just clearing my throat. I want to present some data from a small project I’ve been working on.

00:15:36

This is the inventory management project I worked on by myself for about 20 to 25 hours a week over 8 weeks, totaling around 200 hours of development.

00:16:04

Since I was the only developer, I got to design the architecture and set all the testing practices. I wound up with largely three different kinds of tests.

00:16:30

First, there were Capybara integration tests, technically RSpec system tests. The input for these tests is simulated user interaction, and the expected output is the resulting HTML.

00:16:50

Empirical profiling indicated that these tests run in between half a second and three seconds. It's hard to determine how long it takes to write them, but 30 minutes seems like a reasonable time to spend crafting one from scratch.

00:17:13

Let's agree to accept that number, though I understand it’s not scientifically proven.

00:17:30

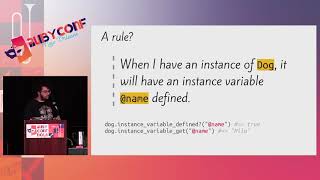

The second level of tests is what I call workflow tests. The structure I use in Rails is to have the controller pass data off to a workflow or service object that handles the actual business logic.

00:17:48

The tests for this workflow pass parameters to the object and then check the database to ensure it made the expected changes. These tests run much faster, anywhere from five-hundredths to three-tenths of a second.

00:18:08

I actually timed myself writing these tests, and they took about 15 minutes each.
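Here is a minimal plain-Ruby sketch of that workflow-object structure and a workflow-level check, assuming a hypothetical `InventoryVerificationWorkflow` and an in-memory `InventoryStore` standing in for the database. In the real Rails app the workflow would write through ActiveRecord and the check would be an RSpec spec; none of these names come from the talk.

```ruby
# Hypothetical stand-in for the database: item name => { location => count }.
class InventoryStore
  def initialize
    @counts = Hash.new { |hash, item| hash[item] = {} }
  end

  def count(item, location)
    @counts[item][location]
  end

  def set_count(item, location, value)
    @counts[item][location] = value
  end
end

# Hypothetical workflow object: the controller would hand it params, and
# it owns the business logic of recording observed inventory counts.
class InventoryVerificationWorkflow
  attr_reader :discrepancies

  def initialize(store:, location:, observed:)
    @store = store
    @location = location
    @observed = observed # item name => observed count
    @discrepancies = {}
  end

  def run
    @observed.each do |item, observed_count|
      recorded = @store.count(item, @location)
      # Remember any mismatch as [recorded, observed] for the admin.
      @discrepancies[item] = [recorded, observed_count] if recorded != observed_count
      @store.set_count(item, @location, observed_count)
    end
    self
  end
end

# Workflow-level check: pass params in, then inspect the "database".
store = InventoryStore.new
store.set_count("widget", "warehouse", 10)
workflow = InventoryVerificationWorkflow.new(
  store: store, location: "warehouse", observed: { "widget" => 8, "gadget" => 3 }
).run
raise "widget count not updated" unless store.count("widget", "warehouse") == 8
```

The check never touches the controller or any HTML; it hands the workflow its parameters and then inspects the store, which is why this level of test can run so much faster than a system spec.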

00:18:32

Finally, we have unit tests, which test the input and output of a single method. This includes various things in the code base, such as ActiveRecord finders.

00:18:51

In total, this small project has 180 tests, which is fewer than many projects. The breakdown includes 22 system-level specs, which take almost 13 seconds to run.

00:19:06

Interestingly, while the system tests accounted for 12% of the tests, they represented 75% of the total runtime.

00:19:20

I spent a similar amount of time writing each class of tests, but the system tests were both the slowest and the fewest.

00:19:36

I also found that the slowest tests, the ones that needed a JavaScript runtime, took up 40% of the runtime despite being a small slice of the tests.
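Working through the small project's numbers shows how lopsided the runtime is. This sketch takes "almost 13 seconds" as 13, so every derived figure is approximate; the variable names are just illustrative.

```ruby
# Figures quoted in the talk for the small inventory project.
total_tests    = 180
system_tests   = 22
system_runtime = 13.0 # seconds ("almost 13 seconds")
system_share   = 0.75 # system specs' share of total runtime

test_share    = system_tests.fdiv(total_tests)                # about 0.122
total_runtime = system_runtime / system_share                 # about 17.3 s
other_runtime = total_runtime - system_runtime                # about 4.3 s
avg_system    = system_runtime / system_tests                 # about 0.59 s per spec
avg_other     = other_runtime / (total_tests - system_tests)  # about 0.027 s per test

puts format("system specs: %.1f%% of tests, %.0f%% of runtime", test_share * 100, system_share * 100)
puts format("implied total runtime: %.1f s", total_runtime)
puts format("avg system spec: %.2f s, avg other test: %.3f s", avg_system, avg_other)
```

By this rough estimate, an average system spec takes about 0.59 seconds while an average test from the other layers takes under 0.03 seconds, roughly a twentyfold difference.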

00:19:52

This variance became even more meaningful when I looked at a separate project, one that has been in development for four or five years with many developers, including myself.

00:20:07

In that project, there were a lot of Cucumber specs that took about 4.5 seconds each, versus a much larger number of unit tests that ran at about two-tenths of a second.

00:20:28

I would characterize the overall project as having too many end-to-end tests for its size.

00:20:45

These end-to-end tests were 18% of the total tests but accounted for about two-thirds of the runtime.

00:21:03

What does this data mean? Honestly, it may not carry much weight outside its specific context, but it does suggest how to balance the time you spend on different kinds of tests.

00:21:19

The rule of thumb is: the larger and more complicated the test, the fewer of that kind of test you should write, while the smaller and quicker tests should be more numerous.

00:21:38

This is basically the testing pyramid, but now I have some empirical experience to support it.

00:22:02

Another insight I gained is that as you write similar tests, the cost to write subsequent tests diminishes because you often reuse code from earlier tests.

00:22:20

Therefore, the short-term cost of writing a test may not depend much on which type of test you choose. It's important to consider long-term costs.

00:22:44

So if someone suggests you should mostly write integration tests and downplay unit tests, you could argue that unit tests offer long-term savings.

00:23:04

In the life cycle of a project, once the test is written, its long-term cost primarily depends on how long it takes to run and how often it fails.

00:23:31

Both factors are influenced by the complexity of the test. A simple unit test is less likely to fail than a complex integration test.

00:23:52

The long-term savings from writing tests arise from their focus. A focused test makes failure detection much easier and clarifies where the issue lies.

00:24:15

Put another way, the way to avoid having a slow test suite is simply not to write slow tests.

00:24:36

No individual test is responsible for a slow test suite. A consistently slow suite is the collective result of many decisions to write a slower test instead of a quicker alternative.

00:24:57

The next part I want to discuss is how you can think strategically about testing to avoid slow tests. In the case of my inventory management software, the first test I wrote was a Capybara end-to-end test.

00:25:15

This test runs through all parts of the system, including the user interface, controller, and database.

00:25:37

However, this end-to-end test can fail for numerous reasons, all of which can be difficult to troubleshoot.

00:26:01

In response to that, I then wrote workflow tests that are more isolated. These tests narrow the scope of a failure because they only deal with the logic in the workflow.

00:26:26

Next, I wrote individual unit tests focused on specific pieces that might fail. That way, when failures do occur, they lead directly to the underlying issue.

00:26:47

Continuing with this thought process during development makes it clear where in the code base to look whenever a failure is detected.

00:27:05

When a client asks for a new feature, I repeat this process: do I write this acceptance criterion as a system test, a workflow test, or a unit test?

00:27:25

In this instance, I ended up writing a workflow test that encapsulates how the system logic works.

00:27:43

There was a bug where, if a user entered a name that was already in the inventory, the system created a duplicate of that item. I was faced with the same choice: do I write this as a unit test or as another workflow test?

00:28:06

Ultimately, I opted for a workflow test to follow the existing pattern, but I recognized that it perhaps should have been a targeted unit test.
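Here is a sketch of what a targeted unit test for the duplicate-name bug might look like, written in plain Ruby so it runs on its own. `Inventory`, `Item`, and `add_item` are hypothetical names; in an ActiveRecord model, `find_or_create_by(name: ...)` would play the role of `add_item`'s find-then-create step.

```ruby
# Hypothetical in-memory inventory; in a Rails app this would be an
# ActiveRecord model rather than an array of structs.
Item = Struct.new(:name, :quantity)

class Inventory
  def initialize
    @items = []
  end

  # Return the existing item with this name instead of adding a duplicate.
  def add_item(name, quantity: 0)
    find_by_name(name) || (@items << Item.new(name, quantity)).last
  end

  def find_by_name(name)
    @items.find { |item| item.name == name }
  end

  def size
    @items.size
  end
end

# Unit-level check: adding the same name twice must not create a duplicate.
inventory = Inventory.new
first = inventory.add_item("widget", quantity: 5)
second = inventory.add_item("widget")
raise "duplicate item created" unless inventory.size == 1 && first.equal?(second)
```

If this check fails, the failure points straight at `add_item`, whereas a workflow test for the same bug would also exercise parameter handling and the rest of the update.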

00:28:31

When reviewing tests, be on the lookout for excessive copy-pasting, as that usually signals that you might be testing at the wrong level.

00:28:50

If multiple tests are failing due to a single bug, it’s worth considering whether all those tests are necessary.

00:29:10

It's okay to delete tests that no longer provide meaningful value, which is quite possibly the scariest piece of advice I'll share.

00:29:31

Just as important: when you design integration tests, you want them to save development time and to cover the initial path through the code.

00:29:49

Tests provide real value, but you should weigh each test's value against its cost as part of your overall strategy.

00:30:11

Thank you for your attention, and feel free to find me on Twitter at @noelrap. If you would like to read more, I have a book called 'Rails 5 Test Prescriptions' that goes into depth on testing Rails applications.

00:30:32

It's available now in beta, and the final printed version is set for release in January. If you want to grab a copy, check the promotional URL; you might even get a discount.

00:30:54

Lastly, check out my podcast, 'Tech Done Right', where I talk with developers and conference speakers about both technical and non-technical topics.

00:31:14

Thank you all for spending your time here today, and I hope you enjoy the rest of the conference!