00:00:08.900

I think we're right about the start time, so I'm going to jump in and let people struggle with the technology.

00:00:17.100

I'm Andrew, and I work at Stripe on the developer experience of our core product codebase. It's a few million lines of Ruby, and it's a pretty big mono-repo with several large services, including the entire Stripe API.

00:00:30.810

Notably, it's not built on Rails; it's constructed using Sinatra and various other libraries we've pieced together over time to create our unique solution.

00:00:44.879

One of the goals of my team is to keep the iteration loop very tight during development. We aim to make it fast for developers to edit code and test it, whether by running a sample request against a service or executing a unit test.

00:01:01.550

Last year, we faced a significant issue. Originally, the discussion focused on code compiling, but Ruby doesn't compile code in the traditional sense. Instead, it took an exceedingly long time for our code to reload.

00:01:12.990

Actually loading all of the Ruby code in our codebase took too long, which hindered our development process. The big challenge here was that we have a large organically grown codebase where everything is tightly coupled and, in some ways, a bit messy.

00:01:30.540

Long-term, we want to untangle this mess and ensure clear separations between modules, defining roles and interfaces. However, in the short term, we still need a fast way to iterate on this code.

00:01:48.899

We reached a point where touching basically any file required reloading the entire codebase, which took more than 30 seconds. During this time, developers would be waiting, often uncertain if their changes had the intended effect.

00:02:06.060

A lot of this delay stemmed from Ruby's slow code loading, which I discovered while generating a few million lines of synthetic code across Ruby, Python, and JavaScript.

00:02:11.160

This synthetic benchmark isn't a perfect reflection of the real world but gives some insight. Python turned out to be the fastest; its interpreter generates parsed bytecode on the initial run, storing it in .pyc files.

00:02:29.959

If handed a completely new Python codebase and required to load it for the first time, it would be slow. However, during development, when you're altering just one file and reloading, Python performs exceptionally well. JavaScript also exhibits speed advantages.

00:02:41.180

However, Ruby is painfully slow in this instance when the codebase becomes large enough. I want to point out that recent Ruby versions adequately facilitate this process, allowing for the Python-like pyc trick. The instruction sequence library, part of the standard library, enables parsing and reading from disk, improving load times significantly.

00:03:09.440

The benchmarks I conducted demonstrated it worked well, but in practice, our instruction sequence compilation didn't yield the same advantages compared to the synthetic benchmark. We discovered that our file reloading involved significantly more complexity than just parsing the code.

00:03:38.239

So, we ended up implementing an autoloader which reduced our code reloading time from 35 seconds to about five seconds. Another significant advantage of this change was that we were able to eliminate all require relative statements from the codebase.

00:04:05.750

Now, developers no longer needed to explicitly define the path to any file they called upon. Let’s discuss how that works in further detail.

00:04:24.020

In this simplified view of a service, for example, an API service, you could have functionality to make or refund a charge. Each file has require relative statements at the top. As a result, any changes necessitate reloading all related files, which is counterproductive.

00:04:54.710

In reality, all that might be needed is to get the service listening on a socket to start processing requests. If you're testing something that doesn't involve refunds, why load the refund-related code? We needed a way to avoid reloading all code and find an efficient method to load only the necessary components.

00:05:16.070

The solution for us was utilizing Ruby’s built-in auto-loader. Defined within the module, it allows us to say, "Hey Ruby, if you're in the X namespace and you see a request for Y but can’t find it, go load this file instead." Then, once that file loads, Ruby can continue operating smoothly.

00:05:56.670

We auto-generated a series of stub files utilizing static analysis, which tells Ruby where to find all files in our codebase. We built a daemon running in the background that constantly checks and updates these auto-loader stubs with any code changes.

00:06:29.770

This ensures that we can boot the API up and have it listen on a port while problematically loading classes and modules as they’re requested by developers. One of the advantages of autoloaders is that they guess where files are based on how they’re defined. So, this only has to change if there is a change in definitions.

00:06:55.830

If you're simply modifying a method, no other changes will be necessary, allowing you to start running code much faster and sidestepping the lengthy build time.

00:07:16.220

Let me provide more details about the static analysis work we undertook. This chunk of code on the left defines several modules and constant literals. We utilized Ruby’s parser gem to convert it into an abstract syntax tree (AST), which you see represented here as a collection of s-expressions.

00:07:54.180

We extract a range of information from this AST and will delve deeper into the specific pieces of data we need later. However, a critical data structure we created is our definition tree—a structured representation of all definitions across the codebase.

00:08:38.250

Each of these nodes contains various metadata that aids us in the auto-loader generation process, allowing us the functionality to direct Ruby on where to seek unresolved constants.

00:08:59.580

Beyond knowing where everything lies defined, we aspired to gather comprehensive information regarding each reference in our codebase. We desired a full dependency graph, tracking how everything is interlinked. This provided us increased confidence that our rewrites wouldn't break existing functionality.

00:09:39.680

Initially, we attempted to load all the code dynamically via Ruby; it was a cumbersome process. This effort wasted extensive time—it’s particularly challenging to load Ruby code that’s designed to be loaded in arbitrary orders, as developers make various assumptions regarding their order.

00:10:05.590

The manual effort led many developers to execute scripts directly in the root directory for local tasks, which opened further doors for accidental execution of unintended commands in production. Therefore, we shifted to a static analysis approach.

00:10:31.740

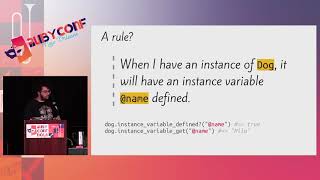

We wrote static analysis code in Ruby that mimicked Ruby's constant resolution methods, employing static analysis techniques instead of invoking Ruby directly. Understanding how Ruby handles constant resolution is essential in mastering this process.

00:10:54.760

The core concept in Ruby constant resolution is the nesting of contexts. The resolution of a constant in Ruby is influenced by the surrounding definitions that may appear several lines above it. For instance, the way a constant resolves can significantly differ based on the overall nesting.

00:11:39.700

Given our definition tree, we can utilize this nested context to resolve references. We begin checking in the innermost context and work our way up the hierarchy, validating step by step until we identify where each component lives.

00:12:17.030

Alongside the nesting resolution, Ruby also checks through its ancestor list. If a constant isn’t defined within a given class but exists at an ancestor level, Ruby allows that association to register.

00:12:57.230

Continuing with another example, we have to break the reference down into its individual components. This means we check for the child in the current level, then traverse the ancestor list for additional identification.

00:13:40.180

Our approach involves tracking all unresolved constants in the codebase and looping through to reevaluate them until nothing remains unresolved. In an ideal situation, all constants would resolve perfectly, but gems introduce complications.

00:14:00.610

For instance, when utilizing gems, there are situations with C extensions or intricate meta-programming that can shift constant resolutions. To counter this, instead of preemptively analyzing them, we load everything that the codebase requires.

00:14:22.250

We parse all require statements during the initial parsing of the codebase, activating gem resolution by loading everything in turn and validating resolutions until we compile a full list of resolved references.

00:15:10.560

Through this work, we uncovered actual bugs and unresolved error paths that hadn't been realized prior. Despite some paths being outright ignored due to complexity, we gained immense insight into our codebase.

00:16:03.960

Let's reflect on the steps required to ensure this process is safe for production. Not all code can seamlessly operate with a conventional loader, particularly one being dynamically generated like ours.

00:16:39.170

One of my first tasks was to run functional tests on our API, but charges routinely failed due to missing backends since they couldn't be accessed without being explicitly required.

00:17:05.300

This arose from the assumption in the code that these files would be required at boot time with the necessary side effects, creating a deadlock scenario. By enforcing engineers to explicitly specify requirements, we circumvent this issue.

00:17:50.000

With the tools we had at our disposal from static analysis, we exploited the generated ancestry trees to produce a dynamic list of required components, which drastically decreased manual specification.

00:18:09.530

Initially, I shared a stub file representing the autoloader, but this was somewhat of a simplification. The actual loader stubs we generate involve greater complexity. Primarily, we require gems before loading user-defined files to resolve potential dependency issues.

00:18:58.240

We also call hooks upon instantiation to enforce conditions regarding what we will allow to load in our context, like ensuring no test files are loaded in production.

00:19:38.090

This includes pre-declaring constants to avoid circular dependency issues and dynamically retrieving next steps utilizing well-mapped lookup strategies for potential constants.

00:20:16.260

In short, we also needed to build checks that prevented the loading of partial classes or conditions. Ultimately, we established rules to bind dynamic behaviors and get rid of code structures that inadvertently introduced complexity.

00:20:56.750

We banned practices that produced uncertainty in loading, such as dynamically set constants or reopening classes too late in the execution pipeline.

00:21:38.820

One notable instance involved instances of class reopening that produced unpredictable behavior when constants were referenced. When certain expectations were made, and the classes weren't defined in a particular order, the output became erratic.

00:22:26.200

Instead, by forbidding such practices, we vastly simplified the code structure. We've encouraged developers to view classes and modules as solitary definitions rather than open-ended traditions, limiting ambiguity and improving clarity.

00:23:20.450

Now, leveraging our enhanced dependency graphs, we've constructed several powerful features, leading to selective test execution. Suppose there exist two defined classes; if a behavior changes within a parent class, the required tests in the child class must also re-run, whereas changes to the child won't necessitate that all base tests execute.

00:24:05.000

This implementation within our CI framework reduces execution time by permitting fewer tests to run, translating to considerable efficiency gains.

00:24:48.340

As a result, when we began visualizing our dependency graph, we recognized its tangleness, dubbing it the 'Gordian knot.' This visual representation, while complex, demonstrated the convoluted relationships developers needed to navigate.

00:25:24.600

To tackle this complexity, we required a methodology for disentangling bits into component parts. Utilizing the dependency information allowed us to methodically remove sections from strong linkages and restrict future reconnections.

00:26:05.900

We have started enabling developers to define packages by creating UML files and specifying namespaces in the directory, importing fellow namespaces.

00:26:51.500

If those boundaries are crossed, violations are triggered, and warnings issue to discourage undesired coupling. As part of code review processes, this visibility allows teams to have informed discussions regarding dependencies when adding functionalities.

00:27:30.990

Looking forward, we aim to implement type-checking mechanisms to add more structure to our Ruby code. We've initiated projects where type annotations show up, checked dynamically but also through static analyzers to identify coding inconsistencies.

00:28:25.240

In summary, loading a million lines of Ruby in five seconds doesn't solely rely on quick optimizations of speed. It requires strategic application of autoloaders and directing Ruby to load less code while allowing a modular architecture.

00:29:11.790

Thanks for your attention, and I appreciate the opportunity to share our journey with you.