00:00:11.549

Today, I'll talk about the Just-In-Time (JIT) compiler for MRI, or YARV. I am Takashi Kokubun, and I'd like to introduce myself first. Last year at the conference, many of the attendees from Japan wore traditional clothes, and some people seemed confused by the attire. This year, I have opted for a more convenient T-shirt. Please remember my face along with this outfit.

00:00:34.780

Even though I attended last year and met some of you, some of you may still say, 'Nice to meet you,' which is understandable. My project this year is called 'Hammer.' It is an original Ruby implementation that is approximately eight times faster than the current Ruby implementations. I am interested in optimizing the performance of the Ruby engine, and I joined the Hammer organization to pursue this goal. This year, I managed to optimize the engine, making it five times faster.

00:01:14.500

I am well acquainted with the Ruby community, having been a maintainer in the Ruby organization, where I made MRI two times faster in version 2.5. I love the Ruby language, so I decided to optimize it further. Now, I would like to introduce the JIT compiler I developed, which is the focus of this talk.

00:01:46.258

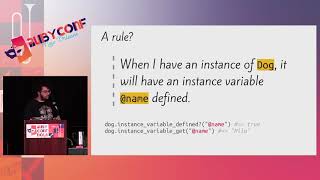

The first thing to note is that JIT stands for Just-In-Time compilation. A JIT compiler speeds up a program by compiling bytecode into native code while the program is running. Many of you may not have developed a JIT compiler, so let me clarify what it does. For example, consider a Ruby method that performs a multiplication. MRI compiles this method into YARV bytecode, an instruction sequence that the VM can execute but that is not native machine code. The VM executes this bytecode by pushing values onto a stack and popping them off, interpreting one instruction at a time.
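
To make the stack model concrete, here is a toy interpreter in Python for a multiplication method such as `def mult(a, b); a * b; end`. The opcode names echo real YARV instructions (`getlocal`, `opt_mult`, `leave`), but the tuple encoding and the `interpret` function are hypothetical simplifications for illustration, not MRI's actual VM.

```python
# A hypothetical, simplified instruction set loosely modeled on YARV.
# Each instruction is an (opcode, operand) pair.
MULT_ISEQ = [
    ("getlocal", 0),     # push local variable `a`
    ("getlocal", 1),     # push local variable `b`
    ("opt_mult", None),  # pop two values and push their product
    ("leave", None),     # return the value on top of the stack
]


def interpret(iseq, args):
    """Execute bytecode one instruction at a time using an operand stack."""
    stack = []
    pc = 0
    while True:
        op, operand = iseq[pc]  # fetch and decode the next instruction
        pc += 1
        if op == "getlocal":
            stack.append(args[operand])
        elif op == "putobject":
            stack.append(operand)
        elif op == "opt_mult":
            rhs = stack.pop()
            lhs = stack.pop()
            stack.append(lhs * rhs)
        elif op == "leave":
            return stack.pop()
        else:
            raise ValueError(f"unknown instruction: {op}")


print(interpret(MULT_ISEQ, [6, 7]))  # → 42
```

Every call pays for the fetch, the decode, and the stack traffic, and that per-instruction overhead is what a JIT compiler tries to remove.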

00:02:39.070

With a JIT compiler, however, this process is quite different. The JIT compiler identifies frequently executed methods, the hotspots, and translates them into native code. When the VM later calls a compiled method, it runs the native code directly instead of interpreting the bytecode, which removes the overhead of decoding and dispatching each instruction and results in faster performance.
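
The hotspot-then-switch behavior can be sketched in Python as below. This is a minimal sketch under stated assumptions: the threshold of 3 calls, the `JitMethod` class, and `jit_compile` are hypothetical, and a generated Python function stands in for native code, since the point is only the control flow of counting calls, compiling once, and then bypassing the interpreter.

```python
JIT_THRESHOLD = 3  # hypothetical: compile a method after this many interpreted calls

MULT_ISEQ = [("getlocal", 0), ("getlocal", 1), ("opt_mult", None), ("leave", None)]


def interpret(iseq, args):
    """Slow path: decode and execute each instruction (straight-line code only)."""
    stack = []
    for op, operand in iseq:
        if op == "getlocal":
            stack.append(args[operand])
        elif op == "opt_mult":
            rhs = stack.pop()
            stack.append(stack.pop() * rhs)
        elif op == "leave":
            return stack.pop()
    raise ValueError("bytecode ended without leave")


def jit_compile(iseq):
    """Translate bytecode into a Python function, standing in for native code.

    Stack slots become plain variables, so no stack or dispatch loop remains.
    """
    lines = ["def compiled(args):"]
    depth = 0
    for op, operand in iseq:
        if op == "getlocal":
            lines.append(f"    s{depth} = args[{operand}]")
            depth += 1
        elif op == "opt_mult":
            depth -= 1
            lines.append(f"    s{depth - 1} = s{depth - 1} * s{depth}")
        elif op == "leave":
            lines.append(f"    return s{depth - 1}")
    namespace = {}
    exec("\n".join(lines), namespace)
    return namespace["compiled"]


class JitMethod:
    def __init__(self, iseq):
        self.iseq = iseq
        self.call_count = 0
        self.compiled = None  # set once the method is detected as hot

    def call(self, *args):
        if self.compiled is not None:
            return self.compiled(args)  # fast path: run the compiled code directly
        self.call_count += 1
        if self.call_count >= JIT_THRESHOLD:
            self.compiled = jit_compile(self.iseq)  # hotspot: compile for later calls
        return interpret(self.iseq, args)


mult = JitMethod(MULT_ISEQ)
results = [mult.call(6, 7) for _ in range(5)]
print(results, mult.compiled is not None)  # → [42, 42, 42, 42, 42] True
```

The first three calls are interpreted; the third one triggers compilation, and from then on the compiled function is used without touching the interpreter.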

00:03:21.230

Why is it necessary to introduce such complexity into Ruby? The primary motivation is speed. If Ruby became significantly faster, potentially two times faster, applications built with it could run faster as well, to the extent that they spend their time executing Ruby code. A faster Ruby means a better user experience, which is essential for applications that rely on quick execution.

00:03:57.440

Throughout the year, I also developed distributed queue middleware in Java because of performance constraints. However, I would ultimately prefer to use Ruby for its productivity benefits. Another consideration is the trade-off between Just-In-Time and Ahead-Of-Time compilation. A JIT compiler compiles methods during execution, which enables optimizations that plain bytecode interpretation cannot provide.

00:04:43.200

One challenge with Ahead-Of-Time compilation is that all the optimization work happens before execution, which can significantly slow down startup. A JIT compiler, in contrast, compiles methods during execution, so runtime information, such as which methods are hot and which types actually appear, can guide its optimizations. This is crucial for a language as dynamic as Ruby.
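
One common way a JIT turns runtime information into an optimization is type specialization: profile the operand types, generate code for the most common case, and guard that code so it falls back when the assumption breaks. The Python sketch below shows only that structure; `SpecializingAdd`, its threshold, and the deoptimization counter are hypothetical names, and in Python the "fast path" is not actually faster.

```python
from collections import Counter

_GUARD_FAILED = object()  # sentinel returned when a specialization's guard fails


class SpecializingAdd:
    """Profile operand types at run time, then specialize for the most common pair."""

    def __init__(self, threshold=3):
        self.threshold = threshold
        self.profile = Counter()  # observed (type(a), type(b)) pairs
        self.fast_path = None
        self.deopts = 0

    @staticmethod
    def generic_add(a, b):
        return a + b  # fully dynamic: works for any operand types

    @staticmethod
    def specialize(expected_a, expected_b):
        def fast(a, b):
            # Guard: the specialized code is valid only for the types seen while profiling.
            if type(a) is not expected_a or type(b) is not expected_b:
                return _GUARD_FAILED
            return a + b
        return fast

    def __call__(self, a, b):
        if self.fast_path is not None:
            result = self.fast_path(a, b)
            if result is not _GUARD_FAILED:
                return result
            self.deopts += 1  # assumption violated: fall back to the generic code
            return self.generic_add(a, b)
        self.profile[(type(a), type(b))] += 1
        if sum(self.profile.values()) >= self.threshold:
            hot_types = self.profile.most_common(1)[0][0]
            self.fast_path = self.specialize(*hot_types)
        return self.generic_add(a, b)


add = SpecializingAdd(threshold=3)
for i in range(3):
    add(i, 1)           # profiling phase: sees (int, int) three times
print(add(10, 20))      # → 30 (specialized path, guard passes)
print(add("a", "b"))    # → ab (guard fails, falls back)
print(add.deopts)       # → 1
```

An Ahead-Of-Time compiler would have to handle every possible type up front, while this approach only commits to what the running program actually did.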

00:05:32.670

Our current JIT implementations have shown promising results. The two JIT implementations I worked on, the ones you see here, demonstrate significant performance gains over Ruby 2.5. They have doubled performance, which makes them strong candidates for merging into the Ruby interpreter.

00:06:18.320

I want to take this opportunity to explain how these JIT implementations work. There are three implementations I want to highlight, two of which I developed over the past year. Each of these compilers improves performance in its own way, focusing on optimizing Ruby's core message handling.

00:06:43.310

After finishing my previous implementation, I created a new JIT compiler called 'MJet,' which was motivated by the 2015 Devika keynote presentation. I introduced some techniques for inlining Ruby method calls, which makes execution faster and more efficient. This progress was first demonstrated using the Ruby VM series.

00:07:18.490

During execution, the JIT compiler monitors the bytecode to find frequently called methods. Using profiling, it identifies hotspots and compiles those methods into native code, improving runtime performance. My implementation shows how we derive native code from the bytecode and execute it efficiently.
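
A JIT that, like MJIT, produces native code by way of C source first has to translate bytecode into C. The toy Python translator below shows one part of that idea: each stack slot becomes a C local variable, so the pushes and pops vanish from the generated code. The name `translate_to_c`, the tuple instruction encoding, and the use of plain `long` values are hypothetical simplifications; real Ruby values are tagged objects, and MJIT's generated code is considerably more involved.

```python
MULT_ISEQ = [("getlocal", 0), ("getlocal", 1), ("opt_mult", None), ("leave", None)]

C_BINOPS = {"opt_plus": "+", "opt_minus": "-", "opt_mult": "*"}


def translate_to_c(name, iseq, arity):
    """Emit C source for straight-line toy stack bytecode (hypothetical sketch)."""
    params = ", ".join(f"long l{i}" for i in range(arity))
    out = [f"long {name}({params}) {{"]
    declared = set()
    depth = 0  # stack depth is known statically for straight-line code

    def assign(slot, expr):
        # Declare each stack slot once; later writes just reassign it.
        prefix = "" if slot in declared else "long "
        declared.add(slot)
        out.append(f"    {prefix}s{slot} = {expr};")

    for op, operand in iseq:
        if op == "getlocal":
            assign(depth, f"l{operand}")
            depth += 1
        elif op == "putobject":
            assign(depth, f"{int(operand)}L")
            depth += 1
        elif op in C_BINOPS:
            depth -= 1
            assign(depth - 1, f"s{depth - 1} {C_BINOPS[op]} s{depth}")
        elif op == "leave":
            out.append(f"    return s{depth - 1};")
        else:
            raise ValueError(f"unsupported instruction: {op}")
    out.append("}")
    return "\n".join(out)


print(translate_to_c("mult", MULT_ISEQ, 2))
# Prints:
# long mult(long l0, long l1) {
#     long s0 = l0;
#     long s1 = l1;
#     s0 = s0 * s1;
#     return s0;
# }
```

Once the stack is gone, a C compiler can keep these values in registers, which is where much of the speedup over interpretation comes from.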

00:08:02.980

That leads to another essential reason we want such optimizations for Ruby: saving money through better resource utilization. If Ruby's execution can be optimized effectively, fewer servers may be needed to handle the same computational load, which translates into cost savings for businesses.

00:08:51.510

One focal point for JIT performance is function inlining. My work has led to optimizations in this area, demonstrating that many computational tasks can be streamlined. However, I have also identified challenges where Ruby's dynamic nature makes certain optimizations hard to predict and apply safely: for example, because a method can be redefined at any time, an inlined call is only valid until its callee changes.
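
Inlining and its redefinition hazard can be sketched together in Python. In this minimal sketch, assuming a toy bytecode in which `send` pops `argc` arguments and calls a method by name, `inline_sends` splices a callee's body into the caller, and a hypothetical global `method_serial` (bumped on every redefinition) guards the inlined code so it falls back to normal dispatch once a callee changes. All names are illustrative, and only straight-line callees whose single `leave` is their last instruction are handled.

```python
# Hypothetical toy bytecode. "send" pops `argc` arguments and calls a method by name.
METHODS = {
    # double(x) = x * 2
    "double": {"locals": 1, "iseq": [("getlocal", 0), ("putobject", 2), ("opt_mult", None), ("leave", None)]},
}
# caller(x) = double(x) + 1
CALLER = {"locals": 1, "iseq": [("getlocal", 0), ("send", ("double", 1)),
                                ("putobject", 1), ("opt_plus", None), ("leave", None)]}
STATE = {"method_serial": 0}  # bumped whenever any method is (re)defined


def redefine(name, method):
    METHODS[name] = method
    STATE["method_serial"] += 1


def run(method, args):
    frame = list(args) + [None] * (method["locals"] - len(args))
    stack = []
    for op, operand in method["iseq"]:
        if op == "getlocal":
            stack.append(frame[operand])
        elif op == "setlocal":
            frame[operand] = stack.pop()
        elif op == "putobject":
            stack.append(operand)
        elif op in ("opt_plus", "opt_mult"):
            rhs, lhs = stack.pop(), stack.pop()
            stack.append(lhs + rhs if op == "opt_plus" else lhs * rhs)
        elif op == "send":
            name, argc = operand
            call_args = stack[len(stack) - argc:]
            del stack[len(stack) - argc:]
            stack.append(run(METHODS[name], call_args))  # dynamic lookup on every call
        elif op == "leave":
            return stack.pop()
    raise ValueError("bytecode ended without leave")


def inline_sends(method):
    """Replace each send with the callee's body (straight-line callees only)."""
    iseq, num_locals = [], method["locals"]
    for op, operand in method["iseq"]:
        if op != "send":
            iseq.append((op, operand))
            continue
        name, argc = operand
        callee = METHODS[name]
        base = num_locals
        num_locals += callee["locals"]
        # Move the arguments from the stack into fresh caller locals (last argument on top).
        for i in reversed(range(argc)):
            iseq.append(("setlocal", base + i))
        for c_op, c_operand in callee["iseq"][:-1]:  # drop the callee's trailing leave
            if c_op in ("getlocal", "setlocal"):
                c_operand += base  # renumber callee locals into the caller's frame
            iseq.append((c_op, c_operand))
    return {"locals": num_locals, "iseq": iseq}


def jit_with_inlining(method):
    compiled_serial = STATE["method_serial"]
    inlined = inline_sends(method)

    def call(*args):
        if STATE["method_serial"] != compiled_serial:
            return run(method, args)  # a method was redefined: the inlined code is stale
        return run(inlined, args)

    return call


caller = jit_with_inlining(CALLER)
print(caller(5))  # → 11 (inlined double)
redefine("double", {"locals": 1, "iseq": [("getlocal", 0), ("putobject", 3), ("opt_mult", None), ("leave", None)]})
print(caller(5))  # → 16 (guard detects the redefinition and falls back)
```

The inlined version skips the method lookup and frame setup for `double`, but it is only correct while the serial it captured is still current.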

00:09:36.990

The broader objective of the optimizing compiler is to remove unnecessary steps from method execution. When JIT-compiling instructions, minimizing this kind of overhead is an effective way to speed up Ruby applications.
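
A simple example of removing unnecessary steps is a peephole pass that deletes a side-effect-free push immediately followed by a `pop`, since the pushed value is never used. The Python sketch below assumes a hypothetical toy instruction set; `opt_mult` is deliberately not treated as pure, because in Ruby `*` is a method call that could be redefined with side effects.

```python
# Instructions that only push a value and have no side effects (hypothetical toy set).
PURE_PUSHES = {"putobject", "putnil", "getlocal", "dup"}


def remove_dead_pushes(iseq):
    """Drop push/pop pairs whose pushed value is never used.

    Assumes straight-line code: with jumps, a pop could be a branch target,
    and removing it would be unsafe.
    """
    out = []
    for insn in iseq:
        if insn[0] == "pop" and out and out[-1][0] in PURE_PUSHES:
            out.pop()  # the push and the pop cancel out
        else:
            out.append(insn)
    return out


# Roughly `a; 1; a * 2`, where the first two expressions' values are discarded.
iseq = [
    ("getlocal", 0), ("pop", None),
    ("putobject", 1), ("pop", None),
    ("getlocal", 0), ("putobject", 2), ("opt_mult", None), ("leave", None),
]
print(remove_dead_pushes(iseq))
# → [('getlocal', 0), ('putobject', 2), ('opt_mult', None), ('leave', None)]
```

Because `out` behaves like a stack, nested cases such as two pushes followed by two pops are also removed in a single pass.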

00:10:22.410

Setting up the dynamic library (dylib) loading and the compilation environment can also be cumbersome, because the generated code has to be compiled before it can be executed. All of the optimizations above aim to improve the Ruby environment's responsiveness and speed, particularly when executing loops and conditional statements, since these are the areas most significantly affected.
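
Loops and conditionals are where translating to C pays off most, because bytecode branches can become plain `goto` statements. In MJIT, the generated C is built by an external C compiler into a shared library that is loaded at run time; the Python sketch below covers only the translation step. The function `translate_to_c`, the `branchunless`/`jump` encoding with absolute instruction indices, and the assumption that the stack is empty at every jump target are all hypothetical simplifications.

```python
C_BINOPS = {"opt_plus": "+", "opt_minus": "-", "opt_mult": "*", "opt_lt": "<"}


def translate_to_c(name, iseq, arity, num_locals):
    """Emit C for toy stack bytecode with branches (hypothetical, simplified).

    Jump targets become labels and branches become goto. Stack slots are tracked
    statically, assuming the stack is empty at every jump target, which holds
    for simple while loops like the one below.
    """
    targets = {operand for op, operand in iseq if op in ("jump", "branchunless")}
    body, depth, max_depth = [], 0, 0
    for pc, (op, operand) in enumerate(iseq):
        if pc in targets:
            body.append(f"L{pc}:")
        if op == "getlocal":
            body.append(f"    s{depth} = l{operand};")
            depth += 1
        elif op == "setlocal":
            depth -= 1
            body.append(f"    l{operand} = s{depth};")
        elif op == "putobject":
            body.append(f"    s{depth} = {int(operand)}L;")
            depth += 1
        elif op in C_BINOPS:
            depth -= 1
            body.append(f"    s{depth - 1} = s{depth - 1} {C_BINOPS[op]} s{depth};")
        elif op == "branchunless":
            depth -= 1
            body.append(f"    if (!s{depth}) goto L{operand};")
        elif op == "jump":
            body.append(f"    goto L{operand};")
        elif op == "leave":
            body.append(f"    return s{depth - 1};")
        else:
            raise ValueError(f"unsupported instruction: {op}")
        max_depth = max(max_depth, depth)
    params = ", ".join(f"long l{i}" for i in range(arity))
    header = [f"long {name}({params}) {{"]
    if num_locals > arity:
        header.append("    long " + ", ".join(f"l{i} = 0" for i in range(arity, num_locals)) + ";")
    if max_depth > 0:
        header.append("    long " + ", ".join(f"s{i}" for i in range(max_depth)) + ";")
    return "\n".join(header + body + ["}"])


# Roughly: def sum_below(n); i = 0; s = 0; while i < n; s += i; i += 1; end; s; end
# Locals: l0 = n, l1 = i, l2 = s. Comments give the instruction indices.
SUM_ISEQ = [
    ("putobject", 0), ("setlocal", 1),                                       # 0-1: i = 0
    ("putobject", 0), ("setlocal", 2),                                       # 2-3: s = 0
    ("getlocal", 1), ("getlocal", 0), ("opt_lt", None),                      # 4-6: i < n
    ("branchunless", 17),                                                    # 7: leave the loop
    ("getlocal", 2), ("getlocal", 1), ("opt_plus", None), ("setlocal", 2),  # 8-11: s += i
    ("getlocal", 1), ("putobject", 1), ("opt_plus", None), ("setlocal", 1), # 12-15: i += 1
    ("jump", 4),                                                             # 16: loop head
    ("getlocal", 2), ("leave", None),                                        # 17-18: return s
]
print(translate_to_c("sum_below", SUM_ISEQ, arity=1, num_locals=3))
```

The output is a C function with labels `L4` and `L17`, a conditional `goto` for the loop exit, and an unconditional `goto` back to the loop head, so the loop runs without any interpreter dispatch.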

00:11:31.750

For further improvements, future work will focus on better recognition of redundant operations and on enhancing the template system used in JIT compilation. Optimizing how parameters are passed and how methods are invoked, particularly for user-defined extensions, will also be emphasized.

00:12:29.090

The broader challenge remains debugging and maintaining the stability of the Ruby environment: we want JIT optimizations to be implemented seamlessly and accepted by the larger Ruby community without breaking existing functionality.

00:13:14.170

Although developing these refined methods may seem highly complex, automating the process can dramatically improve development speed and efficiency when modifying existing APIs. Furthermore, refining memory management by integrating managed memory systems could significantly improve Ruby's operational reliability.

00:14:06.140

It is crucial to ensure that debugging remains fully supported across all Ruby implementations. As we develop these advanced features, it is essential to preserve Ruby's intuitive user experience and the language's dynamic nature.

00:14:46.540

I am thrilled to be part of this evolving landscape and look forward to engaging discussions about advancing Ruby as a powerful tool in application development. Thank you for your attention. I am eager to answer any questions regarding my work with the JIT compiler and its implications for Ruby.