00:00:08.510

I will go ahead and get started. So, he's actually having a conversation with someone right before this.

00:00:15.139

How many people have been to a lot of Ruby conferences? Let's say more than five? Okay, cool. Out of curiosity, has anyone ever thrown up on one of the live streams? I am asking for a friend. Just kidding! For those of you watching from home, this room is packed—standing room only.

00:00:27.980

Anyhow, thank you so much for coming to my talk, 'The Good Bad Bug: Learning from Failure.' I'm Jess Rudder; that's my Twitter handle. I'm also @compchomp on YouTube if you're into YouTube. This is probably the easiest way to find me, and I love tweeting and being tweeted at, so feel free to do that.

00:00:40.460

Alright, when I was 26, I decided that I wanted to learn how to pilot a plane. My friends and family were pretty skeptical. They said, 'You are afraid of heights, you get motion sick, you don't even know how to drive a car,' and every single one of these things is actually very, very true. But I didn't see how it was relevant—no one was going to clip my wings.

00:01:03.260

Now, we're six months in, and I'm coming into final approach to runway two one in Santa Monica for just a routine training flight. I pull back on the throttle, pull the yoke up so that I'm just gliding in, and I have a more or less beautiful landing. Suddenly, there's a sharp pull on the yoke, and the plane jerks to the side. My instructor, Jeff, is like, 'I've got the plane in my hands!' and I'm like, 'You have the plane!' because I didn't want to be responsible for it at this point. He safely brought the plane to a stop, notified the tower to let them know what happened, and we got out to look at the damage: a flat tire.

00:01:31.400

By this point, my heart rate had finally started to return to normal; the plane was safely stopped, and a flat tire wasn't a big deal. We were just going to have to go back to maintenance to change out the tire. The runway was going to be blocked for less than five minutes, and everything was fine. So, I was actually pretty surprised when Jeff said, 'Hey, I'm going to drop you back off at the classroom, and then I'll come back and fill out the paperwork.' My heart rate jumped straight back up. Whoa, wait! No one got hurt; why do we have to fill out any paperwork? I don't understand.

00:02:09.019

I mean, I wasn't the biggest fan of paperwork. I also was really not a big fan of being in trouble, so I was hoping that I hadn't gotten him in trouble. But Jeff reassured me: 'It's no big deal. You see, it turns out that the FAA collects data on pretty much every event, big or small. Even for just a tiny tire blowout on a runway in a four-seat airplane, they want to get as much data as possible so that they can start working out patterns that can help them implement safer systems. They know that having more data means they can draw better conclusions.' But they also know that people don't like paperwork or getting in trouble.

00:02:50.640

So, as long as no laws were broken, and nobody got hurt, if you filed a report in a timely fashion, you're not going to be in trouble. Now, think about the wildly different approach we have for road accidents. When I was 12 years old, I was riding in the back of my parents' brand-new shiny Saturn. We were literally coming home from the dealership with the first car they'd ever bought, their first new car, and we were sitting at a stoplight when suddenly we lurched forward. We had been rear-ended. My dad got out to check, but we were all okay. He checked on the driver, who was an extremely nervous 16-year-old boy looking at the brand-new car he had just hit.

00:03:30.710

My dad looked it over and said, 'There's just a tiny hole in the bumper from the kid's license plate.' He reminded the kid to try to drive a little bit safer. Then we all piled back into our not-quite-as-shiny car and drove home. Not a single bit of paperwork was filed. No data was gathered. In fact, there isn't a single group in the United States responsible for collecting data about road accidents. It's usually handled by local agencies. If you call the police when you have a flat tire, they're not really happy to hear from you; they're probably going to hang up. There's just no one that wants to do it unless someone was injured or you're filing an insurance claim.

00:04:45.500

These two different approaches have actually led to very different outcomes. I looked up the most recent statistics that were available, which were for 2015, and for every billion miles that people in the U.S. travel by car, 3.1 people die. In contrast, for every billion miles that people in the U.S. travel by plane, there are only 0.05 deaths. If you're like me, decimals, especially when you're talking about tiny fractions of a person, can be difficult to comprehend. It’s a little easier if you hold the miles traveled steady—64 people die traveling in cars for every one person that dies traveling in a plane. There’s something really interesting hiding in that data.
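As a quick check of that comparison, here is a minimal Ruby sketch that holds the miles traveled steady and divides one rate by the other. The two rates are the rounded figures quoted above; the constant and method names are made up for illustration. With these rounded inputs the ratio comes out at about 62, close to the 64 quoted; the gap presumably comes from rounding in the quoted rates, which is an assumption rather than something the talk states.

```ruby
# Deaths per billion miles traveled, as quoted in the talk (2015 figures, rounded).
CAR_DEATHS_PER_BILLION_MILES   = 3.1
PLANE_DEATHS_PER_BILLION_MILES = 0.05

# Hold the distance steady and compare the two rates directly.
def car_to_plane_ratio(car_rate, plane_rate)
  car_rate / plane_rate
end

ratio = car_to_plane_ratio(CAR_DEATHS_PER_BILLION_MILES, PLANE_DEATHS_PER_BILLION_MILES)
puts "About #{ratio.round} car deaths for every plane death over the same distance"
```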

00:05:50.930

We have different approaches that lead to two very different outcomes. The key difference, when I started to dig in, really was how each one approaches dealing with failure. You see, it actually turns out that failure is an incredibly important part of learning. Now, before we go much further, it's probably a good time to make sure that we're all on the same page when we talk about failure. So, what is failure? I think for some of us, it's probably that sinking feeling you have in the pit of your stomach when everything's going wrong, and there's a person with an angry, bright red face screaming at you. You're like, 'Why did I even bother getting out of bed today?' I can definitely relate to that.

00:06:50.920

When I was doing prep work for this talk, I started looking online, and a lot of laypeople were like, 'Oh, this one's easy: failure is the absence of success.' I thought that sounded right, but what's success? Oh no worries; they said, 'It's the absence of failure.' Okay, and that's the absence of success? Now we're in an unending loop, and that doesn't work for you in programming. So I went to the experts. The experts have a very specific definition of failure: failure to them is a deviation from an expected and desired result. Now, that’s pretty good. Honestly, there’s some truth in every single one of these definitions, but this last one is actually measurable and testable.

00:07:40.820

So we're going to stick with this one for the rest of the talk. Now, I couldn't find any definitive data, but I think that programmers experience more results that deviate from their expectations than just about anyone else. You would think programming would be an area where there's a lot of learning from failure. But one of the few places in the programming industry that I could find where people capitalize on failure is actually video game development. One of my favorite examples of this is the game Space Invaders. You guys know the game, right? It's an old arcade game where you control a small cannon and fire at descending rows of aliens. As you defeat the aliens, they speed up, making it harder and harder to shoot them, right?

00:08:26.900

No, that is not what the game was supposed to be. Space Invaders was developed by a guy named Tomohiro Nishikado. This was back when a game could be developed by one person in six weeks instead of 6,000 people in 27 years. His plan was actually to have the aliens remain at a constant, steady pace, no matter how many you killed, until the end of the level, and only when you switched to a new level would the aliens speed up. There was just one problem: he had designed his game for an ideal world. Now, I don't know how good you are at modern history, but 1978 was far from ideal. He had actually placed more characters on the screen than the processor could handle.

00:09:05.720

As a result, the aliens actually chugged along at a slower pace and only reached the intended speed once enough of the sprites had been killed off for the processor to catch up. He had a few ways he could have dealt with this. He could have decided to shelve the project and wait until the hardware caught up, and that might seem silly, but he's an artist, and maybe he has a vision he won't change. He also could have decided, 'Well, the processor can't handle that many aliens, so I’ll change the spec instead. Instead of having 60 on the screen at once, I’ll have 20 on screen at once.' That's reasonable as well.
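The slowdown described above can be sketched as a toy model in Ruby. This is not the game's actual code: the per-alien cost, step size, and intended speed are all hypothetical numbers, chosen only to show how a fixed cost per sprite makes the formation speed up as aliens are removed, until it reaches the speed the designer intended.

```ruby
# Hypothetical numbers; none of these come from the real game or its hardware.
COST_PER_ALIEN_MS = 1.0    # assumed time to update and draw one alien
STEP_PIXELS       = 2.0    # assumed distance the formation moves per full pass
INTENDED_SPEED    = 100.0  # assumed design speed in pixels per second

# One pass over every living alien takes longer when more are alive,
# so the formation completes fewer passes (and fewer steps) per second.
def formation_speed(aliens_alive)
  pass_time_ms = aliens_alive * COST_PER_ALIEN_MS
  achievable   = (1000.0 / pass_time_ms) * STEP_PIXELS
  # The aliens never exceed the intended speed; they only catch up to it.
  [achievable, INTENDED_SPEED].min
end

[60, 30, 20, 10, 1].each do |alive|
  puts format("%2d aliens left -> %.1f pixels/second", alive, formation_speed(alive))
end
```

In this model the speed-up is nobody's design decision; it falls out of the processor having less work to do per pass.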

00:10:08.030

Instead of being rigid and just making a decision right away, he decided to test it out. So he gave it to game testers, and they loved it! They loved it with the broken algorithm. They got so excited as things sped up that they actually started making up stories in their heads about the game. They were like, 'Oh, the aliens are starting to get nervous because I'm killing so many of them, and that’s why they are starting to move faster. But they can't get away from me because I'm even faster than they are.' It ended up staying in the game because that was their favorite thing! Beyond that, it became an entirely new game mechanic called a difficulty curve.

00:11:36.370

Prior to this, games would remain at a constant difficulty until the end of the level, and then it would get harder in the next level. With this, people realized all bets are off; you can make a game as difficult as you want and switch it up anywhere in the middle of the game. That’s pretty amazing! He failed, but by testing it out and seeing how it worked, he actually succeeded more than he could have ever imagined on his own. I don’t know if he did this because he had read the studies on failures and was capitalizing on them, but the thing is, he actually was doing a great job of following exactly what the research says: you shouldn't hide from your failures; you should learn from them.

00:12:56.590

It turns out that failure presents a great learning opportunity, and the reason is that there is more information encoded in failure than there is in success. Let’s think about it. What does success look like? A check mark? A thumbs up? Perhaps a smile from your manager and a job well done? And when you get these, what have you actually learned? Research shows that people and organizations that don’t experience failure become rigid. The reason is that every bit of feedback tells them, 'Don’t change a thing. Just keep doing exactly what you’re doing, and everything will be okay.'

00:14:01.220

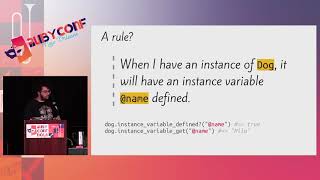

Failure, on the other hand, looks like this: just look at this! Look at how much information is available in this failure. Right away, we know exactly what went wrong. We know which line in the code has an issue, and if you have experience with this particular issue, you're probably going to know exactly what you need to do to fix it. Even if you've never seen this before, you're just a quick search away from pages' worth of information about this particular failure. Now that you have experience with an approach that didn't work and have done some research about what else you could do, it’s probably going to be pretty simple and straightforward to write something that does work.
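The slide itself is not reproduced in this transcript, so as a stand-in, here is a small Ruby sketch of how much a single error carries. The `shout_name` method and its input are hypothetical; the point is only that the exception object reports what went wrong, which method call failed, and where in the code it happened.

```ruby
# Hypothetical buggy method: the :name value is nil, so calling #upcase on it fails.
def shout_name(user)
  user[:name].upcase
end

failure = nil

begin
  shout_name({ name: nil })
rescue NoMethodError => error
  failure = error
  # The failure names its own type, the method that could not be called,
  # and the file and line where the call was made.
  puts "What:   #{error.class}"
  puts "Method: #{error.name}"
  puts "Why:    #{error.message}"
  puts "Where:  #{error.backtrace.first}"
end
```

A passing run, by contrast, would have printed nothing at all.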

00:15:08.030

Video game development actually has a long and honored history of grabbing hold of mistakes and wrestling them into successes. In fact, this concept of exploiting failures to make your programs better is so important that it actually has a name: the good bad bug. Having that space to learn from failure came in really handy for a game developer group in the 90s that was working on a street racing game. The concept for the game was that players would race through city streets while being chased by cops, and if the cops caught up to the drag racers and pulled them over, you lost; you couldn't complete the race.

00:15:56.390

But there was just one problem: they kind of got the algorithm wrong, and the cops were way more aggressive than they had intended. Instead of pulling the drag racers over, the cops would slam into them. The beta testers actually had way more fun trying to get away from the police than they had just kind of racing through the city trying not to get pulled over. As a result, the entire direction of the game was switched up, and the Grand Theft Auto series was born.

00:16:49.250

Now, I want you to think about that for a minute: the core concept of the best-selling video game franchise of all time would have been lost if the developers had panicked when they realized that they got their algorithm wrong and had tried to cover it up and pretend it never happened. Instead, they actually let people test it out and discovered, 'Hey, we're on to something here,' and that something was billions of dollars. Now, any program, really, that gets large enough might have a whole lot of work that goes into it before you ever write a single line of code. There may be hundreds, if not thousands, of hours done by product leads, designers, and business folks before a developer ever gets to it.

00:18:01.560

In game development, this is encapsulated in a document called the Game Design Document, or the GDD. It's supposed to be a living document; you are allowed to make changes to it, but when you're really late in the game, literally and metaphorically, making any kind of change is a big deal. It means that you're going to have to change tech requirement pages, art pages are going to need to be redone, and release dates might have to be pushed back. Budgets might be thrown off. You guys get the picture: you can change it, but it's a big deal.

00:18:50.080

Now, that's the unhappy reality that the Silent Hill development team was facing. They had started building out the game to the GDD specs, but they had one problem: you see, the PlayStation's graphics card could not render all the buildings and textures in the scene, and as a result, when you stepped forward, buildings would suddenly pop into existence, and blank walls would magically have textures that weren't there before. It really distracted people from the game, and that's not good in any game, but if you're building a survival horror game, that's really bad because the atmosphere needs to draw you in; you can't have it popping in and out, it just doesn't work.

00:20:39.990

It would have been easy for everyone to start pointing fingers at one another because they'd all played a part in this failure. Designers put in one or two more buildings just to make it look even better; the tech team decided to make it for the PlayStation instead of the more powerful 3DO or Jaguar that were available at the time; and the business team had determined the release date. There wasn't one single individual that had obviously made a bad call; there were just a bunch of tiny, tiny issues that started a snowball until an entire system failed.

00:21:48.180

However, instead of running from the failure, the team at Konami decided to sidestep it. They found a way to work around the failure; they filled the world with this really dense, eerie fog. It turns out that fog is actually a very lightweight thing for a graphics card to render because the heaviest cost for a graphics card is usually light, and fog is all about, 'Hey, guess what? There's no light!' So now, it obscured the objects in the distance, which meant that as you were walking back and forth, it didn’t matter that a building or a texture in the background popped in.

00:22:09.840

Because you couldn't see it anyway! As an amazing added bonus, the fog was so creepy that once the technology had caught up and they didn't need it to hide things anymore, they kept it in there, because people were like, 'You can't have a creepy Silent Hill game with sunny skies and being able to see 20 miles into the distance.' This became part of the game: a success ripped from the jaws of failure. Now, I’ve given you three examples from the programming world, and what I wanted to do was use them to illustrate what was happening in our more high-stakes examples of aviation and automobile accidents.

00:23:14.370

The aviation system saves so many lives because accidents are treated like lessons that we can learn from. They gather data, aggregate it, and find patterns. If an accident was caused by a pilot being tired, they never just stop there. They look at pilot schedules, staff levels, and flight readiness checklists to determine what contributed to the pilot being tired. In contrast, who do we blame for road accidents? Yeah, the driver! Right? 'She doesn’t know what she’s doing; he’s an idiot; why does he have a license?' It’s always the driver’s fault. What I’m trying to illustrate here is that airplane accidents are treated as failures of entire systems, whereas road accidents are treated as failures of an individual, with all the judgment that comes from being found to be a bad individual.

00:24:54.510

It’s no wonder that people try to cover up those failures instead of acknowledging them and learning from them. I mean, how many times have we been like, 'I definitely stopped at that stop sign,' and even if I didn't stop at it, it’s because it was hidden behind a tree or a shadow or something? Now, we’re not all pilots here, but I think we all have a lot to learn from how they handle failure. If you’re willing to use a system to track and learn from those failures as you write code, you are going to have so much better results. But what should that system look like? In broad strokes, I think there are three important parts.

00:26:40.110

The first one is really important: you have to avoid placing blame. Second, you have to collect data. Third, you have to abstract patterns. So, step one: you need to make sure that you understand that you are not the problem. Now, I’m sure this is easier said than done. We could probably have an entire talk about learning not to beat yourself up. With aviation failures, they never stop at that top level of blame.

00:27:32.470

There was actually this case where a pilot made a critical error by dialing the wrong three-letter city code into his flight computer. On the cockpit recording, investigators could clearly hear the pilot yawn and talk about being excited to finally get a good night’s sleep. They could easily have stopped there and said, 'Well, we figured it out; the pilot was tired, and he made a dumb mistake.' But it was not enough for them to just know that he was tired; they wanted to know why.

00:28:54.270

So they verified that he had had a hotel available to him during his layover, but that wasn't enough—they made sure that he had actually checked into that hotel. That wasn’t enough either; they looked at every time his card had been used to open the door so they could get an accurate picture of how many hours of sleep he actually could have gotten. And even then, they didn’t just say, 'Oh, we’ve shown there was no way he could have gotten more than four total hours of sleep; that was the problem.' They looked at the three-letter readout on the flight computer and considered that if someone was at all tired or distracted, that would be really confusing.

00:30:09.269

That’s when they had the full picture, and they said, 'Now we know what caused this accident.' As you can see from that example, they’re not pretending the pilot played no part; they acknowledged that he was tired, but they always look at it as a systemic failure. So if there’s one thing to remember about a failure in code or anywhere else, it’s this: if the only thing you take away from it is 'I’m dumb; I just don’t know what I’m doing; this probably isn’t for me,' you’re missing out on the best parts of failure.

00:31:23.610

The parts that show you what you're capable of when faced with challenges; it’s a way of revealing to you a better way of doing things. I know that right now it might be hard for some of us to quiet those inner critics, and that’s okay. It’s not something you have to fix overnight, but if you can at least do a good job of ignoring them for a while and working with the rest of the system, eventually you're going to find that they start contributing helpful insights instead of just telling you how bad you are at coding.

00:32:57.000

Step two is documenting everything, even the things that might seem small—heck, especially the things that might seem small! I mean remember the story about my flat tire? That flat tire on the runway in Santa Monica—that was not a big deal. But the FAA wanted to know about it because figuring out how many times that happens could start to reveal bigger patterns in places where things snowball into major problems. Catching problems early on and course correcting is going to help you avoid those major meltdowns.

00:33:40.100

But how should we document things? I’m actually a big fan of paper documentation, but as long as you have some sort of record, any kind of documentation you can go back to—whether it's notes on your phone or live tweeting events that you can see later or anything else—you’re going to be alright. The things you should include are details about what you were trying to do, what resources you were using, whether or not you were working with other people, how tired or hungry you were, and what the outcome was. You should really be specific, especially when you’re recording the outcome.
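For anyone who would rather keep that record in code than on paper, here is one possible shape for a log entry, sketched in Ruby. The `FailureLogEntry` struct and its field names are hypothetical; they simply mirror the details listed above (what you were trying to do, resources, collaborators, how tired or hungry you were, and the outcome).

```ruby
# Hypothetical record shape; the field names are illustrative only.
FailureLogEntry = Struct.new(
  :goal,          # what you were trying to do
  :resources,     # docs, tutorials, or tools you were using
  :collaborators, # who you were working with, if anyone
  :energy,        # how tired or hungry you were
  :outcome,       # what actually happened, as specifically as possible
  keyword_init: true
)

entry = FailureLogEntry.new(
  goal: "Submit the rating form and store the result",
  resources: ["framework guides", "a form tutorial"],
  collaborators: [],
  energy: "tired, skipped lunch",
  outcome: "Data was saved, but the form fields showed up in the URL"
)

puts entry.to_h
```

The outcome field is deliberately a concrete observation rather than a verdict, which is what makes it useful to search on later.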

00:34:36.330

If you're trying to get data from your Rails back end out of your alt store into your React components, and it keeps telling you that you can't dispatch in the middle of a dispatch, don’t just write down, 'React is so dumb; I could do all of this with a couple of lines of jQuery, and I don’t know why my boss is torturing me,' because that's not going to help. I know because I’ve tried. Now, the final step, once you have all of this data, is to start abstracting patterns from it. Imagine how powerful that data is as you go through and look for those patterns. When do you do your best work? When do you do your worst work?

00:35:59.140

Instead of vaguely remembering that you struggled the last few times you tried to learn how to manipulate hashes in Ruby, you’ll see that you really only had issues two of the last three times. The difference between the one where you felt good and the other two was that you were well-rested for that one. Maybe you notice that you learn more when you pair, or when you have music playing, or when you've just eaten some amazing pineapple straight from Kona, Hawaii. On the flip side, you might discover that you don't learn well past 9:00 p.m. or that you're more likely to be frustrated with something new if you haven’t snuggled with a puppy for at least 20 minutes prior to opening your computer.
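Abstracting a pattern like the well-rested one can be as simple as grouping the log by one condition and comparing how often each group struggled. The sketch below assumes a hypothetical log of three sessions with made-up values; `struggle_rate_by` is an illustrative helper, not an established tool.

```ruby
# Hypothetical log of three sessions; the values are invented for illustration.
sessions = [
  { topic: "hashes", well_rested: true,  struggled: false },
  { topic: "hashes", well_rested: false, struggled: true  },
  { topic: "hashes", well_rested: false, struggled: true  }
]

# Group sessions by one recorded condition and compute the share of
# sessions in each group where things went badly.
def struggle_rate_by(sessions, condition)
  sessions.group_by { |session| session[condition] }.transform_values do |group|
    group.count { |session| session[:struggled] }.fdiv(group.size)
  end
end

rates = struggle_rate_by(sessions, :well_rested)
puts "Struggle rate when well rested:     #{rates[true]}"
puts "Struggle rate when not well rested: #{rates[false]}"
```

The same helper works for any other condition in the log, such as pairing or time of day, as long as it was written down at the time.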

00:36:57.459

That’s a really good thing to know! It’s a lot easier to identify the parts of the system that do and don’t work for you when you have a paper trail, and you’re also going to have a really nice log of the concepts you’re struggling with. Let’s say you read your documentation from your last epic coding session, and you see, 'I was trying to wire up the form for my Rate This Raccoon app, and it sort of worked. It was weird because the data got where I was sending it, but the form data ended up in the URL, and that was strange.' Cool, now you have a problem to research, and it's not going to be too long before you dig into some form documentation and realize you were using the GET method on that form. GET requests put the form data in the URL; POST requests are the ones that carry it in the request body. Now you're just going to need 20 minutes of puppy cuddle time and you're ready to go fix that form!
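The difference between the two request types can be seen without sending anything over the network. This Ruby sketch uses the standard library's `URI.encode_www_form` to build what each kind of form submission would carry; the endpoint and the field names are hypothetical stand-ins for the raccoon-rating form.

```ruby
require "uri"

# Hypothetical endpoint and field names for the imagined rating form.
ENDPOINT  = "https://example.com/ratings"
FORM_DATA = { "raccoon" => "Rocky", "rating" => "5" }

# GET: the encoded form data becomes the query string, so it is visible in the URL.
def get_request(endpoint, data)
  uri = URI(endpoint)
  uri.query = URI.encode_www_form(data)
  { url: uri.to_s, body: nil }
end

# POST: the URL stays clean and the same encoded data travels in the request body.
def post_request(endpoint, data)
  { url: endpoint, body: URI.encode_www_form(data) }
end

p get_request(ENDPOINT, FORM_DATA)
p post_request(ENDPOINT, FORM_DATA)
```

Note that a POST body is only kept out of the URL; it is not encrypted by the method itself.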

00:38:30.220

Now, throughout this talk, I've mostly been focusing on how individuals can learn from failure, but it’s also incredibly important for teams. There is a famous study that looked at patient outcomes at demographically similar hospitals. The researchers were kind of confused because they found that hospitals with nurse managers that focused on creating a learning culture instead of a culture of blame actually had higher error rates. What made it even stranger was that patient outcomes at those hospitals with higher error rates were actually better.

00:39:56.720

They started digging deeper and found that the nurses in the blame-oriented hospitals were terrified of getting punished, and they would try to cover up their mistakes. Not only did this make it more likely that a patient would be harmed by their mistake, but it also meant that all the underlying issues that contributed to that mistake were never dealt with, and the same mistakes would happen over and over again. It is the same story for our engineering teams: show me a dev team that has a zero-tolerance policy for mistakes, and I will show you a dev team where the engineers spend a good portion of their time covering up mistakes. If you focus on blameless post-mortems and reward experimentation, you are going to have a very different outcome for your team.

00:41:34.360

Like anything else that you try, the process that I'm proposing may not work perfectly for you the first time around. At the risk of going a bit too meta, you just need to figure out what isn’t working and see how you can adjust it. That’s right! You can learn from the failures you run into while learning from your failures. Just keep learning from failures. Just fail! Now, as you get more comfortable gleaning information from these failures, you’re going to find that every bug is actually really a feature.

00:43:02.920

It’s true, as long as you’re able to learn from it, even if you end up deleting every single line of code. Now traditionally, this was actually the ending of my talk, and that’s why there’s this big thank you screen with my contact info, but every time I’ve given this talk, I’ve had people (usually minority women) ask a variation of the same question: 'I have worked for years to be respected in my field; do you think it’s safe for me to fail publicly?'

00:43:45.950

And if I'm honest, the answer for most of them that ask is going to be no. So I want to share a story with you: I learned to read when I was three. Hold your applause, please; it’s not that big of a deal. It doesn't impress people that you can read once you’re 36; it’s only impressive when you’re young. But in the 80s, this was enough to get me labeled as gifted, and I got to be put into gifted tracks in school. My entire childhood, people told me how smart and brilliant I was.

00:45:03.740

Now, that meant that I never had to worry about not knowing something. I mean, I couldn’t be dumb because every adult in my life told me how smart I was, so it didn’t bother me not to know something. I would just ask a question, and that stuck with me all the way up through my coding boot camp—front row, hand constantly in the air! I took that with me to my first coding job: if I didn’t understand something, I felt comfortable asking for clarification. If I thought someone else in the room didn’t understand something but I got the sense that they were embarrassed to ask, it didn’t bother me to be the one that asked, so I’d ask their questions too. And that worked fine!

00:46:47.140

There were some weird things, like one time in a one-on-one with my manager a couple of years in, he said, 'You’re like me; you really have to struggle to learn how to code.' And I thought that was strange because learning to code had never been a struggle for me, with the exception of a very sticky Backbone Marionette implementation. But I think we've all been there! It just came so naturally to me; it was one of those topics that I felt was easy, so his comment seemed kind of strange. But I was like, 'Okay, well, I don’t need to correct his opinion. It’s fine if he thinks I struggled to learn how to code.'

00:48:28.919

The thing is, that was fine when things were good. I got plenty of work; there were plenty of interesting problems to solve. And then my company had layoffs. One day the management team decided that they wanted to cut the engineering team down to about a third of its size, and I did not make the cut. My team was full of really amazing people, and there’s a chance that I wouldn’t have made the cut anyway. But I do know that when you have to go down to a skeleton crew, the people you're more likely to cut are the ones you think struggle and are going to be more of a drain on everyone's resources.

00:49:52.430

It sucks because being able to experiment and fail and learn from that helps you learn faster, and that’s what’s going to skyrocket your career. But not if you can’t get hired because people are like, 'Oh, you’re a failure.' So for those people, I would say project confidence and competence: write the blog post as if you’re the smartest person in the world because you know your stuff. Just make sure you find a place where you can fail safely. Lying about making mistakes is going to keep you from learning.

00:50:40.470

So find your people! If you don’t have any, Code Newbies is a great group. I have zero financial interest, but they are fantastic people you can fail with. If you can’t fail with anyone else, hit me up and be like, 'I failed so hard!' and I’ll be like, 'Oh my gosh, I too have failed so hard!' We can just hug it out! So just don’t let others' biases keep you from learning as much as you possibly can.

00:51:24.270

I am going to step off my soapbox now, and here I want to thank you all so much for coming.

00:51:27.610

If you haven't had enough of me talking code yet, you can check out my YouTube channel: youtube.com/compchomp. I'm also on Twitter at @jessrudder. I think you're supposed to say, 'Don’t @ me,' but I love being @'d, so feel free to @ me!

00:52:08.280

Cool! Does anyone have any questions? Yes, in the blue shirt?

00:52:34.090

Sure! The question is, for people managers, are there suggestions for creating a culture that embraces failure? Yeah, don’t punish people for mistakes. I mean, it sounds trite, but that really is the number one thing.

00:52:43.680

I remember when Amazon Web Services went down sometime in the past year, and it turned out that one developer made a mistake, like a typo, and it took down production, and it took four hours to bring it back up. I remember seeing tweets like, 'Oh man, I would hate to be that dev. He better lose his job; he just cost them millions, if not billions, of dollars!' I thought, 'I really hope none of the people tweeting that are managers or managers of developers.' Because saying those sorts of things, even in jest, is going to make people whose careers you play a huge role in, including people you aren’t even aware of, just terrified of making mistakes.

00:53:43.150

The more that you can model that it’s okay to fail—whether it’s showing an example of, 'Look here, someone made a mistake. How could we have systems where we would make a similar mistake? How would we fix that?'—always putting the focus back on the system that led to the error instead of saying, 'This is a bad developer'—that’s definitely going to help. Now, there are always business people involved with most companies, so it could be that, as a manager, you just have to do the best you can within the system that others are putting on top of you.

00:54:29.350

So yeah, just using your manager skills as a shield for the people underneath you is one of the best things you can do. Any other questions? I thought I saw another hand, but I could have just been imagining it. Yes, you sir! So, the question is about suggestions for ways a team can highlight failures in a positive way, since they can be hard to bring up. I think it was GitLab that had a major outage and then wrote a beautiful post-mortem blog post detailing everything that went wrong. So I think a first step is finding things like that and sharing them, highlighting like, 'Isn’t this amazing? Look at the anatomy of this problem: look how they solved it, look how no one got blamed, but instead they looked for a solution!'

00:56:13.430

So first, find those places that other people have modeled that behavior and find people on your team that feel comfortable modeling that as well. Probably your junior female developer who is four days into her career isn’t going to be the person that you should burden with, 'Hey, why don’t you write a blog post about how you brought down production, but it was really okay?' Sure; she might be comfortable with it, but she might go home and curl up in the fetal position in the kitchen and cry, so you know, maybe find the people who are more confident in sharing their experiences.

00:57:00.110

Definitely don't put pressure on any one individual developer because they may have their reasons for not wanting to be the person that says, 'Yeah, I make mistakes,' even though every developer on every team has those stories. Give them a big hug! Okay, no, sorry! Definitely don’t do that, definitely not without consent! So, the question was, as a people manager, what’s one of the most comforting things you can do when someone makes a mistake? I once made a mistake that cost my company $30,000. I had an account that was owned by the company whose account we were taking over.

00:57:54.540

The client and I were supposed to run theirs while I was setting up ours, and then when ours went live, I was supposed to turn theirs off. I forgot to turn theirs off, and it ran for a month, spending their full budget in our account and their full budget in their account. When I discovered this, I freaked out because that was about what I was making in a year at the time for the company, and now I had tossed them my entire salary. I thought, 'I’ve definitely lost my job; I’m probably going to have this huge bill I’ll never be able to pay back!' So, I was in tears and went to probably one of the best humans on earth, my CTO at the time, Dora Patel.

00:58:43.820

I was like, 'Dora, this is what I’ve done, and I’m so sorry!' He looked at me and said, 'It's okay!' That was, first of all, the most comforting thing! He didn’t freak out. He said, 'It's okay! You know what? I’ve made mistakes that have cost this company way more than that. This is fixable!' Just having that kind of acknowledgment, first of all, that it was okay was huge for me because I was thinking, 'I’m going to have to get 10 jobs to pay this back and I don’t have any job!' Just hearing, 'It’s okay'—it was just huge, you know? Because when you make a mistake like that, you feel like you’re the only one that’s ever done it and just, yeah, having acknowledgment that life was not over helps a lot.

01:00:00.630

I think that’s all the time we have now, but I’m giving away GitHub stickers for anyone interested. I also have compchomp stickers for people who are fans of a YouTube channel you’ve never heard of. And I’m available for questions or anything else afterwards on Twitter or in person! Thank you all!