00:00:17.189

Hello everyone, I'm Simon Kröger from SponsorPay, and this is my first time in Chicago. I'm excited to be here. The city has been fascinating so far, much like my first visit to Athens, which I found just as captivating.

00:00:38.110

This year's topic brings us back to layered caches. We often talk about Unicorn and raw performance, but what we really want to discuss is how to handle a high volume of requests, particularly in a Rails environment.

00:01:05.450

We serve a lot of requests from several Unicorn servers running on a content-cache architecture with MySQL. The challenge is to handle all of these requests while keeping performance optimal. Sometimes a single user sends enough requests to overburden the system, which is why performance optimization becomes critical.

00:01:50.750

To offer users the best experience, we must think about caching strategies. We want to avoid sending the same content to all users without consideration of their individual needs. There’s a careful balance we need to strike between performance and personalized content delivery.

00:02:58.210

The presentation will cover how we optimize our databases. We aim to reduce overhead significantly and keep our systems efficient. Our goal is to handle requests quickly while minimizing unnecessary load on resources. We analyze request patterns and adjust accordingly.

00:04:40.470

In practical terms, bottlenecks can emerge unexpectedly, especially under heavy loads. A common issue we face involves the mismanagement of requests leading to performance degradation. It's essential to plan for surges in traffic that could potentially overwhelm our database.
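One way to keep a traffic surge from overwhelming the database is to let only one request rebuild a missing cache entry while the others wait for it. The sketch below is an illustration under assumptions, not the setup described in the talk: `SingleFlightCache`, the key `"offers/top"`, and the simulated query are all hypothetical, and it only coordinates threads inside a single Ruby process.

```ruby
# Sketch only: coalesces concurrent cache misses for one key into a single load.
# Per-key locks are never cleaned up here, which a real implementation would need.
class SingleFlightCache
  def initialize
    @store = {}
    @store_lock = Mutex.new # guards @store and @key_locks
    @key_locks = {}
  end

  def fetch(key)
    key_lock = @store_lock.synchronize do
      return @store[key] if @store.key?(key) # fast path: already cached
      @key_locks[key] ||= Mutex.new
    end

    key_lock.synchronize do
      # Another thread may have filled the entry while we waited for the lock.
      @store_lock.synchronize do
        return @store[key] if @store.key?(key)
      end
      value = yield # only one thread per key reaches the expensive load
      @store_lock.synchronize { @store[key] = value }
      value
    end
  end
end

db_queries = 0 # counts how often the (simulated) database is hit
cache = SingleFlightCache.new

threads = 10.times.map do
  Thread.new do
    cache.fetch("offers/top") do
      db_queries += 1
      sleep 0.05 # stand-in for a slow query
      :top_offers
    end
  end
end
results = threads.map(&:value)
```

Ten concurrent requests for the same key produce a single simulated query; the other nine threads block briefly and then read the cached value.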

00:05:53.120

When we encounter such issues, one strategy involves implementing caching intelligently. Our dashboards allow us to track user interactions and measure the impact of traffic on system performance. The aim is to optimize cache utilization effectively while ensuring minimal latency.

00:07:30.840

Moreover, leveraging similarities among user requests can significantly enhance our caching strategy. By storing commonly requested data, we can avoid frequent trips to the database, thereby improving the overall efficiency of our requests.
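A minimal read-through cache shows the idea of storing commonly requested data so that repeated requests skip the database. The class `ReadThroughCache`, the key `"offer/42"`, and the lambda `load_offer` are hypothetical names chosen for illustration. The `fetch`-with-a-block shape is the same one `Rails.cache.fetch` uses, but this sketch uses a plain Hash so it runs without Rails.

```ruby
# Sketch only: the block passed to fetch runs on a cache miss, never on a hit.
class ReadThroughCache
  attr_reader :hits, :misses

  def initialize
    @store = {}
    @hits = 0
    @misses = 0
  end

  # Returns the cached value for key, or computes it with the block and stores it.
  def fetch(key)
    if @store.key?(key)
      @hits += 1
      return @store[key]
    end
    @misses += 1
    @store[key] = yield
  end
end

db_calls = [] # records each simulated database query
# Hypothetical stand-in for an expensive database lookup.
load_offer = lambda do |id|
  db_calls << id
  { id: id, title: "Offer #{id}" }
end

cache = ReadThroughCache.new
offers = 3.times.map { cache.fetch("offer/42") { load_offer.call(42) } }
```

Three requests for the same offer result in one simulated database call and two cache hits.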

00:08:15.470

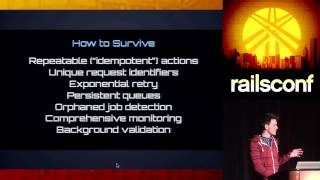

But we must also address strategies for cache expiry and invalidation. The goal is to give users the most relevant, up-to-date information without putting undue strain on our servers. We prioritize fine-tuning this aspect to enhance the robustness of our administrative processes.
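Expiry and invalidation are two different ways for an entry to leave the cache: expiry removes it once a time-to-live has passed, and invalidation removes it explicitly when the underlying data changes. The sketch below illustrates both under assumptions. `ExpiringCache`, the key `"user/1/offers"`, and the 60-second TTL are hypothetical, and the clock is injected so the behavior can be shown without waiting.

```ruby
# Sketch only: entries leave the cache by TTL expiry or by explicit invalidation.
class ExpiringCache
  Entry = Struct.new(:value, :expires_at)

  # clock is any callable returning the current time in seconds.
  def initialize(clock: -> { Process.clock_gettime(Process::CLOCK_MONOTONIC) })
    @store = {}
    @clock = clock
  end

  def write(key, value, ttl:)
    @store[key] = Entry.new(value, @clock.call + ttl)
    value
  end

  # Returns nil for unknown or expired keys; expired entries are dropped on read.
  def read(key)
    entry = @store[key]
    return nil if entry.nil?

    if @clock.call >= entry.expires_at
      @store.delete(key)
      return nil
    end
    entry.value
  end

  # Explicit invalidation, e.g. after the underlying record was updated.
  def invalidate(key)
    @store.delete(key)
    nil
  end
end

now = 0.0 # fake clock controlled by the example
cache = ExpiringCache.new(clock: -> { now })

cache.write("user/1/offers", %w[a b], ttl: 60)
fresh = cache.read("user/1/offers")            # within the TTL

now = 61.0
expired = cache.read("user/1/offers")          # TTL has passed

cache.write("user/1/offers", %w[c], ttl: 60)
cache.invalidate("user/1/offers")
after_invalidate = cache.read("user/1/offers") # removed before the TTL ran out
```

A short TTL bounds how stale an entry can get, while explicit invalidation keeps data current immediately at the cost of having to know every place that changes it.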

00:09:41.060

Our approach must consider managing cache states and ensuring that unwanted duplicates are minimized. We also discuss ways to mitigate performance penalties associated with high traffic periods.

00:10:05.360

The presentation outlines how various techniques can be adapted to better handle our specific needs within the Ruby on Rails framework. We've improved upon traditional caching mechanisms, ensuring that our frameworks are resilient and can scale appropriately.

00:11:04.220

Caching is pivotal, but strategies must be context-sensitive. We regularly reassess how effective our methods are and whether they help maintain service quality under load. Through this iterative process, we can adapt our methods to fit evolving requirements and situations.

00:12:38.230

In practice, this leads to us employing a variety of caching mechanisms dynamically, dependent on user activity and system load. Understanding user interaction can guide how we prioritize data retention within our caches.
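The layered caches mentioned at the start of the talk can be pictured as an ordered list of stores that are checked from fastest to slowest. The sketch below is one possible shape, not the implementation from the talk: `LayeredCache` is a hypothetical class, and two plain Hashes stand in for an in-process cache and a shared cache.

```ruby
# Sketch only: looks a key up layer by layer, fastest first.
# Each layer just needs key?, [] and []=, so plain Hashes work for the demo.
class LayeredCache
  def initialize(layers)
    @layers = layers
  end

  def fetch(key)
    @layers.each_with_index do |layer, index|
      next unless layer.key?(key)

      value = layer[key]
      # Copy the value into every faster layer so the next lookup stops earlier.
      @layers.first(index).each { |faster| faster[key] = value }
      return value
    end

    # Missed every layer: load from the source and fill all layers.
    value = yield
    @layers.each { |layer| layer[key] = value }
    value
  end
end

local  = {}                                        # stand-in for an in-process cache
shared = { "offer/7" => "cached in shared layer" } # stand-in for a shared cache
source_calls = 0

cache = LayeredCache.new([local, shared])
from_shared = cache.fetch("offer/7") { source_calls += 1; "from database" }
from_source = cache.fetch("offer/8") { source_calls += 1; "from database" }
```

The first lookup is answered by the shared layer and copied into the local one; only the second lookup, which misses both layers, reaches the simulated database.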

00:14:20.090

Looking at the biggest challenges ahead, we need to ensure transparent communication of our processes across multiple running instances. Being on the same page is crucial for keeping performance intact when data flows intensify.

00:15:54.440

As we proceed, we aim to build a cohesive framework where cache interactions can happen effectively without causing unnecessary delays. Focusing on correct user flows allows us to maintain high-speed data processing and enhanced user satisfaction.

00:17:23.889

To achieve our goals, continual reevaluation of methods and tools is paramount. This includes scrapping outdated processes and implementing modern techniques to ensure longevity and relevance within our performance landscape.

00:18:55.310

Thank you all for your attention. If you have any questions about my talk or want to discuss performance strategies further, feel free to reach out. I look forward to working with you as we push the boundaries of what's possible in Rails performance optimization.