00:00:11.960

My name is Michael May. In this talk, we'll cover a lot of things. Hopefully, it won't be too fast, but I hope you'll learn something.

00:00:22.840

The first topic we'll discuss is some cool aspects about the internet. We'll cover routing, and provide some background on TCP, DNS, and the essential elements that make the internet work, which we as Rails developers might not touch every day. However, I think it's valuable to know about these foundational things.

00:00:41.200

Next, we'll talk about HTTP and what it provides for caching, including how to handle things like cookies. Finally, the bulk of the talk will revolve around accelerating applications. I'll discuss Varnish Cache extensively and share strategies for caching dynamic content.

00:01:06.280

Before we begin, I want to point out that I work at a company called Fastly, a content delivery network that focuses on real-time delivery. We emphasize immediate speed, whether that involves log streaming or instant purging in just 150 milliseconds. We're actually built on a fork of Varnish Cache.

00:01:29.600

Does anyone in the room know about Varnish Cache? Have you used it or heard of it? Cool! What about VCL, the Varnish Configuration Language?

00:01:43.240

If you're interested, please come find me afterward, and let's chat. I'd like to show you a few pictures. At Fastly, we run our own infrastructure, investing heavily in SSDs and RAM in all our cache nodes.

00:02:10.959

Now, I'm going to talk about the internet. If you find that interesting, I recommend checking out a talk by one of my colleagues called 'Scaling Networks Through Software' from SREcon 2015.

00:02:30.120

First, it's essential to understand that the internet consists of many different autonomous systems. An autonomous system is a network controlled by a single entity. For example, Comcast and Level 3 have their own autonomous systems. Fastly operates its own network, too.

00:02:52.200

The critical aspect of autonomous systems is that they are identified by Autonomous System Numbers (ASNs), which serve as unique identifiers for each network. These ASNs are crucial in Internet routing.

00:03:13.480

There's a protocol called BGP (Border Gateway Protocol) used to find the best path across the internet from point A to point B. When you connect a new router to various ISPs, it pulls all the internet routes from those ISPs and analyzes them.

00:03:36.599

It identifies the shortest AS path, which is simply the path that traverses the fewest autonomous systems. Once the path is established, the next hop is stored in a routing table. This process is vital due to peering agreements.
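
As a rough sketch of that selection step, here is a toy model in Python. It is not real BGP, which applies several other tie-breakers and policies before and after AS path length. The `Route` type, the ASNs, and the addresses are all made up for illustration (they come from the ranges reserved for documentation).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    prefix: str      # destination network, e.g. "203.0.113.0/24"
    next_hop: str    # neighbor router that advertised the route
    as_path: tuple   # ASNs the route traverses, nearest first


def build_routing_table(advertisements):
    """Keep, per prefix, the next hop of the route with the shortest AS path."""
    best = {}
    for route in advertisements:
        current = best.get(route.prefix)
        if current is None or len(route.as_path) < len(current.as_path):
            best[route.prefix] = route
    return {prefix: route.next_hop for prefix, route in best.items()}


# Two hypothetical ISPs advertise the same prefix with different AS paths.
advertisements = [
    Route("203.0.113.0/24", "192.0.2.1", (64496, 64499, 64510)),
    Route("203.0.113.0/24", "198.51.100.1", (64497, 64510)),
]
table = build_routing_table(advertisements)
print(table)
```

The second advertisement wins because it crosses two autonomous systems instead of three, so its next hop is what ends up in the table.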

00:04:01.080

Peering occurs when two autonomous systems exchange routing information, and these agreements define the amount of traffic that can be sent through their networks and their costs. These exchanges typically happen at physical points known as internet exchanges.

00:04:22.400

Some significant internet exchanges can handle several terabits per second, with Amsterdam ranking among the largest. There's also a group called NANOG, which gathers network operators from North America to form peering agreements.

00:04:46.320

It's noteworthy that while people may say that no one controls the internet, NANOG plays a significant role behind the scenes.

00:05:27.960

If you want to check a routing table on a Mac or Linux, you can do so with commands like 'netstat -rn' or 'route'. That's a basic overview of internet routing.

00:05:52.400

Now, let's discuss TCP. To establish a new TCP connection, there's a process known as the three-way handshake, which exchanges three messages (SYN, SYN-ACK, ACK) between the client and server, so a full round trip passes before any application data can be sent. It's essential to note that HTTP operates on top of TCP.

00:06:10.360

If we have to initialize a new connection every time we send data to a client, it will slow down the process, because the handshake adds a round trip of latency to every request.

00:06:40.720

Another important concept is the TCP window size, which controls how much data the server can send to the client before waiting for an acknowledgment. TCP's 'slow start' algorithm begins with a small window and increases it as acknowledgments arrive. An optimization that your CDN might implement is tuning that starting window so more data goes out in the first few round trips.
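
To get a feel for why the starting window matters, here is a simplified Python model of window growth. It assumes the window doubles every round trip and ignores packet loss, the receiver's window, and the slow start threshold. The function name, the 1460-byte segment size, and the initial window values are assumptions chosen for illustration.

```python
def round_trips_to_send(total_bytes, mss=1460, initial_window_segments=10):
    """Count round trips needed to send total_bytes if the window doubles each trip."""
    segments_left = -(-total_bytes // mss)   # ceiling division
    window = initial_window_segments
    round_trips = 0
    while segments_left > 0:
        segments_left -= window   # send one full window this round trip
        window *= 2               # idealized slow start growth
        round_trips += 1
    return round_trips


for initial in (4, 10):
    trips = round_trips_to_send(100_000, initial_window_segments=initial)
    print(f"initial window {initial}: {trips} round trips for 100 KB")
```

In this model a larger starting window saves whole round trips on small responses, which is where long-distance latency hurts the most.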

00:07:04.479

Now, let's turn to DNS, which we use daily to convert human-readable names into IP addresses. DNS records carry a Time to Live (TTL) that defines how long they can be cached.

00:07:34.640

Has anyone in the room used the 'dig' command? It's a handy tool for querying DNS records. For instance, if you execute a 'dig' on fast.com, it can return multiple IP addresses. Some of these might be unicast, and some might be for redundancy.

00:08:03.960

Additionally, short TTLs allow us to update DNS entries frequently, ensuring that we can always direct traffic to the closest point of presence.

00:08:40.120

Now, why do we care about all of this? Because it takes time to move data around the world. For example, if we have clients in Sydney, which is roughly 10,000 miles away from Atlanta, a round trip would ideally take a little over 100 milliseconds at the speed of light in a vacuum.
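
The physical lower bound is easy to work out yourself. This Python sketch uses the 10,000-mile figure from above; the fiber factor of 2/3 is my own assumption about how much slower light travels in glass, and real routes are longer than the straight-line distance, so treat both results as best cases.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458   # in a vacuum
KM_PER_MILE = 1.609344
FIBER_FACTOR = 2 / 3                # assumed: light in fiber moves at about 2/3 c


def round_trip_ms(distance_miles, speed_fraction=1.0):
    """Best-case round-trip time in milliseconds over a straight-line path."""
    distance_km = distance_miles * KM_PER_MILE
    one_way_seconds = distance_km / (SPEED_OF_LIGHT_KM_S * speed_fraction)
    return 2 * one_way_seconds * 1000


vacuum_rtt = round_trip_ms(10_000)
fiber_rtt = round_trip_ms(10_000, FIBER_FACTOR)
print(f"vacuum: {vacuum_rtt:.0f} ms, fiber: {fiber_rtt:.0f} ms")
```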

00:09:16.080

However, in reality, it often takes longer: roughly 250 to 350 milliseconds for a round trip. If we need to open a new TCP connection for every piece of data sent to clients in Sydney, that adds significant latency.

00:09:59.960

A side note: I also performed this analysis using airplane Wi-Fi, where latency reached around 700 to 800 milliseconds, which is something we've come to expect from inflight connections.

00:10:27.960

This brings us to techniques we can use to accelerate performance. For instance, instead of waiting for a longer round trip, we can employ techniques to decrease response times.

00:11:08.560

In Atlanta, the latency could drop to only 20 to 30 milliseconds, which raises the question: have we beaten the speed of light? The answer is no; we manage this through caching.

00:11:37.520

Now, with the topic of HTTP, we will explore some caching directives provided in HTTP headers. The Cache-Control header is crucial, and I just want to highlight a couple of directives that may be less familiar.

00:12:05.840

For example, there is 's-maxage,' which behaves like 'max-age' but only applies to shared caches like your CDN. This opens up interesting possibilities for setting different caching policies for browsers and edge servers.
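
Here is a minimal Python sketch of how a cache might pick its TTL from a Cache-Control header. It only handles the handful of directives shown and is not a complete implementation of HTTP caching; the function names are mine.

```python
def parse_cache_control(header):
    """Split a Cache-Control header into a {directive: value-or-None} dict."""
    directives = {}
    for part in header.split(","):
        part = part.strip()
        if not part:
            continue
        name, _, value = part.partition("=")
        directives[name.strip().lower()] = value.strip().strip('"') or None
    return directives


def freshness_lifetime(header, shared_cache):
    """Seconds a response may be cached by a shared cache (CDN) or a browser."""
    directives = parse_cache_control(header)
    if "no-store" in directives:
        return 0
    if shared_cache and "private" in directives:
        return 0
    if shared_cache and directives.get("s-maxage") is not None:
        return int(directives["s-maxage"])   # overrides max-age for shared caches
    if directives.get("max-age") is not None:
        return int(directives["max-age"])
    return 0


header = "max-age=60, s-maxage=3600"
print("browser:", freshness_lifetime(header, shared_cache=False))
print("cdn:", freshness_lifetime(header, shared_cache=True))
```

With that header the browser keeps the response for a minute while the edge keeps it for an hour, which works well when you can purge the edge but not the browser.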

00:12:32.520

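Newer directives like 'stale-while-revalidate' and 'stale-if-error' allow the cache to serve stale content in specific situations. With 'stale-while-revalidate', the cache serves a stale copy while fetching a fresh one in the background. With 'stale-if-error', the cache serves a stale copy when the origin returns an error. Both improve user experience.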
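
The decision a cache makes with these two directives can be sketched in Python like this. It is a simplified model under my own assumptions: the function name and the returned labels are made up, and all values are in seconds.

```python
def cache_decision(age, max_age, stale_while_revalidate=0,
                   stale_if_error=0, origin_is_down=False):
    """Decide what to do with a cached object that is `age` seconds old."""
    if age < max_age:
        return "fresh"
    staleness = age - max_age
    if origin_is_down:
        # stale-if-error: keep serving the old copy while the origin is failing.
        return "stale-error" if staleness <= stale_if_error else "error"
    if staleness <= stale_while_revalidate:
        # stale-while-revalidate: answer now, refresh in the background.
        return "stale-revalidate"
    return "fetch"


print(cache_decision(age=70, max_age=60, stale_while_revalidate=30))
print(cache_decision(age=100, max_age=60, stale_if_error=86400, origin_is_down=True))
```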

00:13:05.480

The 'Vary' header can also impact caching. A good practice is to make the Vary header depend on elements like 'Accept-Encoding,' which has fewer variations and thus minimizes cache fragmentation.

00:13:36.839

However, a poor use of the Vary header might be to involve 'User-Agent' due to the vast number of permutations that could arise, which would eliminate the caching benefits.
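
To see the fragmentation, here is a Python sketch of how a cache might build its lookup key from the Vary header. Real caches differ in the details (Varnish, for example, stores variants under one hash), so take the key format and the `cache_key` name as assumptions.

```python
def cache_key(method, url, request_headers, vary):
    """Build a lookup key from the request plus every header named in Vary."""
    headers = {name.lower(): value for name, value in request_headers.items()}
    parts = [method.upper(), url]
    varied = sorted(h.strip().lower() for h in vary.split(",") if h.strip())
    for name in varied:
        parts.append(f"{name}={headers.get(name, '')}")
    return "|".join(parts)


# Two hypothetical browsers that both accept gzip.
req_a = {"Accept-Encoding": "gzip", "User-Agent": "BrowserA/1.0"}
req_b = {"Accept-Encoding": "gzip", "User-Agent": "BrowserB/2.0"}

same = cache_key("GET", "/", req_a, "Accept-Encoding") == cache_key("GET", "/", req_b, "Accept-Encoding")
split = cache_key("GET", "/", req_a, "User-Agent") != cache_key("GET", "/", req_b, "User-Agent")
print(same, split)
```

Varying on Accept-Encoding lets both browsers share one cached object, while varying on User-Agent gives every distinct browser string its own copy.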

00:14:01.239

On cookies, the 'Set-Cookie' header is sent by the server, while the 'Cookie' header is sent by the client. In general, proxy caches like CDNs will not cache responses containing a 'Set-Cookie' header, which is an intuitive decision because those responses are usually specific to one user.

00:14:33.480

Now, let's talk a bit about Varnish Cache. Varnish acts as an HTTP reverse proxy cache, which means it sits between users and your server, allowing you to cache responses effectively.

00:14:59.600

Essentially, when deployed in production, you'll typically set up your domain to point to the Varnish cache, ensuring that all requests flow through that cache.

00:15:26.320

To illustrate its operation, consider the output of a curl command to Fastly.

00:15:35.440

You may see a 'Via' header indicating that this request was proxied through Varnish. There are also other headers like 'X-Served-By' and 'X-Cache' that provide insights into cache hits or misses.

00:16:05.639

Headers prefixed with 'x-' are not standard HTTP headers and are custom definitions that reveal metadata resulting from the proxy.

00:16:30.200

When using 'Connection: keep-alive', the connection remains open for subsequent requests, avoiding a repeat of the expensive TCP three-way handshake.

00:16:58.280

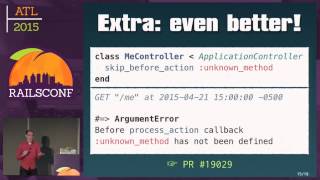

Varnish comes with a domain-specific language called VCL, allowing you to interact with the traffic flowing through your cache. This VCL is translated into C and then compiled into a shared object that Varnish loads, so it adds minimal performance overhead.

00:17:21.200

Varnish uses a state machine to process requests, where specific VCL functions are executed at different stages of this workflow. For example, 'vcl_recv' runs at the beginning, when a request is received, while 'vcl_deliver' runs at the end, just before the response is sent to the client.

00:17:47.120

Consider a scenario where you want to block requests to an admin endpoint unless they include an authorization header. In this instance, VCL allows you to handle the request logic upfront without ever reaching the application server.
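
The logic itself is small. Here it is modeled in Python rather than VCL, purely to show the decision; the '/admin' path prefix, the 403 status, and the function name are assumptions for illustration.

```python
def check_admin_access(path, headers):
    """Return a (status, body) response from the edge, or None to pass the request on."""
    has_authorization = any(
        name.lower() == "authorization" and value
        for name, value in headers.items()
    )
    if path.startswith("/admin") and not has_authorization:
        return (403, "Forbidden")   # answered at the edge, origin never sees it
    return None                     # continue to cache lookup / origin


print(check_admin_access("/admin/users", {}))
print(check_admin_access("/admin/users", {"Authorization": "Bearer example-token"}))
```

In Varnish this check would live in the 'recv' stage, before any cache lookup or backend fetch happens.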

00:18:19.560

Using Varnish for such operations reduces latency significantly because the request does not have to make that long round trip to the application server, saving hundreds of milliseconds.

00:18:56.360

Varnish also supports synthetic responses, which can improve performance in certain scenarios. For instance, if you have a like button on a page, the traditional flow might involve sending a POST request to the origin server.

00:19:29.280

However, with synthetic responses, the JavaScript can send its request to the cache, which replies immediately with a response while queuing a background update to the origin server.

00:19:58.480

That way, the client receives feedback immediately, allowing the user's experience to remain fluid and responsive without having to wait for the round trip to the server.

00:20:24.960

In VCL, you might implement this by checking whether the request URL matches a condition and sending back a synthetic response right away, ensuring a better user experience.
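
Modeled in Python instead of VCL, the flow looks roughly like this. The '/like/' path, the JSON body, and the in-memory queue are assumptions made for the sketch; how the update actually reaches the origin depends on your setup.

```python
from collections import deque

pending_updates = deque()   # stands in for the background queue to the origin


def handle_like(method, path):
    """Answer 'like' POSTs from the edge; return None for everything else."""
    if method == "POST" and path.startswith("/like/"):
        pending_updates.append((method, path))   # origin is updated later
        return (200, '{"status": "ok"}')         # synthetic response, sent at once
    return None


def flush_to_origin(send):
    """Drain queued updates by calling send(method, path) for each one."""
    sent = 0
    while pending_updates:
        send(*pending_updates.popleft())
        sent += 1
    return sent
```

The client gets its answer from `handle_like` immediately, and `flush_to_origin` can run whenever it is convenient, which is exactly the eventual-consistency trade-off.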

00:20:51.880

For cases where consistency is important, synthetic responses may not be suitable, but for applications that can tolerate eventual consistency, they can save many milliseconds on response times.

00:21:25.760

Moving on, let's discuss strategies for caching dynamic content. Firstly, not all dynamic content is cacheable. Things like real-time statistics or sensitive information such as credit card numbers should not be cached.

00:21:54.400

However, content such as JSON APIs can often be cached, even though updates may be unpredictable. One common strategy for managing this unpredictability is the use of short TTLs.

00:22:26.720

Another strategy is Edge Side Includes (ESI), which involves caching a page template at the edge and having the edge fill in the dynamic fragments by fetching them from the origin server as needed.

00:22:54.239

For example, when generating a navigation bar that displays a logged-in user's avatar and name, the caching server can handle the template while fetching user-specific content as needed.
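
A toy version of that assembly step in Python might look like this. It only handles the self-closing `<esi:include src="..."/>` tag, and the fragment URL and `fetch_fragment` callback are hypothetical stand-ins for a request to the origin.

```python
import re

ESI_INCLUDE = re.compile(r'<esi:include\s+src="([^"]+)"\s*/>')


def render_esi(template, fetch_fragment):
    """Replace each esi:include tag with whatever fetch_fragment(src) returns."""
    return ESI_INCLUDE.sub(lambda match: fetch_fragment(match.group(1)), template)


# The template is cacheable for everyone; only the fragment is per-user.
cached_template = '<nav><a href="/">Home</a><esi:include src="/user/badge"/></nav>'
fragments = {"/user/badge": '<img src="/avatars/7.png"> Ada'}

page = render_esi(cached_template, lambda src: fragments[src])
print(page)
```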

00:23:28.160

A further strategy to consider is extracting dynamic content into APIs and fetching it asynchronously from clients. This can expedite the delivery of HTML to users, minimizing the build time at the application layer.

00:24:01.520

When these APIs send responses, we can include an appropriate Cache-Control directive. Combined with purging, this lets us set longer TTLs and reduce load on the origin without serving outdated data. You can associate cache keys with API responses for efficient invalidation.

00:24:38.960

In Rails, for instance, you can set cache keys based on table identifiers and perform purges on updates to keep content fresh.
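
Here is the idea in a small Python sketch: each cached response is stored with one or more keys, and a purge removes everything that shares a key. The `TaggedCache` class and the key naming scheme ('posts', 'posts/1') are assumptions for illustration, not how any particular CDN implements it.

```python
class TaggedCache:
    """In-memory cache whose entries can be purged by key."""

    def __init__(self):
        self._responses = {}     # url -> body
        self._urls_by_key = {}   # key -> set of urls tagged with it

    def store(self, url, body, keys):
        self._responses[url] = body
        for key in keys:
            self._urls_by_key.setdefault(key, set()).add(url)

    def get(self, url):
        return self._responses.get(url)

    def purge(self, key):
        """Remove every response tagged with key; return how many were removed."""
        urls = self._urls_by_key.pop(key, set())
        return sum(1 for url in urls if self._responses.pop(url, None) is not None)


cache = TaggedCache()
cache.store("/posts", "[...]", keys={"posts", "posts/1", "posts/2"})
cache.store("/posts/1", "{...}", keys={"posts/1"})
cache.store("/posts/2", "{...}", keys={"posts/2"})

# Record 1 was updated: purge the record itself and the list that includes it.
removed = cache.purge("posts/1")
print(removed, cache.get("/posts/2"))
```

Because the list response carries the key of every record it contains, updating one record purges the list too, while unrelated records stay cached.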

00:25:13.480

Tagging responses with a header such as 'Surrogate-Key' allows you to group cached content and purge it by tag, but care must be taken to ensure this doesn't make responses uncacheable.

00:25:42.239

Another strategy involves handling cached responses when cookies are present. By suppressing cookie headers during caching lookups, you can cache responses without user-specific data while still restoring cookies to responses as needed.

00:26:11.440

In VCL, you can manage this by checking for cookies at the request stage, stripping them out, and then reinserting them after serving cached content.

00:26:43.680

If the cache misses, you can fetch from the origin while adhering to the same principles of managing cookies. Proper caching practices ensure a fine balance between performance and user experience.

00:27:09.280

In conclusion, tools like 'dig' and 'traceroute' are invaluable for debugging network issues. Adjusting Cache-Control headers allows for precise control over cache behavior.

00:27:39.680

As an advanced topic, consider using VCL to replace some middleware logic at the edge and examine the performance outcomes.

00:28:08.560

Throughout this talk, we've explored various caching strategies. None are wrong, but choosing the one that best fits your context is essential.

00:28:38.640

Ultimately, we've discussed stripping away elements, such as cookies, that would otherwise make a response private or uncacheable, so that more of our content can be cached.

00:29:08.000

We'll now open the floor to questions. If anyone has any queries, feel free to ask!

00:29:32.360

One participant asked whether Varnish could replace existing reverse proxies like Nginx, and I clarified that the two can work together without issue. Another asked how synthetic responses handle errors, and I confirmed that logic can be defined to manage such cases.

00:30:44.200

There were also questions about caching TTLs and ISP practices. I explained that, generally, as long as your caching implementation is sound, many ISPs respect these TTLs. However, it's crucial to note the potential challenges posed by intermediate caches that sit outside your control and may not follow your defined TTL.

00:35:09.360

That wraps up our Q&A, so thank you all for your engagement and participation!